Deploy RAG on Raspberry Pi! Microsoft Phi-3 technical report reveals how the "small and beautiful" model was born

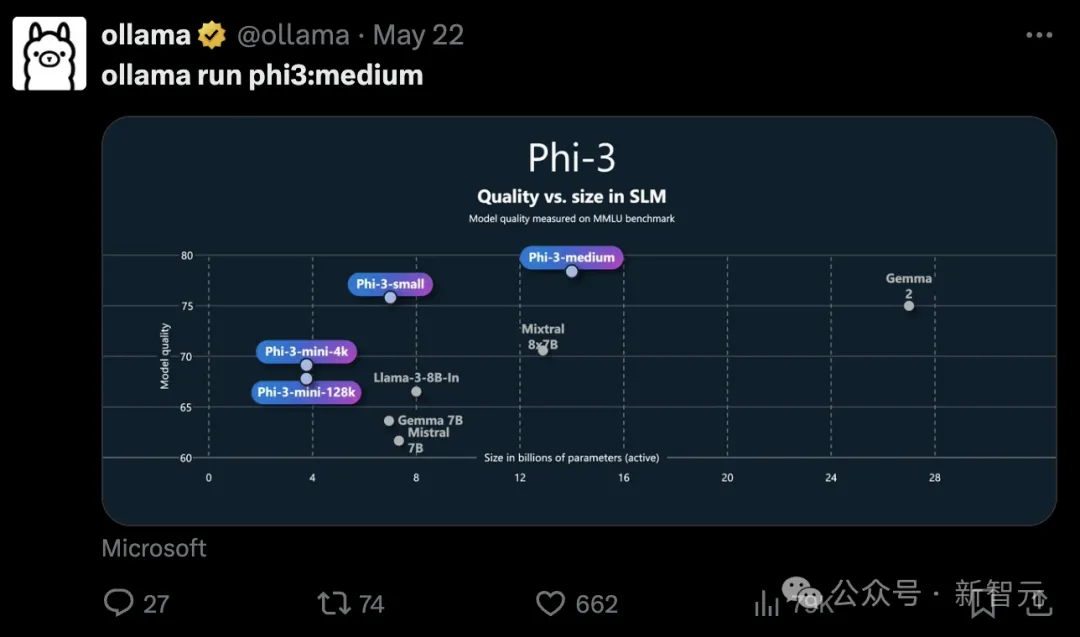

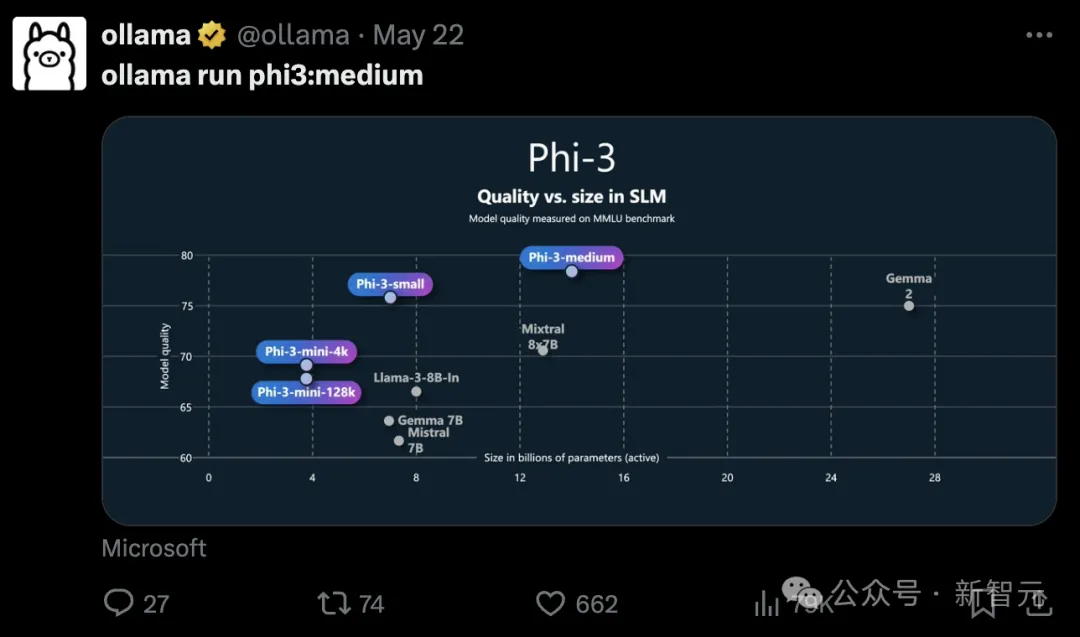

At the Microsoft Build conference this month, Microsoft officially announced the new members of the Phi-3 family: Phi-3-vision, Phi-3-small, and Phi-3-medium.

They join Phi-3-mini, whose technical report was released last month and which impressed many developers as soon as it launched.

For example, Xenova built a browser chat application on top of Phi-3-mini that runs entirely locally using WebGPU. The demo is fast enough to look like a sped-up video.

As a result, Xenova had to issue a clarification: the video was not sped up. The deployed model really is that fast, generating an average of 69.85 tokens per second.

While everyone else chases ever-larger LLMs, Microsoft has never given up on the small language model (SLM) path.

From the launch of Phi-1 in June last year, through Phi-1.5 and Phi-2, to Phi-3 today, Microsoft's small model line has now reached its fourth generation.

This time, the 3.8B-parameter Phi-3-mini, the 7B Phi-3-small, and the 14B Phi-3-medium all show stronger capabilities than larger models.

And because the models are open source, they can be deployed on mobile phones and even on a Raspberry Pi. Microsoft really does love developers.

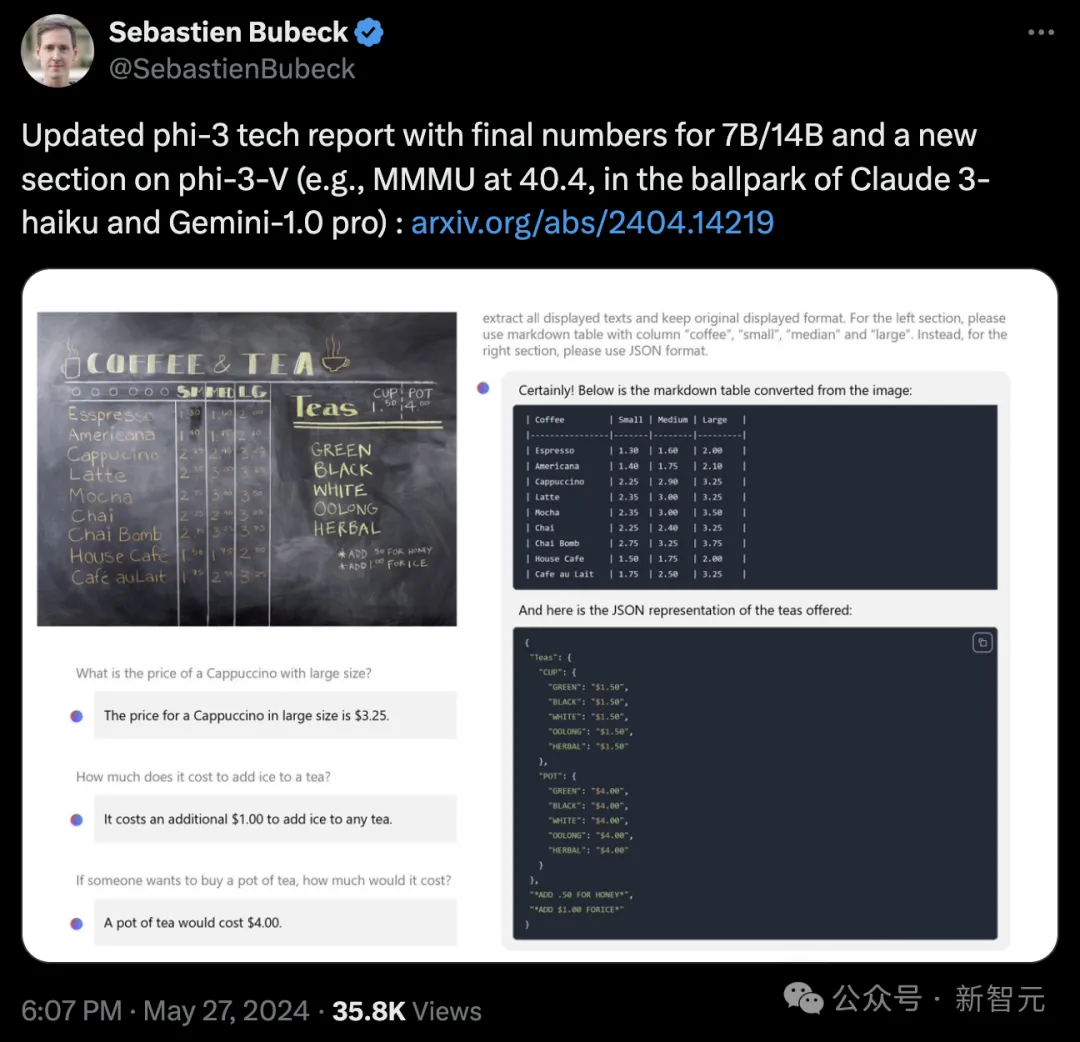

Yesterday, Sebastien Bubeck, vice president of Microsoft Gen AI Research, announced on Twitter that the Phi-3 technical report had been updated with the final scores for the small and medium versions, as well as evaluation results for the vision model.

In the comments, users expressed their enthusiasm for small models like Phi-3 that are both open source and powerful.

Some even described it as "Microsoft's gift to the open source world."

Perhaps Microsoft has made the right bet on SLMs? Real change only happens when powerful models are genuinely accessible and can be built into all kinds of applications.

The Phi-3 results hint at what is possible with such a small memory footprint, and there is now a real opportunity to bring the model to a wide variety of apps.

So, what is the strength of the Phi-3 series? We can find out from the latest technical report.

Phi-3 language model: small and beautiful

Both Phi-3-mini and Phi-3-small use a standard decoder-only Transformer architecture.

To make things as convenient as possible for the open source community, Phi-3-mini uses the same tokenizer as Llama 2 and a similar block structure, which means that software packages developed for Llama 2 can be adapted to it directly.

The small version uses OpenAI's tiktoken tokenizer, which is better suited to multilingual tasks. In addition, to make training and inference efficient, its architecture includes several improvements:

- Use GEGLU activation function instead of GELU

- Use the Maximal Update Parameterization strategy to adjust the hyperparameters on the proxy model to ensure parameter stability during model training

- Using Group Query Attention

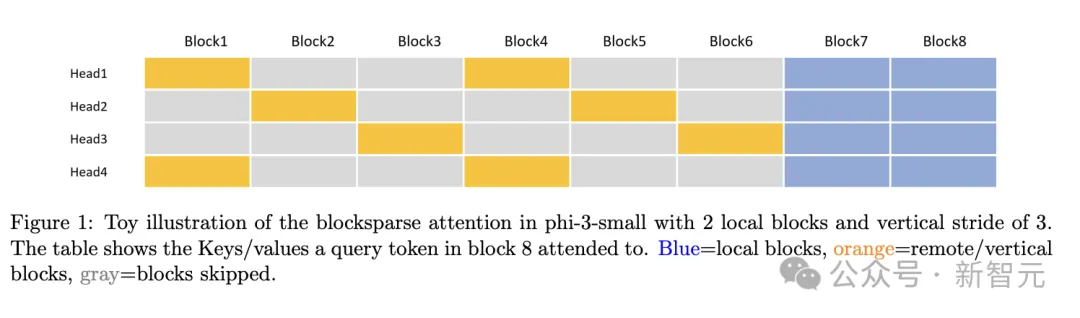

- Designed a blocksparse attention module to handle longer contexts with fewer KV caches

- Implement different kernels for training and inference respectively, truly take advantage of the block sparsity mechanism and achieve model acceleration after deployment
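Two of the items above lend themselves to a small illustration. The sketch below is not Microsoft's implementation: it shows GEGLU in its common form (a GELU-activated gate multiplied elementwise by a linear projection) and estimates how grouped-query attention shrinks the KV cache. The layer count, head count, head size, sequence length, and the ratio of 4 query heads per KV head are all illustrative assumptions, as are the function names.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def geglu(x: list[float],
          w_gate: list[list[float]],
          w_value: list[list[float]]) -> list[float]:
    """GEGLU(x) = GELU(W_gate x) * (W_value x), multiplied elementwise."""
    gate = [gelu(g) for g in matvec(w_gate, x)]
    value = matvec(w_value, x)
    return [g * v for g, v in zip(gate, value)]


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Keys plus values (factor 2) for every layer, KV head, and position."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


# Illustrative dimensions (assumptions, not figures from the article).
LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 128, 8192
QUERIES_PER_KV_HEAD = 4  # assumed grouping ratio

# Standard multi-head attention keeps one KV head per query head.
mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped-query attention shares each KV head across several query heads.
gqa = kv_cache_bytes(LAYERS, QUERY_HEADS // QUERIES_PER_KV_HEAD, HEAD_DIM, SEQ_LEN)

print(f"MHA KV cache: {mha / 2**30:.1f} GiB, GQA KV cache: {gqa / 2**30:.1f} GiB")
```

Under these assumed dimensions and 16-bit cache entries, sharing each KV head across four query heads cuts the cache to a quarter of its size. On memory-constrained devices that is the difference that matters.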

When deploying Phi-3-mini, INT4 quantization can be used, which brings the memory footprint down to about 1.8GB. Quantized and deployed on an iPhone 14 with the A16 chip, the model runs fully offline at a generation speed of 12 tokens/s.

After pre-training, the team also carried out post-training with SFT and DPO on a variety of high-quality data, covering areas such as mathematics, coding, reasoning, dialogue, model identity, and safety. The context length of the mini version was also extended to 128k at this stage.
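The 1.8GB figure can be sanity-checked with simple arithmetic. The sketch below counts only the weights and ignores quantization scales, activations, and the KV cache, so it is a rough lower bound rather than a measured footprint. The helper name is hypothetical.

```python
def weight_memory_bytes(num_params: float, bits_per_weight: int) -> float:
    """Bytes needed to store the weights alone at a given precision."""
    return num_params * bits_per_weight / 8


PARAMS = 3.8e9  # Phi-3-mini parameter count, as stated in the article

for label, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    size = weight_memory_bytes(PARAMS, bits)
    print(f"{label}: {size / 1e9:.2f} GB ({size / 2**30:.2f} GiB)")

# At 12 tokens/s, each token takes roughly this long to generate.
ms_per_token = 1000 / 12
print(f"{ms_per_token:.0f} ms per token")
```

At 4 bits per weight, 3.8B parameters come to 1.9 GB, or about 1.77 GiB, which is consistent with the "about 1.8GB" quoted above.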

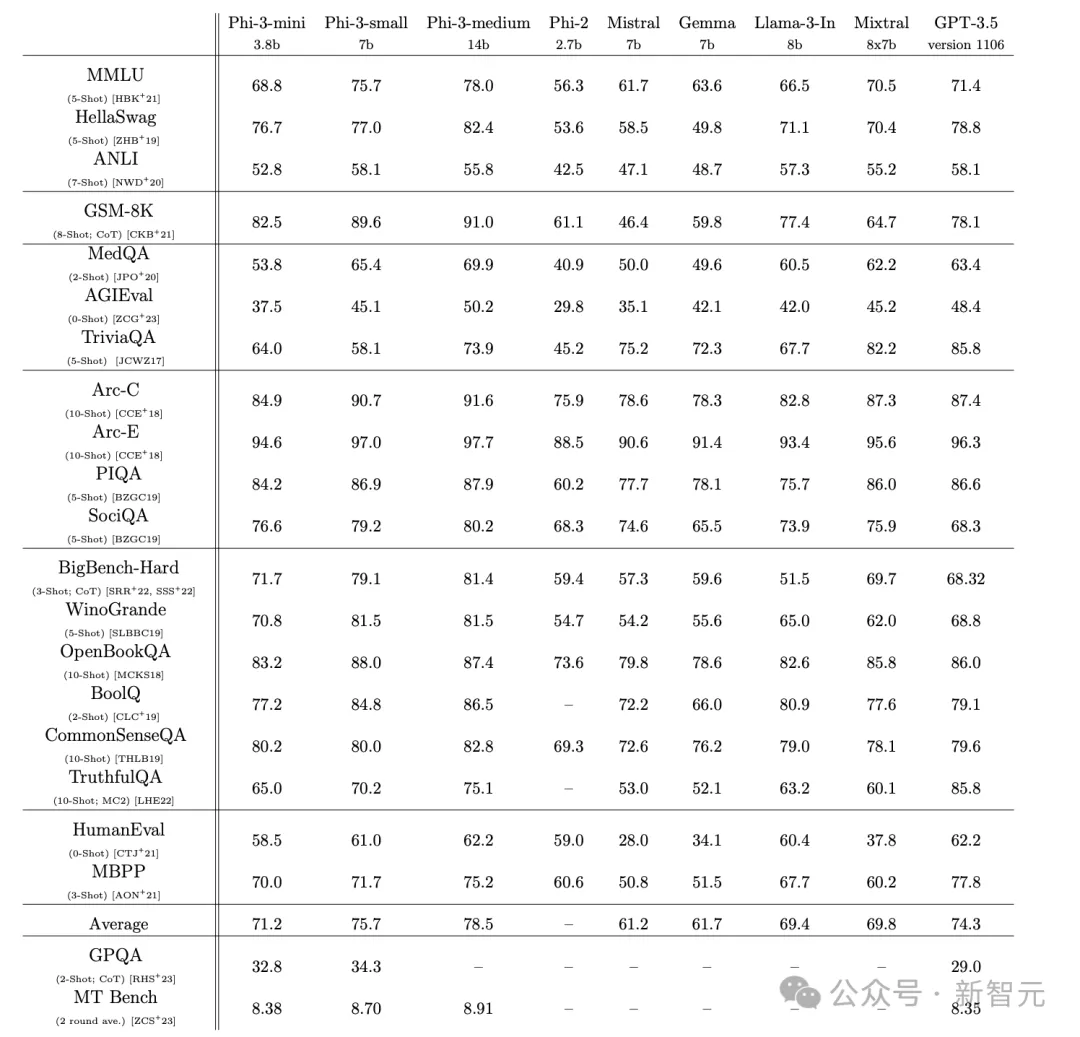

Using few-shot prompts, the technical report compares Phi-3 models of different sizes with Mistral, Gemma, Llama 3, Mixtral (8x7B), GPT-3.5, and other models across 21 benchmarks.

Compared with the 2.7B Phi-2, Phi-3-mini has only 1.1B more parameters, yet it improves by 10 points or more on almost every test, putting it roughly on par with the 8B Llama 3.

Overall, the 7B-parameter small version is comparable to GPT-3.5. Apart from large gaps on TriviaQA and TruthfulQA, its scores on the other tests are about the same, and it even leads by a wide margin on GSM-8K, SociQA, BigBench-Hard, and WinoGrande.

The medium model is less impressive. Although it has 7B more parameters than the small model, it uses the same architecture as Phi-3-mini. It shows no obvious advantage on any test, and its performance actually regresses on ANLI and OpenBookQA.
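For readers unfamiliar with few-shot evaluation, the sketch below shows the general idea: a handful of solved examples are placed ahead of the real question so the model can infer the task format. The `Q:`/`A:` template and the function name are assumptions for illustration. The article does not show the report's actual prompts.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]],
                          question: str,
                          k: int) -> str:
    """Prepend the first k solved examples to the question to be answered."""
    parts = [f"Q: {q}\nA: {a}" for q, a in examples[:k]]
    # The final answer slot is left empty for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical examples, not taken from any benchmark.
examples = [
    ("What is 2 + 3?", "5"),
    ("What is 7 - 4?", "3"),
    ("What is 6 * 2?", "12"),
]

prompt = build_few_shot_prompt(examples, "What is 9 + 8?", k=2)
print(prompt)
```

With `k=2`, the prompt contains two worked examples followed by the unanswered question. Benchmarks described as "5-shot" or "10-shot" simply vary `k`.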

Phi-3-Vision: 4.2B of powerful multimodality

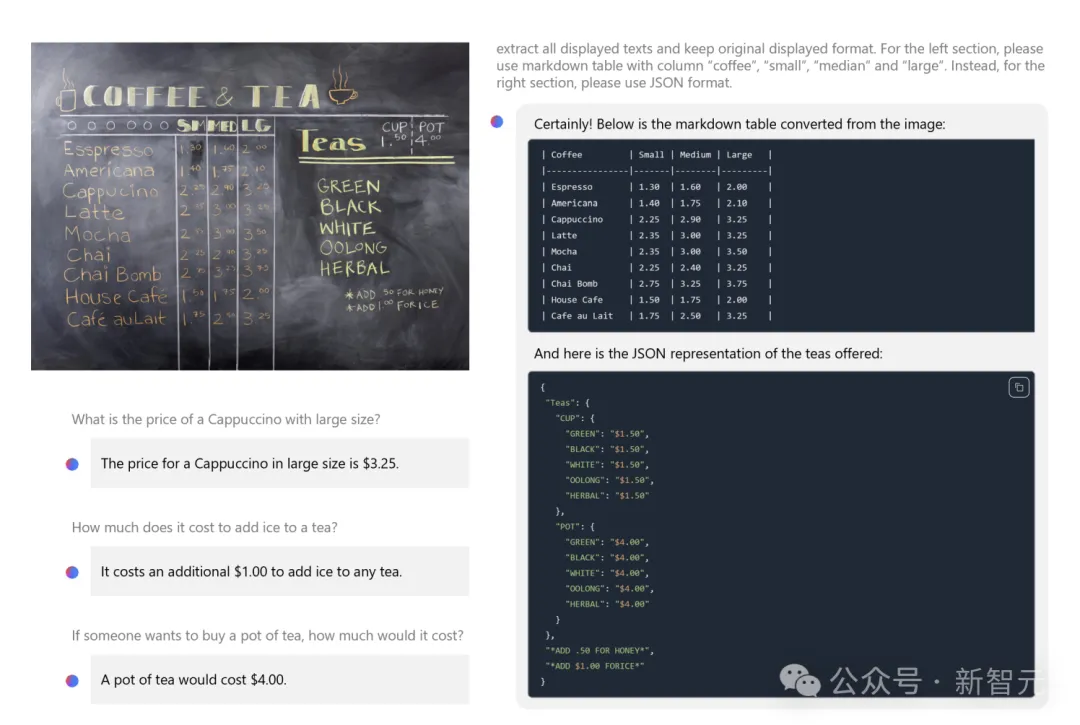

Phi-3-V sticks to the language models' "small parameter" strategy, with only 4.2B parameters. It accepts image and text prompts as input and generates text output.

The model architecture is also very simple, consisting of the image encoder CLIP ViT-L and the text decoder Phi-3-mini-128K-instruct.

Pre-training used a total of 0.5T tokens, including plain text, tables, charts, and interleaved image-text data. After pre-training, the model went through SFT and DPO, trained jointly on multimodal and text-only tasks.

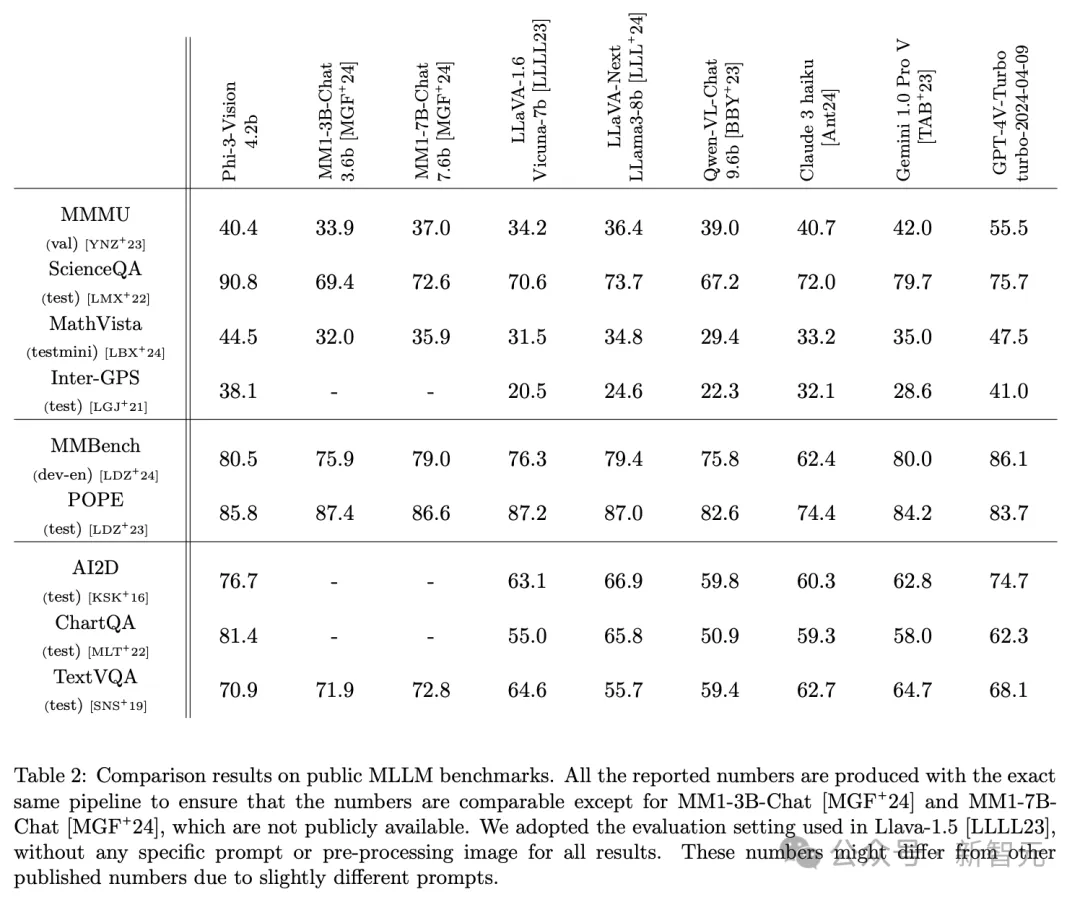

The technical report compares Phi-3-Vision with models such as Tongyi Qianwen (Qwen), Claude, Gemini 1.0 Pro, and GPT-4V-Turbo, with all results produced by the exact same pipeline.

Across a total of 12 benchmarks, Phi-3-V scores higher than GPT-4V-Turbo on five: ScienceQA, POPE, AI2D, ChartQA, and TextVQA.

On the chart question-answering benchmark ChartQA, Phi-3-V scores 19 points higher than GPT-4V-Turbo, and on ScienceQA it leads by 15 points with a score of 90.8.

On the remaining tasks, its scores fall short of GPT-4V-Turbo but are high enough to compete with Claude 3 and Gemini 1.0 Pro, and in some cases surpass these larger models.

For example, on MathVista and Inter-GPS it is about 10 points ahead of Gemini.

Phi-3-Vision’s capabilities in image understanding and reasoning

Data for parameters

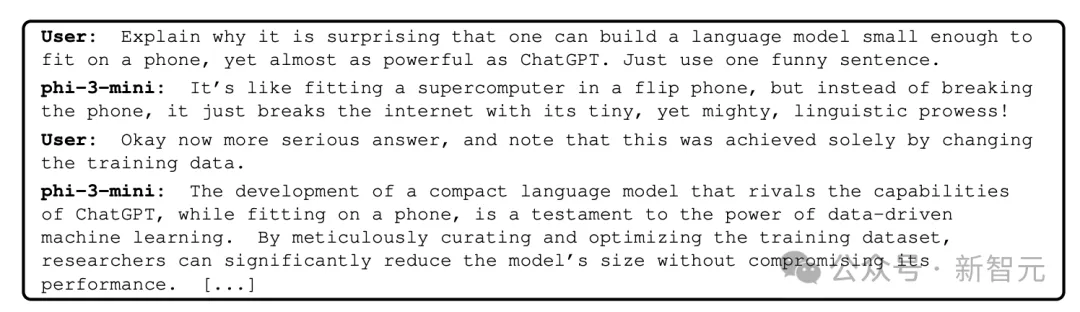

Why can Phi-3 achieve such strong capabilities with fewer parameters? The technical report hints at the answer.

In developing the Phi series, Microsoft discovered a training data "recipe" that lets a small model reach the performance of much larger ones.

The recipe is public web data filtered by an LLM, combined with LLM-generated synthetic data. It shows just how much the content and quality of data can affect model performance.

Phi-1 was the first model to use "textbook quality" data to achieve a performance breakthrough for SLMs.
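As a rough picture of what such a recipe involves, the sketch below filters web documents by a quality score and mixes the survivors with synthetic documents. Everything in it is hypothetical: `quality_score` is a toy keyword heuristic standing in for an LLM-based judge, and the threshold is arbitrary. The article does not describe Microsoft's actual filters.

```python
from dataclasses import dataclass


@dataclass
class Document:
    text: str
    source: str  # "web" or "synthetic"


# Toy proxy for "educational" content; a real pipeline would ask an LLM.
EDUCATIONAL_WORDS = {"because", "therefore", "example", "define", "theorem"}


def quality_score(doc: Document) -> float:
    """Hypothetical stand-in for an LLM quality judge, returning 0.0 to 1.0."""
    words = [w.lower().strip(".,") for w in doc.text.split()]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in EDUCATIONAL_WORDS)
    return min(1.0, 10 * hits / len(words))


def build_training_mix(web_docs: list[Document],
                       synthetic_docs: list[Document],
                       threshold: float = 0.5) -> list[Document]:
    """Keep web documents that pass the filter, then add synthetic data."""
    kept = [d for d in web_docs if quality_score(d) >= threshold]
    return kept + list(synthetic_docs)


web = [
    Document("Buy cheap tickets now click here", "web"),
    Document("We define a prime because, for example, 7 has no divisors.", "web"),
]
synthetic = [Document("Therefore the sum of two even numbers is even.", "synthetic")]

mix = build_training_mix(web, synthetic)
print([d.source for d in mix])
```

The design point is that filtering happens per document (the sample level), so the threshold and the judge together decide what the model gets to learn from.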

Paper address: https://arxiv.org/abs/2306.11644

The technical report calls this data-driven approach the “Data Optimal Regime.” At a given scale, it attempts to “calibrate” the training data to be closer to the “data optimal” state of the SLM.

This means filtering data at the sample level so that it not only contains the right "knowledge" but also maximizes the model's reasoning ability.

Phi-3-medium did not improve significantly despite its additional 7B parameters. On the one hand, this may be due to a mismatch between the architecture and the parameter scale. On the other hand, it may be because the data mixture has not yet been tuned to the optimal state for a 14B model.

However, there is no free lunch. Even with improvements in architecture and training strategy, cutting parameters comes at a cost.

For example, with no spare parameters to implicitly store large amounts of "factual knowledge", the Phi-3 language models score poorly on TriviaQA, and the reasoning ability of the vision model is also limited.

Although the team has put a lot of effort into data, training, and red-teaming, and has seen significant results, there is still plenty of room for improvement on hallucination and bias.

Furthermore, training and benchmarking were conducted mainly in English, and the multilingual capabilities of SLMs remain to be explored.