Intel takes aim at NVIDIA's H100: new AI chip trains 40% faster and infers 50% faster, and the CEO dances to celebrate

Intel is starting to take on Nvidia head-on.

Late last night, Intel CEO Pat Gelsinger danced on stage as he showed off the company's latest AI chip, Gaudi 3:

Why was he so happy that he broke into a disco dance on stage?

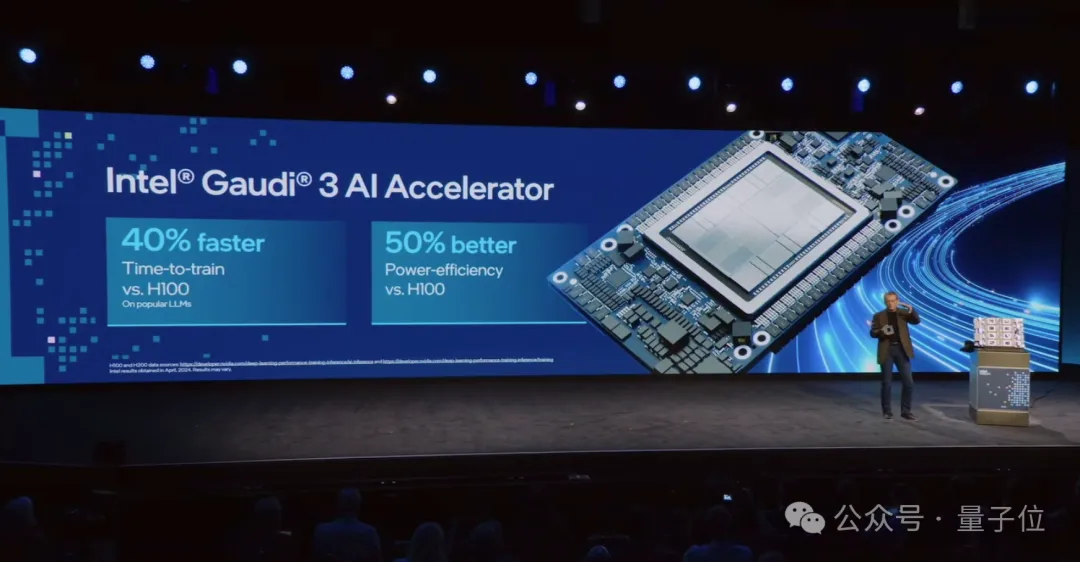

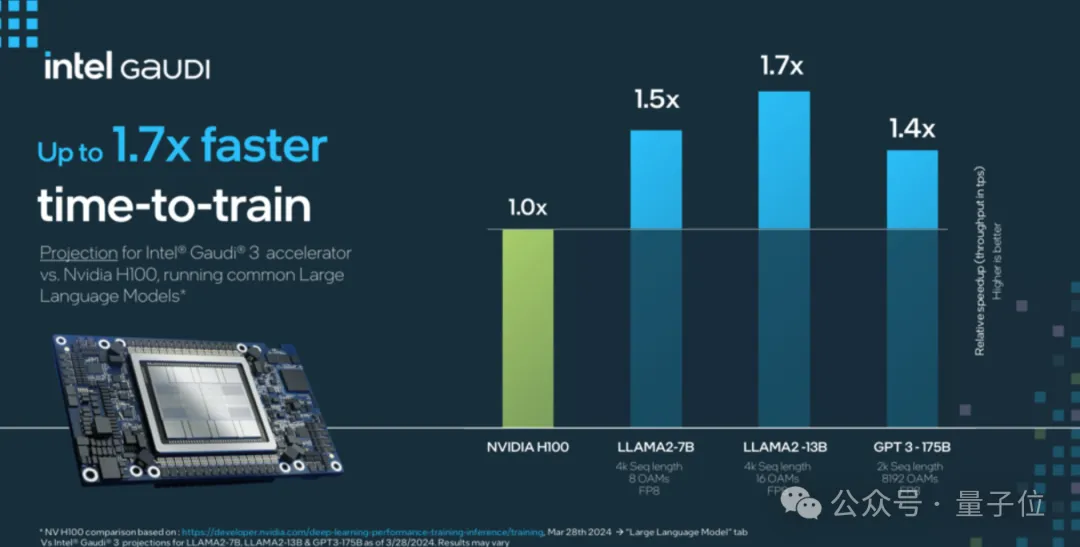

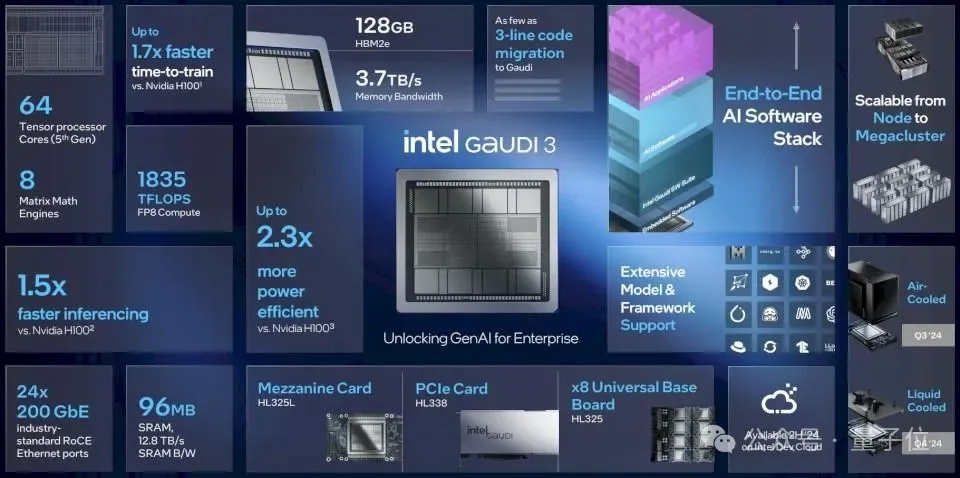

A look at Gaudi 3's performance figures makes the reason clear:

- Training large models: 40% faster than NVIDIA H100

- Inference on large models: 50% faster than NVIDIA H100

On top of that, although Gelsinger did not give specific figures on stage, he also said:

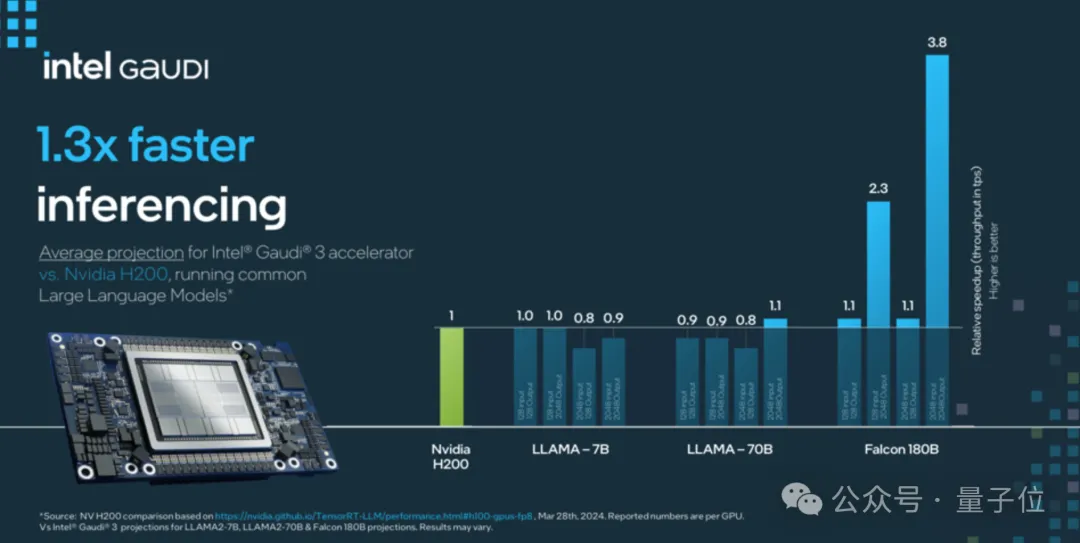

Gaudi 3's performance will be on par with the NVIDIA H200, and even better in some areas.

Let's take a closer look at how it stacks up against NVIDIA.

Gaudi 3, going head-to-head with Nvidia

Intel said that Gaudi 3 has been tested on Llama and can effectively train or deploy large AI models, including Stable Diffusion for text-to-image generation and Whisper for speech recognition.

On stage, Gelsinger also demonstrated an AI PC built on the latest Intel chips, which can handle multiple tasks quickly, such as processing email:

Another example is voice processing:

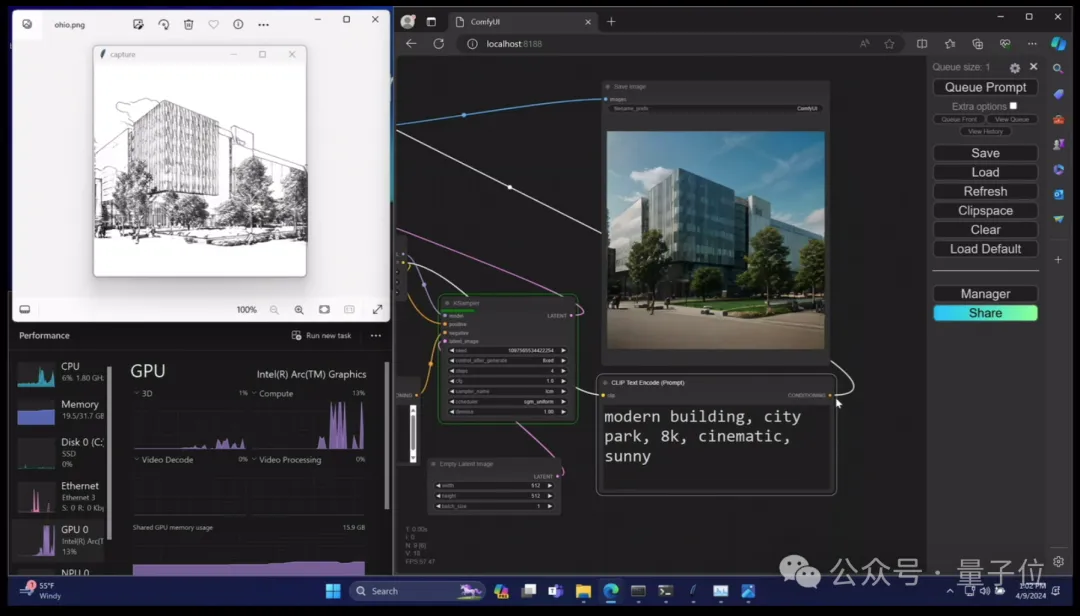

And image rendering:

The Intel colleagues running the demo also playfully showed a cartoon version of Gelsinger generated on the AI PC:

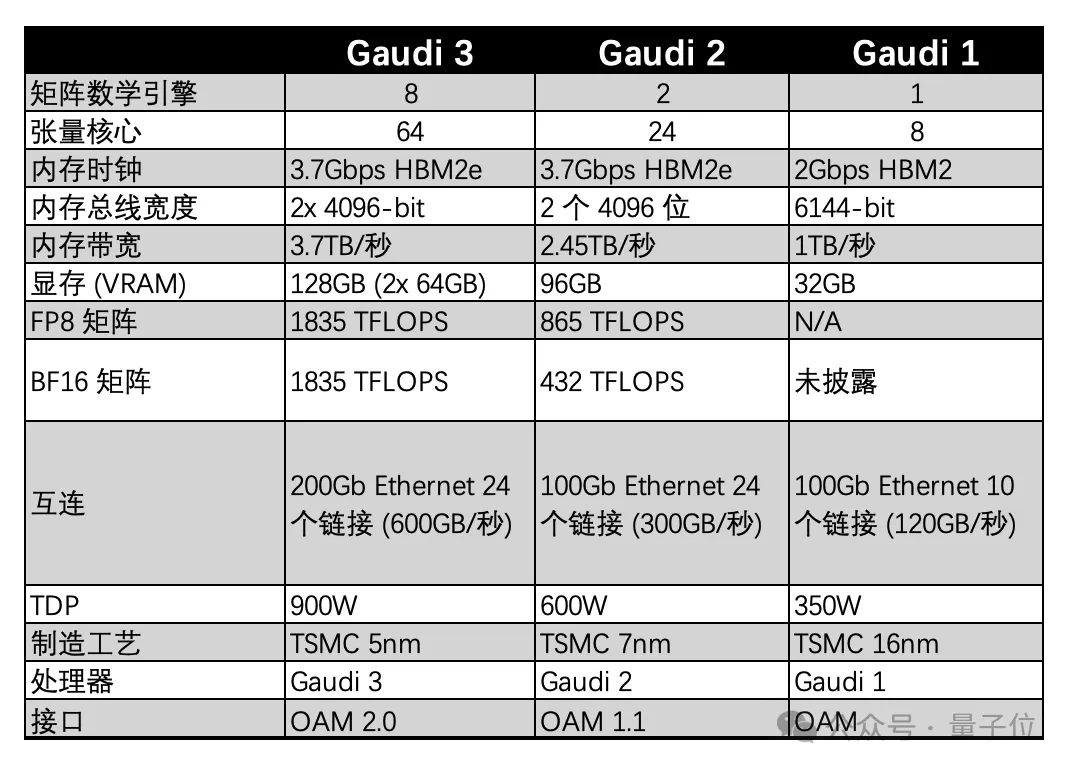

Gaudi 3 is reportedly manufactured on a 5-nanometer process. A comparison with the previous Gaudi generation is shown in the following table:

Intel said the Gaudi 3 chip will be widely available to customers in the third quarter of this year and will be used in systems from companies including Dell, HPE and Supermicro.

As for the specific price, Intel has not yet disclosed it.

But what’s even more surprising is that Gaudi 3 is just one of the products released at this Intel Vision event.

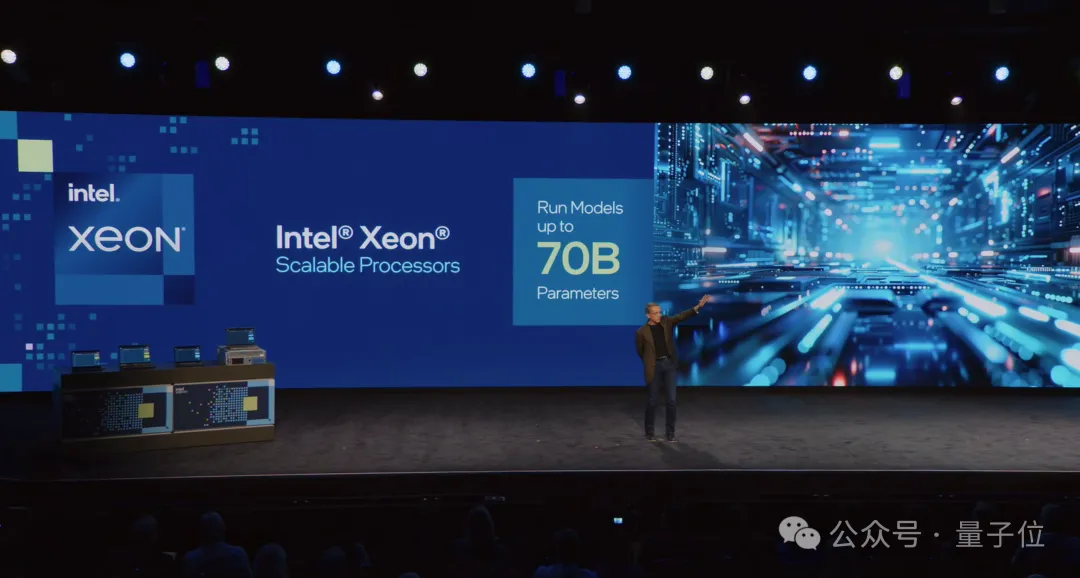

Xeon enters its sixth generation

That's right: Intel launched the fifth-generation Xeon only at the end of last year, and just a few months later the sixth-generation Xeon is already here!

(This time Intel really isn't "squeezing the toothpaste," that is, drip-feeding small incremental upgrades.)

On stage, Gelsinger affectionately called it his "little baby":

The sixth-generation Xeon comes in two architectures: Sierra Forest and Granite Rapids.

Sierra Forest is based on Intel's smaller, lower-power E-cores, while Granite Rapids is built on larger, higher-performance P-cores.

Gelsinger said on stage that the two are like twins:

More specifically, the Intel Xeon 6 processor with the Sierra Forest architecture delivers 2.7 times higher rack density.

Customers can replace old systems at a ratio of nearly 3:1, significantly reducing energy consumption and helping them meet their sustainability goals.

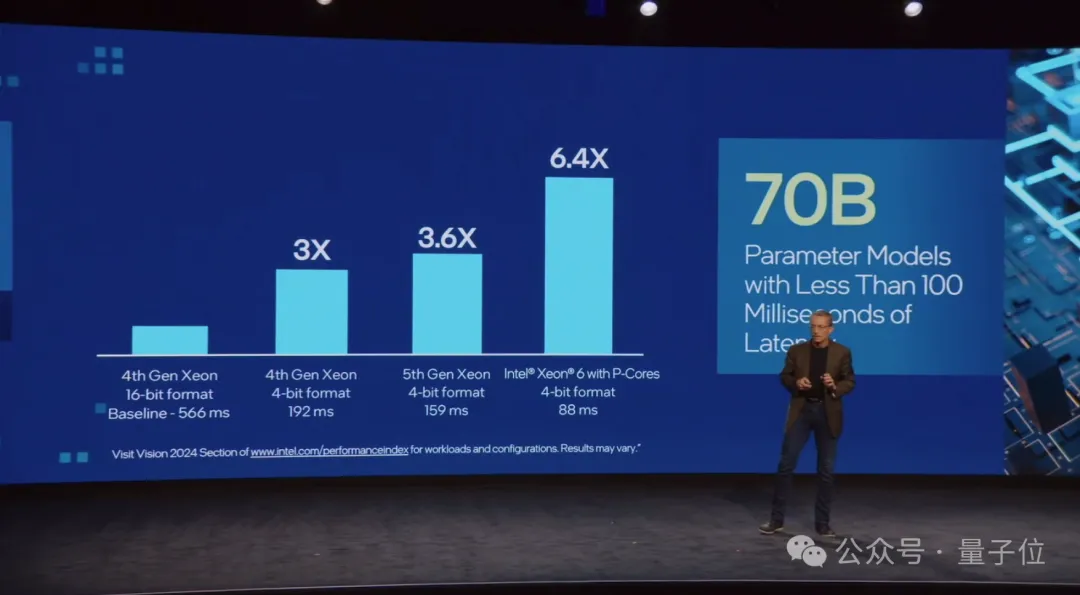

The Intel Xeon 6 processor with the Granite Rapids (P-core) architecture includes software support for the MXFP4 data format.

Compared with the fourth-generation Xeon processor using FP16, next-token latency can be reduced by up to 6.5 times, and the chip can run the 70-billion-parameter Llama-2 model.
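To give a rough sense of why a 4-bit format matters for a 70-billion-parameter model, here is a minimal back-of-the-envelope sketch of the weight memory footprint in FP16 versus MXFP4. It assumes the MXFP4 layout from the OCP Microscaling specification (4-bit elements, with one shared 8-bit scale per block of 32 elements) and counts weights only. The function name and constants are illustrative and do not come from Intel, and the sketch does not attempt to reproduce Intel's 6.5x latency figure, which depends on much more than memory size.

```python
def weight_gigabytes(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed to hold the model weights, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: 70 billion parameters, as in Llama-2 70B.
PARAMS = 70e9

# FP16 stores each weight in 16 bits.
FP16_BITS = 16

# Assumption (OCP Microscaling layout): 4-bit elements plus one shared
# 8-bit scale per block of 32 elements, i.e. 4 + 8/32 = 4.25 bits per weight.
MXFP4_BITS = 4 + 8 / 32

fp16_gb = weight_gigabytes(PARAMS, FP16_BITS)
mxfp4_gb = weight_gigabytes(PARAMS, MXFP4_BITS)

print(f"FP16 weights:  {fp16_gb:.1f} GB")
print(f"MXFP4 weights: {mxfp4_gb:.1f} GB")
print(f"Reduction:     {fp16_gb / mxfp4_gb:.2f}x")
```

Under these assumptions the weights shrink from about 140 GB to about 37 GB, which means far less data has to be read from memory for every generated token.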

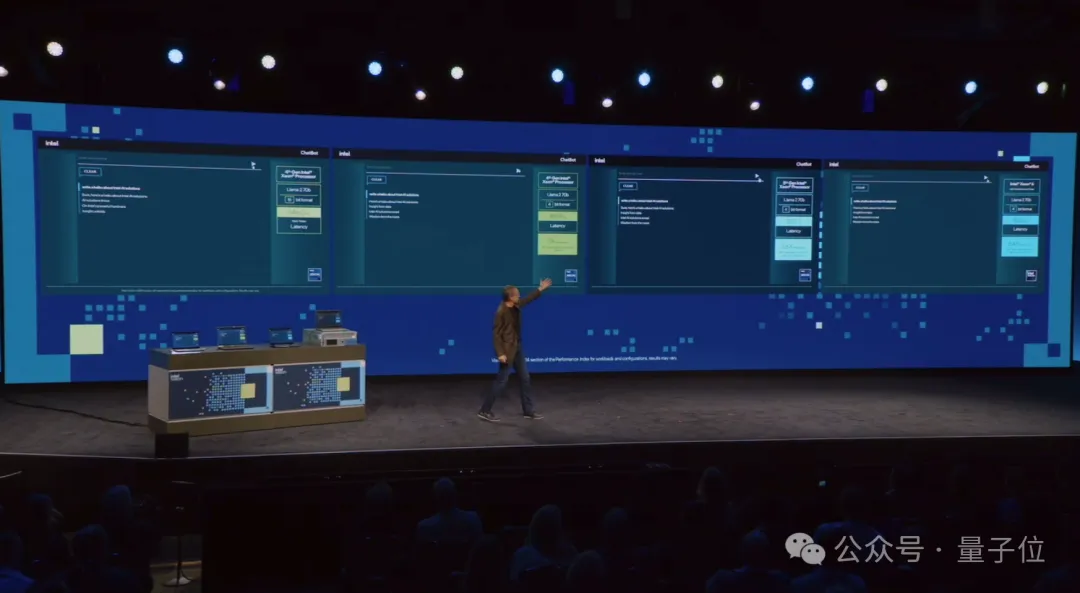

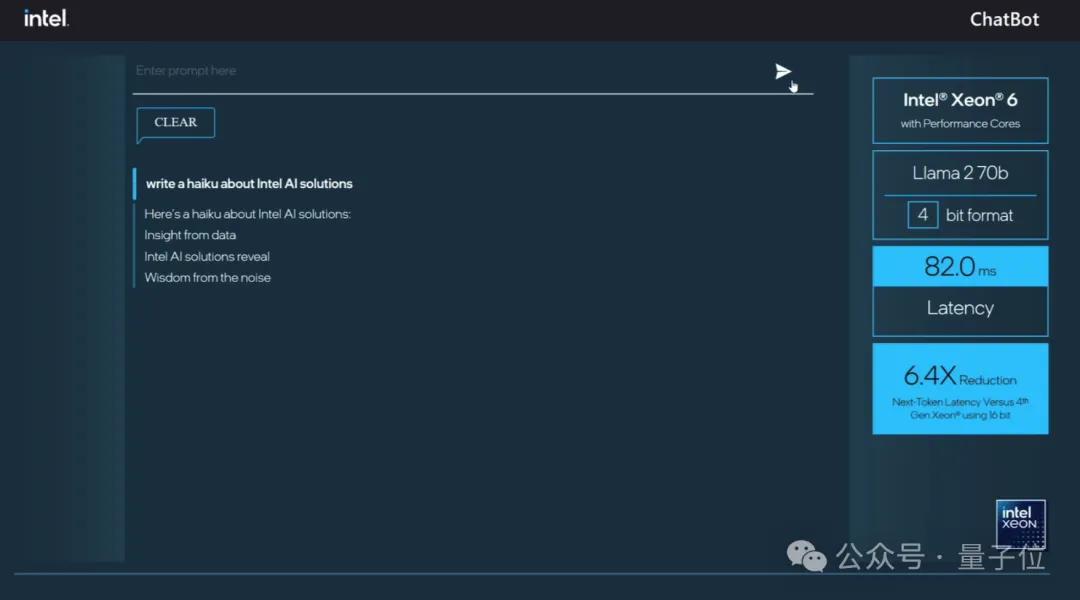

As for how fast Intel Xeon 6 can process large models, Gelsinger made a more direct comparison.

He put the fourth-generation, fifth-generation and latest sixth-generation Xeon side by side and ran a live speed comparison.

Judging by the visible generation speed alone, the sixth-generation Xeon is clearly much faster than its "predecessors".

In terms of measured latency, the sixth-generation Xeon running Llama 2 70B comes in at just 82 ms.

Under the same conditions, the latency of the sixth-generation Xeon compares with that of its "predecessors" as follows:
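For a feel of what an 82 ms latency means in practice, the short sketch below converts a per-token latency into an approximate generation rate. It assumes the 82 ms figure is the time to produce each next token for a single request, which the article suggests but does not spell out; the function name is illustrative.

```python
def tokens_per_second(latency_ms: float) -> float:
    """Convert a per-token latency in milliseconds into tokens per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Assumption: 82 ms is the next-token latency for a single stream.
rate = tokens_per_second(82.0)
print(f"{rate:.1f} tokens per second")
```

At roughly 12 tokens per second, the output would appear faster than most people read, which is consistent with the demo being described as visibly quick.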

The sixth-generation Xeon processor with the Sierra Forest architecture is reportedly due to launch in the second quarter of this year.

Netizens Reveal Intel’s “Secret Recipe”

This launch event, with Intel squaring up to Nvidia, also sparked heated discussion among netizens.

For example, one detail of Gaudi 3's packaging, namely its use of HBM2e memory, surprised some of them:

They are using HBM2e, which is what the Nvidia A100 used back in 2020.

Intel originally planned to adopt the most advanced HBM3e, but it apparently could not secure enough supply this time.

The same netizen went on to say:

This is one of Intel's secret sauces.

They can always rely on old technology to catch up/overtake new technology until current technology becomes easier to produce, acquire, and integrate.

In fact, manufacturing is one of Intel's key advantages in the semiconductor field. As one of the industry's pioneers, Intel has nearly all the resources and core capabilities needed across the chip business.

But when will Intel be able to compete fully with NVIDIA? This is also a question on many netizens' minds:

This time Intel is going head-to-head with the H100/H200, so when will it be able to take on Nvidia's latest "nuclear bomb", the B200?

Perhaps the answer can only be given by time.

But in any case, Intel’s release this time does provide an additional “fast, easy and economical” option for computing power in the AIGC era.