Learn to assemble a circuit board in 20 minutes! The open-source SERL framework achieves a 100% success rate on precision manipulation tasks

Faced with this challenge, researchers from the University of California, Berkeley, Stanford University, the University of Washington, and Google jointly developed SERL, an open-source software suite for sample-efficient robotic reinforcement learning, which aims to promote the widespread use of reinforcement learning on real robots.

- Project homepage: https://serl-robot.github.io/

- Open source code: https://github.com/rail-berkeley/serl

- Paper title: SERL: A Software Suite for Sample-Efficient Robotic Reinforcement Learning

The SERL framework mainly includes the following components:

1. Efficient reinforcement learning

In reinforcement learning, an agent (such as a robot) learns to perform a task by interacting with its environment: it tries different behaviors, receives reward signals based on their outcomes, and learns a policy that maximizes cumulative reward. SERL uses the RLPD algorithm, which lets the robot learn simultaneously from real-time interaction and from previously collected offline data, greatly shortening the training time needed to master new skills.
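RLPD (Reinforcement Learning with Prior Data) is an off-policy method, and one of its ingredients is "symmetric sampling": each training batch is drawn half from the online replay buffer and half from the offline (prior) data. The minimal sketch below illustrates only that batching idea, assuming buffers are plain Python lists of transitions; the function name and data format are hypothetical, and this is not SERL's implementation.

```python
import random

def symmetric_sample(online_buffer, offline_buffer, batch_size, rng=random):
    """Draw a batch half from online data and half from offline (prior) data.

    Sketch of RLPD-style symmetric sampling. Buffers are plain lists of
    transitions (hypothetical format), sampled uniformly with replacement.
    """
    half = batch_size // 2
    online_part = rng.choices(online_buffer, k=half)
    offline_part = rng.choices(offline_buffer, k=batch_size - half)
    batch = online_part + offline_part
    rng.shuffle(batch)  # mix the two sources so their order carries no signal
    return batch

# Usage: a small online buffer early in training, a larger demo buffer.
online = [("online", i) for i in range(10)]
offline = [("demo", i) for i in range(100)]
batch = symmetric_sample(online, offline, batch_size=256, rng=random.Random(0))
print(sum(1 for source, _ in batch if source == "online"))  # 128
```

Fixing the split at 50/50 means the few online transitions collected early in training are not drowned out by the much larger offline dataset.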

2. Multiple reward specification methods

SERL supports several ways of specifying rewards, so developers can tailor the reward to the needs of a specific task. For example, an assembly task with a fixed target position can use a reward based on the manipulator's pose, while more complex tasks can use a learned classifier or VICE to obtain an accurate reward. This flexibility helps guide the robot toward the most effective policy for the task at hand.
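The two simplest reward styles described above can be sketched as sparse (0/1) rewards: one compares the end-effector position with a fixed target, the other thresholds the output of a learned success classifier. This is a minimal illustration under stated assumptions: the function names, the 5 mm tolerance, and the classifier interface (a callable returning a success probability) are all hypothetical, and VICE additionally keeps updating its classifier during training, which is not shown here.

```python
import math

def pose_reward(ee_position, target_position, tolerance=0.005):
    """Sparse reward: 1.0 if the end effector is within `tolerance` metres of the target."""
    distance = math.dist(ee_position, target_position)  # Euclidean distance
    return 1.0 if distance <= tolerance else 0.0

def classifier_reward(image, success_classifier, threshold=0.5):
    """Sparse reward from a learned success classifier.

    `success_classifier` is a hypothetical callable mapping an observation
    (e.g. a camera image) to an estimated probability of task success.
    """
    return 1.0 if success_classifier(image) >= threshold else 0.0

# Usage: 2 mm from the target counts as success; 10 cm away does not.
print(pose_reward((0.5, 0.0, 0.1), (0.5, 0.0, 0.102)))  # 1.0
print(pose_reward((0.5, 0.0, 0.1), (0.5, 0.0, 0.2)))    # 0.0
```

A pose-based reward needs no learning but only works when the goal position is known in advance; a classifier trades that requirement for a few labeled examples of success.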

3. Reset-free training

Traditional robot learning algorithms need to reset the environment regularly before each new round of interaction, and in many tasks this cannot be done automatically. SERL's reset-free reinforcement learning feature trains a forward policy and a backward policy simultaneously, so that each one resets the environment for the other.
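The forward/backward scheme can be sketched as a scheduling loop: whichever policy matches the current state runs next, so the end state of one episode becomes the start state of the next and no manual reset is needed. This is a toy sketch under stated assumptions: `ToyBoxEnv` and `make_policy` are hypothetical stand-ins for a real robot cell and learned policies, and the data collection and policy updates that real training performs are only marked by a comment.

```python
import random

class ToyBoxEnv:
    """Hypothetical stand-in for a real robot cell: one object in box "A" or box "B"."""
    def __init__(self):
        self.object_in = "A"

def make_policy(target_box, success_prob, rng):
    """Hypothetical policy stub: moves the object to target_box with some probability."""
    def policy(env):
        if rng.random() < success_prob:
            env.object_in = target_box
    return policy

def reset_free_training(env, forward, backward, num_episodes):
    """Alternate forward (A -> B) and backward (B -> A) episodes without manual resets."""
    history = []
    for _ in range(num_episodes):
        # Pick the policy whose start state matches where the object is now.
        if env.object_in == "A":
            name, policy, goal = "forward", forward, "B"
        else:
            name, policy, goal = "backward", backward, "A"
        policy(env)  # real training would also store transitions and update this policy
        history.append((name, env.object_in == goal))
        # No reset: the state this episode ends in is where the next one starts.
    return history

rng = random.Random(0)
env = ToyBoxEnv()
history = reset_free_training(env, make_policy("B", 0.8, rng), make_policy("A", 0.8, rng), 10)
print(history)
```

A failed forward episode leaves the object in box A, so the forward policy simply tries again; a success hands the scene over to the backward policy.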

4. Robot control interface

SERL provides a set of Gym environment interfaces for Franka arm tasks as standard examples, which users can easily extend to other manipulators.
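A Gym-style robot environment exposes `reset()` and `step(action)`. The sketch below follows the Gymnasium convention (`reset` returns `(obs, info)`, `step` returns `(obs, reward, terminated, truncated, info)`) for a hypothetical one-dimensional reaching task, without importing any library. It is not one of SERL's Franka environments; the class name, the 1 cm action limit, and the reward are assumptions chosen for illustration.

```python
class ToyReachEnv:
    """Hypothetical 1-D reaching task following the Gymnasium reset/step convention."""

    def __init__(self, target=0.3, max_steps=50):
        self.target = target
        self.max_steps = max_steps
        self.position = 0.0
        self.steps = 0

    def reset(self, seed=None, options=None):
        self.position = 0.0
        self.steps = 0
        return self._obs(), {}

    def step(self, action):
        # Clip each commanded displacement to +/- 1 cm so a single action stays small.
        delta = max(-0.01, min(0.01, float(action)))
        self.position += delta
        self.steps += 1
        terminated = abs(self.position - self.target) < 1e-3  # reached the target
        truncated = self.steps >= self.max_steps and not terminated  # ran out of time
        reward = 1.0 if terminated else 0.0
        return self._obs(), reward, terminated, truncated, {}

    def _obs(self):
        return {"position": self.position, "target": self.target}

# Usage: a constant action drives the end effector to the target.
env = ToyReachEnv()
obs, info = env.reset(seed=0)
terminated = truncated = False
steps = 0
while not (terminated or truncated):
    obs, reward, terminated, truncated, info = env.step(0.01)
    steps += 1
print(steps, reward, terminated)  # 30 1.0 True
```

Keeping the robot behind this narrow interface is what lets the same learning code run on a different arm: only the environment class needs to change.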

5. Impedance controller

To ensure that the robot can explore and operate safely and accurately in complex physical environments, SERL provides a dedicated impedance controller for the Franka arm that maintains accuracy while preventing excessive torque after contact with external objects.
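A Cartesian impedance controller behaves like a virtual spring and damper: it commands a force $F = K(x_d - x) - D\dot{x}$, which a real arm maps to joint torques via $\tau = J^\top F$. One simple way to bound contact forces, shown below, is to clip the position error before multiplying by the stiffness, so the spring term can never exceed $K \cdot e_{\max}$ per axis. This is a generic, translation-only sketch with made-up gains, not SERL's controller; a real controller also handles orientation, the Jacobian mapping, and runs at a high fixed rate.

```python
def clip(value, limit):
    """Clamp value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))

def impedance_force(x, x_dot, x_des, stiffness, damping, max_error):
    """Per-axis Cartesian impedance law F = K * clip(x_des - x) - D * x_dot.

    Clipping the position error bounds the spring term to stiffness * max_error,
    so a distant or blocked target cannot produce an arbitrarily large force.
    """
    force = []
    for xi, vi, xdi, k, d in zip(x, x_dot, x_des, stiffness, damping):
        error = clip(xdi - xi, max_error)
        force.append(k * error - d * vi)
    return force

# Usage: a 10 cm error on x is clipped to 1 cm, so the x force is 10 N, not 100 N.
force = impedance_force(
    x=[0.0, 0.0, 0.0], x_dot=[0.0, 0.0, 0.0], x_des=[0.1, 0.0, -0.002],
    stiffness=[1000.0] * 3, damping=[60.0] * 3, max_error=0.01)
print(force)  # approximately [10.0, 0.0, -2.0]
```

Small errors (like the 2 mm on z) pass through unchanged, so precision near the target is preserved while large commanded jumps are softened.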

Through the combination of these technologies and methods, SERL greatly shortens the training time while maintaining a high success rate and robustness, enabling robots to learn to complete complex tasks in a short time and be effectively applied in the real world.

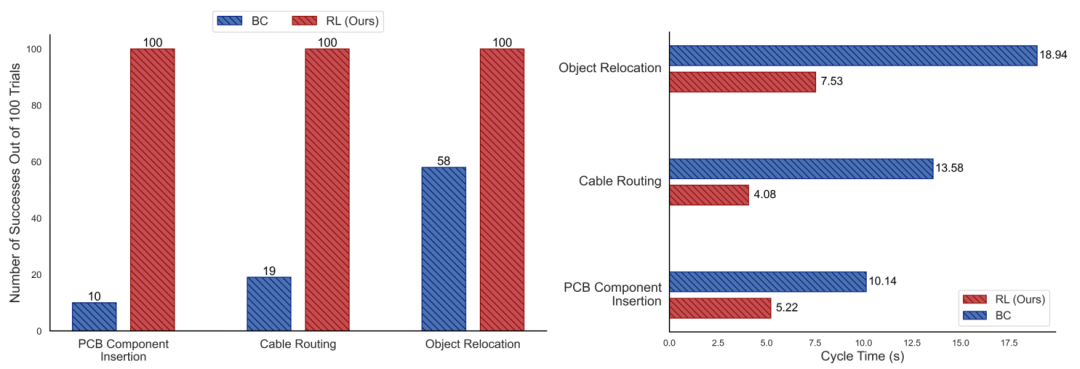

Figures 1 and 2: Comparison of success rate and cycle time between SERL and behavioral cloning across tasks. With a similar amount of data, SERL's success rate is several times higher (up to 10 times) than that of behavioral cloning, and it completes each task at least twice as fast.

Applications

1. PCB component assembly:

Inserting through-hole components into PCBs is a common yet challenging robotic task. Component pins bend very easily, and the clearance between the holes and the pins is very small, so the robot must be both precise and gentle during assembly. After just 21 minutes of autonomous learning, SERL enabled the robot to achieve a 100% task success rate. Even under unseen disturbances, such as the circuit board being moved or the camera view being partially occluded, the robot stably completes the assembly.

Figures 3, 4, and 5: While inserting circuit board components, the robot copes with various disturbances it never encountered during training and successfully completes the task.

2. Cable routing:

In the assembly of many mechanical and electronic devices, cables must be routed precisely along specific paths, a task that demands high precision and adaptability. Flexible cables deform easily during routing, and the process may be subject to disturbances such as the cable being accidentally moved or the clip position changing, which makes the task hard to solve with traditional non-learning methods. SERL achieves a 100% success rate in as little as 30 minutes. Even when the clip is placed in a position different from the one used in training, the robot generalizes its learned skill and routes the cable correctly.

Figures 6, 7, and 8: The robot routes the cable through a clip placed in a different position than during training, without any additional training.

3. Object pick-and-place:

In warehousing and retail, robots often need to move items from one place to another, which requires them to identify and handle specific items. During reinforcement learning training, under-actuated objects are difficult to reset automatically. Using SERL's reset-free reinforcement learning feature, the robot learned two policies simultaneously in 1 hour and 45 minutes, achieving a 100/100 success rate: the forward policy moves objects from box A to box B, and the backward policy moves them from box B back to box A.

Figures 9, 10, and 11: SERL trained two policies, one that moves objects from right to left and one that moves them from left back to right. The robot not only achieves a 100% success rate on the training objects but also handles objects it has never seen before.

Main authors

1. Jianlan Luo

Jianlan Luo is currently a postdoctoral scholar in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, where he works with Professor Sergey Levine at Berkeley Artificial Intelligence Research (BAIR). His main research interests are machine learning, robotics, and optimal control. Before returning to academia, he was a full-time researcher at Google X, working with Professor Stefan Schaal. Before that, he earned a master's degree in computer science and a Ph.D. in mechanical engineering from the University of California, Berkeley, where he worked with Professor Alice Agogino and Professor Pieter Abbeel. He has also been a visiting research scholar at DeepMind's London headquarters.

2. Zheyuan Hu

He holds an undergraduate degree in Computer Science and Applied Mathematics from the University of California, Berkeley. He currently conducts research in the RAIL laboratory headed by Professor Sergey Levine. He has a strong interest in robot learning, focusing on methods that enable robots to acquire dexterous manipulation skills quickly and broadly in the real world.

3. Charles Xu

He is a fourth-year undergraduate student majoring in electrical engineering and computer science at the University of California, Berkeley. Currently, he conducts research in the RAIL laboratory headed by Professor Sergey Levine. His research interests lie at the intersection of robotics and machine learning, aiming to build autonomous control systems that are highly robust and capable of generalization.

4. You Liang Tan

He is a Research Engineer at Berkeley's RAIL Laboratory, supervised by Professor Sergey Levine. He previously received his bachelor's degree from Nanyang Technological University, Singapore and completed his master's degree from Georgia Institute of Technology, USA. Prior to that, he was a member of the Open Robotics Foundation. His work focuses on real-world applications of machine learning and robotics software technologies.

5. Stefan Schaal

He received his PhD in mechanical engineering and artificial intelligence from the Technical University of Munich, Germany, in 1991. He was a postdoctoral researcher in the Department of Brain and Cognitive Sciences and the Artificial Intelligence Laboratory at MIT, an invited researcher at the ATR Human Information Processing Research Laboratories in Japan, and an adjunct assistant professor at the Georgia Institute of Technology and in the Department of Kinesiology at Pennsylvania State University in the United States. He also led the Computational Learning Group of the Japanese ERATO project, the Kawato Dynamic Brain Project (ERATO/JST). In 1997, he became a professor of computer science, neuroscience, and biomedical engineering at USC and was later promoted to tenured professor. His research interests include statistics and machine learning, neural networks and artificial intelligence, computational neuroscience, functional brain imaging, nonlinear dynamics, nonlinear control theory, robotics, and biomimetic robots.

He was one of the founding directors of the Max Planck Institute for Intelligent Systems in Germany, where he led the Autonomous Motion Department for many years. He is currently chief scientist at Intrinsic, a new robotics subsidiary of Alphabet (Google's parent company). Stefan Schaal is an IEEE Fellow.

6. Chelsea Finn

She is an assistant professor of computer science and electrical engineering at Stanford University. Her lab, IRIS, studies intelligence through large-scale robot interaction and is part of SAIL and the ML Group. She is also a member of the Google Brain team. She is interested in how robots and other intelligent agents can develop a broad range of intelligent behaviors through learning and interaction. She completed her PhD in computer science at the University of California, Berkeley, and her bachelor's degree in electrical engineering and computer science at the Massachusetts Institute of Technology.

7. Abhishek Gupta

He is an assistant professor in the Paul G. Allen School of Computer Science and Engineering at the University of Washington, where he directs the WEIRD Laboratory. Previously, he was a postdoctoral scholar at MIT, working with Russ Tedrake and Pulkit Agrawal. He completed his PhD in machine learning and robotics at BAIR, University of California, Berkeley, under the supervision of Professors Sergey Levine and Pieter Abbeel, and earned his bachelor's degree at the University of California, Berkeley, as well. His main research goal is to develop algorithms that enable robotic systems to learn to perform complex tasks in a variety of unstructured environments, such as offices and homes.

8. Sergey Levine

He is an associate professor in the Department of Electrical Engineering and Computer Science at the University of California, Berkeley. His research focuses on algorithms that enable autonomous agents to learn complex behaviors, particularly general methods that enable any autonomous system to learn to solve any task. Applications for these methods include robotics, as well as a range of other areas where autonomous decision-making is required.