High-performance network framework XDP technology

1. Basic concepts of XDP

XDP stands for eXpress Data Path. It is a high-performance, programmable packet-processing framework provided by the Linux kernel. XDP intercepts packets in the receive (RX) direction as soon as they arrive from the network card, processes them quickly by running eBPF instructions in the kernel, and connects seamlessly to the kernel protocol stack.

XDP is not a kernel bypass. Instead, it adds a fast data path between the network card and the kernel protocol stack. Because XDP is built on eBPF, it inherits eBPF's strengths: programmability, on-the-fly deployment, and safety.

An XDP smart network card extends the XDP concept. On a smart network card that supports eBPF instructions, the eBPF program that XDP would otherwise run on the CPU is downloaded to the card. This both saves CPU resources and offloads rules to hardware.

XDP uses eBPF to provide a high-performance network processing framework, and users can customize network processing behavior by following standard eBPF programming guidelines. In addition, the kernel has added the AF_XDP address family, through which packets matched in the kernel XDP framework are delivered to user space. This extends XDP from the kernel to user-space application scenarios.

2. The overall framework of XDP

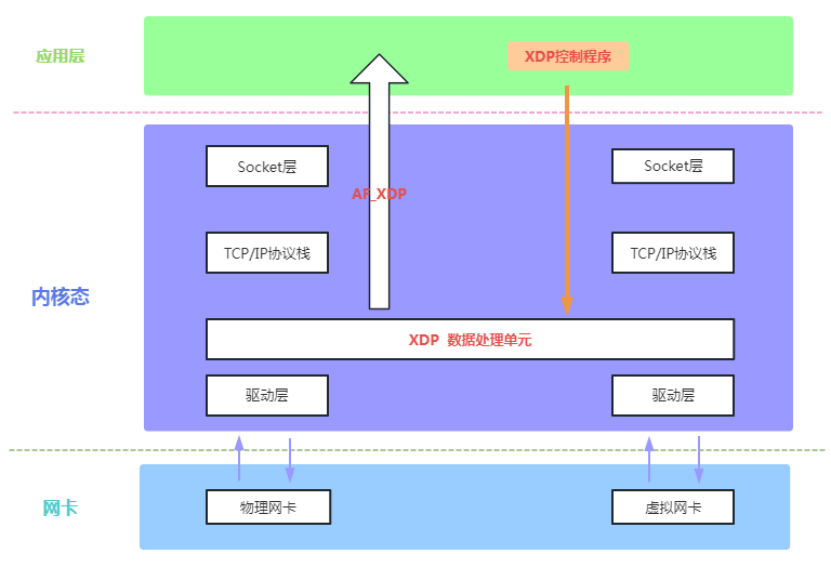

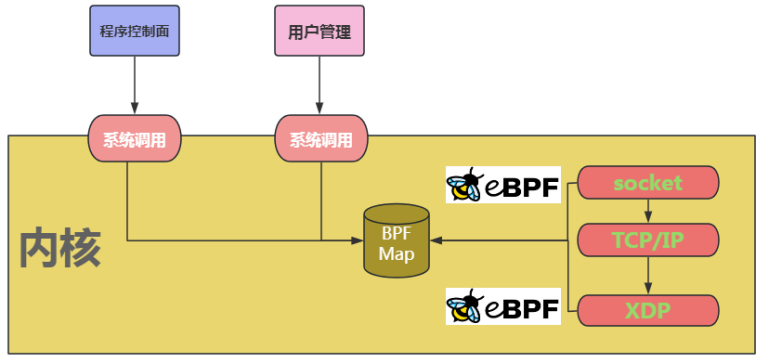

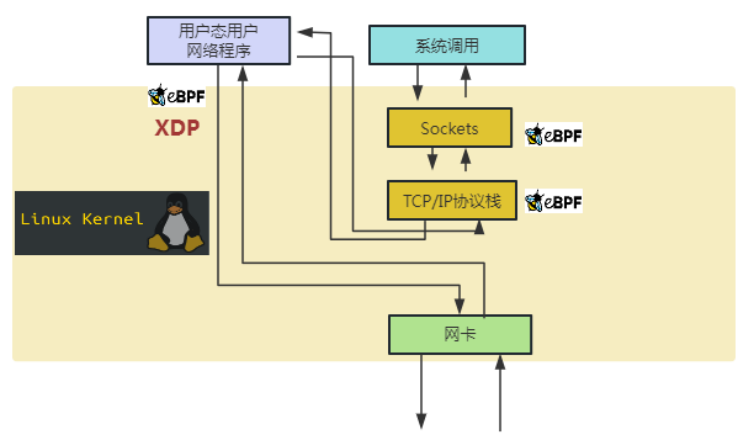

Figure 1 below shows the overall framework of XDP: its relative position in the kernel and how it meets the requirements of a data plane development framework.

Figure 1 covers the network card, the XDP framework, the TCP/IP protocol stack, the socket interface, the application layer, and other layers, spanning the entire path that a network packet takes from the network card to the server application. The gray part in the middle of Figure 1 (XDP Packet Processor) is the XDP framework. Its data plane processing unit sits between the network card driver and the protocol stack, and actually runs at the driver layer.

Packets travelling from the network card to the CPU first reach the XDP framework through the network card driver, where they are processed by the user-defined eBPF program running in the framework. The possible processing results include Drop, Forward, and Receive Local. Packets to be received locally continue along the original kernel path into the TCP/IP protocol stack, while packets to be forwarded or dropped are handled entirely within the XDP framework. This latter traffic makes up a large share of real network traffic, and its execution path is much shorter than the traditional kernel path.

The black dotted line in the gray part of Figure 1 shows how the upper-layer control plane loads, updates, and configures the eBPF program in the XDP framework; the kernel provides the corresponding system calls for the control plane to manage the data plane. Figure 1 thus presents XDP as a complete high-performance network framework that combines a data plane and a control plane.
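The Drop / Forward / Receive Local decision described above can be sketched as a small user-space model. This is not an eBPF program (a real one would be written in restricted C and attached to the driver hook); it only illustrates the verdict logic. The numeric action codes follow the kernel's `enum xdp_action`, forwarding is represented here by `XDP_REDIRECT` (a program may also use `XDP_TX` to send a packet back out of the same interface), and the address tables, `classify`, and `make_frame` are hypothetical names invented for the illustration.

```python
import struct
from enum import IntEnum


class XdpAction(IntEnum):
    # Numeric values mirror enum xdp_action in the kernel's <linux/bpf.h>.
    XDP_ABORTED = 0
    XDP_DROP = 1
    XDP_PASS = 2
    XDP_TX = 3
    XDP_REDIRECT = 4


ETH_HLEN = 14        # Ethernet header: dst MAC, src MAC, EtherType
IPV4_HLEN = 20       # IPv4 header without options
ETH_P_IP = 0x0800    # EtherType for IPv4

# Hypothetical policy tables, invented for this illustration only.
BLOCKED_SOURCES = {"203.0.113.7"}
FORWARDED_DESTINATIONS = {"198.51.100.10"}


def classify(frame: bytes) -> XdpAction:
    """Return a verdict for one raw Ethernet frame."""
    # Bounds check first, as an eBPF program must do before reading packet data.
    if len(frame) < ETH_HLEN + IPV4_HLEN:
        return XdpAction.XDP_PASS
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype != ETH_P_IP:
        return XdpAction.XDP_PASS        # not IPv4: leave it to the kernel stack
    src, dst = struct.unpack_from("!4s4s", frame, ETH_HLEN + 12)
    src_ip = ".".join(str(b) for b in src)
    dst_ip = ".".join(str(b) for b in dst)
    if src_ip in BLOCKED_SOURCES:
        return XdpAction.XDP_DROP        # discarded inside the XDP layer
    if dst_ip in FORWARDED_DESTINATIONS:
        return XdpAction.XDP_REDIRECT    # forwarded without entering the stack
    return XdpAction.XDP_PASS            # local traffic continues to TCP/IP


def make_frame(src_ip: str, dst_ip: str) -> bytes:
    """Build a minimal Ethernet + IPv4 frame for the demo (no payload, checksum 0)."""
    eth = bytes(6) + bytes(6) + struct.pack("!H", ETH_P_IP)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, IPV4_HLEN, 0, 0, 64, 6, 0,
                     bytes(int(p) for p in src_ip.split(".")),
                     bytes(int(p) for p in dst_ip.split(".")))
    return eth + ip
```

Calling `classify(make_frame("203.0.113.7", "192.0.2.1"))` yields `XDP_DROP` under these example tables, while a frame that matches neither table yields `XDP_PASS` and would continue into the protocol stack.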

3. Introduction to XDP application development

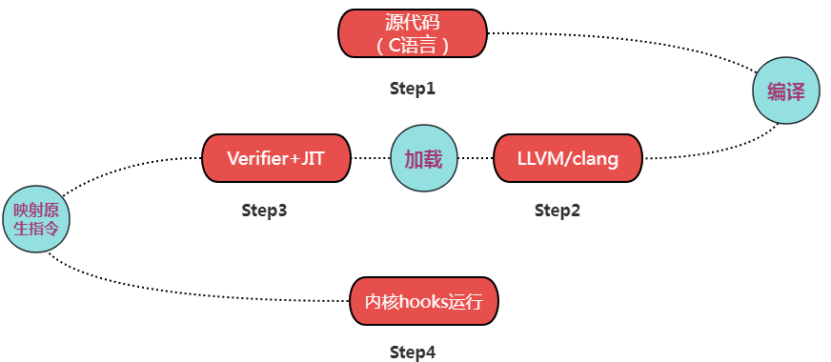

The XDP framework is based on eBPF technology. BPF is a general-purpose RISC instruction set that was first proposed at the Berkeley laboratory in 1992. In 2013, BPF was enhanced to become eBPF, which was officially merged into the Linux kernel in 2014. eBPF provides a mechanism for running small programs when various kernel and application events occur. Figure 2 below describes the eBPF development and execution workflow and its specific application in XDP.

Figure 2 shows a typical eBPF development and execution workflow. Developers write programs in a subset of the C language (the code runs in the kernel, so the standard C library is not available) and compile them into eBPF instructions (bytecode) with the LLVM/clang compiler. Once the bytecode has passed the checks of the eBPF verifier, the in-kernel just-in-time (JIT) compiler maps the eBPF instructions to the processor's native instructions (opcodes), and the program is loaded onto one of the preset hooks in the kernel's modules. The XDP framework is one such hook: a fast path for network data that the kernel exposes in the network card driver. Other typical hooks in the kernel include kernel functions (kprobes), user-space functions (uprobes), system calls, fentry/fexit, tracepoints, network routing, TC, TCP congestion control algorithms, and sockets.

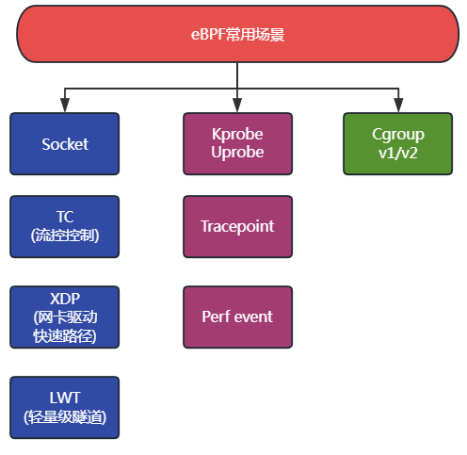

XDP is one specific application of eBPF: a network fast path implemented by the kernel. Figure 3 below lists typical applications built on the eBPF hook points in the kernel.

Compared with traditional user-mode/kernel-mode programs, eBPF/XDP has the following typical characteristics:

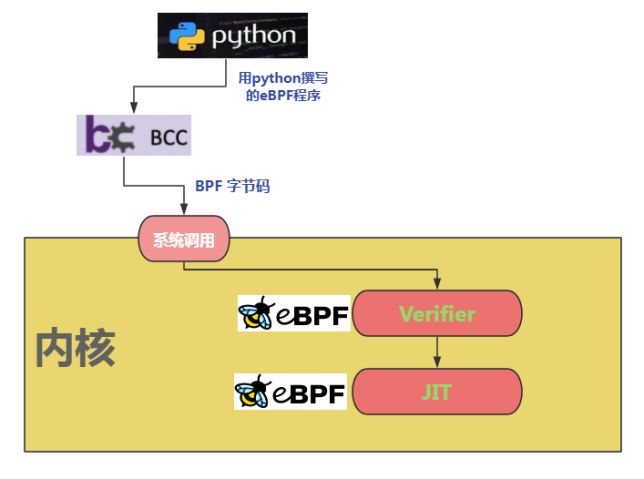

(1) As shown in Figure 4 below, the in-kernel JIT compiler maps eBPF bytecode to native processor instructions to achieve high performance, while the program verifier checks program safety and provides a sandboxed execution environment. Its safety checks include whether the program contains loops that may not terminate, whether the program length exceeds the limit, whether memory accesses go out of bounds, and whether the program contains unreachable instructions. The biggest advantage is that programs can be updated at runtime without interrupting the workload.
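To give a feel for the structural checks listed in item (1), here is a toy checker written as a user-space model. It is a deliberately simplified sketch, not the kernel verifier: the `(opcode, target)` instruction format, the `toy_verify` name, and the default `max_insns` value are assumptions made for the illustration, memory-access checking is left out, and any backward control-flow cycle is rejected even though a real verifier may accept loops it can prove to be bounded.

```python
from typing import List, Tuple

# Hypothetical instruction format: (opcode, jump target index).
# The target is ignored for everything except "jmp" and "jcond".
Insn = Tuple[str, int]


def toy_verify(prog: List[Insn], max_insns: int = 4096) -> List[str]:
    """Return a list of rejection reasons; an empty list means 'accepted'."""
    if not prog:
        return ["empty program"]
    errors = []
    if len(prog) > max_insns:
        errors.append("program too long")

    def successors(i: int) -> List[int]:
        op, target = prog[i]
        if op == "exit":
            return []
        if op == "jmp":
            return [target]
        if op == "jcond":
            return [i + 1, target]   # fall through or take the branch
        return [i + 1]

    # Every successor index must land inside the program.
    for i in range(len(prog)):
        for s in successors(i):
            if not 0 <= s < len(prog):
                errors.append(f"insn {i}: jump or fall-through out of bounds")
    if errors:
        return errors                # stop before walking a malformed graph

    # Iterative depth-first search from instruction 0. An edge to an
    # instruction that is still on the current path (GREY) closes a cycle.
    WHITE, GREY, BLACK = 0, 1, 2
    color = [WHITE] * len(prog)
    color[0] = GREY
    stack = [(0, iter(successors(0)))]
    loop_found = False
    while stack:
        node, it = stack[-1]
        for s in it:
            if color[s] == GREY:
                loop_found = True
            elif color[s] == WHITE:
                color[s] = GREY
                stack.append((s, iter(successors(s))))
                break
        else:
            color[node] = BLACK
            stack.pop()
    if loop_found:
        errors.append("loop detected")
    unreachable = [i for i, c in enumerate(color) if c == WHITE]
    if unreachable:
        errors.append(f"unreachable instructions: {unreachable}")
    return errors
```

For example, `toy_verify([("mov", 0), ("jcond", 0), ("exit", 0)])` reports a loop because the conditional jump targets an earlier instruction, while a straight-line program ending in `exit` is accepted.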

(2) Data exchange between the kernel-space and user-space parts of an eBPF program is implemented through BPF maps, which resemble shared memory in inter-process communication. Supported map types include hash tables, arrays, LRU (Least Recently Used) caches, ring buffers, stack traces, and LPM (Longest Prefix Match) routing tables. As shown in Figure 5 below, BPF maps carry the data exchanged between user space and kernel space.

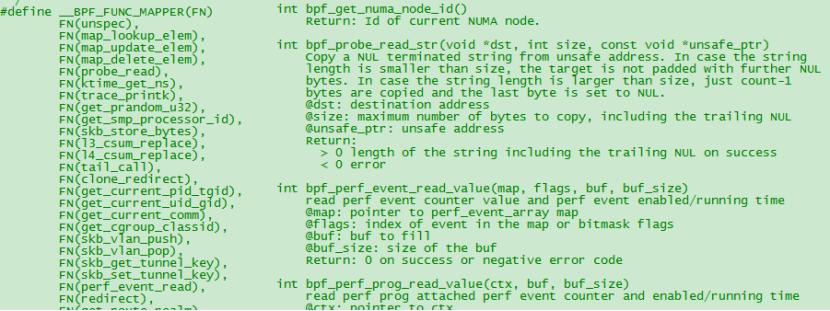
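The LPM map type mentioned in item (2) can be modelled in a few lines. This is a user-space sketch of the lookup semantics only: the `LpmTable` class and its method names are hypothetical, and it scans entries linearly rather than using the trie structure a kernel map would use. In the role split described above, the control plane in user space would call `update` and `delete`, while the packet-processing program would only call `lookup`.

```python
import ipaddress
from typing import Dict, Optional


class LpmTable:
    """User-space model of a longest-prefix-match map keyed by IP prefix."""

    def __init__(self) -> None:
        self._entries: Dict[object, str] = {}

    def update(self, prefix: str, value: str) -> None:
        # Insert or overwrite the entry for this prefix, e.g. "10.1.0.0/16".
        self._entries[ipaddress.ip_network(prefix)] = value

    def delete(self, prefix: str) -> None:
        del self._entries[ipaddress.ip_network(prefix)]

    def lookup(self, addr: str) -> Optional[str]:
        # Among all prefixes containing the address, the longest one wins.
        ip = ipaddress.ip_address(addr)
        best = None
        for net, value in self._entries.items():
            if ip in net and (best is None or net.prefixlen > best[0]):
                best = (net.prefixlen, value)
        return None if best is None else best[1]
```

With entries for `10.0.0.0/8` and `10.1.0.0/16`, a lookup of `10.1.2.3` returns the value stored under the `/16` prefix, because it is the more specific match.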

(3) eBPF makes up for the lack of the standard C library by providing helper functions. Common helpers cover obtaining random numbers, obtaining the current time, accessing maps, obtaining process/cgroup context, processing and forwarding network packets, accessing socket data, executing tail calls, accessing process stacks, and accessing system call parameters. In practice, more information is available through the man bpf-helpers command. Figure 6 below shows a helper function for obtaining random numbers, whose name starts with bpf.

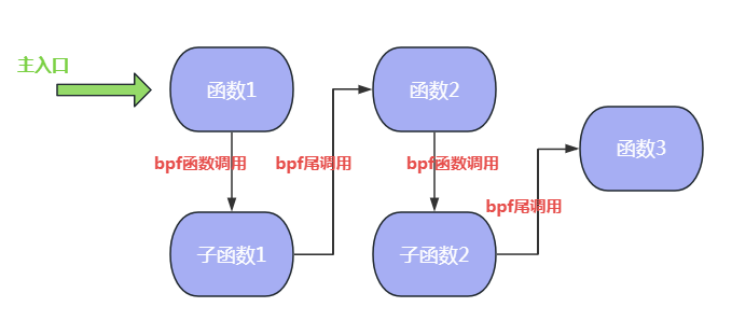

(4) Compared with development models such as pure kernel modules, eBPF provides distinctive tail-call and function-call mechanisms. Because kernel stack space is scarce, eBPF does not support unbounded loops, and nesting depth is limited (to a maximum of 32), eBPF introduces tail calls and function calls to implement jumps between eBPF programs. Both mechanisms are designed with performance in mind. A tail call reuses the current stack frame and jumps to another eBPF program; for details, refer to the manual for the bpf_tail_call helper function. Since eBPF programs are independent of each other, the tail-call mechanism in effect lets developers orchestrate programs as functional units. eBPF has supported function calls since Linux 4.16 and LLVM 6.0, and combining tail calls with function calls is supported in kernels after 5.9. Tail calls produce a larger program image but save memory, whereas function calls produce a smaller image but consume more memory. Developers can choose between the two according to actual needs. Figure 7 below shows tail calls and function calls working together in eBPF programs, where "tail call" denotes a tail call and "bpf2bpf call" denotes a function call.

Figure 7 Mixed collaboration of eBPF program tail calls and function calls

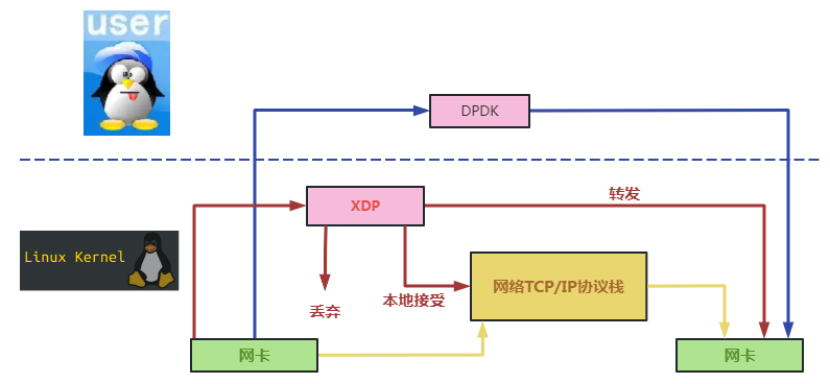
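The tail-call behavior described in item (4) of the previous section can be illustrated with a user-space trampoline. This is a sketch under stated assumptions rather than kernel code: the `TailCall` record, the `run` function, and the three-stage pipeline are hypothetical, and the default limit of 32 is simply the figure quoted in the text (the exact kernel constant may differ). The model captures three properties: a successful jump never returns to the caller, the program array can be changed between packets, and a failed jump (empty slot or exhausted budget) lets the caller carry on with its own result.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Union


@dataclass
class TailCall:
    slot: int        # index into the program array
    fallback: str    # verdict the caller returns if the jump cannot happen


Program = Callable[[dict], Union[str, TailCall]]


def run(prog_array: Dict[int, Program], entry: int, ctx: dict,
        max_tail_calls: int = 32) -> str:
    """Trampoline: one program runs at a time, so no call stack builds up."""
    current = prog_array[entry]
    jumps = 0
    while True:
        result = current(ctx)
        if not isinstance(result, TailCall):
            return result                  # ordinary return ends the chain
        target = prog_array.get(result.slot)
        if target is None or jumps >= max_tail_calls:
            return result.fallback         # failed jump: caller's result stands
        jumps += 1
        current = target                   # jump; the old program never resumes


# Hypothetical three-stage pipeline: parse -> acl -> account.
def parse(ctx: dict) -> Union[str, TailCall]:
    ctx["trace"].append("parse")
    return TailCall(slot=1, fallback="PASS")


def acl(ctx: dict) -> Union[str, TailCall]:
    ctx["trace"].append("acl")
    if ctx["src"] in ctx["blocked"]:
        return "DROP"
    return TailCall(slot=2, fallback="PASS")


def account(ctx: dict) -> Union[str, TailCall]:
    ctx["trace"].append("account")
    ctx["packets"] = ctx.get("packets", 0) + 1
    return "PASS"
```

Running `run({0: parse, 1: acl, 2: account}, 0, ctx)` walks the three stages in order for an allowed source address. Replacing or removing an entry in the dictionary between calls changes the pipeline without touching the other programs, which is the orchestration property the text attributes to tail calls.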

4. Comparison of similar technologies of XDP

Taking DPDK, currently the most widely used user-space data plane development framework, as the benchmark, the data flow diagram in Figure 8 below illustrates the implementation differences between XDP and DPDK. DPDK bypasses the kernel completely and runs in user space, while XDP runs in the kernel, between the network card and the kernel protocol stack. DPDK is a new data plane development framework separate from the kernel, whereas XDP is a fast data path attached to the kernel (in contrast to the original, slower kernel network path).

The following is the specific comparison between XDP and DPDK:

(1) DPDK monopolizes CPU cores and requires hugepage memory. XDP neither occupies CPUs exclusively nor requires hugepages, so its hardware requirements are lower than DPDK's.

(2) Projects that use DPDK as the data plane framework require heavy investment in development staff; see the typical projects FD.io (VPP) and OVS-DPDK. XDP is a fast data path native to the kernel and a lightweight data plane framework.

(3) DPDK requires code and license support at every level, including the network card driver and the user-space protocol stack. XDP is maintained and released directly under the Linux Foundation, and its technology ecosystem is maintained by the sub-project IO Visor.

(4) DPDK has the advantage in large-capacity, high-throughput scenarios, while XDP has the advantage in scenarios such as cloud native.

Typical XDP projects currently cover the following application scenarios:

- DDoS defense

- Firewall

- Load balancing based on XDP

- Protocol stack processing

- Cloud native application service optimization (such as K8S, OpenStack, Docker and other service improvement projects)

- Flow control

5. Famous open source projects based on eBPF/XDP

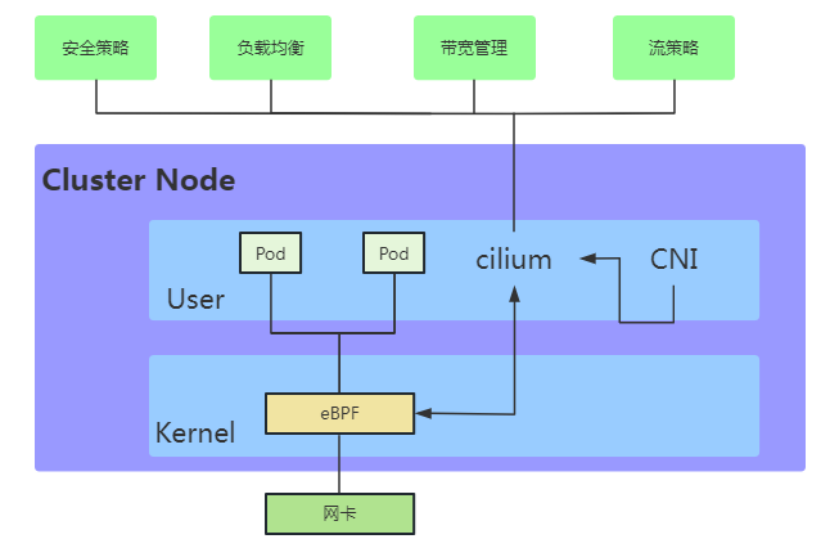

Cilium is an open source project that provides fast in-kernel network and security policy enforcement for containers using eBPF and XDP. The Cilium project implements distributed load balancing for traffic between Pods and external services, and is able to completely replace kube-proxy, using efficient hash tables in eBPF, allowing almost unlimited scaling. It also supports advanced features such as integrated ingress and egress gateways, bandwidth management and service mesh, and provides deep network and security visibility and monitoring.

As shown in Figure 9 below, eBPF/XDP (the little bee) sits between services such as containers and Pods on one side and the network cards on the other. It uses XDP technology to improve the performance and security of upper-layer services, dynamically and safely doing at kernel data-flow nodes what the kernel previously could not do.

Figure 10 shows a specific case from the Cilium project in which functionality is enhanced at the kernel network card layer and the socket layer through XDP and eBPF respectively. On the left side of Figure 10, user-defined network processing code is inserted into the XDP framework at the network card driver layer; on the right side, socket processing code is inserted into the socket layer. In this way, without modifying the kernel, functions can be extended dynamically and upgraded transparently to typical upper-layer node applications such as containers and Pods.

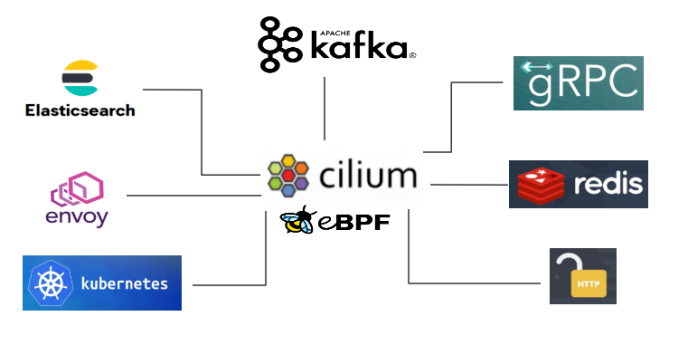

The Cilium project provides a very good model solution for service performance improvement and security improvement in cloud native scenarios. As shown in Figure 11, various common cloud native services benefit from eBPF/XDP to achieve performance improvements and security improvements.

Figure 11 The core value of eBPF/XDP in the Cilium project

6. The development prospects of XDP

To achieve a flexible data plane and accelerate NFV applications, the Linux Foundation established the sub-project IO Visor, an open source project that builds an open, programmable network data plane on top of the Linux kernel. XDP is a sub-project of IO Visor. The lack of virtualization in the Linux kernel is the biggest challenge for IO Visor in NFV scenarios, and XDP uses the eBPF virtual machine's on-the-fly execution to make up for this shortcoming. However, almost all virtual machines run in user space, and because of the security requirements placed on eBPF virtual machines running in the kernel, moving virtualization-related tasks into kernel space will be a considerable challenge.

In terms of performance, in 2019 Sebastiano Miano and others used eBPF programs attached to the XDP and TC hooks to reimplement the Linux firewall iptables, achieving several times to dozens of times the performance of the original iptables as the number of rules grows. In 2021, Yoann Ghigoff and others implemented a Memcached caching layer in the kernel based on eBPF, XDP, and TC, achieving higher performance than a DPDK kernel-bypass solution.

The XDP project opens a new path between the traditional kernel model and the new user-space frameworks, helping to avoid the resource investment trap caused by too large a leap between technologies. Microsoft has announced plans to start supporting XDP on the Windows platform in 2022. As the ecosystem gradually matures, the capabilities XDP brings, such as being lightweight, on-the-fly deployment, a high-performance data path, security, and reliability, will deliver increasing value.

China Mobile Smart Home Center will continue to track XDP technology closely, follow the industry's direction from a technical perspective, keep an open mind toward emerging technologies and actively embrace them, and help the industry use emerging technologies to bring practical digital and intelligent services to the general public.