Don't wait for OpenAI: the world's first Sora-like model is already open source!

Not long ago, OpenAI's Sora went viral with its stunning video generation results. It stood out among text-to-video models and became the focus of global attention.

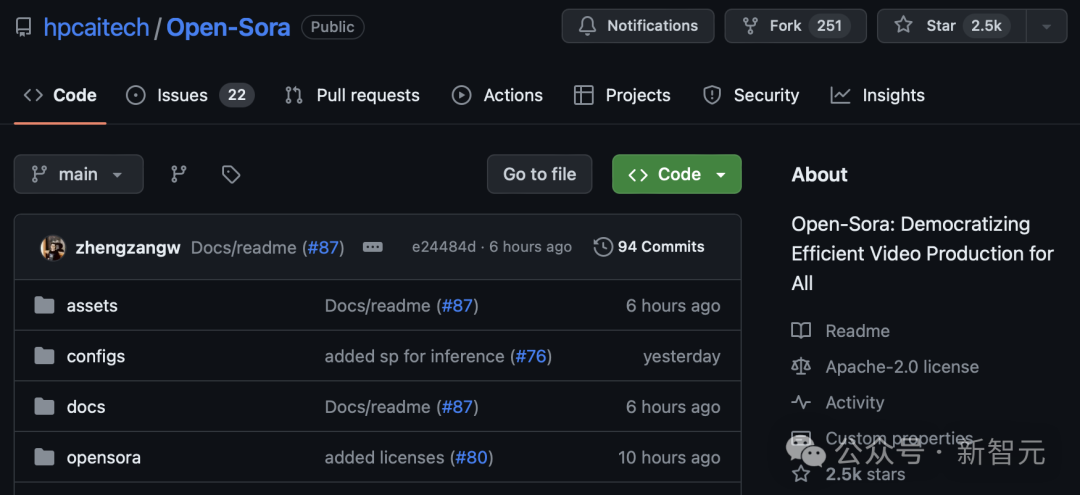

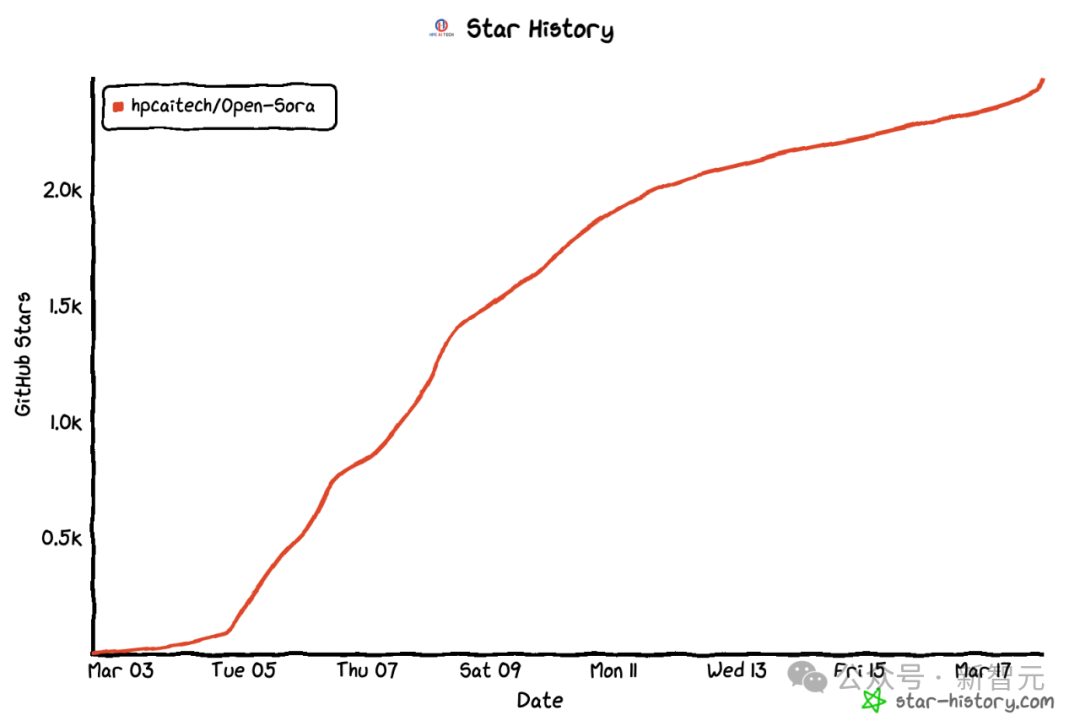

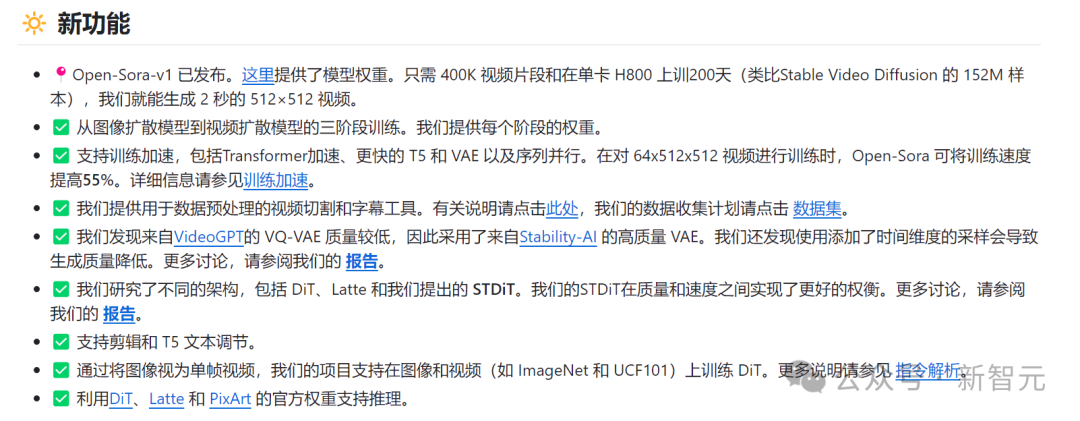

Two weeks after releasing a Sora training and inference reproduction pipeline that cut costs by 46%, the Colossal-AI team has fully open-sourced "Open-Sora 1.0", the world's first video generation model with a Sora-like architecture. The release covers the entire training process, including data processing, all training details, and the model weights, and the team invites AI enthusiasts worldwide to help usher in a new era of video creation.

Open-Sora open-source repository: https://github.com/hpcaitech/Open-Sora

For a sneak peek, let's look at a video of a bustling city generated by the "Open-Sora 1.0" model released by the Colossal-AI team.

A glimpse of a bustling city, generated by Open-Sora 1.0

This is just the tip of the iceberg of the Sora reproduction. The Colossal-AI team has released the text-to-video model architecture, the trained model weights, all training details of the reproduction, the data preprocessing pipeline, demos, and detailed tutorials on GitHub, fully free and open source.

Xinzhiyuan contacted the team right away and learned that they will keep publishing updates to Open-Sora and related developments. Interested readers can follow the Open-Sora open-source community.

A comprehensive look at the Sora reproduction plan

Next, we take an in-depth look at several key aspects of the Sora reproduction: model architecture design, the training plan, data preprocessing, examples of generated videos, and efficient training optimization strategies.

Model architecture design

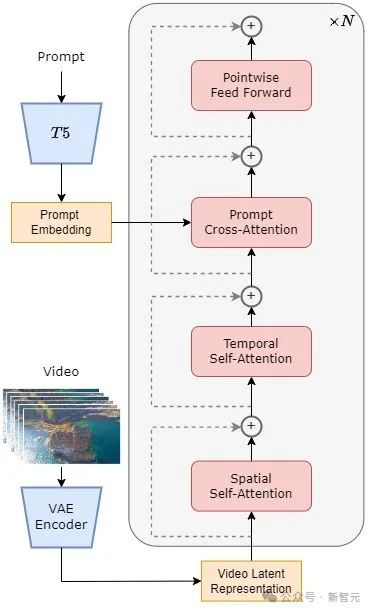

The model adopts the currently popular Diffusion Transformer (DiT) [1] architecture.

The team builds on PixArt-α [2], a high-quality open-source text-to-image model that also uses the DiT architecture. On top of it, they add temporal attention layers to extend the model to video data.

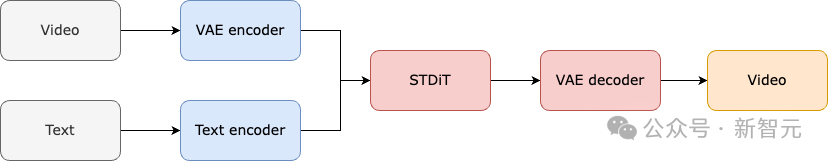

Specifically, the architecture consists of a pre-trained VAE, a text encoder, and an STDiT (Spatial Temporal Diffusion Transformer) model built on a spatial-temporal attention mechanism.

The structure of each STDiT layer is shown in the figure below. Each layer stacks a one-dimensional temporal attention module serially on top of a two-dimensional spatial attention module to model temporal relationships.

A cross-attention module placed after the temporal attention module aligns the features with the semantics of the text. Compared with full attention, this structure greatly reduces training and inference overhead.
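To make the layer ordering concrete, here is a minimal NumPy sketch of one STDiT-style layer: spatial attention within each frame, then temporal attention across frames at each spatial position, then cross-attention to text tokens. It is a toy under stated assumptions, not Open-Sora's code: single-head attention, random hypothetical weights, and no layer norms, MLP, or timestep conditioning, all of which a real DiT block includes.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attend(x_q, x_kv, W):
    """Single-head scaled dot-product attention with (hypothetical) weights W."""
    q, k, v = x_q @ W["q"], x_kv @ W["k"], x_kv @ W["v"]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v @ W["o"]

def stdit_block(x, text, params):
    """Toy STDiT-style layer: spatial attn -> temporal attn -> cross-attn.

    x:    (T, S, D) latent tokens (T frames, S spatial tokens per frame)
    text: (L, D) text-embedding tokens
    """
    # 1) Spatial attention: tokens attend within their own frame (batched over T).
    x = x + attend(x, x, params["spatial"])
    # 2) Temporal attention: each spatial position attends across the T frames.
    xt = np.swapaxes(x, 0, 1)                   # (S, T, D)
    xt = xt + attend(xt, xt, params["temporal"])
    x = np.swapaxes(xt, 0, 1)                   # back to (T, S, D)
    # 3) Cross-attention: every video token attends to the text tokens.
    return x + attend(x, text, params["cross"])

rng = np.random.default_rng(0)
T, S, L, D = 4, 16, 8, 32

def init_weights():
    # Random projection matrices for q, k, v and the output projection o.
    return {name: rng.normal(scale=D ** -0.5, size=(D, D)) for name in "qkvo"}

params = {"spatial": init_weights(), "temporal": init_weights(), "cross": init_weights()}
x = rng.normal(size=(T, S, D))
text = rng.normal(size=(L, D))
out = stdit_block(x, text, params)
print(out.shape)  # (4, 16, 32)
```

Swapping the first two axes is what turns the same attention routine into temporal attention: the frame axis becomes the sequence axis while spatial positions act as the batch.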

Compared with Latte [3], which also uses spatial-temporal attention, STDiT can make better use of pre-trained image DiT weights when continuing training on video data.

STDiT structure diagram

The training and inference pipeline of the whole model works as follows. During training, the encoder of a pre-trained Variational Autoencoder (VAE) first compresses the video data, and the STDiT diffusion model is then trained in the compressed latent space, conditioned on the text embeddings.

During inference, Gaussian noise is sampled in the VAE's latent space and fed into STDiT together with the prompt embedding to obtain denoised features. Finally, the VAE decoder decodes these features into the video.
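The training and inference flow just described can be sketched as a generic latent diffusion loop. Everything here is an assumption for illustration: `vae_encode`, `vae_decode`, and `stdit` are hypothetical stand-ins (pooling, upsampling, and a zero noise predictor), and the linear beta schedule with DDPM ancestral sampling is a common textbook choice, not necessarily the scheduler Open-Sora uses.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed linear beta schedule over N diffusion steps (standard DDPM choice).
N = 50
betas = np.linspace(1e-4, 0.02, N)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def vae_encode(video):
    # Hypothetical VAE-encoder stand-in: 8x spatial average pooling per frame.
    T, C, H, W = video.shape
    return video.reshape(T, C, H // 8, 8, W // 8, 8).mean(axis=(3, 5))

def vae_decode(latent):
    # Hypothetical VAE-decoder stand-in: 8x nearest-neighbour upsampling.
    return latent.repeat(8, axis=2).repeat(8, axis=3)

def stdit(z_t, t, text_emb):
    # Hypothetical placeholder for STDiT: predicts the added noise (zeros here).
    return np.zeros_like(z_t)

def training_loss(video, text_emb):
    z0 = vae_encode(video)                         # compress video into latents
    t = rng.integers(N)                            # random diffusion step
    eps = rng.standard_normal(z0.shape)            # Gaussian noise to add
    z_t = np.sqrt(alpha_bars[t]) * z0 + np.sqrt(1 - alpha_bars[t]) * eps
    eps_pred = stdit(z_t, t, text_emb)             # text-conditioned prediction
    return np.mean((eps_pred - eps) ** 2)          # epsilon-prediction MSE

def generate(latent_shape, text_emb):
    z = rng.standard_normal(latent_shape)          # start from latent noise
    for t in reversed(range(N)):                   # DDPM ancestral sampling
        eps_pred = stdit(z, t, text_emb)
        z = (z - betas[t] / np.sqrt(1 - alpha_bars[t]) * eps_pred) / np.sqrt(alphas[t])
        if t > 0:
            z = z + np.sqrt(betas[t]) * rng.standard_normal(latent_shape)
    return vae_decode(z)                           # decode latents into frames

video = rng.standard_normal((4, 3, 64, 64))        # (frames, channels, H, W)
text_emb = rng.standard_normal((8, 32))
loss = training_loss(video, text_emb)
out = generate((4, 3, 8, 8), text_emb)
print(out.shape)  # (4, 3, 64, 64)
```

With a real trained STDiT in place of the zero predictor, the same loop would turn noise into latents that resemble the training videos.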

Model training process

Training reproduction plan

According to the team, Open-Sora's reproduction plan draws on Stable Video Diffusion (SVD) [4] and consists of three stages:

1. Large-scale image pre-training;

2. Large-scale video pre-training;

3. Fine-tuning on high-quality video data.

Each stage continues training from the weights of the previous stage. Compared with single-stage training from scratch, multi-stage training reaches high-quality video generation more efficiently by progressively scaling up the data.
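The weight hand-off between stages can be expressed as a small plan plus a driver loop. This is a hypothetical sketch: the stage names, the `Stage` fields, and `fake_train` are illustrative inventions that paraphrase the article, not Open-Sora configuration keys.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Stage:
    name: str
    data: str
    init_from: Optional[str]   # stage whose weights initialize this one
    note: str

# Hypothetical description of the three stages, paraphrasing the article.
PLAN = [
    Stage("image_pretrain", "large-scale image data", None,
          "text-to-image model (Open-Sora 1.0 reuses PixArt-alpha weights)"),
    Stage("video_pretrain", "large-scale, diverse video data", "image_pretrain",
          "adds temporal attention; 256x256 resolution"),
    Stage("hq_finetune", "~10x less video, longer / higher-res / higher quality",
          "video_pretrain", "fine-tuning"),
]

def run_plan(plan, train_stage):
    """Run stages in order, initializing each from its predecessor's weights."""
    weights = {}
    for stage in plan:
        init = weights[stage.init_from] if stage.init_from else None
        weights[stage.name] = train_stage(stage, init)
    return weights

def fake_train(stage, init):
    # Hypothetical trainer: records the lineage of weights instead of training.
    return (init or []) + [stage.name]

weights = run_plan(PLAN, fake_train)
print(weights["hq_finetune"])  # ['image_pretrain', 'video_pretrain', 'hq_finetune']
```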

The three-stage training plan

Stage 1: Large-scale image pre-training

The first stage leverages large-scale image pre-training and mature text-to-image models to substantially reduce the cost of video pre-training.

The team told us that the wealth of large-scale image data on the Internet, together with advanced text-to-image techniques, makes it possible to train a high-quality text-to-image model whose weights then initialize the next stage of video pre-training.

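Meanwhile, because no high-quality spatial-temporal VAE is currently available, they adopted the image VAE pre-trained for Stable Diffusion [5]. This strategy not only ensures strong performance of the initial model but also significantly lowers the overall cost of video pre-training.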
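A common way to apply an image VAE to video is to fold the time axis into the batch axis, encode every frame independently, and unfold again. The sketch below shows that reshaping; `toy_image_encoder` is a hypothetical stand-in that only mimics the Stable Diffusion VAE's latent layout (8x spatial downsampling, 4 latent channels), and the article does not confirm Open-Sora's exact code path.

```python
import numpy as np

def encode_video_with_image_vae(video, image_encoder):
    """Encode a (B, T, C, H, W) video batch frame by frame with a 2D encoder."""
    B, T, C, H, W = video.shape
    frames = video.reshape(B * T, C, H, W)            # fold time into the batch
    latents = image_encoder(frames)                   # (B*T, c, h, w)
    return latents.reshape(B, T, *latents.shape[1:])  # unfold time again

def toy_image_encoder(frames):
    # Hypothetical stand-in for an image VAE encoder: 8x spatial pooling,
    # then a fixed mixing of the input channels into 4 latent channels.
    N, C, H, W = frames.shape
    pooled = frames.reshape(N, C, H // 8, 8, W // 8, 8).mean(axis=(3, 5))
    mix = np.full((4, C), 1.0 / C)
    return np.einsum("kc,nchw->nkhw", mix, pooled)

rng = np.random.default_rng(0)
video = rng.normal(size=(1, 8, 3, 64, 64))
latents = encode_video_with_image_vae(video, toy_image_encoder)
print(latents.shape)  # (1, 8, 4, 8, 8)
```

Because each frame is encoded on its own, the latents carry no temporal compression; modeling motion is left entirely to STDiT's temporal attention.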

Stage 2: Large-scale video pre-training

The second stage performs large-scale video pre-training to improve the model's generalization and to capture the temporal correlations in videos.

This stage trains on a large amount of video data to ensure a diverse range of video topics, which improves the model's generalization. The second-stage model adds temporal attention modules to the first-stage text-to-image model to learn temporal relationships in videos.

All other modules stay the same as in the first stage and are initialized from the first-stage weights, while the output of each temporal attention module is initialized to zero, which yields faster and more efficient convergence.
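Why zero-initializing the temporal attention output helps: with a residual connection, a zero output projection makes the new module an exact identity at the start of video training, so the network initially behaves like the pretrained image model. The single-head class below is a hypothetical NumPy sketch of that idea, not Open-Sora's module.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

class TemporalAttention:
    """Toy single-head temporal attention with a residual connection."""

    def __init__(self, dim, rng):
        scale = dim ** -0.5
        self.W_q = rng.normal(scale=scale, size=(dim, dim))
        self.W_k = rng.normal(scale=scale, size=(dim, dim))
        self.W_v = rng.normal(scale=scale, size=(dim, dim))
        self.W_o = np.zeros((dim, dim))       # zero-initialized output projection

    def __call__(self, x):
        # x: (S, T, D); each spatial position attends across its T frames.
        q, k, v = x @ self.W_q, x @ self.W_k, x @ self.W_v
        attn = softmax(q @ np.swapaxes(k, -1, -2) / np.sqrt(x.shape[-1]))
        return x + (attn @ v) @ self.W_o      # exact identity while W_o == 0

rng = np.random.default_rng(0)
layer = TemporalAttention(dim=16, rng=rng)
x = rng.normal(size=(8, 4, 16))
print(np.allclose(layer(x), x))  # True
```

The module still learns: the gradient with respect to `W_o` depends on `attn @ v`, which is non-zero, so the temporal path grows in gradually from the pretrained starting point.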

The Colossal-AI team used the open-source weights of PixArt-α [2] to initialize the second-stage STDiT model and used T5 [6] as the text encoder. They also pre-trained at a low resolution of 256x256, which further sped up convergence and reduced training costs.
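A quick calculation shows why low-resolution pre-training is cheaper. The helper below counts spatial tokens per frame after VAE compression and patchification; the 8x downsampling matches the Stable Diffusion VAE, while the 2x2 patch size is an assumption in the style of DiT-XL/2 models.

```python
def tokens_per_frame(height, width, vae_downsample=8, patch=2):
    """Spatial tokens per frame after VAE compression and patchification.

    vae_downsample=8 matches the Stable Diffusion image VAE; patch=2 is an
    assumed 2x2 spatial patch size.
    """
    return (height // vae_downsample // patch) * (width // vae_downsample // patch)

for res in (256, 512):
    s = tokens_per_frame(res, res)
    # resolution, tokens per frame, spatial-attention score entries per frame
    print(res, s, s * s)
```

Under these assumptions, going from 512x512 to 256x256 cuts the tokens per frame by 4x (1024 to 256) and the per-frame spatial attention scores by 16x.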

Stage 3: Fine-tuning on high-quality video data

The third stage fine-tunes on high-quality video data, significantly improving the quality of the generated videos.

The team noted that the third stage uses an order of magnitude less video data than the second stage, but the videos are longer, higher in resolution, and higher in quality. Fine-tuning this way lets them efficiently scale video generation from short to long clips, from low to high resolution, and from low to high fidelity.

The team stated that they used 64 H800 GPUs for training in the Open-Sora reproduction.

The second stage took a total of 2,808 GPU hours, or about US$7,000, and the third stage took 1,920 GPU hours, or about US$4,500. By this preliminary estimate, the entire training plan kept the cost of the Open-Sora reproduction to roughly US$10,000.
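The reported figures can be cross-checked with a few lines of arithmetic. The GPU hours and dollar amounts come from the article; the assumption that each stage ran on all 64 H800s at once (used for the wall-clock estimate) is ours. The two stages sum to about US$11,500, in line with the article's rough figure; no first-stage cost appears, consistent with the team starting from PixArt-α weights.

```python
# Reported figures: (GPU hours, approximate cost in USD).
stages = {
    "stage 2 (video pre-training)": (2808, 7000),
    "stage 3 (HQ fine-tuning)": (1920, 4500),
}
NUM_GPUS = 64  # assumption: every stage ran on all 64 H800s simultaneously

total_hours = sum(hours for hours, _ in stages.values())
total_usd = sum(usd for _, usd in stages.values())

for name, (hours, usd) in stages.items():
    # Implied price per GPU hour and approximate wall-clock duration.
    print(f"{name}: ${usd / hours:.2f}/GPU-hour, ~{hours / NUM_GPUS:.1f} h wall-clock")
print(f"total: {total_hours} GPU hours, ~${total_usd:,}")
# stage 2 (video pre-training): $2.49/GPU-hour, ~43.9 h wall-clock
# stage 3 (HQ fine-tuning): $2.34/GPU-hour, ~30.0 h wall-clock
# total: 4728 GPU hours, ~$11,500
```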

Data preprocessing

To further lower the barrier and complexity of reproducing Sora, the Colossal-AI team also provides convenient video data preprocessing scripts in the code repository, so anyone can easily start Sora reproduction pre-training. The scripts cover downloading public video datasets, splitting long videos into short clips based on shot continuity, and using the open-source multimodal large language model LLaVA [7] to generate precise prompts.
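Splitting by shot continuity usually means detecting cuts where consecutive frames differ sharply. The function below is a minimal, hypothetical sketch of that idea using a mean absolute difference between per-frame feature vectors (for example, colour histograms) and a fixed threshold; the repository's actual scripts may rely on a different detector and criteria.

```python
def split_into_shots(frame_features, threshold):
    """Split a video into shots by thresholding frame-to-frame differences.

    frame_features: list of per-frame feature vectors (e.g. colour histograms).
    Returns (start, end) frame-index pairs with end exclusive.
    """
    def distance(a, b):
        # Mean absolute difference between two feature vectors.
        return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

    boundaries = [0]
    for i in range(1, len(frame_features)):
        if distance(frame_features[i - 1], frame_features[i]) > threshold:
            boundaries.append(i)          # a large jump marks a cut
    boundaries.append(len(frame_features))
    return list(zip(boundaries[:-1], boundaries[1:]))

frames = [[0.1, 0.9]] * 3 + [[0.8, 0.2]] * 4 + [[0.5, 0.5]] * 2
print(split_into_shots(frames, threshold=0.2))  # [(0, 3), (3, 7), (7, 9)]
```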

The team noted that their batch video captioning code can annotate a video in about 3 seconds on two GPUs, with quality close to GPT-4V. The resulting video/text pairs can be used directly for training.
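The quoted throughput makes it easy to estimate captioning time for a dataset. This is a back-of-the-envelope sketch assuming perfectly parallel two-GPU workers; the 400K video count is borrowed purely for illustration from the training-set size mentioned later in the article.

```python
def captioning_hours(num_videos, seconds_per_video=3.0, gpus_per_worker=2, total_gpus=2):
    """Wall-clock hours to caption num_videos, assuming perfect data parallelism.

    Each worker (gpus_per_worker GPUs) captions one video every
    seconds_per_video seconds; workers run independently of one another.
    """
    workers = total_gpus // gpus_per_worker
    return num_videos * seconds_per_video / workers / 3600

print(round(captioning_hours(400_000), 1))                 # 333.3 (one 2-GPU worker)
print(round(captioning_hours(400_000, total_gpus=64), 1))  # 10.4 (32 workers)
```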

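With the open-source code they provide on GitHub, we can quickly generate the video/text pairs needed for training on our own datasets, significantly lowering the technical barrier and up-front preparation needed to start a Sora reproduction project.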

Video/text pairs generated automatically by the data preprocessing scripts

Generated video showcase

Let's look at some videos actually generated by Open-Sora. For example, here Open-Sora generates aerial footage of seawater lapping against the rocks of a cliffside coast.

Here Open-Sora captures a majestic aerial view of mountains and rivers, with waterfalls surging down the cliffs and finally flowing into a lake.

Beyond the skies, it can also go under the sea. Simply enter a prompt, and Open-Sora generates a shot of the underwater world in which a sea turtle swims leisurely among coral reefs.

Open-Sora can also show us the Milky Way and its twinkling stars in time-lapse.

If you have more interesting ideas for video generation, you can visit the Open-Sora open-source community to download the model weights and try it for free.

Link: https://github.com/hpcaitech/Open-Sora

Notably, the team states on GitHub that the current version was trained on only 400K training samples, so the model's generation quality and its ability to follow text prompts still need improvement. For example, the turtle in the video above has an extra leg. Open-Sora 1.0 also struggles with portraits and complex scenes.

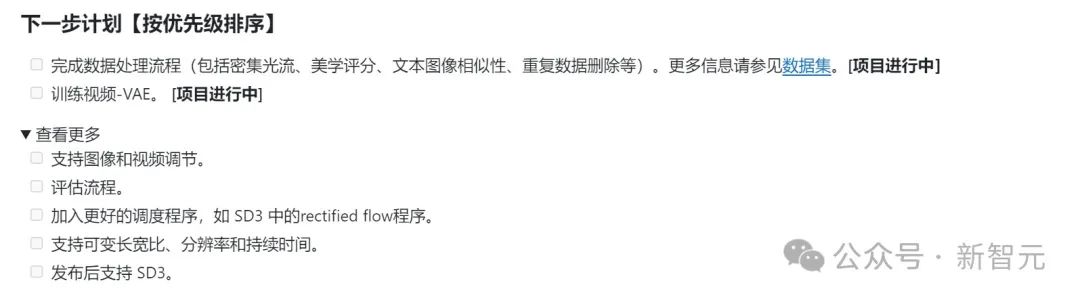

The team has posted a to-do list on GitHub, aiming to address these shortcomings and steadily improve generation quality.

Efficient training support

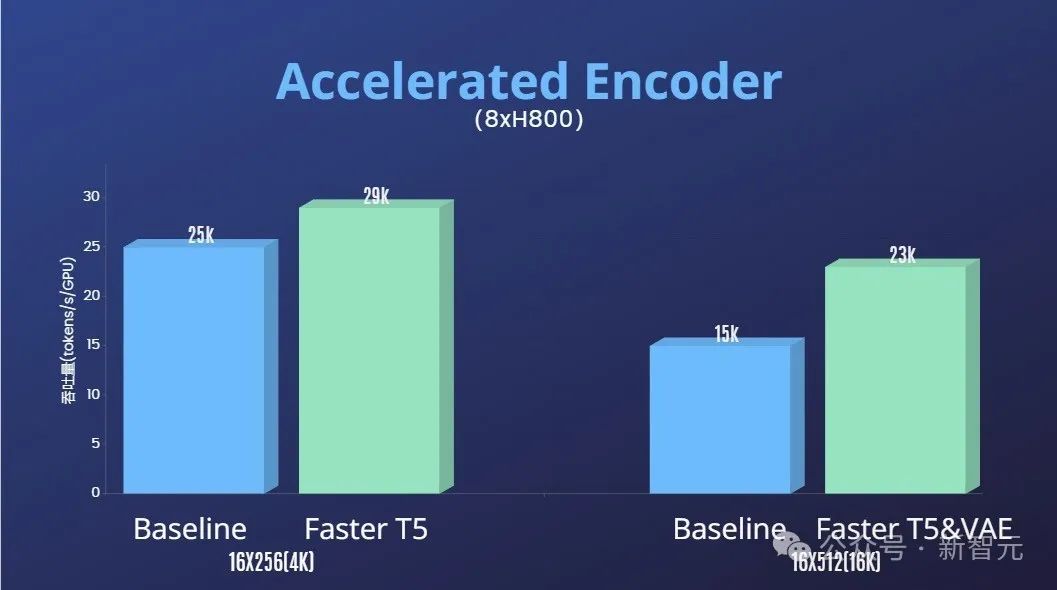

Beyond substantially lowering the technical barrier to reproducing Sora and improving generated videos along multiple dimensions such as duration, resolution, and content, the team also provides the Colossal-AI acceleration system for efficient training of the Sora reproduction.

Through efficient training strategies such as operator optimization and hybrid parallelism, training on 64-frame, 512x512 videos was accelerated by 1.55x.

In addition, thanks to Colossal-AI's heterogeneous memory management system, training on 1-minute 1080p HD videos runs smoothly on a single server (8x H800).

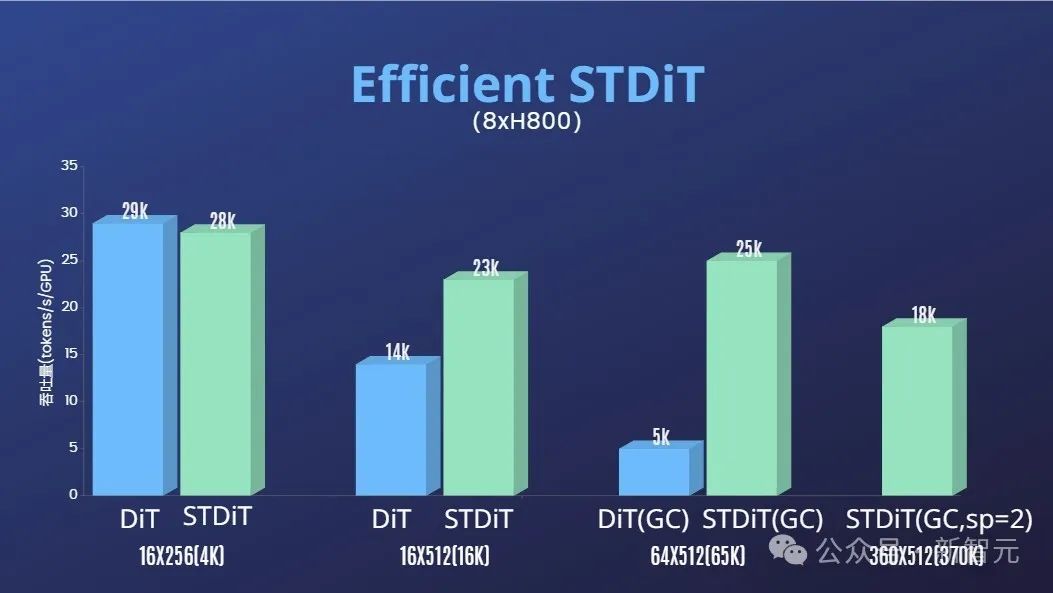

The team's report also shows that the STDiT architecture itself is highly efficient to train.

Compared with a DiT that uses full attention, STDiT's speedup grows with the number of frames, reaching up to 5x, which is especially important for real-world tasks such as processing long video sequences.
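The reason the speedup grows with frame count is visible from the size of the attention score matrices. For T frames of S tokens, full attention scores (T·S)² pairs, while spatial-then-temporal attention scores T·S² + S·T², so the ratio is T·S / (S + T). The sketch below assumes S = 256 (a 256x256 video, 8x VAE downsampling, 2x2 patches). It counts attention scores only; projections, MLPs, and cross-attention scale linearly with tokens, so the measured end-to-end speedup (up to 5x reported) is far smaller than these ratios.

```python
def attention_score_entries(frames, tokens_per_frame):
    """Number of attention scores per layer (single head).

    Full 3D attention: all T*S tokens attend to all T*S tokens.
    Factorized: T frames of S x S spatial scores plus S positions of
    T x T temporal scores.
    """
    n = frames * tokens_per_frame
    full = n * n
    factorized = frames * tokens_per_frame ** 2 + tokens_per_frame * frames ** 2
    return full, factorized

S = 256  # assumed tokens per frame: 256x256 video -> 32x32 latent -> 16x16 patches
for T in (16, 32, 64):
    full, fact = attention_score_entries(T, S)
    print(T, round(full / fact, 1))  # ratio equals T*S / (S + T)
# 16 15.1
# 32 28.4
# 64 51.2
```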

A glance at videos generated by the Open-Sora model

Follow the Open-Sora open-source project for updates: https://github.com/hpcaitech/Open-Sora

The team says it will continue to maintain and optimize Open-Sora, and expects to use more video training data to generate higher-quality, longer videos and to support multiple resolutions, helping bring AI technology into film, games, advertising, and other fields.