Let’s talk about the Kubernetes ingress network system based on Traefik

1. A brief analysis of the Kubernetes ingress network

As we all know, Kubernetes, the leading container orchestration platform, provides powerful capabilities for building and managing distributed applications. However, different business scenarios have different network requirements. To meet these differentiated needs, we need different Kubernetes cluster network modes that provide customized network solutions.

Typically, the cluster network in Kubernetes needs to meet the following core requirements:

- Service security and isolation: Isolate services from one another and prevent malicious access.

- Pod connectivity and IP addressing: Provide network connectivity and IP addresses for Pods, with dynamic allocation and management.

- A unified cluster network across multiple physical nodes: Abstract multiple physical nodes into a single network to simplify application deployment and management.

- Load balancing across multiple instances of a service: Distribute traffic evenly across service instances to improve the capacity and reliability of the system.

- Control over external access to services: Restrict who can reach services, keeping them secure.

- Kubernetes networking in public cloud and non-cloud environments: Support deploying and running Kubernetes clusters in different cloud environments as well as on premises.

2. Access within the Pod network

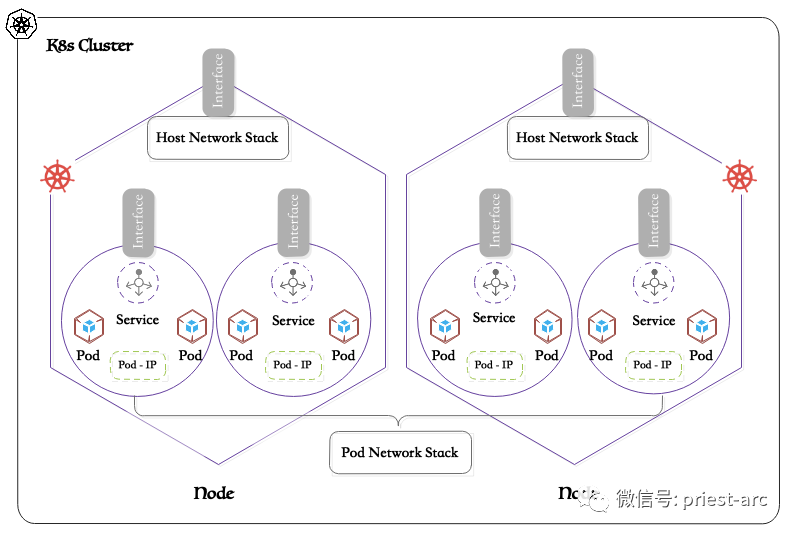

Consider a simple Kubernetes cluster with two nodes. When Kubernetes creates and runs a Pod, it builds an independent network namespace for that Pod, providing isolation at the network level.

Within this isolated environment, each Pod has its own network stack, including network interfaces, an IP address, routing tables, and iptables rules, just as if it were running on a separate server. This isolation keeps the network traffic of different Pods from interfering with each other, which improves the security and reliability of network communication.

In addition to network isolation, Kubernetes assigns a unique IP address to each Pod so that all Pods in the cluster can reach each other directly by IP address, without NAT. These addresses are allocated by the network (CNI) plugin's IP address management, typically from a per-node slice of the cluster's Pod CIDR, although plugins that obtain addresses dynamically (for example, via DHCP) also exist. Whatever the allocation method, the cluster must guarantee that no two Pods share an IP address, so that communication stays smooth and reliable.
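To make the no-conflict guarantee concrete, here is a minimal sketch of per-node range allocation, one common IPAM scheme (kube-controller-manager does something similar when `--allocate-node-cidrs` is enabled). It assumes a cluster Pod CIDR of 10.244.0.0/16 split into one /24 block per node; these values and the helper names are illustrative assumptions, not read from a real cluster. Because each node hands out addresses only from its own block, Pods on different nodes can never collide.

```python
import ipaddress

# Assumed, illustrative values: a /16 cluster Pod CIDR split into /24 per node.
CLUSTER_POD_CIDR = ipaddress.ip_network("10.244.0.0/16")
NODE_PREFIX_LEN = 24


def assign_node_cidrs(node_names):
    """Give each node its own non-overlapping slice of the cluster Pod CIDR."""
    subnets = list(CLUSTER_POD_CIDR.subnets(new_prefix=NODE_PREFIX_LEN))
    if len(node_names) > len(subnets):
        raise ValueError("not enough Pod CIDR space for all nodes")
    return dict(zip(node_names, subnets))


def pod_ips(node_cidr, count):
    """Hand out the first `count` usable host addresses of a node's slice.

    Real IPAM plugins may reserve some addresses (for example, a bridge gateway);
    this sketch ignores that.
    """
    hosts = node_cidr.hosts()
    return [next(hosts) for _ in range(count)]


if __name__ == "__main__":
    for node, cidr in assign_node_cidrs(["node-1", "node-2"]).items():
        print(node, cidr, [str(ip) for ip in pod_ips(cidr, 2)])
```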

See the diagram for details.

Although isolating the Pod network keeps services secure, it also makes accessing them harder. Services run inside Pods on the Pod network, and the IP addresses assigned on that network are generally not reachable from outside the cluster. Pod IP addresses also change whenever Pods are recreated. As a result, external clients cannot reliably communicate with a service by addressing its Pods directly. So how do clients access the service?

3. Cross-node access

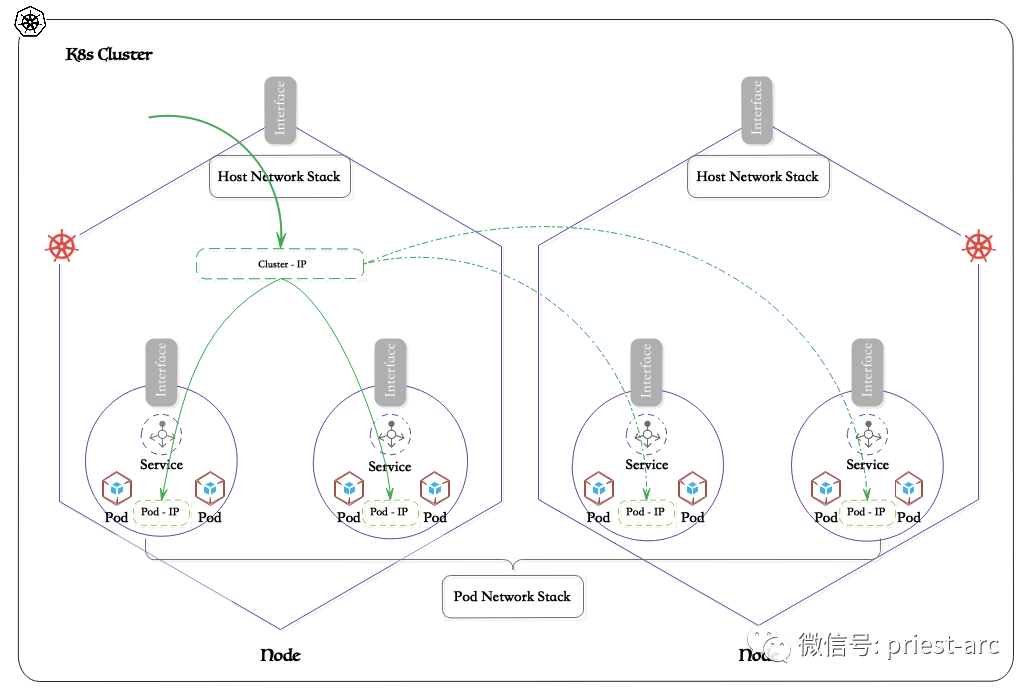

In real business scenarios, a service usually consists of multiple Pod instances, and these Pods may be spread across different nodes in the cluster. For example, a service might consist of two Pods running on two different physical nodes. When a client needs to access the service, how does Kubernetes balance the load between the two Pods so that requests are distributed fairly across the instances?

Kubernetes solves this problem with an abstraction called the ClusterIP. Specifically, Kubernetes assigns each Service a virtual ClusterIP that serves as a stable, unified entry point. A client that wants to reach the service only needs to connect to the ClusterIP and does not need to know which Pod instances sit behind it.

Kubernetes then distributes incoming requests across all Pod instances backing the service. The exact behavior depends on the kube-proxy mode: in iptables mode, kube-proxy picks a backend at random, while in IPVS mode it supports schedulers such as round robin (rr) and least connection (lc), selected with kube-proxy's `--ipvs-scheduler` setting. Whichever strategy is used, request traffic is spread across the Pod instances, providing high availability and load balancing.
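The "random" choice in iptables mode still yields an even split. kube-proxy writes one rule per endpoint using the `statistic` match in random mode, and rule i of n matches with probability 1/(n - i) because it is only evaluated when every earlier rule did not match. The sketch below models only that arithmetic with exact fractions; it does not generate real iptables rules, and the function names are hypothetical.

```python
from fractions import Fraction


def rule_probabilities(n_endpoints):
    """Per-rule match probability for a kube-proxy-style iptables chain.

    Rule i is reached only if rules 0..i-1 did not match, so it must match
    with probability 1/(n - i) for every endpoint to end up with 1/n overall.
    """
    return [Fraction(1, n_endpoints - i) for i in range(n_endpoints)]


def effective_shares(probs):
    """Overall share of traffic each rule receives when evaluated in order."""
    shares, remaining = [], Fraction(1)
    for p in probs:
        shares.append(remaining * p)   # reach this rule, then match it
        remaining *= 1 - p             # fall through to the next rule
    return shares


if __name__ == "__main__":
    probs = rule_probabilities(3)
    print([str(p) for p in probs])                    # ['1/3', '1/2', '1']
    print([str(s) for s in effective_shares(probs)])  # ['1/3', '1/3', '1/3']
```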

It is worth noting that this load balancing process is completely transparent to the client. The client only needs to connect to the cluster IP and does not need to care about the actual distribution of backend Pods. This not only simplifies the client's access logic, but also achieves a high degree of abstraction of the service, decoupling the client from the underlying infrastructure.

ClusterIP is the core mechanism Kubernetes uses for service discovery and load balancing. It relies on the kube-proxy component and a set of underlying network technologies.

Through the close cooperation of kube-proxy, iptables/IPVS, and network plugins, Kubernetes gives users a highly abstract and powerful service networking model. It delivers efficient traffic load balancing and, through network plugins, supports rich network policy control, which helps unlock the potential of cloud-native applications and encourages the adoption of microservice architectures.

4. External access to the cluster

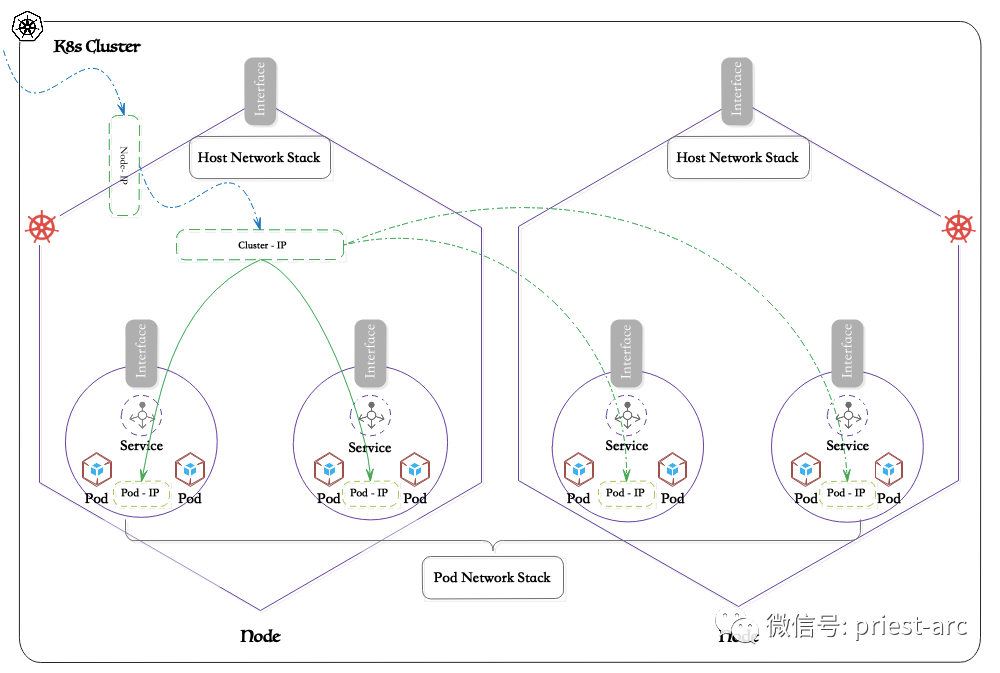

A ClusterIP provides a convenient way to access services running within a Kubernetes cluster, but by default it is reachable only from inside the cluster and is invisible to external traffic. This is a deliberate security choice: services are not directly exposed to the outside, which reduces their exposure to attacks.

In some scenarios, however, we need to expose a service to clients outside the cluster, for example, public-facing web services or microservices that communicate across clusters. To meet this need, Kubernetes provides the NodePort Service type.

A NodePort Service brings traffic from outside the cluster in by opening a designated port on every node and forwarding that traffic to the backend Pod instances through the ClusterIP. Specifically, when a client connects to the NodePort on any node, that node forwards the request to the Service's ClusterIP, which load-balances the traffic across all Pod instances that provide the service.

This is equivalent to setting up an entry gateway at the edge of the cluster that gives external traffic a sanctioned entry point. Because only Services explicitly declared as NodePort become reachable from outside, administrators keep fine-grained control over which services are exposed, and they can choose the port mapping for each one as needed.

Note that a NodePort Service opens the same port on every node, so that port number is reserved cluster-wide and cannot be reused by another Service. Kubernetes automatically allocates an available port from a dedicated high-numbered range (30000-32767 by default, configurable with the API server's `--service-node-port-range` flag), which keeps NodePorts away from well-known ports. Users can also specify a NodePort number explicitly, as long as it falls within that range.
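As an illustration, the sketch below builds a NodePort Service manifest as a plain Python dict and checks the port against the default range. The helper name, labels, and port numbers are hypothetical; the field names (`type`, `selector`, `port`, `targetPort`, `nodePort`) are the standard Service fields. Since kubectl accepts JSON as well as YAML, the output could be piped to `kubectl apply -f -`.

```python
import json

# Default kube-apiserver --service-node-port-range (30000-32767 inclusive).
NODE_PORT_RANGE = range(30000, 32768)


def nodeport_service(name, app_label, port, target_port, node_port=None):
    """Build a NodePort Service manifest as a dict (hypothetical helper).

    If node_port is None the field is omitted and the API server picks a free
    port from the range; otherwise the value must fall inside the range.
    """
    if node_port is not None and node_port not in NODE_PORT_RANGE:
        raise ValueError(
            f"nodePort {node_port} outside {NODE_PORT_RANGE.start}-{NODE_PORT_RANGE.stop - 1}"
        )
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app_label},   # Pods carrying app=<label> back it
            "ports": [port_spec],
        },
    }


if __name__ == "__main__":
    print(json.dumps(nodeport_service("web", "web", 80, 8080, node_port=30080), indent=2))
```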

Beyond NodePort, Kubernetes supports more advanced ways to expose services, such as LoadBalancer Services and Ingress. A LoadBalancer Service obtains an externally accessible IP address through a load balancer provided by the cloud provider, while Ingress lets users define external access rules for services to achieve finer-grained routing and traffic management.

Here, we set the public cloud aside and focus on the ingress network in a private cloud environment.

When running in a private cloud, creating a LoadBalancer Service requires a controller that can provision a load balancer, because Kubernetes itself does not ship a network load balancer implementation for bare-metal clusters; without one, LoadBalancer Services remain in the Pending state indefinitely. One such implementation is MetalLB, a load balancer for bare-metal Kubernetes clusters that uses standard routing protocols to route external traffic into the cluster based on assigned IP addresses. In essence, MetalLB aims to redress this imbalance by providing a network load balancer implementation that integrates with standard network equipment, so that external services on bare-metal clusters work as well as possible.

MetalLB also implements an experimental FRR mode that uses an FRR container as the backend for handling BGP sessions. It provides features not available in the native BGP implementation, such as pairing BGP sessions with BFD sessions and advertising IPv6 addresses.

MetalLB is an external load balancer implementation for Kubernetes that can be used in bare-metal environments. It is a relatively simple open source project built around two core functions: assigning an external IP address to each LoadBalancer Service, and announcing routing information for that external IP to the network outside the cluster:

- Address assignment: When a LoadBalancer Service is created, MetalLB assigns it an IP address taken from a pre-configured address pool. When the Service is deleted, the address is returned to the pool.

- External announcement: Once an address is assigned, the network outside the cluster needs to learn that it exists. MetalLB announces it using standard protocols: ARP, NDP, or BGP.
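The address-assignment step behaves like a small allocator over a pool. The sketch below is a toy model of that lifecycle, assuming a contiguous range of addresses; the `AddressPool` class and service keys are hypothetical, and in recent MetalLB versions real pools are declared with an `IPAddressPool` custom resource rather than in code.

```python
import ipaddress


class AddressPool:
    """Toy model of MetalLB-style address assignment (hypothetical class).

    Gives the lowest free address to a LoadBalancer Service when it is created
    and returns the address to the pool when the Service is deleted.
    """

    def __init__(self, first, last):
        start, end = ipaddress.ip_address(first), ipaddress.ip_address(last)
        self._free = [ipaddress.ip_address(i) for i in range(int(start), int(end) + 1)]
        self._assigned = {}  # service key -> assigned address

    def assign(self, service):
        if service in self._assigned:      # idempotent: keep the existing IP
            return self._assigned[service]
        if not self._free:
            raise RuntimeError("address pool exhausted")
        ip = min(self._free)
        self._free.remove(ip)
        self._assigned[service] = ip
        return ip

    def release(self, service):
        ip = self._assigned.pop(service, None)
        if ip is not None:
            self._free.append(ip)          # address goes back to the pool


if __name__ == "__main__":
    pool = AddressPool("192.168.10.240", "192.168.10.242")
    print(pool.assign("default/web"))  # 192.168.10.240
    print(pool.assign("default/api"))  # 192.168.10.241
    pool.release("default/web")
    print(pool.assign("default/db"))   # 192.168.10.240
```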

There are two announcement modes. The first is Layer 2 mode, which uses ARP (IPv4) or NDP (IPv6); the second is BGP mode.
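In Layer 2 mode, a single node takes ownership of each service IP and answers ARP (or NDP) requests for it, so all traffic for that IP arrives at one node and kube-proxy spreads it from there; if that node fails, another node takes over. In BGP mode, each node peers with the routers and announces the IP, and the routers spread traffic with ECMP. The sketch below models only the Layer 2 ownership decision with a deterministic hash. It is a simplified stand-in rather than MetalLB's actual election logic, and `announcing_node` is a hypothetical name.

```python
import hashlib


def announcing_node(service_ip, healthy_nodes):
    """Pick which node answers ARP/NDP for service_ip (simplified model).

    Every node runs the same computation over the same healthy-node list, so
    they agree on one owner without coordinating. If the owner drops out of
    healthy_nodes, ownership moves to another node.
    """
    if not healthy_nodes:
        return None

    def score(node):
        return hashlib.sha256(f"{node}#{service_ip}".encode()).hexdigest()

    return min(healthy_nodes, key=score)


if __name__ == "__main__":
    nodes = ["node-a", "node-b", "node-c"]
    owner = announcing_node("192.168.10.240", nodes)
    print("owner:", owner)
    print("after failure:", announcing_node("192.168.10.240", [n for n in nodes if n != owner]))
```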

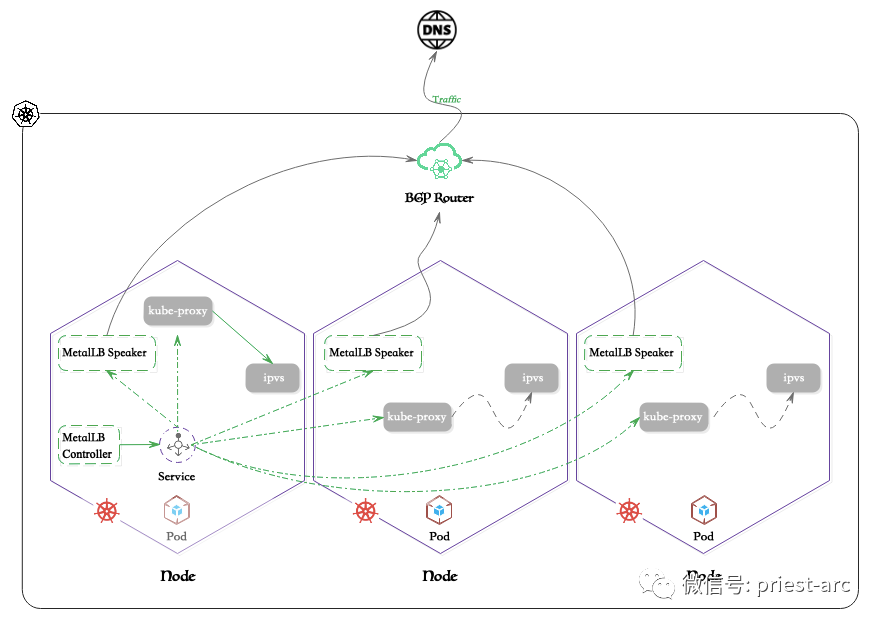

Let’s take a look at the MetalLB network reference diagram:

Based on the reference topology diagram above, we can see that when external traffic arrives, the router and IPVS direct each connection to its destination according to the configured routing information. In that sense, MetalLB is not a load balancer in the traditional sense: it does not proxy traffic itself, but announces addresses so that the network can deliver traffic in load-balancing scenarios.

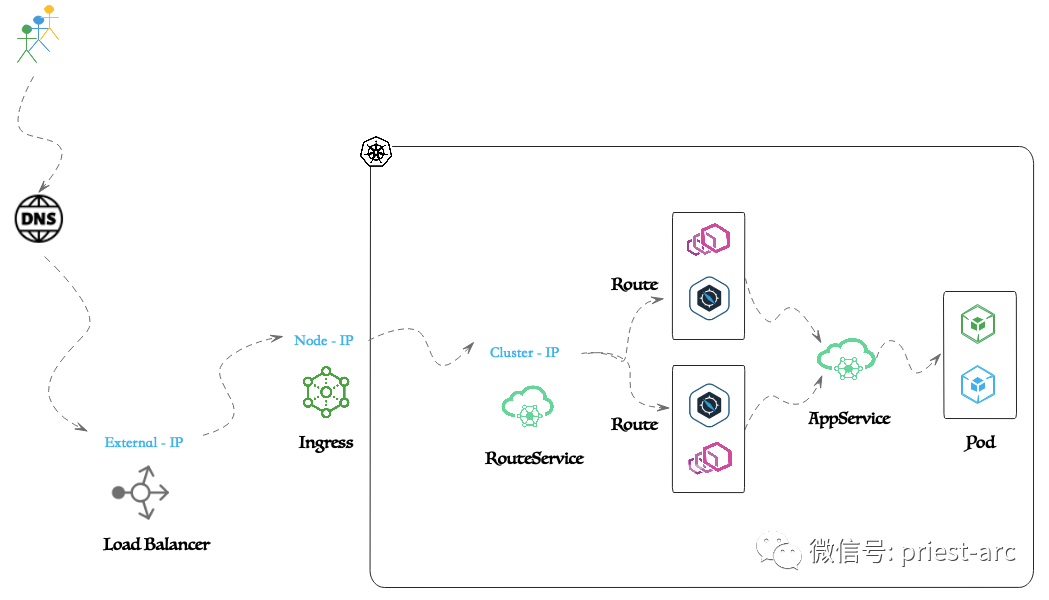

In fact, a proxy such as Traefik can receive all external traffic entering the cluster by running as a service exposed through a LoadBalancer-type Service. Such proxies can be configured with L7 routing and security rules, and the collection of these rules forms the Ingress rules.

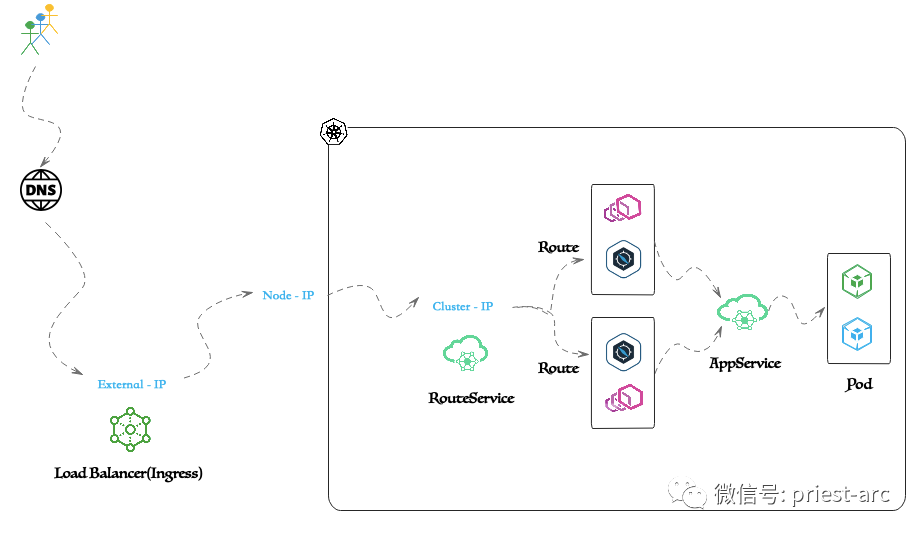

Ingress-based exposure places the service behind a proxy that is reachable externally through a load balancer. An L7 proxy layer in front of the service applies L7 routing and policies. This requires an Ingress controller: a service inside the Kubernetes cluster, exposed as a LoadBalancer-type Service, that receives external traffic.

The Ingress controller routes traffic to services using the defined L7 routing rules and L7 policies. See the diagram for details.
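Kubernetes Ingress rules match on host and path. The Ingress specification defines an `Exact` path type and a `Prefix` path type that compares path elements separated by `/`, so `/api` matches `/api/orders` but not `/apis`. When several rules match, the longest path wins, and on a tie `Exact` beats `Prefix`. The sketch below is a simplified matcher under those rules; the rule tuple shape and function names are hypothetical, host matching is exact only (no wildcards), and `ImplementationSpecific` paths are ignored.

```python
def _elements(path):
    # "/aaa/bbb/" -> ["aaa", "bbb"]; empty segments from extra slashes are ignored
    return [p for p in path.split("/") if p]


def path_matches(rule_path, path_type, request_path):
    if path_type == "Exact":
        return rule_path == request_path
    # Prefix: compare element by element, so /api does not match /apis
    rule, req = _elements(rule_path), _elements(request_path)
    return req[: len(rule)] == rule


def route(rules, host, request_path):
    """Return the backend of the most specific matching rule, or None.

    rules: list of (host, path, path_type, backend) tuples (hypothetical shape).
    Longest matching path wins; on a tie, Exact beats Prefix.
    """
    candidates = [
        (len(path), path_type == "Exact", backend)
        for rule_host, path, path_type, backend in rules
        if rule_host == host and path_matches(path, path_type, request_path)
    ]
    return max(candidates)[2] if candidates else None


if __name__ == "__main__":
    rules = [
        ("shop.example.com", "/", "Prefix", "frontend"),
        ("shop.example.com", "/api", "Prefix", "api"),
        ("shop.example.com", "/api/health", "Exact", "health"),
    ]
    print(route(rules, "shop.example.com", "/api/orders"))  # api
    print(route(rules, "shop.example.com", "/apis"))        # frontend
```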

In essence, running an Ingress controller and enforcing policies through Ingress has several clear advantages:

- Ingress provides a portable mechanism for enforcing policies within a Kubernetes cluster. Policies implemented inside the cluster are easier to port across clouds.

- Multiple proxy instances can be scaled horizontally behind a Kubernetes Service, and the elasticity of this L7 layer makes it easier to operate and scale.

- L7 policies can be hosted alongside services within the cluster, with cluster-native state storage.

- Bringing L7 policies closer to services simplifies policy enforcement and troubleshooting of services and APIs.

Choosing an Ingress controller for a Kubernetes cluster is an important decision, since it directly affects the network performance, security, and scalability of the cluster. As a highly regarded open source Ingress controller, Traefik has become a first choice for many users thanks to its design and powerful features.

So why choose Traefik as your Kubernetes Ingress controller? The reasons are as follows:

1. Easy to use and maintain

Installing and configuring Traefik is simple. It uses declarative configuration files in YAML or TOML format, which are readable and maintainable. Traefik also provides a web-based dashboard where users can view routing rules, middlewares, and other configuration items in real time, which greatly reduces operational complexity.
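On Kubernetes, Traefik's declarative configuration often takes the form of its `IngressRoute` custom resource. The sketch below builds one as a Python dict and prints it as JSON. It assumes Traefik v3, whose CRDs use the `traefik.io/v1alpha1` API group (older releases use `traefik.containo.us/v1alpha1`), and an entry point named `websecure`. The helper name, host, service, and middleware names are all hypothetical.

```python
import json


def ingress_route(name, host, path_prefix, service, port, middlewares=()):
    """Build a Traefik IngressRoute manifest as a dict (hypothetical helper).

    Assumes Traefik v3 (traefik.io/v1alpha1) and an entry point named
    "websecure"; adjust both to match your installation.
    """
    route = {
        # Traefik rule syntax: backquoted arguments joined with &&
        "match": f"Host(`{host}`) && PathPrefix(`{path_prefix}`)",
        "kind": "Rule",
        "services": [{"name": service, "port": port}],
    }
    if middlewares:
        route["middlewares"] = [{"name": m} for m in middlewares]
    return {
        "apiVersion": "traefik.io/v1alpha1",
        "kind": "IngressRoute",
        "metadata": {"name": name},
        "spec": {"entryPoints": ["websecure"], "routes": [route]},
    }


if __name__ == "__main__":
    manifest = ingress_route("shop-api", "shop.example.com", "/api", "api", 80,
                             middlewares=["rate-limit"])
    print(json.dumps(manifest, indent=2))
```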

2. Automated service discovery capabilities

Traefik has powerful automatic service discovery: it watches the Kubernetes cluster for changes to services and dynamically generates the corresponding routing rules without manual intervention. This simplifies configuration and keeps routing rules up to date. Traefik also supports many providers, including Kubernetes Ingress, its own Kubernetes IngressRoute CRD, Docker, and others, so it adapts to a wide range of deployment environments.
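The watch-and-regenerate idea can be sketched as a pure function that folds change events into a routing table. This is a simplified model of the pattern, not Traefik's provider code: the event dict only loosely mirrors Kubernetes watch events (`ADDED`, `MODIFIED`, `DELETED`), and the table shape and `apply_event` name are hypothetical.

```python
def apply_event(routes, event):
    """Apply one watch-style event to an in-memory routing table.

    routes: dict mapping a service name to a sorted list of "ip:port" backends.
    event:  {"type": "ADDED" | "MODIFIED" | "DELETED",
             "service": str, "endpoints": [str, ...]}   (hypothetical shape)
    Returns an updated copy; the input dict is left unchanged.
    """
    updated = dict(routes)
    kind, name = event["type"], event["service"]
    if kind in ("ADDED", "MODIFIED"):
        endpoints = sorted(event.get("endpoints", []))
        if endpoints:
            updated[name] = endpoints
        else:
            # In this sketch, a service with no endpoints simply loses its route.
            updated.pop(name, None)
    elif kind == "DELETED":
        updated.pop(name, None)
    return updated


if __name__ == "__main__":
    events = [
        {"type": "ADDED", "service": "web", "endpoints": ["10.244.1.5:8080"]},
        {"type": "MODIFIED", "service": "web",
         "endpoints": ["10.244.2.7:8080", "10.244.1.5:8080"]},
        {"type": "DELETED", "service": "web"},
    ]
    table = {}
    for ev in events:
        table = apply_event(table, ev)
        print(ev["type"], table)
```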

3. Intelligent dynamic load balancing

Traefik includes a built-in load balancer that distributes requests across backend instances using weighted round robin and, when health checks are configured, removes unhealthy instances from rotation. Traefik also supports advanced features such as session affinity (sticky sessions), circuit breakers, and retries, which improve the availability and reliability of services and give applications a stable, efficient runtime environment.
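To show how weights and health checks interact, here is a sketch of weighted round robin that skips unhealthy backends. It uses the "smooth weighted round robin" algorithm known from Nginx, which interleaves picks instead of sending bursts to the heaviest backend; Traefik's internal implementation may differ, and the class name is hypothetical.

```python
class SmoothWeightedRoundRobin:
    """Smooth weighted round robin (hypothetical helper, Nginx-style algorithm)."""

    def __init__(self, weights):
        self.weights = dict(weights)                       # backend -> static weight
        self.current = {name: 0 for name in self.weights}  # running scores

    def pick(self, healthy=None):
        """Choose the next backend, skipping any not in `healthy` (if given)."""
        names = [n for n in self.weights if healthy is None or n in healthy]
        if not names:
            raise RuntimeError("no healthy backends")
        total = sum(self.weights[n] for n in names)
        # Every candidate gains its weight; the leader is chosen and pays back
        # the total, which spreads picks evenly over time.
        for n in names:
            self.current[n] += self.weights[n]
        best = max(names, key=lambda n: self.current[n])
        self.current[best] -= total
        return best


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
    print([lb.pick() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```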

4. Rich middleware ecosystem

Traefik ships with dozens of built-in middlewares covering authentication, rate limiting, circuit breaking, retries, header manipulation, and more. Users can enable them through simple configuration as needed and quickly build powerful edge services. Beyond the built-in middlewares, Traefik is extensible through plugins, allowing users to develop and integrate custom middleware for complex scenarios.
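Traefik's RateLimit middleware is configured with an `average` rate and a `burst` size. The sketch below is a generic token bucket with the same two knobs to show what they mean; it is not Traefik's code, and the class name is hypothetical. Time is passed in explicitly so the behavior is deterministic; a real implementation would read a monotonic clock such as `time.monotonic()`.

```python
class TokenBucket:
    """Generic token-bucket rate limiter (hypothetical helper)."""

    def __init__(self, average, burst):
        self.rate = float(average)      # tokens refilled per second
        self.capacity = float(burst)    # maximum tokens that can accumulate
        self.tokens = float(burst)      # start full so an initial burst passes
        self.last = 0.0

    def allow(self, now):
        """Return True if a request arriving at time `now` (seconds) may pass."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(average=2, burst=3)
    print([bucket.allow(0.0) for _ in range(4)])  # [True, True, True, False]
    print(bucket.allow(0.5))                      # True (one token refilled)
```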

5. Excellent scalability

As a production-grade Ingress controller, Traefik scales well. It can run as a single instance or as multiple replicas working side by side, which provides high availability and horizontal scaling. Traefik also supports multiple backend protocols, such as HTTP, gRPC, TCP, and UDP, to meet the needs of diverse application scenarios.

6. Comprehensive security

Security is a top priority in Traefik's design, with support for HTTPS/TLS and role-based access control (RBAC). Traefik can automatically obtain and renew TLS certificates from Let's Encrypt and other ACME providers, encrypting traffic between clients and Traefik. It also offers authentication mechanisms such as basic authentication, digest authentication, and forward authentication to an external service (which can, for example, validate JWTs), protecting services from unauthorized access.
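For basic authentication, Traefik's BasicAuth middleware takes users in htpasswd format (`user:hashed-password`), and its documentation lists MD5, SHA1, and BCrypt hashes. The sketch below produces an htpasswd-style SHA-1 entry using only the standard library, for illustration. Unsalted SHA-1 is weak, so prefer bcrypt in practice (for example, `htpasswd -nB user`). The helper name and credentials are hypothetical.

```python
import base64
import hashlib


def htpasswd_sha1(user, password):
    """Return an htpasswd-style "user:{SHA}<base64 SHA-1 digest>" line."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    return f"{user}:{{SHA}}{base64.b64encode(digest).decode('ascii')}"


if __name__ == "__main__":
    # Demo credentials only; never use a password like this in practice.
    print(htpasswd_sha1("admin", "password"))  # admin:{SHA}W6ph5Mm5Pz8GgiULbPgzG37mj9g=
```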