"The Bitter Lesson", a must-read classic for OpenAI engineers, turns out to have had a prototype more than 20 years ago

It has been a week since OpenAI launched its video generation model Sora, and its popularity keeps growing. The Sora team continues to release eye-catching videos. For example, given the prompt "a movie trailer about a group of adventurous puppies exploring ruins in the sky", Sora generated the video in a single pass and handled the editing by itself.

Each vivid, realistic AI video makes people wonder why OpenAI was the first to build Sora, and why it seems able to master every layer of the AGI technology stack. The question has sparked heated discussion on social media.

In one Zhihu post, author @SIY.Z, a computer science PhD from the University of California, Berkeley, analyzed some of the methodology behind OpenAI's success. He argues that OpenAI's methodology is the one that leads to AGI, and that it rests on several important "axioms": The Bitter Lesson, the Scaling Law, and Emergent Properties.

Original post on Zhihu: https://www.zhihu.com/question/644486081/answer/3398751210?utm_psn=1743584603837992961

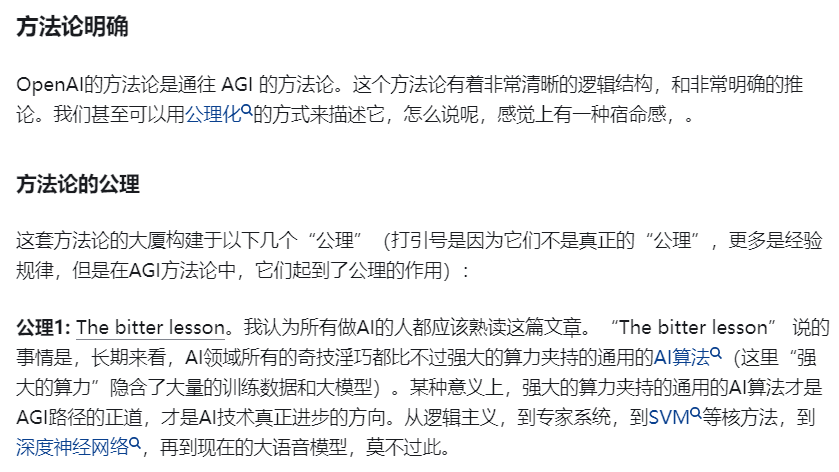

The Bitter Lesson comes from the classic 2019 essay of the same name by machine learning pioneer Rich Sutton. Reviewing the detours artificial intelligence has taken over the past decades, he makes one core point: the best way for AI to achieve long-term improvement is to leverage massive computing power. Here, computing power also implies large amounts of training data and large models.

Original link: http://www.incompleteideas.net/IncIdeas/BitterLesson.html

@SIY.Z therefore believes that, in a sense, general-purpose AI algorithms backed by powerful computing power are the main road to AGI and the direction in which AI technology genuinely progresses. Given large models, large compute, and big data, The Bitter Lesson supplies the necessary condition for AGI. Add the Scaling Law as the sufficient condition, and algorithms can turn large models, large compute, and big data into steadily better results.

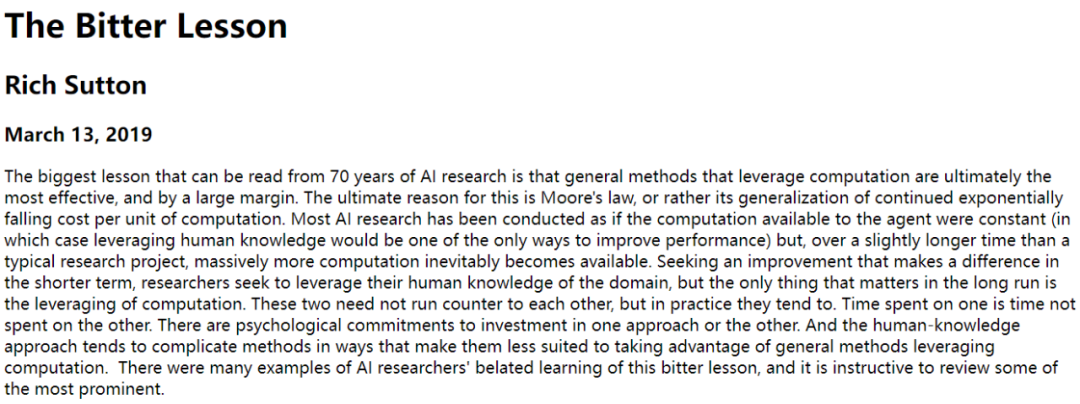

Coincidentally, Rich Sutton's The Bitter Lesson also appeared in the daily work schedule of OpenAI researcher Jason Wei, which went viral this week. Clearly, many people in the industry treat The Bitter Lesson as a guiding principle.

Source: https://twitter.com/_jasonwei/status/1760032264120041684

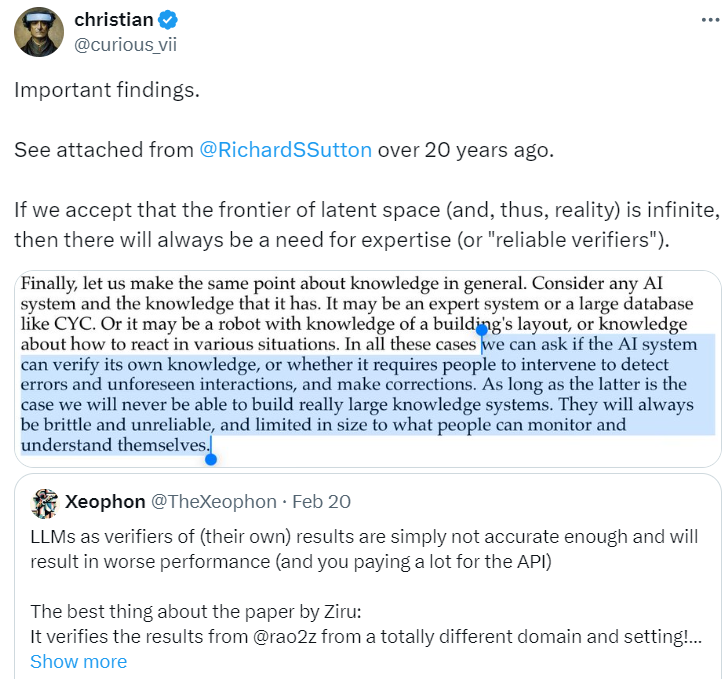

Meanwhile, in a separate discussion about whether a large language model (LLM) can act as the verifier of its own outputs, some argued that LLMs are simply not accurate enough at checking their own results, that relying on them for this degrades performance, and that it also costs a lot of money in API calls.

Source: https://twitter.com/curious_vii/status/1759930194935029767

In response to this view, another Twitter user dug up an important passage from a blog post Rich Sutton wrote more than 20 years ago.

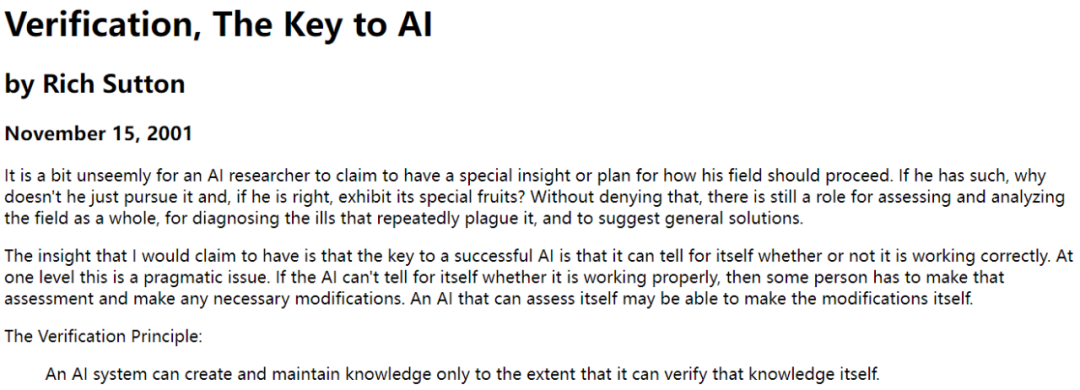

Original link: http://incompleteideas.net/IncIdeas/KeytoAI.html

The post reads:

Consider any AI system and the knowledge it has. It might be an expert system or a large database like CYC. Or it might be a robot that knows the layout of a building, or knows how to react in various situations. In all these cases, we can ask whether the AI system can verify its own knowledge, or whether people must intervene to detect errors and unforeseen interactions and make corrections. As long as the latter is the case, we will never be able to build truly large knowledge systems. They will always be brittle and unreliable, and limited in size to what people can monitor and understand.

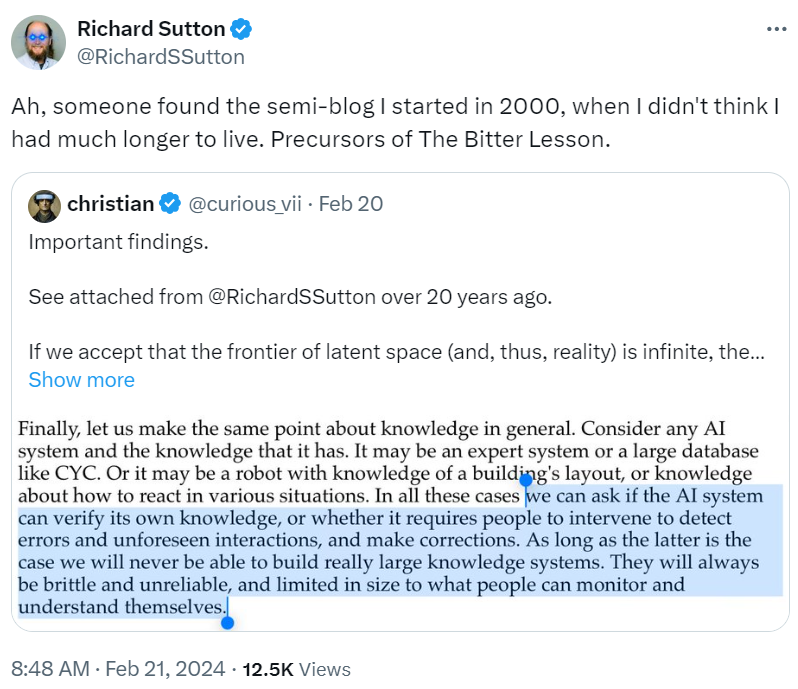

Unexpectedly, Rich Sutton himself replied, saying that this half-finished post was the prototype of The Bitter Lesson.

Source: https://twitter.com/RichardSSutton/status/1760104125625459171

In fact, soon after OpenAI released Sora, many people pointed out the important role The Bitter Lesson played in it.

Others ranked The Bitter Lesson alongside the Transformer paper "Attention Is All You Need".

Source: https://twitter.com/karanganesan/status/1759782109399662777

To close, we revisit the full text of Rich Sutton's "The Bitter Lesson".

The biggest lesson from seventy years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin. The ultimate reason is Moore's Law, or rather its generalization: the continued exponential decline in the cost per unit of computation. Most AI research has been conducted as if the computation available to the agent were constant (in which case leveraging human knowledge would be one of the only ways to improve performance). But over a period only slightly longer than a typical research project, massively more computation inevitably becomes available.

To make a difference in the short term, researchers seek to leverage their human knowledge of the domain, but in the long run the only thing that matters is leveraging computation. The two need not be at odds, but in practice they tend to be. Time spent on one is time not spent on the other. And methods built on human knowledge tend to become complicated in ways that make them less suited to general methods that leverage computation. There are many examples of AI researchers learning this bitter lesson too late, and it is instructive to review some of the most prominent.

In computer chess, the methods that defeated world champion Garry Kasparov in 1997 were based on massive, deep search. At the time, this was met with dismay by most computer-chess researchers, who had pursued methods that exploited human understanding of the special structure of chess. When a simpler, search-based approach with special hardware and software proved vastly more effective, these human-knowledge-based chess researchers were not good losers. They said that "brute force" search may have won this time, but it was not a general strategy, and in any case it was not how people played chess. These researchers wanted methods based on human input to win, and they were disappointed when those methods did not.

A similar pattern of research progress appeared in computer Go, only about 20 years later. Enormous initial effort went into avoiding search by exploiting human knowledge or the special features of the game, but all of it proved irrelevant, or worse, once search was applied effectively at scale. Also important was learning a value function through self-play (as in many other games and even in chess, although learning played little role in the 1997 program that first beat a world champion). Learning by self-play, and learning in general, is like search in that it lets massive amounts of computation be brought to bear. Search and learning are the two most important classes of techniques for using massive computation in AI research. In computer Go, as in computer chess, researchers first directed their efforts toward human understanding (so that less search would be needed), and only much later achieved far greater success by embracing search and learning.

In speech recognition, there was an early DARPA-sponsored competition in the 1970s. Entrants used a host of special methods that exploited human knowledge: knowledge of words, of phonemes, of the human vocal tract, and so on. On the other side were newer methods based on hidden Markov models (HMMs), which were more statistical in nature and did much more computation. Once again, the statistical methods won out over the human-knowledge-based ones. This gradually transformed all of natural language processing over the following decades, as statistics and computation came to dominate the field. The recent rise of deep learning in speech recognition is just the latest step in this consistent direction.

Deep learning methods rely even less on human knowledge and use even more computation, together with learning on huge training sets, to produce dramatically better speech recognition systems. As in games, researchers kept trying to build systems that worked the way they thought their own minds worked, putting that knowledge into their systems. This ultimately proved counterproductive and a colossal waste of researchers' time once Moore's Law made massive computation available and a way was found to put it to good use.

Computer vision has followed a similar pattern. Early methods conceived of vision as searching for edges or generalized cylinders, or in terms of SIFT features. Today, all of these have been abandoned. Modern deep-learning neural networks use only the notions of convolution and certain kinds of invariance, and they perform much better.

This is a big lesson. As a field, we still have not fully learned it, because we keep making the same kinds of mistakes. To see this and resist it effectively, we have to understand why these mistakes are so appealing. We have to learn the bitter lesson that building in how we think we think does not work in the long run. The bitter lesson rests on the following historical observations:

- AI researchers have often tried to build knowledge into their agents.

- In the short term, this always helps and is personally satisfying to the researcher.

- In the long run, however, it plateaus and even inhibits further progress.

- Breakthrough progress eventually arrives through the opposite approach: scaling computation through search and learning. This eventual success is tinged with bitterness and often only partly digested, because it is a victory over a favored, human-centric approach.

One thing the bitter lesson should teach us is the great power of general-purpose methods: methods that keep scaling with increased computation even when the available computation becomes very large. Search and learning appear to be the two methods that scale arbitrarily in this way.

Richard S. Sutton, the godfather of reinforcement learning, is currently a professor at the University of Alberta in Canada.

The second general point to learn from the bitter lesson is that the actual contents of minds are tremendously, irredeemably complex. We should stop trying to find simple ways to think about the contents of minds, such as simple ways to think about space, objects, multiple agents, or symmetries. All of these are part of the arbitrary, intrinsically complex outside world.

They are not what should be built in, because their complexity is endless. Instead, we should build in only the meta-methods that can find and capture this arbitrary complexity. What matters about these methods is that they can find good approximations, but the search for those approximations should be done by our methods, not by us.

We want AI agents that can discover the way we do, not agents that merely contain what we have already discovered. Building in our discoveries only makes it harder to see how the process of discovery itself can be achieved.