Stable Diffusion 3 released by surprise! Same architecture as Sora, and everything looks more realistic

More than a year in the making, it brings three major improvements over the previous generation.

Let's go straight to the results!

First, the text rendering is almost unfairly good.

Look at the chalk writing on the blackboard:

"Go Big or Go Home": this one has quite a fierce air about it~

Neon effects on street signs and bus lights:

There is also an embroidered "Good Night", rendered so finely that you can almost see the stitches:

As soon as the samples were shown, netizens exclaimed: it's too accurate.

Some even said: hurry up and add Chinese support.

Second, the multi-subject prompting ability is maxed out.

What does that mean? You can pack any number of "elements" into a single prompt, and Stable Diffusion 3's attitude is: if I miss one, I lose.

Now look carefully at the picture below. There is an "Astronaut", a "Piggy in Tutu", a "Pink Umbrella", a "Robin in Top Hat", and the words "Stable Diffusion" in large letters in the corner (not a watermark).

With this ability, a work can be as rich as you want.

Finally, the image quality has evolved again.

Aren't these pictures stunning at first glance?!

And all kinds of ultra-high-definition close-ups are handled with ease.

Excited? The official waitlist is now open, and anyone can apply on the official website.

Ahem, I have to say that the AI circle has been quite lively recently.

Some netizens said: my computer can't keep up anymore...

Stable Diffusion 3 is here!

The new Stable Diffusion's results are so good that here are a few more samples.

Of course, all of the images come from official sources, such as Stability AI's head of media:

The text rendering really is the most eye-catching part: text in all kinds of forms comes out clear and fitting for its context.

The image above brings to mind the earlier story "Midjourney embarrasses itself in academia: randomly illustrating biology papers". With SD3, could we produce truly professional academic illustrations?

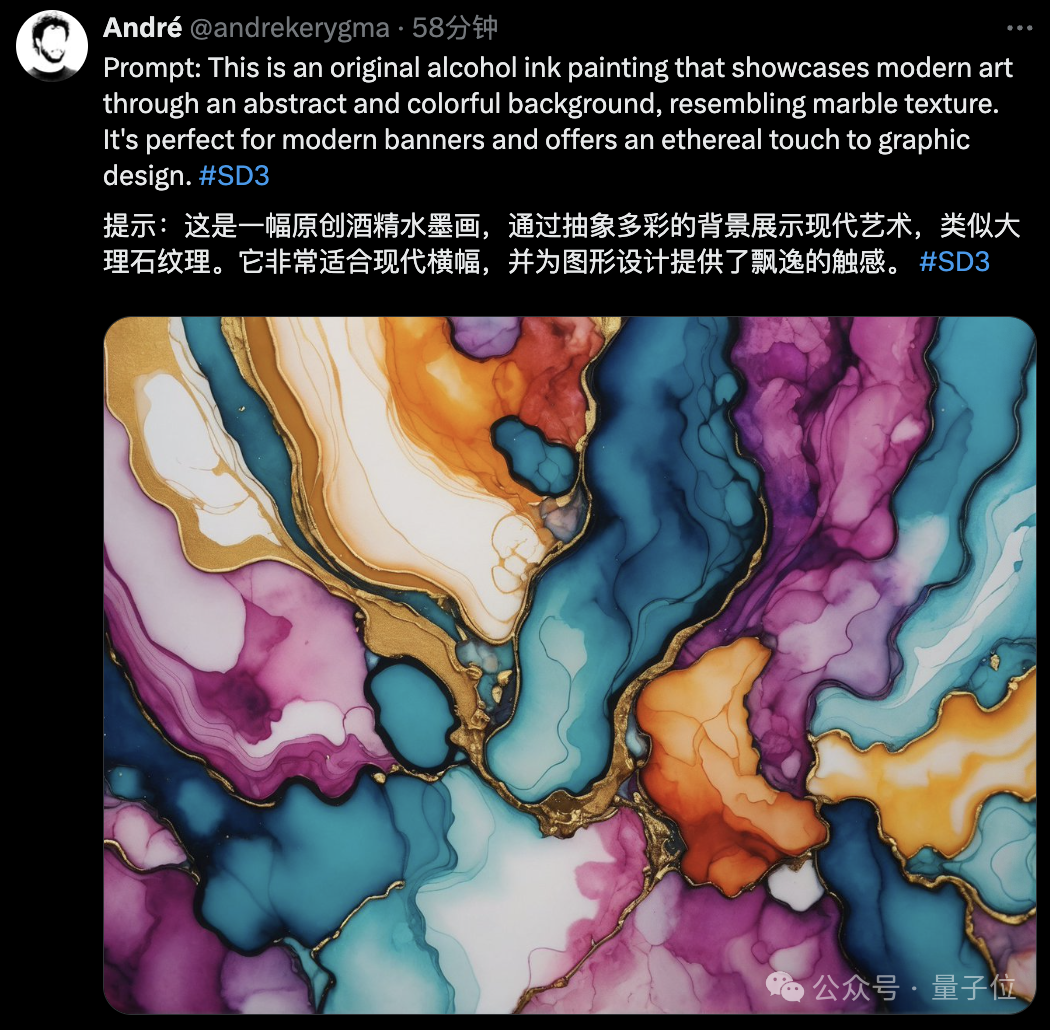

In addition to these, SD3’s “alcohol ink painting” is also quite unique:

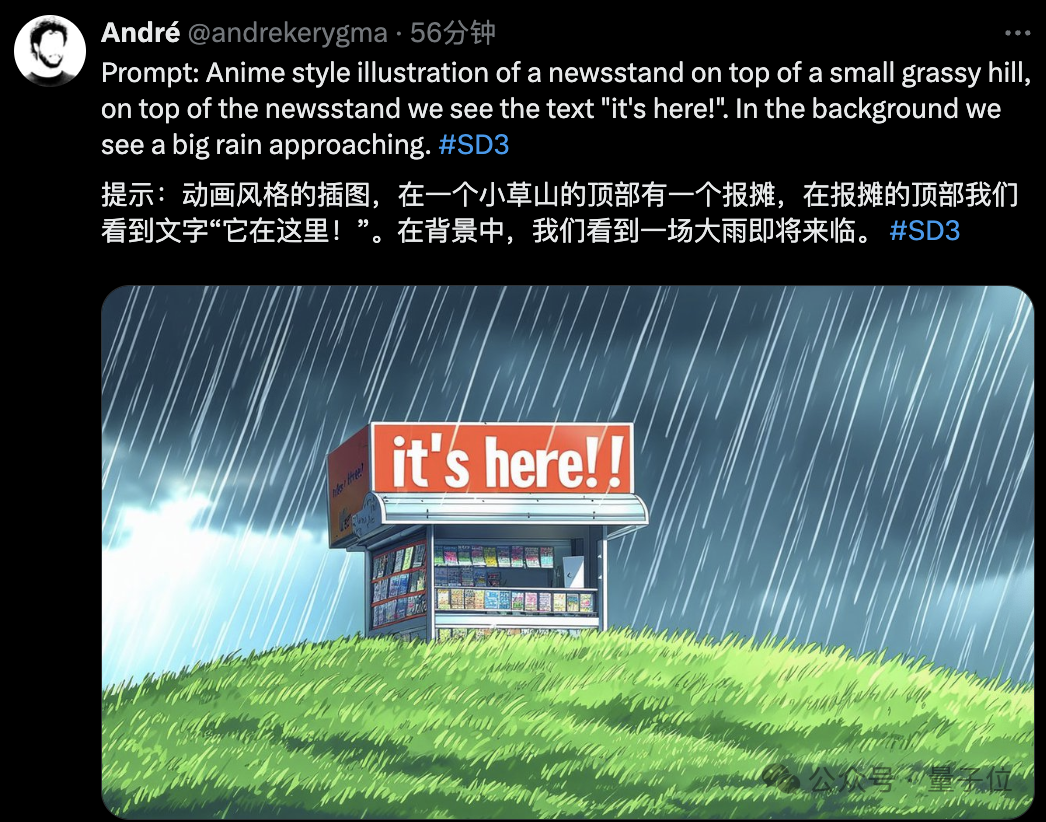

Anime style:

Here too, clear text can be added to the image.

Since you currently have to join the waitlist, it is not yet easy for everyone to test it hands-on.

However, resourceful netizens have already fed the same prompts to Midjourney (v6.0).

For example, the red apple and blackboard image from the beginning (prompt: cinematic photo of a red apple on a table in a classroom, on the blackboard are the words "go big or go home" written in chalk)

The final results given by Midjourney are as follows:

Judging from this comparison, the verdict is clear: SD3 is superior in text spelling, image quality, color coordination, and more.

On the technical side, the model currently comes in a range of sizes, from 800M to 8B parameters.

The detailed technical report has not yet been released. Stability AI has only revealed that the model mainly combines a diffusion transformer architecture with flow matching.

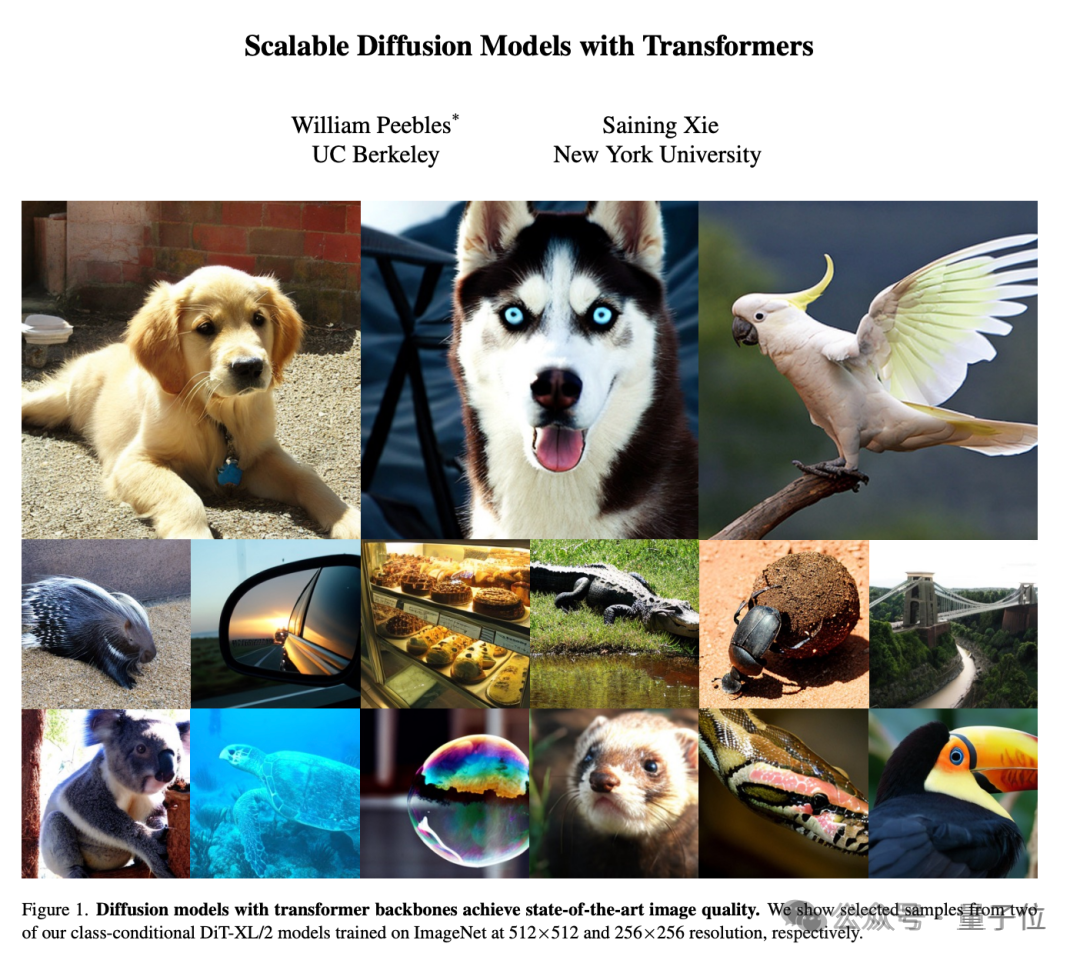

The former is the same architecture that Sora uses, and the paper linked for it is DiT, co-authored by William Peebles and Saining Xie in 2022.

DiT combined the Transformer with diffusion models for the first time, and the paper was accepted as an Oral at ICCV 2023.

In this study, the researchers trained a latent diffusion model, replacing the commonly used U-Net backbone network with a Transformer that operates on latent patches. They analyze the scalability of the Diffusion Transformer (DiT) through the forward pass complexity measured in Gflops.
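To make "a Transformer that operates on latent patches" more concrete, here is a minimal pure-Python sketch of the patchify step alone. The function name `patchify`, the toy latent values, and the nested-list representation are illustrative assumptions; the 4-channel 32x32 latent is the shape the DiT paper describes for 256x256 images. A real model would follow this step with a learned linear embedding and Transformer blocks, which are omitted here.

```python
def patchify(latent, patch_size):
    """Split a latent stored as [C][H][W] nested lists into flattened patches.

    Returns a list of tokens; each token holds patch_size * patch_size * C values.
    """
    channels = len(latent)
    height = len(latent[0])
    width = len(latent[0][0])
    if height % patch_size or width % patch_size:
        raise ValueError("height and width must be divisible by patch_size")

    tokens = []
    # Walk the spatial grid patch by patch, left to right, top to bottom.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            token = [
                latent[c][top + dy][left + dx]
                for dy in range(patch_size)
                for dx in range(patch_size)
                for c in range(channels)
            ]
            tokens.append(token)
    return tokens


# Toy latent (assumed values): 4 channels on a 32x32 grid.
latent = [
    [[float(c * 10000 + y * 100 + x) for x in range(32)] for y in range(32)]
    for c in range(4)
]

for p in (8, 4, 2):
    tokens = patchify(latent, p)
    print(f"patch size {p}: {len(tokens)} tokens of length {len(tokens[0])}")
```

Halving the patch size quadruples the number of tokens (16, 64, and 256 tokens for patch sizes 8, 4, and 2 here), which is one reason the forward-pass cost in Gflops rises as patches get smaller.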

The latter, flow matching, also dates from 2022 and comes from researchers at Meta AI and the Weizmann Institute of Science.

They proposed a new paradigm for generative modeling built on continuous normalizing flows (CNFs), along with the concept of flow matching: a simulation-free approach to training CNFs by regressing the vector fields of fixed conditional probability paths. They found that using flow matching with diffusion paths makes training more robust and stable.
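The idea of regressing a vector field along a fixed conditional path can be sketched in a few lines of pure Python. This is only an illustration, not SD3's training code, whose details have not been published. It uses the straight-line (optimal transport) conditional path from the flow matching paper rather than a diffusion path, because its regression target has a simple closed form. `SIGMA_MIN`, `sample_training_pair`, `flow_matching_loss`, and `zero_model` are hypothetical names chosen for this example.

```python
import random

SIGMA_MIN = 1e-4  # assumed small terminal noise level, chosen for illustration


def sample_training_pair(x1, rng):
    """Draw (t, x_t, target) for one data point x1 on the straight-line path.

    x_t = (1 - (1 - SIGMA_MIN) * t) * x0 + t * x1, with x0 ~ N(0, I).
    The regression target is the velocity of that path: x1 - (1 - SIGMA_MIN) * x0.
    """
    t = rng.random()
    x0 = [rng.gauss(0.0, 1.0) for _ in x1]
    scale = 1.0 - (1.0 - SIGMA_MIN) * t
    x_t = [scale * n + t * d for n, d in zip(x0, x1)]
    target = [d - (1.0 - SIGMA_MIN) * n for n, d in zip(x0, x1)]
    return t, x_t, target


def flow_matching_loss(model, batch, rng):
    """Mean squared error between the model's vector field and the target."""
    total = 0.0
    for x1 in batch:
        t, x_t, target = sample_training_pair(x1, rng)
        pred = model(x_t, t)
        total += sum((p - u) ** 2 for p, u in zip(pred, target)) / len(x1)
    return total / len(batch)


def zero_model(x_t, t):
    """Hypothetical stand-in for a neural network v_theta(x_t, t)."""
    return [0.0 for _ in x_t]


rng = random.Random(0)
batch = [[1.0, -2.0], [0.5, 0.25]]
print(flow_matching_loss(zero_model, batch, rng))
```

No ODE is simulated during training: each step only samples a time, a noise vector, and a data point, then does a plain regression. That is the sense in which the method is simulation-free.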

However, after seeing so much progress in video generation recently, some netizens said:

What do you think?

One More Thing

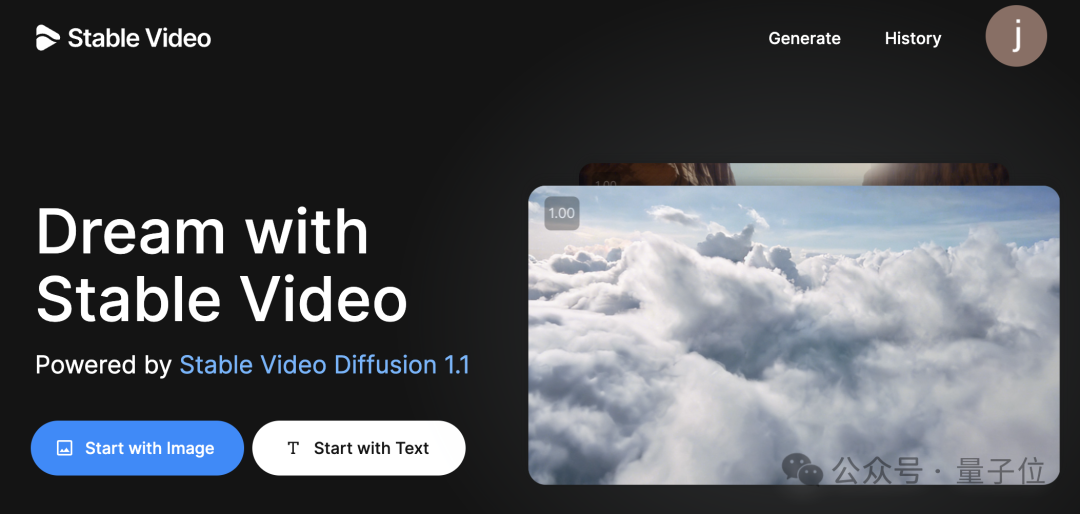

In addition, just the day before, Stability AI's video product Stable Video officially opened for public beta.

It is based on SVD 1.1 (Stable Video Diffusion 1.1) and is available to everyone.

It mainly supports two functions: text-to-video and image-to-video.

Reference links:

[1] https://stability.ai/news/stable-diffusion-3

[2] https://arxiv.org/abs/2212.09748

[3] https://arxiv.org/abs/2210.02747

[4] https://twitter.com/pabloaumente/status/1760678508173660543