OpenAI's technological evolution: from RAG to multi-modal search

Summary

This article surveys recent progress in information retrieval and text generation, focusing on retrieval-augmented generation (RAG) with OpenAI models and its use in text search. It then introduces the gpt-4-vision-preview model in detail, which marks a shift from structured search to unstructured search and can process and interpret multi-modal information such as pictures, tables, and text. Through a practical case, the article shows how these technologies can be applied to enterprise document management, academic research, and media content analysis, and how readers can use them to process multi-modal data.

Introduction

In artificial intelligence, information retrieval and text generation have long been two important research directions. RAG (Retrieval-Augmented Generation), which is widely used with OpenAI's models, is a breakthrough in this area: it combines the text generation capabilities of neural networks with retrieval over large-scale data sets. This improves the accuracy and information richness of generated text and addresses the limitations of traditional models on complex queries.

The mainstream application of RAG is text search, for example retrieval over an enterprise knowledge base. The pipeline loads the text, splits it into chunks, embeds the chunks, and indexes them so that an input query can be matched against the target content; the search results are then presented to the user. However, as information types diversify, traditional text search can no longer meet every need.
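The load, split, embed, index, and search steps can be illustrated with a toy sketch that uses only the standard library. Everything in it is an assumption made for illustration: the bag-of-words `embed` function stands in for a real embedding model, and the function names and the sample policy text are hypothetical.

```python
import math
import re
from collections import Counter


def split_into_chunks(text, max_words=12):
    """Cut a document into fixed-size word windows (the splitting step)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: a bag-of-words count vector, not a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(chunks):
    """Index each chunk together with its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def search(index, query, k=1):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


document = (
    "Employees may carry over five vacation days into the next year. "
    "Expense reports must be submitted within thirty days of travel."
)
index = build_index(split_into_chunks(document, max_words=11))
top_hit = search(index, "How many vacation days can I carry over?")[0]
print(top_hit)
```

A production pipeline would replace `embed` with a learned embedding model and the list-based index with a vector store, but the flow of data stays the same.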

At this point OpenAI launched the gpt-4-vision-preview model, which is not only a major technical leap but also marks an important shift from structured search to unstructured search. The model can process and interpret multi-modal information, whether pictures, tables, or text, and can summarize and search it effectively. This greatly extends what RAG can do and opens a new path for multi-modal data processing.

For example, in terms of enterprise document management, gpt-4-vision-preview can analyze PDF documents containing charts and text, such as contracts and reports, to provide accurate summaries and key information extraction. In the field of academic research, this technology can automatically organize and analyze data and images in academic papers, greatly improving research efficiency. In terms of media content analysis, the pictures and text content in news reports can be integrated and analyzed to provide media practitioners with deeper insights.

Today I will use this article to show you how to analyze and search a multi-modal PDF.

Scenario analysis

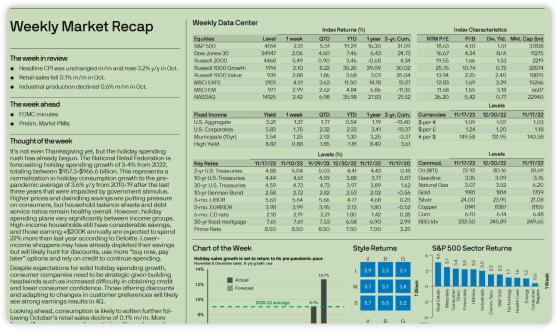

Now for today's main subject. As shown in the picture below, this PDF file is a typical financial market analysis report containing rich text, charts, and data.

Financial market analysis report

The report not only contains detailed text descriptions, such as analysis and forecasts of market trends, but also includes a large number of charts and data, such as stock market indexes, fixed income product yields and key interest rates, etc. These charts and data play a vital role in understanding the overall market situation.

However, manual processing of such multi-modal PDF files is often time-consuming and labor-intensive. Analysts need to carefully read text, interpret charts and data, and synthesize this information to form a complete view of the market. This process is not only time-consuming, but also error-prone, especially when dealing with large amounts of complex data.

The traditional RAG model is inadequate when processing such multi-modal PDF files. Although RAG is excellent at processing and generating text-based information, it mainly searches and generates text content and has limited processing capabilities for non-text elements such as charts and data. This means that when using the RAG model for information retrieval, for complex PDF files containing non-text elements, it may not be able to fully understand and utilize all the information in the file.

Therefore, this business scenario calls for the multi-modal approach introduced by OpenAI: using the gpt-4-vision-preview model to process text, charts, and data together and provide a more comprehensive and accurate analysis. This improves analysis efficiency and reduces the risk of manual processing errors.

Technical analysis

In multimodal PDF processing, the primary challenge is to identify the unstructured information in the document, such as pictures, tables, and text. For this we use the unstructured library, which provides open-source components for ingesting and preprocessing images and text documents such as PDF, HTML, and Word files. Its main purpose is to simplify and optimize data processing workflows for large language models (LLMs). The library's modular functions and connectors form a consistent system that simplifies data ingestion and preprocessing, adapts to different platforms, and efficiently transforms unstructured data into structured output.

In addition, in order to better process PDF documents, we also introduced the poppler-utils tool, which is used to extract images and text. In particular, the pdfimages and pdftotext tools are used to extract embedded images and full text from PDFs respectively, which is crucial for the analysis of multi-modal PDFs.
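As a rough illustration of the two Poppler tools, the sketch below builds the command lines for pdftotext and pdfimages and only runs them when the tool and the input file are present. The helper names and the output paths are hypothetical; `-layout` and `-png` are standard options of the respective tools.

```python
import shutil
import subprocess
from pathlib import Path


def pdftotext_command(pdf_path, txt_path):
    # -layout asks pdftotext to preserve the physical layout of the page
    return ["pdftotext", "-layout", str(pdf_path), str(txt_path)]


def pdfimages_command(pdf_path, output_prefix):
    # -png writes each embedded image as a PNG file named after the prefix
    return ["pdfimages", "-png", str(pdf_path), str(output_prefix)]


def run_if_available(command):
    """Run the command only when the tool is installed and the input PDF exists."""
    tool, pdf = command[0], Path(command[-2])
    if shutil.which(tool) is None or not pdf.exists():
        return False
    subprocess.run(command, check=True)
    return True


text_cmd = pdftotext_command("weekly-market-recap.pdf", "recap.txt")
image_cmd = pdfimages_command("weekly-market-recap.pdf", "images/figure")
print(" ".join(text_cmd))
print(" ".join(image_cmd))
```

In the article's workflow these tools are invoked indirectly by the unstructured library, so calling them by hand is mainly useful for debugging an extraction.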

Note: Poppler is a PDF rendering library that can draw through two backends, Cairo and Splash. The two backends differ in features, so Poppler's functionality can depend on which one is used. There is also a backend based on "Arthur", the Qt4 painting framework, but it is incomplete and no longer developed. Poppler provides bindings for GLib and Qt5 that expose the backends, although the Qt5 bindings only support the Splash and Arthur backends. The Cairo backend supports anti-aliasing and transparency for vector graphics but does not smooth bitmap images such as scanned documents; it does not depend on the X Window System, so Poppler can run on platforms such as Wayland, Windows, or macOS. The Splash backend supports minification filtering of bitmaps. Poppler also ships a text-rendering backend, available through the command-line tool pdftotext, which makes it possible to search for strings in a PDF from the command line, for example with grep.

Once images, tables, and text can be extracted, the Tesseract-OCR tool plays a key role in converting image and table content into analyzable text. Tesseract cannot read PDF files directly, but once the images in a PDF have been converted to an image format such as .tiff, it can convert them to text. This step is a key link in multi-modal PDF processing: information that originally existed only as images or tables becomes text that can be analyzed further.
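The PDF-to-TIFF-to-text route described above might look like the following when driven by hand. This is a sketch under assumptions: the helper names and file paths are hypothetical, pdftoppm (another poppler-utils tool) is used here for the TIFF conversion, and the commands are only constructed, not executed.

```python
from pathlib import Path


def pdftoppm_tiff_command(pdf_path, output_prefix, dpi=300):
    # Render every page of the PDF to a TIFF image at the given resolution
    return ["pdftoppm", "-tiff", "-r", str(dpi), str(pdf_path), str(output_prefix)]


def tesseract_command(image_path, language="eng"):
    # Tesseract takes an output base name and appends ".txt" itself
    output_base = str(Path(image_path).with_suffix(""))
    return ["tesseract", str(image_path), output_base, "-l", language]


render_cmd = pdftoppm_tiff_command("weekly-market-recap.pdf", "pages/page")
ocr_cmd = tesseract_command("pages/page-1.tif")
print(" ".join(render_cmd))
print(" ".join(ocr_cmd))
```

Each command list can be passed to `subprocess.run(..., check=True)` once the tools are installed and the input files exist.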

Of course, in addition to the above-mentioned technologies for identifying and separating PDF elements, technologies such as vector storage and vector indexers will also be used. Since they are often used in traditional RAG, they will not be described here.

Code

From the scenario and technical analysis we know that multi-modal analysis and querying of a PDF starts with identifying the pictures, tables, and text in it. For that we bring in the unstructured library, the Poppler library, and the Tesseract-OCR tool. With these tools in place, the coding becomes much easier.

Install libraries and tools

    !pip install langchain unstructured[all-docs] pydantic lxml openai chromadb tiktoken -q -U
    !apt-get install poppler-utils tesseract-ocr

There are several libraries above that have not been mentioned before. Here are some explanations.

- pydantic: for data validation and settings management.

- lxml: XML and HTML processing library.

- openai: Interact with OpenAI's API.

- chromadb: a vector database used to store and query embeddings.

Extract PDF information

Now that the tools are ready, the next step is to process the PDF content. The code is as follows:

    from typing import Any

    from pydantic import BaseModel
    from unstructured.partition.pdf import partition_pdf

    # Directory where extracted images are stored
    images_path = "./images"

    # Partition the PDF and extract its elements
    raw_pdf_elements = partition_pdf(
        filename="weekly-market-recap.pdf",  # PDF file to process
        extract_images_in_pdf=True,          # extract embedded images
        infer_table_structure=True,          # infer the structure of tables
        chunking_strategy="by_title",        # chunk the document by title
        max_characters=4000,                 # at most 4000 characters per chunk
        new_after_n_chars=3800,              # start a new chunk after 3800 characters
        combine_text_under_n_chars=2000,     # combine text pieces shorter than 2000 characters
        image_output_dir_path=images_path,   # output directory for extracted images
    )

This Python code uses the pydantic and unstructured libraries to process PDF files. Here's a line-by-line explanation of the code:

images_path = "./images": Set a variable images_path to store images extracted from PDF.

Next, the partition_pdf function is called, which is used to process the PDF file and extract its elements:

- filename="weekly-market-recap.pdf": Specify the name of the PDF file to be processed.

- extract_images_in_pdf=True: Set to True to extract images from PDF.

- infer_table_structure=True: Set to True to infer table structure in PDF.

- chunking_strategy="by_title": Set the document chunking strategy to chunking by title.

- max_characters=4000: Set the maximum number of characters per block to 4000.

- new_after_n_chars=3800: Start a new block after reaching 3800 characters.

- combine_text_under_n_chars=2000: Combine text with less than 2000 characters.

- image_output_dir_path=images_path: Specifies the directory path of the output images.
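To make the interaction of the three size parameters more concrete, here is a simplified sketch of a title-based chunker. It is not the unstructured implementation: the function `chunk_sections` and its merging rules are assumptions that only mimic the intent of a hard cap (max_characters), a soft cap (new_after_n_chars), and a merge threshold (combine_text_under_n_chars).

```python
def chunk_sections(sections, max_characters=4000, new_after_n_chars=3800,
                   combine_text_under_n_chars=2000):
    """Group titled sections (plain strings) into chunks under three size rules."""
    chunks = []
    current = ""
    for section in sections:
        # Hard cap: a section longer than max_characters is cut into pieces
        pieces = [section[i:i + max_characters]
                  for i in range(0, len(section), max_characters)]
        for piece in pieces:
            joined = f"{current}\n\n{piece}" if current else piece
            # Merge threshold: only a short running chunk absorbs the next piece
            can_merge = (
                bool(current)
                and len(current) < combine_text_under_n_chars
                and len(joined) <= max_characters
            )
            if can_merge:
                current = joined
            else:
                if current:
                    chunks.append(current)
                current = piece
            # Soft cap: close the chunk once it is long enough
            if len(current) >= new_after_n_chars:
                chunks.append(current)
                current = ""
    if current:
        chunks.append(current)
    return chunks


demo_chunks = chunk_sections(
    ["a" * 30, "b" * 30, "c" * 120, "d" * 250],
    max_characters=100, new_after_n_chars=90, combine_text_under_n_chars=50,
)
print([len(chunk) for chunk in demo_chunks])
```

The small thresholds in the demo make the behavior easy to follow: the two short sections are merged, while the long ones are split so that no chunk exceeds the hard cap.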

The images extracted from the PDF can now be inspected. The code above saved them to the images directory, so the following code displays one of them.

    from IPython.display import Image

    Image("images/figure-1-1.jpg")

Image information extracted from PDF

Generate summaries for image information

The following code defines a class called ImageSummarizer, which generates a summary of an image's content.

    import base64
    import os

    # ChatOpenAI and HumanMessage from the LangChain package
    from langchain.chat_models import ChatOpenAI
    from langchain.schema.messages import HumanMessage


    class ImageSummarizer:
        """Generates a retrieval-oriented summary of a single image."""

        def __init__(self, image_path) -> None:
            self.image_path = image_path
            self.prompt = """Your task is to summarize the content of the image for retrieval.
    These summaries will be embedded and used to retrieve the original image. Give a concise image summary that is well optimized for retrieval.
    """

        def base64_encode_image(self):
            """Read the image file and return its content as a base64 string."""
            with open(self.image_path, "rb") as image_file:
                return base64.b64encode(image_file.read()).decode("utf-8")

        def summarize(self, prompt=None):
            # Base64-encoded image data
            base64_image_data = self.base64_encode_image()

            # gpt-4-vision-preview chat model, limited to 1000 output tokens
            chat = ChatOpenAI(model="gpt-4-vision-preview", max_tokens=1000)

            # Send one human message that contains both the text prompt and the image
            response = chat.invoke(
                [
                    HumanMessage(
                        content=[
                            {"type": "text", "text": prompt if prompt else self.prompt},
                            {
                                "type": "image_url",
                                "image_url": {"url": f"data:image/jpeg;base64,{base64_image_data}"},
                            },
                        ]
                    )
                ]
            )

            # Return the base64-encoded image data and the model's summary
            return base64_image_data, response.content

Here is a detailed explanation of the code:

1. Import libraries

Import the base64 and os libraries for image encoding and operating-system functions.

Import the ChatOpenAI class and the HumanMessage class from the LangChain package; they are used to interact with OpenAI's chat model.

2. Define the image summary class ImageSummarizer

The __init__ method initializes the image path and the prompt for summary generation.

The base64_encode_image method reads and converts image files into base64 encoding. This encoding format is suitable for transmitting images on the network and facilitates the interaction of image information with the GPT model.

3. Define the summary function summarize

Use the base64_encode_image method to get the base64 encoded data of the image.

Create a ChatOpenAI object, specify the gpt-4-vision-preview model, and set the maximum number of tokens to 1000 for processing image summary tasks.

Use the chat.invoke method to send a message containing the text prompt and the image data to the model. The text prompt guides the model in generating the image summary.
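The base64 step and the two-part message described above can be tried without any API call. The sketch below is a minimal illustration; the helper names `to_data_url` and `build_vision_content` are hypothetical, and the bytes are a placeholder rather than a real image.

```python
import base64


def to_data_url(image_bytes, mime_type="image/jpeg"):
    """Encode raw image bytes as a data URL that can be embedded in a message."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_content(prompt, image_bytes):
    """Return the content list: one text part followed by one image part."""
    return [
        {"type": "text", "text": prompt},
        {"type": "image_url", "image_url": {"url": to_data_url(image_bytes)}},
    ]


# Placeholder bytes that only start like a JPEG file; not a real image
fake_jpeg = b"\xff\xd8\xff\xe0" + b"\x00" * 8
content = build_vision_content("Summarize this chart.", fake_jpeg)
url = content[1]["image_url"]["url"]
print(url[:40])
```

This content list has the same shape as the one passed to HumanMessage in the ImageSummarizer class.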

The purpose of creating the summarize function is to generate a summary for the image, and the next step is to call the function. The following code creates a list of image data and image summaries and iterates through all files under the specified path to generate this information.

    # Lists for the base64 image data and the image summaries
    image_data_list = []
    image_summary_list = []

    # Iterate over every file in the image directory
    for img_file in sorted(os.listdir(images_path)):
        # Only process .jpg files
        if img_file.endswith(".jpg"):
            # Create an ImageSummarizer for the file and generate its summary
            summarizer = ImageSummarizer(os.path.join(images_path, img_file))
            data, summary = summarizer.summarize()
            image_data_list.append(data)        # base64-encoded image data
            image_summary_list.append(summary)  # image summary

Code explanation:

1. Initialization list

Two lists, image_data_list and image_summary_list, are created to store the base64 encoded data of the image and the corresponding image summary.

2. Traverse all files under the specified path

Use os.listdir(images_path) to list all files under the specified path (images_path), and use the sorted function to sort the file names.

3. Check and process each file

Check if the file extension is .jpg via if img_file.endswith(".jpg") to ensure only image files are processed.

For each .jpg file, create an ImageSummarizer object, passing in the full path to the image.

Call the summarize method to generate a summary of the image.

Add the returned base64 encoded image data to the image_data_list list.

Add the returned image summary to the image_summary_list list.

Two lists are ultimately generated: one containing the base64-encoded data of the image file, and the other containing the corresponding image summary. This information can be used for image retrieval or other processing.
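Before spending tokens on real images, the file-selection part of this loop can be dry-run with a stand-in summarizer. The sketch below is illustrative only: `list_jpegs` and `fake_summarize` are hypothetical helpers, and matching the extension case-insensitively is a small variation on the article's `.jpg` check rather than its exact behavior.

```python
import os
import tempfile


def list_jpegs(directory):
    """Return sorted .jpg/.jpeg paths, matching the extension case-insensitively."""
    return [
        os.path.join(directory, name)
        for name in sorted(os.listdir(directory))
        if name.lower().endswith((".jpg", ".jpeg"))
    ]


def fake_summarize(path):
    # Hypothetical stand-in for ImageSummarizer(path).summarize(); makes no API call
    return f"summary of {os.path.basename(path)}"


with tempfile.TemporaryDirectory() as tmp:
    # Create empty placeholder files, including one non-image file
    for name in ["figure-1-2.jpg", "figure-1-1.JPG", "notes.txt"]:
        open(os.path.join(tmp, name), "wb").close()
    found = [os.path.basename(p) for p in list_jpegs(tmp)]
    summaries = [fake_summarize(p) for p in list_jpegs(tmp)]

print(found)
print(summaries)
```

Swapping `fake_summarize` for the real summarizer then only changes the cost of the loop, not its structure.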

We use the following code to view the image summaries:

    image_summary_list

The output is as follows:

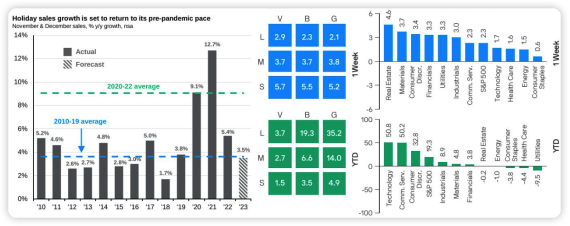

['This image contains three different charts that provide data on holiday sales growth and on week-to-week and year-to-date (YTD) performance comparisons across industries. \n\nThe first chart is a bar chart titled "Holiday sales growth is set to return to its pre-pandemic pace", showing actual and forecast year-over-year sales growth for November and December between 2010 and 2023. The blue columns represent actual data, the striped columns represent forecast data, the blue dotted line represents the average growth rate from 2010 to 2019, and the green dotted line represents the average growth rate from 2020 to 2022. \n\nThe second chart is a color block chart, divided into two parts, showing the 1-week and year-to-date (YTD) performance of "V", "B", and "G" at different scales (large, medium, small). Values are displayed in white text within the color blocks. \n\nThe third chart is two side-by-side bar charts showing the 1-week and year-to-date (YTD) performance of different industries. The blue bar on the left shows the change over the week, and the green bar on the right shows the year-to-date change. The Y-axis of the chart represents the percentage change, and the X-axis lists the different industries. \n\nSummary: Three charts showing holiday sales growth expected to return to pre-pandemic levels, 1-week and year-to-date performance by market size (large, medium, small), and 1-week and year-to-date performance data by industry.']

The results show that the gpt-4-vision-preview model can recognize the images and also captures a good deal of their detail.

Recognize tabular and textual information

Pictures, tables, and text together make up the complete content of the PDF. With the pictures handled, the next step is the tables and text.

The following code classifies the elements extracted from the PDF document.

    # Basic model that stores a document element's type and text content
    class Element(BaseModel):
        type: str
        text: Any


    table_elements = []  # table information
    text_elements = []   # text information

    # Iterate over every element partitioned from the PDF
    for element in raw_pdf_elements:
        # Table element (unstructured.documents.elements.Table)
        if "unstructured.documents.elements.Table" in str(type(element)):
            table_elements.append(Element(type="table", text=str(element)))
        # Composite element (unstructured.documents.elements.CompositeElement)
        elif "unstructured.documents.elements.CompositeElement" in str(type(element)):
            text_elements.append(Element(type="text", text=str(element)))

The code is explained below:

1. Define the Element basic model

pydantic's BaseModel is used to define a class called Element that stores the type of a document element (such as table or text) and its text content. The class uses the type annotations str and Any to define two attributes: type (the element type) and text (the element content).

2. Create empty lists to store table and text elements

table_elements and text_elements are used to store table information and text information respectively.

3. Traverse elements in PDF

For each element split from the PDF file (in raw_pdf_elements), the code classifies and processes it by determining the type of element:

If the element is a table (unstructured.documents.elements.Table), create an Element object, set its type to "table", set the text content to the string representation of the element, and then add this object to the table_elements list.

If the element is a composite element (unstructured.documents.elements.CompositeElement), it is handled in a similar manner, setting its type to "text" and adding it to the text_elements list.
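The same classification can also be written with isinstance instead of matching on the type's string representation. The sketch below uses stand-in classes so that it runs without the unstructured library installed; with the real library, Table and CompositeElement would be imported from unstructured.documents.elements, the module path that the string check refers to. The `classify` helper and the dataclass version of Element are assumptions made for illustration.

```python
from dataclasses import dataclass


class _FakeElement:
    """Hypothetical stand-in for an unstructured element; str() yields its text."""

    def __init__(self, text):
        self.text = text

    def __str__(self):
        return self.text


class Table(_FakeElement):
    pass


class CompositeElement(_FakeElement):
    pass


class Image(_FakeElement):
    pass


@dataclass
class Element:
    type: str
    text: str


def classify(raw_elements):
    """Split raw elements into table and text lists; other types are ignored."""
    tables, texts = [], []
    for element in raw_elements:
        if isinstance(element, Table):
            tables.append(Element(type="table", text=str(element)))
        elif isinstance(element, CompositeElement):
            texts.append(Element(type="text", text=str(element)))
    return tables, texts


raw = [CompositeElement("Market overview"), Table("Index | Return"), Image("figure")]
demo_tables, demo_texts = classify(raw)
print(len(demo_tables), len(demo_texts))
```

Checking with isinstance avoids accidental matches on similarly named classes, which is a design preference rather than a requirement of the article's approach.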

After classifying and storing the different elements of the PDF document, the next step is to summarize the tables and text. The code is as follows:

    from langchain.chat_models import ChatOpenAI
    from langchain.prompts import ChatPromptTemplate
    from langchain.schema.output_parser import StrOutputParser

    # Prompt text used to generate the summaries
    prompt_text = """
    You are responsible for concisely summarizing a table or text chunk. Respond in Chinese:
    {element}
    """

    # Create the prompt from the template
    prompt = ChatPromptTemplate.from_template(prompt_text)

    # Summary chain: prompt, then the GPT-3.5 model, then a string output parser
    summarize_chain = (
        {"element": lambda x: x}
        | prompt
        | ChatOpenAI(temperature=0, model="gpt-3.5-turbo")
        | StrOutputParser()
    )

    # Summarize the table texts in batch, with a maximum concurrency of 5
    tables = [i.text for i in table_elements]
    table_summaries = summarize_chain.batch(tables, {"max_concurrency": 5})

    # Summarize the text chunks in batch, with a maximum concurrency of 5
    texts = [i.text for i in text_elements]
    text_summaries = summarize_chain.batch(texts, {"max_concurrency": 5})

This code is used to generate summaries of tables and text blocks, leveraging LangChain and GPT-3.5 models:

1. Import module

Import LangChain's ChatOpenAI, ChatPromptTemplate and StrOutputParser modules.

2. Define prompt text for generating summary

Define a prompt template that instructs the model to summarize a table or text block.

3. Create prompts and summary chains

Use ChatPromptTemplate.from_template to create a prompt from the prompt text defined above.

Create a summary chain named summarize_chain, which combines the prompt, the GPT-3.5 model (summarization does not require GPT-4, so GPT-3.5 is used to save tokens), and a string output parser (StrOutputParser).

4. Work with tables and text elements

Extract table text from table_elements, use the summarize_chain.batch method to batch process these texts, and set the maximum number of concurrencies to 5.

Extract text block text from text_elements, also use summarize_chain.batch for batch processing, and the maximum number of concurrencies is set to 5.
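The effect of a concurrency cap can be pictured with a thread pool. The sketch below is only an analogy built on the standard library, not LangChain's implementation of batch; the `batch` helper and `fake_summarize` are hypothetical, and the short sleep stands in for a model call.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

stats = {"active": 0, "peak": 0}
lock = threading.Lock()


def fake_summarize(text):
    """Stand-in for a model call; records how many calls run at the same time."""
    with lock:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
    time.sleep(0.01)  # simulate network latency
    with lock:
        stats["active"] -= 1
    return text.upper()


def batch(func, inputs, max_concurrency=5):
    """Apply func to every input with at most max_concurrency calls in flight."""
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        # pool.map returns results in input order, regardless of completion order
        return list(pool.map(func, inputs))


results = batch(fake_summarize, [f"table {i}" for i in range(12)], max_concurrency=5)
print(results[:3], "peak concurrency:", stats["peak"])
```

Capping concurrency this way keeps a large batch from hitting API rate limits while still finishing faster than a purely sequential loop.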

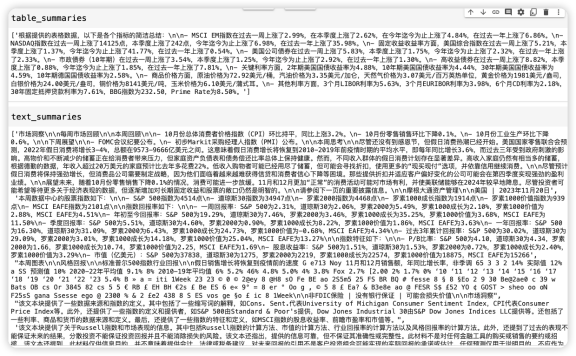

We print the summary as follows:

Table and text summary information

Create indexer

So far we have extracted the pictures, tables, and text from the PDF one by one and generated a summary for each, all to make later searching easier. With these preparations done, we need to set up the indexer. As the name suggests, the indexer adds an index over the information to be searched so that it can be looked up efficiently.

The following code creates a multi-vector retriever for storing and retrieving text, tabular, and image data:

    import uuid  # generates unique identifiers

    from langchain.embeddings import OpenAIEmbeddings
    from langchain.retrievers.multi_vector import MultiVectorRetriever
    from langchain.schema.document import Document
    from langchain.storage import InMemoryStore
    from langchain.vectorstores import Chroma

    id_key = "doc_id"  # metadata key that links a summary to its original content

    # Multi-vector retriever, initially empty; it will hold text, tables, and images
    retriever = MultiVectorRetriever(
        vectorstore=Chroma(collection_name="summaries", embedding_function=OpenAIEmbeddings()),  # OpenAI embeddings
        docstore=InMemoryStore(),  # keep the original documents in memory
        id_key=id_key,
    )

    # Texts: one UUID per text; summaries go to the vector store, originals to the docstore
    doc_ids = [str(uuid.uuid4()) for _ in texts]
    summary_texts = [
        Document(page_content=s, metadata={id_key: doc_ids[i]})
        for i, s in enumerate(text_summaries)
    ]
    retriever.vectorstore.add_documents(summary_texts)
    retriever.docstore.mset(list(zip(doc_ids, texts)))

    # Tables: one UUID per table
    table_ids = [str(uuid.uuid4()) for _ in tables]
    summary_tables = [
        Document(page_content=s, metadata={id_key: table_ids[i]})
        for i, s in enumerate(table_summaries)
    ]
    retriever.vectorstore.add_documents(summary_tables)
    retriever.docstore.mset(list(zip(table_ids, tables)))

    # Images: one UUID per image; the docstore keeps the base64 image data
    img_ids = [str(uuid.uuid4()) for _ in image_data_list]
    summary_images = [
        Document(page_content=s, metadata={id_key: img_ids[i]})
        for i, s in enumerate(image_summary_list)
    ]
    retriever.vectorstore.add_documents(summary_images)
    retriever.docstore.mset(list(zip(img_ids, image_data_list)))

Code explanation:

1. Initialize the multi-vector retriever

Using Chroma as the vector store, it leverages OpenAI embedding methods to convert documents into vector form.

Use InMemoryStore as the document store, which is a method of storing documents in memory.

Set id_key to the unique identification key of the document.

2. Process text data

Create a unique identifier (UUID) for each text.

Encapsulate the text summary into a Document object and add it to vector storage and document storage.

3. Process table data

Similarly, create UUID for each table.

Encapsulate the table summary into a Document object and add it to vector storage and document storage.

4. Process image data

Create UUID for each image.

Encapsulate the image summary into a Document object and add it to vector storage and document storage.

This code sets up a system that can store and retrieve multiple types of document data, including text, tables, and images, utilizing unique identifiers to manage each document. This is useful for processing and organizing large amounts of complex data.
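The core idea, searching over summaries but returning the original content linked by a shared ID, fits in a few lines of plain Python. The class below is a toy stand-in written for illustration, not LangChain's MultiVectorRetriever: word overlap replaces vector similarity, and the stored originals are placeholder strings.

```python
import re
import uuid


def tokens(text):
    """Lowercased word set used as a crude similarity signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToyMultiVectorRetriever:
    def __init__(self):
        self.summary_index = []  # (summary tokens, doc_id): what gets searched
        self.docstore = {}       # doc_id -> original content: what gets returned

    def add(self, summaries, originals):
        for summary, original in zip(summaries, originals):
            doc_id = str(uuid.uuid4())
            self.summary_index.append((tokens(summary), doc_id))
            self.docstore[doc_id] = original

    def retrieve(self, query, k=1):
        q = tokens(query)
        ranked = sorted(self.summary_index, key=lambda e: len(q & e[0]), reverse=True)
        return [self.docstore[doc_id] for _, doc_id in ranked[:k]]


toy = ToyMultiVectorRetriever()
toy.add(["Bar chart of holiday sales growth by year"], ["<base64 image placeholder>"])
toy.add(["Table of weekly equity index returns"], ["<raw table text placeholder>"])
hits = toy.retrieve("holiday sales growth")
print(hits)
```

Because the query is matched against a short text summary, an image can be found by a text question even though the stored original is base64 data.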

Generate multimodal query prompts

The code below contains multiple functions for processing and displaying image and text data.

    import base64

    from IPython.display import HTML, display


    def plt_img_base64(img_base64):
        """Display a base64-encoded image."""
        display(HTML(f'<img src="data:image/jpeg;base64,{img_base64}" />'))


    def is_image_data(b64data):
        """Check whether base64 data is an image by looking at how the data begins."""
        image_signatures = {
            b"\xFF\xD8\xFF": "jpg",
            b"\x89\x50\x4E\x47\x0D\x0A\x1A\x0A": "png",
            b"\x47\x49\x46\x38": "gif",
            b"\x52\x49\x46\x46": "webp",
        }
        try:
            header = base64.b64decode(b64data)[:8]  # decode and keep the first 8 bytes
            return any(header.startswith(sig) for sig in image_signatures)
        except Exception:
            return False


    def split_image_text_types(docs):
        """Separate base64-encoded images from text."""
        b64_images = []
        texts = []
        for doc in docs:
            # Unwrap Document objects to their page_content
            if isinstance(doc, Document):
                doc = doc.page_content
            if is_image_data(doc):
                b64_images.append(doc)
            else:
                texts.append(doc)
        return {"images": b64_images, "texts": texts}


    def img_prompt_func(data_dict):
        """Build the prompt messages from the retrieved data."""
        messages = []

        # Images are passed to the model as separate parts, together with the prompt text
        for image in data_dict["context"]["images"]:
            image_message = {
                "type": "image_url",
                "image_url": {"url": f"data:image/jpeg;base64,{image}"},
            }
            messages.append(image_message)

        # Add the text part of the message
        formatted_texts = "\n".join(data_dict["context"]["texts"])
        text_message = {
            "type": "text",
            "text": (
                "You are financial analyst.\n"
                "You will be given a mixed of text, tables, and image(s) usually of charts or graphs.\n"
                "Use this information to answer the user question in the finance. \n"
                f"Question: {data_dict['question']}\n\n"
                "Text and / or tables:\n"
                f"{formatted_texts}"
            ),
        }
        messages.append(text_message)
        print(messages)
        return [HumanMessage(content=messages)]

Code explanation:

1. Display images encoded based on base64 (plt_img_base64)

The purpose of this function is to display base64 encoded images.

2. Check whether the base64 data is an image (is_image_data)

Determine whether the data is a picture by checking whether the beginning of the base64-encoded data matches the signature of common image formats (such as JPEG, PNG, GIF, WEBP).

3. Separate base64-encoded images and text (split_image_text_types)

This function iterates through the document collection, uses the is_image_data function to distinguish which is image data and which is text, and then stores them in two lists respectively.

4. Generate prompt information (img_prompt_func) based on data

This function generates messages for delivery to LangChain's large model. For each image, create a message containing the image URL; for text data, create a message containing the prompt text and question.

The "text" field, which defines the text content of the message, needs some extra explanation.

First, the model is given its role: "You are financial analyst."

Then the task and data format are described: "You will be given a mixed of text, tables, and image(s) usually of charts or graphs."

Then the instruction: "Use this information to answer the user question in the finance."

{data_dict['question']}: The part within {} here is a placeholder for Python string formatting, used to insert the value corresponding to the 'question' key in the variable data_dict, that is, the question raised by the user.

"Text and / or tables:\n": Prompt text indicating that the next content is text and/or tables.

f"{formatted_texts}": Use string formatting again to insert the variable formatted_texts (formatted text data) into the message.
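The signature check in is_image_data can be exercised on its own. The sketch below is a small variation written for illustration: the function name `sniff_image_format` is hypothetical, it returns the detected format instead of a boolean, and it decodes only the first 16 base64 characters, which is enough to cover the longest signature.

```python
import base64
import binascii

IMAGE_SIGNATURES = {
    b"\xff\xd8\xff": "jpg",
    b"\x89PNG\r\n\x1a\n": "png",
    b"GIF8": "gif",
    b"RIFF": "webp",  # RIFF is a container also used by WAV and AVI files
}


def sniff_image_format(b64data):
    """Return the image format suggested by the leading bytes, or None."""
    try:
        # 16 base64 characters decode to 12 bytes, more than the longest signature
        header = base64.b64decode(b64data[:16])
    except (binascii.Error, ValueError):
        return None
    for signature, name in IMAGE_SIGNATURES.items():
        if header.startswith(signature):
            return name
    return None


jpeg_b64 = base64.b64encode(b"\xff\xd8\xff\xe0" + b"\x00" * 20).decode("utf-8")
png_b64 = base64.b64encode(b"\x89PNG\r\n\x1a\n" + b"\x00" * 20).decode("utf-8")
print(sniff_image_format(jpeg_b64), sniff_image_format(png_b64))
print(sniff_image_format("Holiday sales grew in 2021."))
```

Ordinary prose usually fails base64 decoding or decodes to bytes that match no signature, which is why the retriever can mix text and image data in one docstore.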

Implement the financial analysis query

Everything is now in place. Let's use the gpt-4-vision-preview model for inference: we put a question to the retriever and see what comes back.

The following code implements a query processing pipeline based on RAG (Retrieval-Augmented Generation).

from langchain.schema.runnable import RunnableLambda, RunnablePassthrough

# 创建ChatOpenAI模型实例

model = ChatOpenAI(temperature=0, model="gpt-4-vision-preview", max_tokens=1024)

# 构建RAG(Retrieval Augmented Generation)管道

chain = (

{

"context": retriever | RunnableLambda(split_image_text_types), # 使用检索器获取内容并分离图片和文本

"question": RunnablePassthrough(), # 传递问题

}

| RunnableLambda(img_prompt_func) # 根据图片和文本生成提示信息

| model # 使用ChatOpenAI模型生成回答

| StrOutputParser() # 解析模型的字符串输出

)

# 定义查询问题

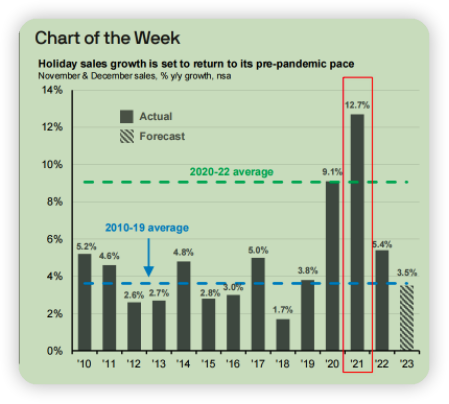

query = "Which year had the highest holiday sales growth?"

# 调用chain的invoke方法执行查询

chain.invoke(query)- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

Code explanation:

1. Create a ChatOpenAI model instance

Use the ChatOpenAI class to create a model instance, configure the temperature parameter to 0, use the model gpt-4-vision-preview, and set the maximum number of tokens to 1024.

2. Build RAG pipeline (chain)

retriever | RunnableLambda(split_image_text_types): First use retriever to retrieve the content, and then call the split_image_text_types function through RunnableLambda to separate the image and text.

RunnablePassthrough(): used to pass query questions directly.

RunnableLambda(img_prompt_func): Generate prompt information based on the retrieved images and text.

model: Use the configured ChatOpenAI model to generate answers based on the generated prompt information.

StrOutputParser(): Parse the string output by the model.

3. Define query problem (query)

A string named query holds the question, for example: "Which year had the highest holiday sales growth?"

4. Execute query (chain.invoke(query))

Call the chain's invoke method to execute the query and generate an answer based on the question and the retrieved information.

Overall, this code snippet builds a query processing flow that combines content retrieval, data separation, prompt generation, and model answering in order to answer complex questions over text and image data.
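How the `|` operator strings these steps together can be shown with a toy re-implementation. The `Step` class below is an assumption made purely for illustration and is not LangChain's Runnable; the retriever and model are stubs that return fixed strings, so the example runs without any API key.

```python
class Step:
    """Minimal pipeline step: wraps a function and supports the | operator."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        nxt = as_step(other)
        return Step(lambda value: nxt.invoke(self.invoke(value)))

    def __ror__(self, other):
        # Lets a plain dict appear on the left-hand side of "|"
        return as_step(other) | self


def as_step(obj):
    """Coerce a Step, a dict of branches, or a plain callable into a Step."""
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        branches = {key: as_step(value) for key, value in obj.items()}
        # Every branch receives the same input; the outputs are collected by key
        return Step(lambda value: {k: b.invoke(value) for k, b in branches.items()})
    return Step(obj)


# Stubs standing in for the retriever, the splitter, the prompt, and the model
retriever = Step(lambda question: ["chart summary", "table summary"])
split = Step(lambda docs: {"images": [], "texts": docs})
passthrough = Step(lambda x: x)
prompt = Step(lambda d: f"Question: {d['question']}\nContext: {', '.join(d['context']['texts'])}")
model = Step(lambda p: f"[stub answer to] {p.splitlines()[0]}")
parser = Step(str.strip)

chain = {"context": retriever | split, "question": passthrough} | prompt | model | parser
answer = chain.invoke("Which year had the highest holiday sales growth?")
print(answer)
```

The dict at the head of the chain fans the question out to two branches, which is how the real pipeline supplies both the retrieved context and the original question to the prompt function.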

The output is as follows:

Based on the chart provided, the year with the highest holiday sales growth is 2021, with a growth rate of 12.7%. This is indicated by the tallest bar in the bar chart under the "Actual" section, which represents the actual year-over-year growth rate for November and December sales.

In other words, the model reports that holiday sales growth peaked in 2021 at 12.7%, and it reads this from the tallest bar in the "Actual" series of the bar chart, which shows the year-over-year growth rate of November and December sales.

Comparison between the chart in the PDF and the response of the large model

Comparing the model's response with the bar chart of holiday sales growth in the PDF, we can clearly see that growth in 2021 is indeed the highest, at 12.7%, and that the model's attribution of that bar to the "Actual" series is also correct. The multi-modal capabilities provided by OpenAI appear to have worked.

Conclusion

Through analysis and a worked example, this article has demonstrated the potential and application prospects of OpenAI's latest technology in multi-modal data processing. By introducing the unstructured library, the Poppler tools, and the Tesseract-OCR tool, and by walking through the code, it offers both background knowledge and practical guidance on applying these tools. The example highlights the value of the gpt-4-vision-preview model in improving analysis efficiency and reducing manual errors.