Must-know: seven pictures that make service communication in a Kubernetes cluster easy to understand

Dive into the three native Kubernetes components that support inter-service communication: the ClusterIP Service, DNS (CoreDNS), and kube-proxy.

Overview


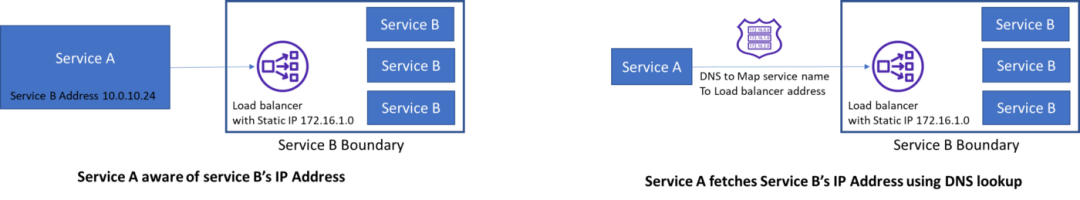

Traditional service-to-service communication

Before diving into the Kubernetes ecosystem, let's take a quick look at traditional service-to-service communication. Communication happens over IP addresses, so for service A to call service B, one approach is to assign service B a static IP address. Service A then either already knows that address (which only works when there are very few services), or service B registers itself under a domain name and service A obtains service B's address through a DNS lookup.
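A minimal sketch of the two options in Python: a hard-coded address versus a DNS lookup through the standard library's `socket.getaddrinfo`. The static address, port, and domain name are hypothetical placeholders; `localhost` appears in the usage only so the lookup resolves on any machine.

```python
import socket

# Option 1: service A hard-codes service B's static IP address.
# (Hypothetical address; this only scales to a handful of services.)
STATIC_SERVICE_B_ADDR = ("10.0.0.42", 8080)


def resolve_service(hostname, port):
    """Option 2: look up a service's IP addresses through DNS.

    Returns the unique IP addresses the system resolver reports for
    hostname, in the order they were returned.
    """
    infos = socket.getaddrinfo(hostname, port, type=socket.SOCK_STREAM)
    addresses = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]  # first element is the address for IPv4 and IPv6
        if ip not in addresses:
            addresses.append(ip)
    return addresses


if __name__ == "__main__":
    # In a real deployment this would be service B's registered domain,
    # e.g. a hypothetical "service-b.example.internal".
    print(resolve_service("localhost", 8080))
```

The DNS option decouples service A from service B's current address: service B can move as long as its DNS record is updated.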


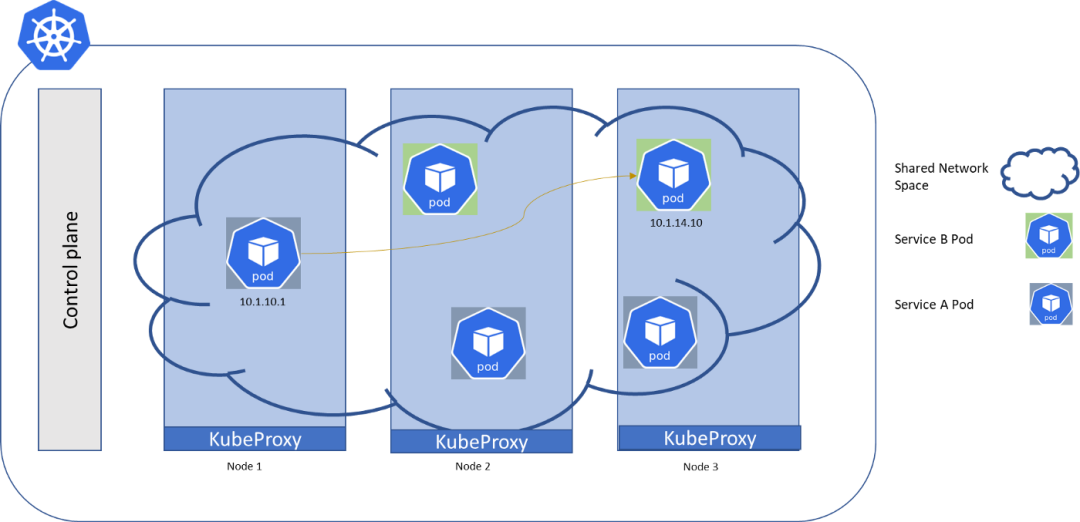

Kubernetes network model

A Kubernetes cluster consists of a control plane, which provides the cluster-management components, and a set of worker machines called nodes. The nodes host Pods, which run the backend microservices as containers.

Pod-to-Pod communication within a cluster

According to the Kubernetes network model:

- Each pod in the cluster has its own unique cluster-wide IP address

- Every pod can communicate with every other pod in the cluster

- Communication happens without NAT, which means the destination pod sees the source pod's real IP address. Kubernetes treats the Pod network and the applications running on it as trusted and does not require authentication at the network level.
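How Pod IPs stay unique cluster-wide depends on the network plugin. One common scheme (an assumption here, not something the Kubernetes network model mandates) is to split a cluster-wide Pod CIDR into non-overlapping per-node subnets and allocate each Pod's address from its node's subnet. The sketch uses Python's `ipaddress` module; the `10.244.0.0/16` range, the `/24` per-node size, and the node and Pod names are illustrative.

```python
import ipaddress
from itertools import islice

# Illustrative cluster-wide Pod range; real clusters configure their own.
CLUSTER_POD_CIDR = ipaddress.ip_network("10.244.0.0/16")


def assign_node_subnets(nodes, new_prefix=24):
    """Give each node its own non-overlapping slice of the cluster Pod CIDR."""
    subnets = CLUSTER_POD_CIDR.subnets(new_prefix=new_prefix)
    return {node: subnet for node, subnet in zip(nodes, subnets)}


def allocate_pod_ips(node_subnets, pods_per_node):
    """Allocate Pod IPs from each node's subnet.

    Because node subnets never overlap, every Pod IP is unique across the
    whole cluster, so any Pod can address any other Pod directly.
    """
    allocation = {}
    for node, subnet in node_subnets.items():
        hosts = iter(subnet.hosts())  # skips network/broadcast addresses
        for i, ip in enumerate(islice(hosts, pods_per_node[node])):
            allocation[f"{node}-pod-{i}"] = ip
    return allocation


if __name__ == "__main__":
    subnets = assign_node_subnets(["node-1", "node-2"])
    pods = allocate_pod_ips(subnets, {"node-1": 2, "node-2": 2})
    for name, ip in pods.items():
        print(name, ip)
```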

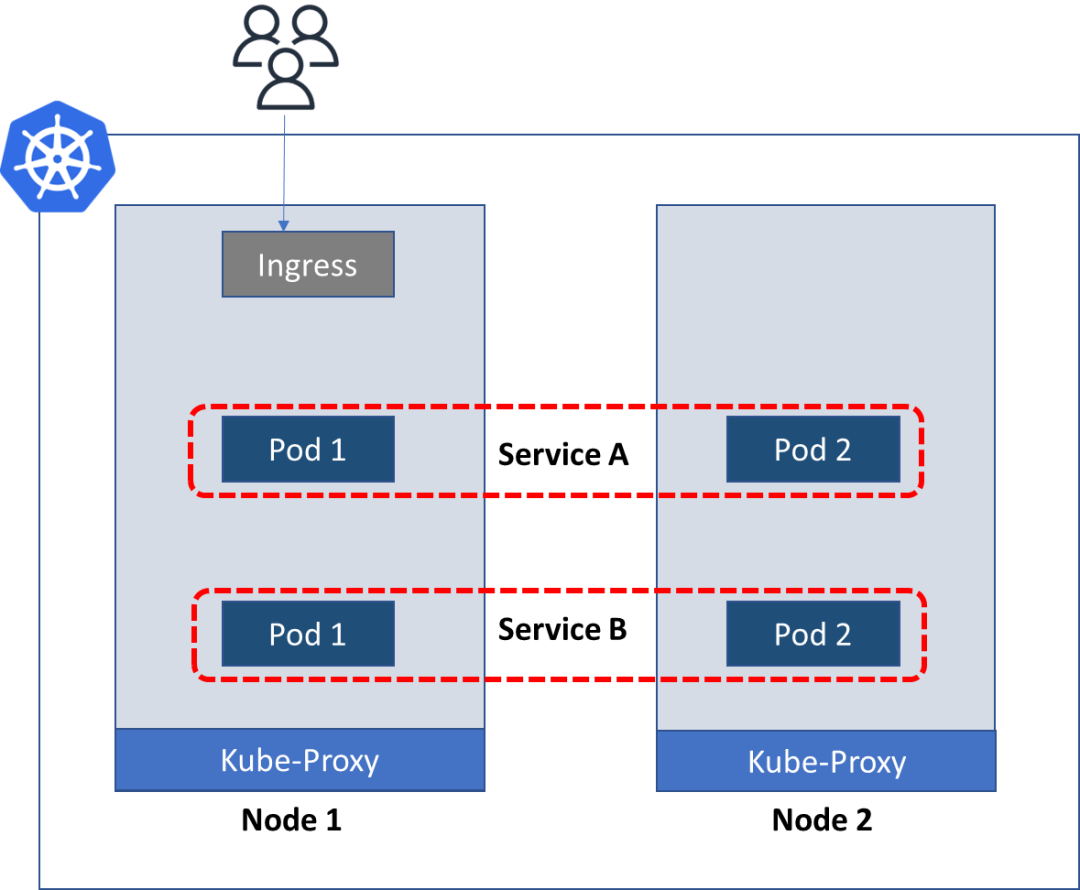

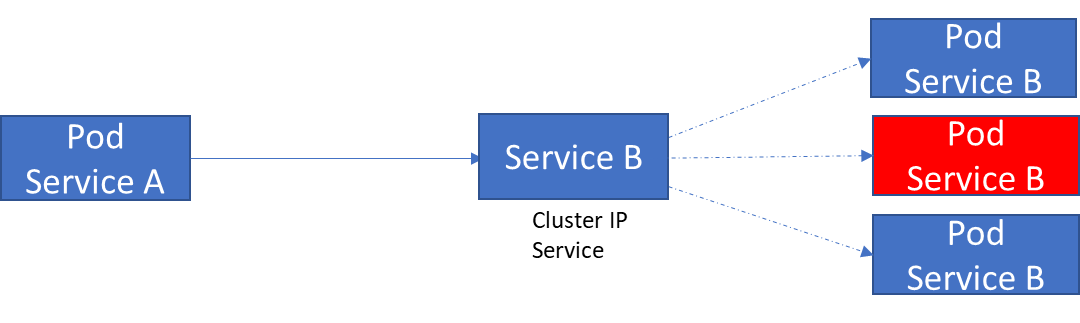

ClusterIP service ~ Pod-based abstraction

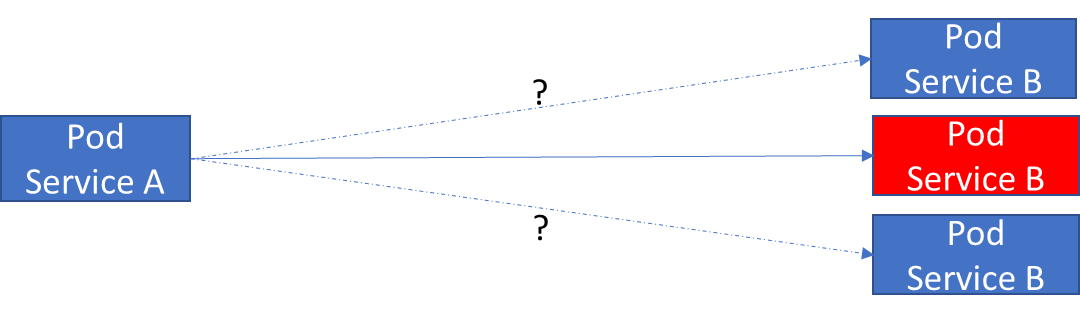

Since each pod in the cluster has its own IP address, it should be easy for one pod to communicate with another, right?

Not quite. Pods are ephemeral, and a pod gets a new IP address every time it is recreated, so the client service would somehow have to track the new address and switch to the next available pod.

The problem with Pods talking directly to each other is that the target Pod is ephemeral (it could be destroyed at any time), so the client must then discover the new IP address of its replacement.

To solve this, Kubernetes can create a layer on top of a group of Pods that gives the whole group a single IP address and provides basic load balancing.

With the Pods exposed by a ClusterIP service on a persistent IP address, the client talks to the service instead of talking to the Pods directly.

This abstraction is provided by a Kubernetes Service object of type ClusterIP. It spans multiple nodes while presenting a single service to the whole cluster. It can receive requests on one port (the Service's `port`) and forward them to a different port on the Pods (the `targetPort`).
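For reference, a minimal ClusterIP Service for service B, built as a Python dict and serialized to JSON (`kubectl apply -f` accepts JSON manifests as well as YAML). The name `service-b`, the label `app: service-b`, and the port numbers are hypothetical; the point is that clients connect on `port` 80 and traffic is forwarded to `targetPort` 8080 on the selected Pods.

```python
import json


def cluster_ip_service(name, selector, port, target_port, namespace="default"):
    """Build a Service manifest of type ClusterIP.

    `port` is what clients connect to on the ClusterIP; `targetPort` is the
    container port on the backend Pods that traffic is forwarded to.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "ClusterIP",  # also the default when type is omitted
            "selector": selector,  # Pods whose labels match become backends
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


if __name__ == "__main__":
    manifest = cluster_ip_service("service-b", {"app": "service-b"}, 80, 8080)
    # Save the output to a file and apply it: kubectl apply -f service-b.json
    print(json.dumps(manifest, indent=2))
```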

So when service A needs to talk to service B, it calls service B's ClusterIP service, not the individual Pods running service B.

A ClusterIP service uses the standard Kubernetes pattern of labels and selectors to continuously find the Pods that match its selection criteria: Pods carry labels, and services have selectors that match those labels. This makes basic traffic splitting possible, with old and new versions of a microservice coexisting behind the same ClusterIP service.
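The selector logic can be sketched in a few lines of Python. This models only equality-based matching (every key/value in the Service's selector must appear in the Pod's labels); the Pod names, labels, and IPs are made up. With two `v1` Pods and one `v2` Pod behind the same selector, and assuming requests are spread evenly across endpoints, roughly a third of the traffic would reach the new version.

```python
from collections import Counter


def matches(selector, labels):
    """Equality-based selection: every selector key/value must be on the Pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_endpoints(selector, pods):
    """Return the IPs of the Pods a Service with this selector would target."""
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical Pods: two versions of service B plus an unrelated service A Pod.
pods = [
    {"name": "b-v1-a", "ip": "10.244.1.5", "labels": {"app": "service-b", "version": "v1"}},
    {"name": "b-v1-b", "ip": "10.244.2.7", "labels": {"app": "service-b", "version": "v1"}},
    {"name": "b-v2-a", "ip": "10.244.1.9", "labels": {"app": "service-b", "version": "v2"}},
    {"name": "a-1", "ip": "10.244.2.3", "labels": {"app": "service-a"}},
]

if __name__ == "__main__":
    # The selector names only "app", so both versions become endpoints.
    endpoints = select_endpoints({"app": "service-b"}, pods)
    print(endpoints)
    # Endpoint share per version approximates traffic share under even spreading.
    versions = Counter(p["labels"]["version"] for p in pods if p["ip"] in endpoints)
    print(versions)
```

Adding `"version": "v2"` to the selector would narrow the Service to the new version only.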

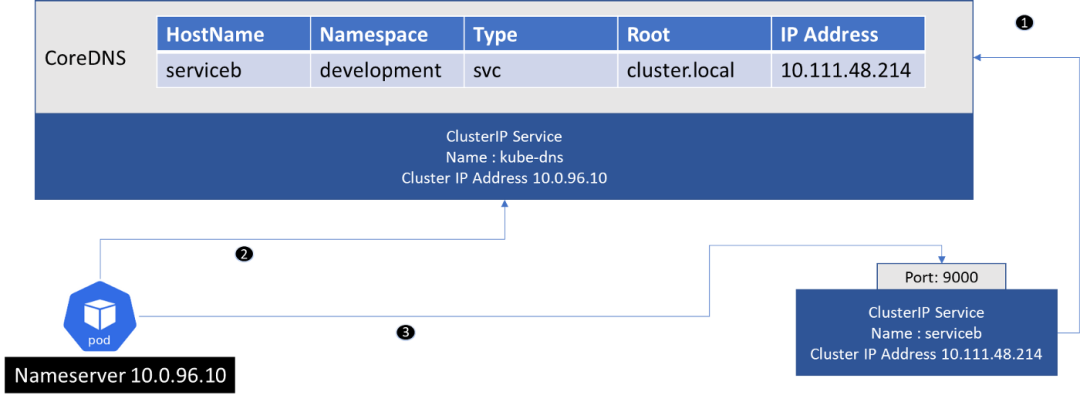

CoreDNS ~ Service discovery within a cluster

Now that service B has obtained a persistent IP address, service A still needs to know what this IP address is before it can communicate with service B.

Kubernetes supports name resolution using CoreDNS. Service A only needs to know the name (and port) of the ClusterIP service it wants to communicate with.

- CoreDNS watches the cluster, and whenever a ClusterIP service is created, a record for it is added to the DNS server (CoreDNS can also add a record per Pod if configured, but that is not relevant to service-to-service communication).

- CoreDNS itself is exposed through a ClusterIP service (named kube-dns by default), and that service is configured as the nameserver in each Pod.

- The Pod making the request gets the IP address of the ClusterIP service from DNS, and can then use the IP address and port to make the request.
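A toy model of the Service records described above, assuming the default cluster domain `cluster.local`: each ClusterIP service gets an A record named `<service>.<namespace>.svc.cluster.local` that points to its ClusterIP. The `ServiceDNS` class is a hypothetical in-memory stand-in, not how CoreDNS is implemented; the service name and IP are illustrative.

```python
class ServiceDNS:
    """Hypothetical in-memory stand-in for CoreDNS's Service records."""

    def __init__(self, cluster_domain="cluster.local"):
        self.cluster_domain = cluster_domain
        self.records = {}  # FQDN -> ClusterIP

    def fqdn(self, service, namespace):
        return f"{service}.{namespace}.svc.{self.cluster_domain}"

    def on_service_created(self, service, namespace, cluster_ip):
        # CoreDNS learns about Services by watching the API server;
        # here the "watch event" is simply a method call.
        self.records[self.fqdn(service, namespace)] = cluster_ip

    def on_service_deleted(self, service, namespace):
        self.records.pop(self.fqdn(service, namespace), None)

    def lookup(self, name):
        """Return the ClusterIP for a fully qualified name, or None (NXDOMAIN)."""
        return self.records.get(name.rstrip("."))


if __name__ == "__main__":
    dns = ServiceDNS()
    dns.on_service_created("service-b", "default", "10.96.12.34")
    print(dns.lookup("service-b.default.svc.cluster.local"))  # 10.96.12.34
```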

Kube-proxy connects Service and backend Pod (DNAT)

So far, it may look as though the ClusterIP service itself forwards requests to the backend Pods. In reality, the forwarding is handled by kube-proxy, or more precisely by the iptables or IPVS rules that kube-proxy programs on each node.

Kube-proxy runs on every node and watches Services and the Pods they select (more precisely, the Endpoints objects that list those Pods' addresses).

- When a Pod on a node sends a request to a ClusterIP service, the request is intercepted on that node by kube-proxy's rules.

- The target ClusterIP service is identified from the request's destination IP address and port, and the request's destination is then rewritten to the endpoint address of one of the backend Pods (destination NAT, or DNAT).
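The interception and rewrite can be modeled as a table keyed by the Service's (ClusterIP, port) pair. This is a simplified sketch, not kube-proxy's code: real kube-proxy programs the kernel (iptables or IPVS rules) instead of handling packets itself, and the round-robin choice below is an assumption standing in for mode-specific backend selection (iptables mode, for example, picks a backend at random). All addresses are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: tuple  # (ip, port)
    dst: tuple  # (ip, port)


class ServiceProxy:
    """Toy DNAT table: (ClusterIP, port) -> list of Pod endpoints."""

    def __init__(self):
        self._backends = {}
        self._next = {}

    def sync(self, cluster_ip, port, endpoints):
        # Called whenever a Service's endpoints change (a watch event).
        self._backends[(cluster_ip, port)] = list(endpoints)
        self._next[(cluster_ip, port)] = 0

    def dnat(self, packet):
        """Rewrite the destination if the packet targets a known Service."""
        backends = self._backends.get(packet.dst)
        if backends is None:
            return packet  # not a Service address: leave it untouched
        if not backends:
            raise ConnectionRefusedError(f"no endpoints for {packet.dst}")
        i = self._next[packet.dst]
        self._next[packet.dst] = (i + 1) % len(backends)
        # Only the destination changes; the source Pod's address is preserved.
        return Packet(src=packet.src, dst=backends[i])


if __name__ == "__main__":
    proxy = ServiceProxy()
    proxy.sync("10.96.12.34", 80, [("10.244.1.5", 8080), ("10.244.2.7", 8080)])
    request = Packet(src=("10.244.2.3", 51000), dst=("10.96.12.34", 80))
    for _ in range(3):
        print(proxy.dnat(request).dst)
```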

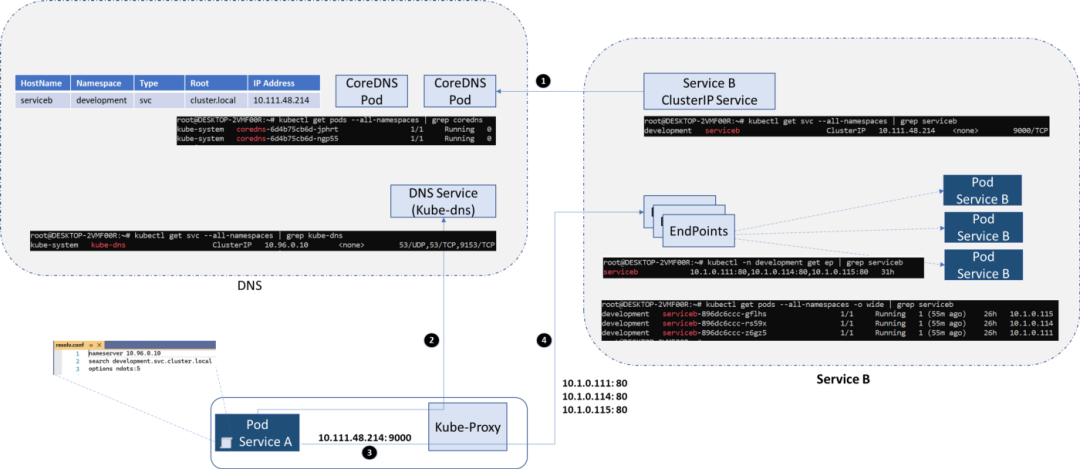

How do they work together?

Interaction of the ClusterIP Service, CoreDNS, the client Pod, kube-proxy, and Endpoints


- Service registration: the target's ClusterIP service is registered with CoreDNS.

- DNS resolution: each Pod has an /etc/resolv.conf file that points to the IP address of the CoreDNS service, and the Pod uses it to perform DNS lookups.

- Request: the Pod calls the ClusterIP service using the IP address it received from DNS and the port it already knows.

- Destination address translation: kube-proxy's rules rewrite the destination IP address (and port) to the address of an available Pod of service B.
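The DNS-resolution step relies on the search domains in each Pod's `/etc/resolv.conf`, which is why a Pod can use a short name such as `service-b`. The sketch below parses a typical Pod `resolv.conf` and lists the names a resolver would try, following glibc-style `ndots` rules (names with fewer dots than `ndots` go through the search list first). The file contents, namespace, and nameserver IP are illustrative assumptions, and real resolvers differ in details.

```python
# Illustrative resolv.conf for a Pod in the "default" namespace.
SAMPLE_RESOLV_CONF = """\
nameserver 10.96.0.10
search default.svc.cluster.local svc.cluster.local cluster.local
options ndots:5
"""


def parse_resolv_conf(text):
    """Extract nameservers, search domains, and ndots (default 1)."""
    conf = {"nameservers": [], "search": [], "ndots": 1}
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0].startswith(("#", ";")):
            continue  # skip blank lines and comments
        key, values = fields[0], fields[1:]
        if key == "nameserver" and values:
            conf["nameservers"].append(values[0])
        elif key == "search":
            conf["search"] = values
        elif key == "options":
            for opt in values:
                if opt.startswith("ndots:"):
                    conf["ndots"] = int(opt.split(":", 1)[1])
    return conf


def candidate_names(name, conf):
    """Names a glibc-style resolver would query, in order."""
    if name.endswith("."):
        return [name.rstrip(".")]  # fully qualified: search list is skipped
    expanded = [f"{name}.{domain}" for domain in conf["search"]]
    if name.count(".") >= conf["ndots"]:
        return [name] + expanded  # enough dots: try the name as-is first
    return expanded + [name]


if __name__ == "__main__":
    conf = parse_resolv_conf(SAMPLE_RESOLV_CONF)
    print(conf["nameservers"])  # queries go to the kube-dns Service IP
    for candidate in candidate_names("service-b", conf):
        print(candidate)
```

For `service-b`, the first candidate is `service-b.default.svc.cluster.local`, which matches the Service record, so the Pod gets service B's ClusterIP without knowing its namespace or the cluster domain.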