You can use wireshark to analyze tcpdump network packets to quickly troubleshoot and resolve the problem of abnormal TCP connection disconnection

1. Problem phenomenon

According to a customer's feedback, in one of its acceptance environments, when a business student is conducting a common business test (non-performance stress test scenario), a microservice frequently occurs abnormally during database operations, and the underlying reason for the microservice log prompts is as follows:

com.alipay.oceanbase.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure\n\nThe last packet successfully received from the server was 9 milliseconds ago. The last packet sent successfully to the server was 2 milliseconds ago.

at sun.reflect.GeneratedConstructorAccessor143.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.alipay.oceanbase.jdbc.Util.handleNewInstance(Util.java:439)

at com.alipay.oceanbase.jdbc.SQLError.createCommunicationsException(SQLError.java:1236)

at com.alipay.oceanbase.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:4394)

at com.alipay.oceanbase.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:4252)

at com.alipay.oceanbase.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:4789)

at com.alipay.oceanbase.jdbc.MysqlIO.sendCommand(MysqlIO.java:2993)

at com.alipay.oceanbase.jdbc.ServerPreparedStatement.serverExecute(ServerPreparedStatement.java:1396)

at com.alipay.oceanbase.jdbc.ServerPreparedStatement.executeInternal(ServerPreparedStatement.java:831)

at com.alipay.oceanbase.jdbc.PreparedStatement.executeQuery(PreparedStatement.java:2042)

at com.zaxxer.hikari.pool.ProxyPreparedStatement.executeQuery(ProxyPreparedStatement.java:52)

at com.zaxxer.hikari.pool.HikariProxyPreparedStatement.executeQuery(HikariProxyPreparedStatement.java)

at org.springframework.jdbc.core.JdbcTemplate$1.doInPreparedStatement(JdbcTemplate.java:696)

at org.springframework.jdbc.core.JdbcTemplate.execute(JdbcTemplate.java:638)

at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:688)

at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:720)

at org.springframework.jdbc.core.JdbcTemplate.query(JdbcTemplate.java:730)

at org.springframework.jdbc.core.JdbcTemplate.queryForObject(JdbcTemplate.java:798)

at org.springframework.jdbc.core.JdbcTemplate.queryForObject(JdbcTemplate.java:817)- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

2. Background of the problem

The OceanBase server version of the database server in this environment is observer 4.2.1.x, and the OceanBase JDBC driver version used by the microservice is 1.1.7. According to the OB official, the OceanBase JDBC driver of 1.1.7 and earlier versions is prone to this problem of abnormal connection disconnection, and the official solution given by OB is to upgrade the JDBC driver, and it is recommended to use at least 2.4.x or above. However, customers have reported that some microservices use the same version of OceanBase JDBC driver and the same version of OceanBase on the database server, but there is basically no such problem, or the frequency of this problem is very low. Mitigate the issue by adjusting the parameters.

3. Preliminary analysis of the problem

- com.alipay.oceanbase.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure actually refers to the abnormal disconnection of the TCP connection, but from the above error logs, the last time the microservice successfully received a packet from obproxy was 15 milliseconds ago, and the last time the microservice successfully sent a packet Obproxy is 1 millisecond ago, the time is very short, and our business traffic is not large (the scenario is normal business test, not performance stress test), the preliminary speculation may not be network packet loss, but obproxy or server configuration problems.

- In order to rule out network problems, we preliminarily tested the network conditions of the environment by pinging, and the results are as follows, as you can see, the network conditions in the intranet environment are good, the latency is very low, and there is no packet loss:

ping -c 10 -i 1 172.253.34.74

PING 172.253.34.74 (172.253.34.74) 56(84) bytes of data.

64 bytes from 172.253.34.74: icmp_seq=1 ttl=61 time=0.727 ms

64 bytes from 172.253.34.74: icmp_seq=2 ttl=61 time=0.231 ms

64 bytes from 172.253.34.74: icmp_seq=3 ttl=61 time=0.278 ms

64 bytes from 172.253.34.74: icmp_seq=4 ttl=61 time=0.227 ms

64 bytes from 172.253.34.74: icmp_seq=5 ttl=61 time=0.219 ms

64 bytes from 172.253.34.74: icmp_seq=6 ttl=61 time=0.227 ms

64 bytes from 172.253.34.74: icmp_seq=7 ttl=61 time=0.384 ms

64 bytes from 172.253.34.74: icmp_seq=8 ttl=61 time=0.235 ms

64 bytes from 172.253.34.74: icmp_seq=9 ttl=61 time=0.232 ms

64 bytes from 172.253.34.74: icmp_seq=10 ttl=61 time=0.331 ms

--- 172.253.34.74 ping statistics ---

10 packets transmitted, 10 received, 0% packet loss, time 9000ms

rtt min/avg/max/mdev = 0.219/0.309/0.727/0.148 ms。- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

4. Cause of the problem

- The reasons for the abnormal disconnection of TCP connections are complex, not only related to the client jdbc driver version and the server-side obproxy version, but also related to the specific configuration of the database, the specific configuration of the operating system of the client and the server, and even the network situation.

- Due to the large number of related configuration parameters, in order to quickly troubleshoot and confirm the problem, we are going to analyze the network packets first.

- 经过协调,我们首先在微服务端使用 “tcpdump -i any port 2883 -w 2883.pcap” 抓取了约5分钟的网络包,通过微服务日志可以确认,5分钟内出现了上述问题,即 com.alipay.oceanbase.jdbc.exceptions.jdbc4.CommunicationsException:Communications link failure。

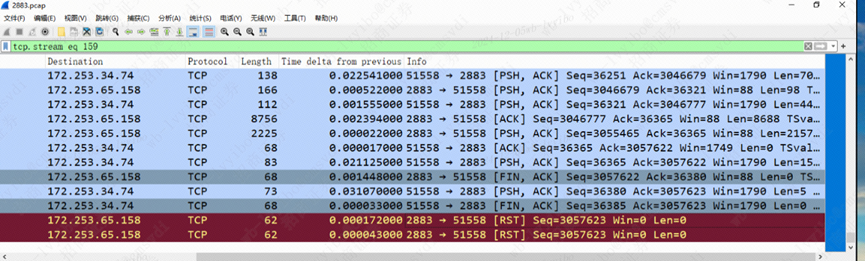

- After opening the pcap network packet captured by the above command through wireshark, it is found that some TCP connections are indeed closed through RST exceptions, as shown in the following figure, note that port 2883 here is the listening port of the server obproxy:

Image

Image

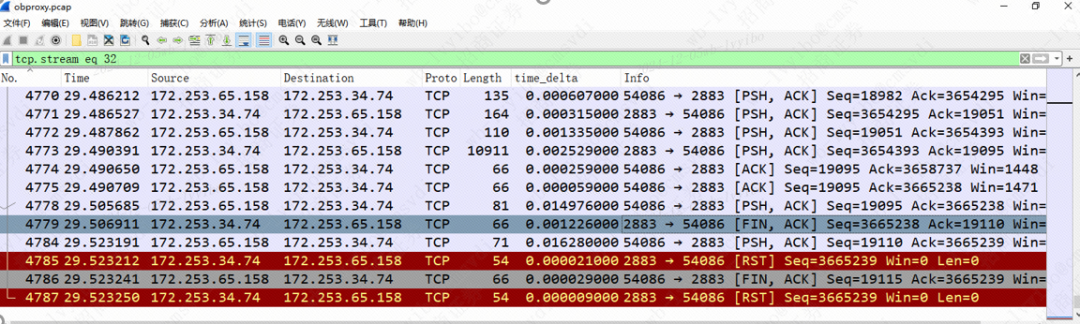

- In order to further confirm the problem, we also coordinated to capture packets on the obproxy server, and after opening the captured pcap network packets through wireshark, we found that the phenomenon is consistent with that of the client, that is, some TCP connections are indeed closed through RST exceptions, as shown in the following figure, note that port 2883 here is the listening port of the server's obproxy:

Image

Image

According to the packet capture results of the client, the following is sorted out:

- During the TCP connection is successfully created and used normally, the microservice sends an SQL request to the server obproxy through the ob jdbc driver.

- After about 0.001448 seconds, the server obproxy (port 2883) actively sends a [FIN,ACK] signal to close the tcp connection, which is the first abnormal place, because under normal circumstances, obproxy does not actively close the tcp connection;

- After about 0.03107 seconds, the microservice receives a close connection signal from obproxy [FIN,ACK] and replies with a [PUSH,ACK] signal to confirm that the signal is received to close the connection;

- After about 0.000033 seconds, the microservice [FIN,ACK] closes the TCP connection, which is the second abnormal place, because under normal circumstances, the microservice will not actively close the tcp connection when waiting for the SQL execution result from the server;

- After about 0.000172 seconds and 0.000043 seconds, the server obproxy (port 2883) actively sends two [RST] signals to reset the tcp connection. (Combined with the server-side packet capture, it can be seen that these two [RSTs] are actually responses to [PUSH,ACK] and [FIN,ACK] packets sent by the client, respectively.)

So far, the direct cause of the problem has been confirmed, it is not caused by network timeout or network packet loss, but obproxy for some reason, actively sent [FIN,ACK] to disconnect!

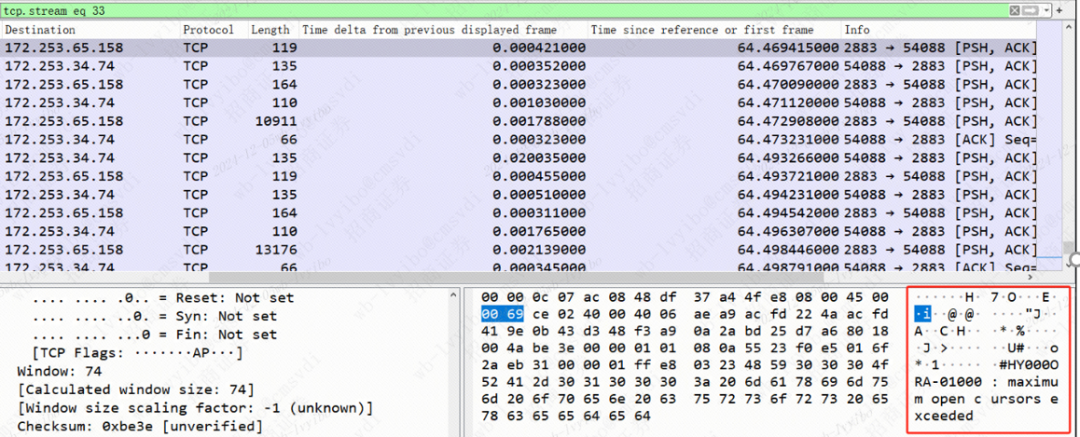

- Further tracking of the TCP flow shows that the obproxy actually replies to the client with an exception message before this: "HY000ORA-01000: maximum open cursors exceeded", as shown in the following figure:

Image

Image

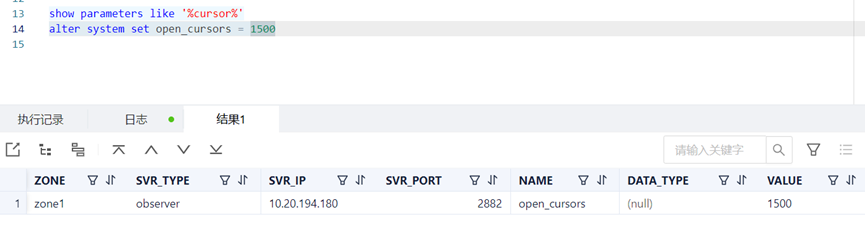

- Further check by running the show parameters like '%cursor%' command and find that the open_cursors parameter is configured to 50 in this environment, while in other environments where there is no such error, the parameter is configured to 500 or even 1500!

- Therefore, the root cause of this problem is that the parameter configuration value of the open_cursors in the environment is too low, and when the number of cursors actually opened at the bottom of a session is greater than the parameter value, the server observer sends [FIN] to actively close the TCP connection for self-protection reasons! This also explains why clients find logs about the frequent creation of new JDBC connections.

- open_cursors 参数说明如下:”specifies the maximum number of open cursors a session can have at once. can use this parameter to prevent a session from opening an excessive number of cursors. Range: [0, 65535] in integer.”

Image

Image

5. Problem solving

In this acceptance environment, use the command "alter system set open_cursors=1500; The value of the parameter open_cursors was changed from 50 to 1500, and the problem was successfully solved. In addition, after consulting with the DBA, the relevant specifications are as follows:

- Parameter open_cursors: [Tenant level takes effect], which is used to set the number of cursors to be opened in a single session, the default is 50, too small is easy to appear ORA-01000 maxium open cursors exceeded, which can be modified according to the situation, and the calculation formula is as follows: tenant memory (64G) * _temporary_file_io_area_size (5%)/macro block (2m) = 1600, The following command is to be modified: alter system set open_cursors=1500;

- Parameter temporary_file_io_area_size: [Tenant-level effect], the total size of the intermediate SQL result (such as hash join) that can be used in the storage layer can be appropriately increased (tenant-level parameters need to be adjusted by sys tenant), and the modification command is as follows: alter system set "_temporary_file_io_area_size" = 5;

6. Problem follow-up

- 微服务的异常日志打印逻辑需要优化下,当前的日志揭示了底层原因是 “com.alipay.oceanbase.jdbc.exceptions.jdbc4.CommunicationsException:Communications link failure\n\n The last packet successfully received from the server was 9 milliseconds ago. the last packet sent successfully to the server was 2 milliseconds ago.”, 但并没有揭示更底层的原因,即 “HY000ORA-01000: maximum open cursors exceeded”,这个最底层的原因也需要体现在日志中,以方便问题排查;

- Subsequent microservices need to upgrade the OB JDBC driver version, and it is recommended to upgrade to the lowest version recommended by the official, such as OB JDBC driver 2.4.3;