Is Your Agent Not Smart or Fast Enough? You Need a Semantic Router

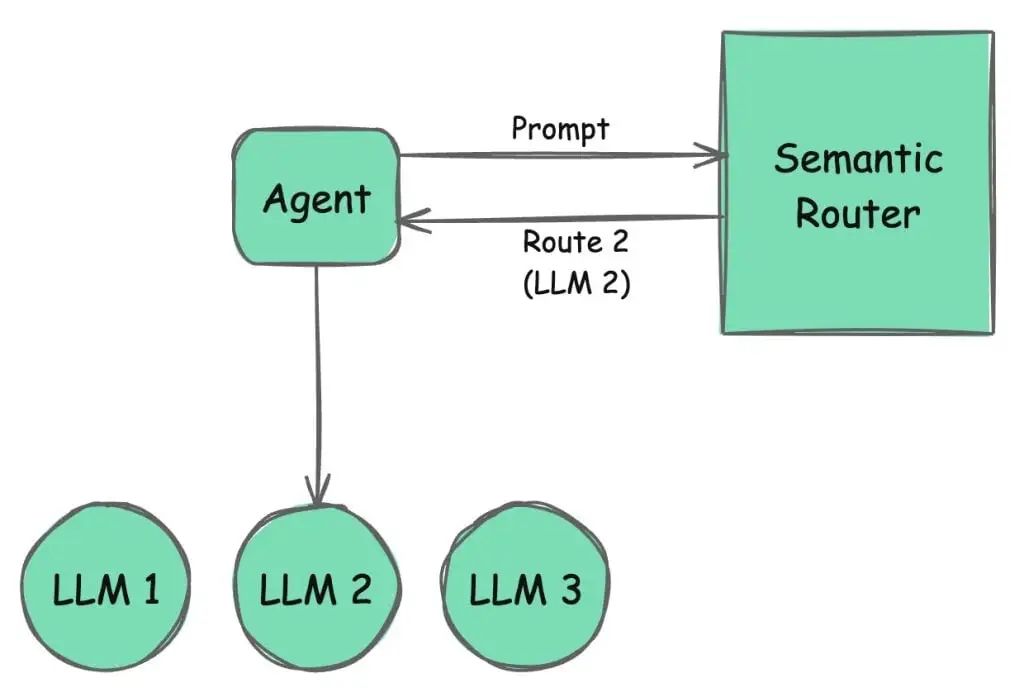

Semantic Router is a new paradigm that enables AI Agents to select the right LLM for the right task while reducing their dependence on LLMs.

Emerging agent workflow patterns rely heavily on LLMs for reasoning and decision making. Each agent calls the LLM multiple times during task execution, so in workflows made up of multiple agents the number of calls multiplies quickly, driving up both cost and latency.

Language models come with different features and capabilities: small language models, multimodal models, and purpose-built task-specific models. An agent that sends each step of its workflow to the model best suited to it can reduce cost and latency and improve overall accuracy.

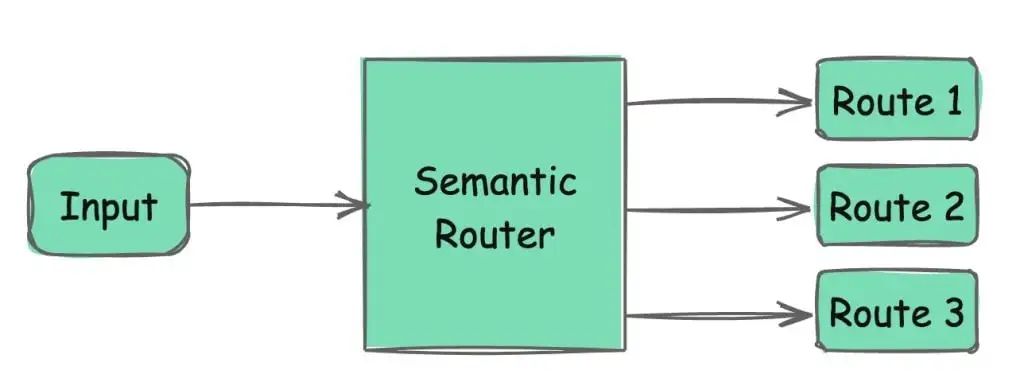

The semantic router is a pattern that lets an agent choose the right language model for each task while also reducing reliance on the model through local decision making. Behind the scenes, the router embeds the incoming prompt and compares it with the embeddings of a set of predefined example phrases (called utterances), which are stored in a vector database and each mapped to a specific route. The route can be, for example, the LLM that is most suitable for the task. Because semantic search determines the destination, we call it a semantic router.

The semantic router uses the same technology as the retriever in a RAG pipeline: it performs a semantic search to find the closest match. But instead of returning a block of text, it returns a single predefined route based on the input.

You can implement a semantic router yourself as a custom layer between the agent and the LLM, but the open source Semantic Router project is an increasingly popular ready-made option.

1. What is Semantic Router?

Semantic Router is an open source tool developed by Aurelio AI that changes how AI agents make decisions. It acts as a layer that enhances LLMs and agents by using semantic vector space to route requests more efficiently. Rather than relying on slow LLM generation to make tool-use decisions, Semantic Router uses semantic meaning to make fast and accurate choices.

The project integrates with various embedding models, including popular options such as Cohere and OpenAI, and supports open source models through its HuggingFace encoder. It uses an internal in-memory vector database, but it can also be configured to use mainstream vector database engines such as Pinecone and Qdrant. Because routing decisions are made from the user query without an LLM generation step, processing time drops significantly, typically from 5000 milliseconds to just 100 milliseconds.

Semantic Router is released under the MIT license, so developers can freely incorporate it into their projects and extend it. The tool addresses key challenges in AI development, including security, scalability, and speed, making it a valuable asset for creating more efficient and responsive agent workflows.

2. Key Components of Semantic Router

1. Routing and Utterances

Routes form the backbone of the Semantic Router's decision-making process. Each route represents a potential decision or action and is defined by a set of utterances, which are example inputs that should map to that route. Together, these utterances form the semantic profile of the route. New inputs are compared to the utterances to find the closest match.

In practice, this allows the system to classify and respond to inputs based on their semantic meaning, rather than relying on LLM generation, which can be slow or error-prone. Developers can customize routing to suit specific applications—whether filtering for sensitive topics, managing APIs, or orchestrating tools in complex workflows.
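The route-and-utterance matching described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Semantic Router API: the `Route` dataclass, `toy_embed`, and `closest_route` are hypothetical names, and the bag-of-words encoder is only a stand-in for a real embedding model.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Route:
    """A named decision plus the example utterances that define it (hypothetical type)."""
    name: str
    utterances: list[str]


def toy_embed(text: str) -> Counter:
    """Stand-in encoder: a bag-of-words count vector, NOT a real embedding model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def closest_route(query: str, routes: list[Route]) -> tuple[str, float]:
    """Return the route whose best-matching utterance is most similar to the query."""
    q = toy_embed(query)
    best_name, best_score = "", -1.0
    for route in routes:
        for utterance in route.utterances:
            score = cosine(q, toy_embed(utterance))
            if score > best_score:
                best_name, best_score = route.name, score
    return best_name, best_score


routes = [
    Route("weather", ["what is the weather today", "will it rain tomorrow"]),
    Route("calendar", ["schedule a meeting", "book a call for monday"]),
]
name, score = closest_route("please schedule a meeting with Sam", routes)
print(name, round(score, 2))
```

A word-count encoder only matches on shared vocabulary; a real embedding model would also match paraphrases such as "set up a call", which is the point of routing on semantic meaning.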

2. Encoder and Vector Space

To compare the input with predefined utterances, the semantic router uses an encoder to convert text into high-dimensional vectors. These vectors live in a semantic space, where the distance between two vectors reflects the semantic similarity of the corresponding texts. The shorter the distance between an input and an utterance, the more closely the two are related in meaning.
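To make the notion of distance concrete, here is a small sketch using cosine distance, one common choice for comparing embeddings. The article does not say which metric Semantic Router uses, so treat the metric as an assumption; the three-dimensional vectors are hand-picked for illustration and do not come from any real encoder.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two dense vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def cosine_distance(u: list[float], v: list[float]) -> float:
    """Smaller values mean the two texts are closer in meaning."""
    return 1.0 - cosine_similarity(u, v)


# Hypothetical embeddings, invented for this example.
query = [0.9, 0.1, 0.0]               # e.g. "is it going to rain?"
weather_utterance = [0.8, 0.2, 0.1]   # e.g. "what is the weather today"
calendar_utterance = [0.1, 0.9, 0.2]  # e.g. "schedule a meeting"

d_weather = cosine_distance(query, weather_utterance)
d_calendar = cosine_distance(query, calendar_utterance)
print(d_weather < d_calendar)
```

Here the query vector points in nearly the same direction as the weather utterance, so its distance to that utterance is much smaller than its distance to the calendar utterance.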

Semantic Router supports multiple encoding methods, including Cohere and OpenAI encoders for high-performance API-driven workflows, and Hugging Face models for users seeking open source, locally executable alternatives. Developers have the flexibility to choose between different encoders to tailor the system to their specific infrastructure, balancing performance, cost, and privacy concerns.

3. Decision Layer

Once the input is encoded and compared to the predefined routes, the semantic router makes a decision using the RouteLayer. This layer aggregates routes and embeddings and manages the decision-making process. It also supports hybrid routing, where the system can combine local and cloud-based models to optimize performance.
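The decision step can be pictured as aggregating similarity scores per route and accepting the winner only if it clears a threshold. The sketch below is an assumption about how such a layer might work, not the RouteLayer implementation: the `decide` function, its `threshold` parameter, and the choice of max-aggregation are all hypothetical.

```python
from collections import defaultdict
from typing import Optional


def decide(matches: list[tuple[str, float]], threshold: float = 0.5) -> Optional[str]:
    """Pick a route from (route_name, similarity) pairs, or None if nothing is close enough."""
    per_route: dict[str, list[float]] = defaultdict(list)
    for route_name, similarity in matches:
        per_route[route_name].append(similarity)
    if not per_route:
        return None
    # Aggregate with each route's best utterance score; mean or sum are alternatives.
    best_route, best_score = max(
        ((name, max(scores)) for name, scores in per_route.items()),
        key=lambda pair: pair[1],
    )
    # Below the threshold, no route is chosen and the caller can fall back to an LLM.
    return best_route if best_score >= threshold else None


confident = decide([("weather", 0.82), ("calendar", 0.31), ("weather", 0.64)])
uncertain = decide([("weather", 0.22), ("calendar", 0.31)])
print(confident, uncertain)
```

Returning `None` for low-scoring inputs is what keeps the router from forcing every prompt into a predefined route.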

4. Local LLM Integration

For developers who want to maintain full control of their LLM or reduce reliance on external APIs, Semantic Router supports local models through LlamaCPP and Hugging Face. Consumer hardware (such as a MacBook using Apple Metal hardware acceleration or a Microsoft Copilot+ PC) can run both the routing decisions and the LLM-driven responses entirely on the device. This local execution model not only reduces latency and cost, but also improves privacy and security.

5. Scalability

Scalability becomes an issue when adding more tools and agents to a workflow. LLMs have a limited context window, so they struggle when large amounts of data or context must fit into a single prompt. The Semantic Router addresses this problem by decoupling decision making from the LLM, which lets it handle thousands of tools without overloading the system. This separation of concerns enables agents to scale without sacrificing performance or accuracy.

3. Use Cases and Scenarios

Semantic Router is particularly well suited to agents that must manage multiple tools, APIs, or datasets at the same time. In a typical workflow, the router can quickly determine which tool or API to use based on the input, without a full LLM query. This is particularly useful in virtual assistant systems, content generation workflows, and large-scale data processing pipelines.

For example, in a virtual assistant, the Semantic Router can efficiently route prompts like “schedule a meeting” or “check the weather” to the appropriate API or tool without the LLM being involved in every decision. Similarly, requests can be routed to an LLM fine-tuned to respond to medical or legal terminology. This not only reduces latency, but also ensures a consistent, reliable experience for users.
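As a rough sketch of the virtual assistant case, the route name returned by a router can index a table of handlers, with the LLM as the fallback when no route matches. Every name here (`schedule_meeting`, `check_weather`, `call_llm`, `HANDLERS`, `dispatch`) is hypothetical, and the handlers are stubs that only return a label instead of calling a real API.

```python
from typing import Callable, Optional


def schedule_meeting(prompt: str) -> str:
    """Stub for a calendar API call."""
    return f"[calendar API] handling: {prompt}"


def check_weather(prompt: str) -> str:
    """Stub for a weather API call."""
    return f"[weather API] handling: {prompt}"


def call_llm(prompt: str) -> str:
    """Stub for the general-purpose LLM used when no route matches."""
    return f"[LLM fallback] handling: {prompt}"


# Maps route names produced by the router to the code that serves them.
HANDLERS: dict[str, Callable[[str], str]] = {
    "calendar": schedule_meeting,
    "weather": check_weather,
}


def dispatch(route_name: Optional[str], prompt: str) -> str:
    """Run the handler for the chosen route; unknown or missing routes go to the LLM."""
    handler = HANDLERS.get(route_name, call_llm)
    return handler(prompt)


print(dispatch("calendar", "schedule a meeting"))
print(dispatch(None, "tell me a joke"))
```

The same table could map route names to model endpoints instead of tools, for instance a fine-tuned medical or legal model.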

The semantic router can also be used to decide whether a prompt should be sent directly to a small language model running locally, or whether it must be mapped to a function and its arguments by calling a powerful LLM running in the cloud. This is especially important when implementing a federated language model that leverages both a cloud-based language model and a local language model.

In the era of agentic workflows, the need for efficient, scalable, and deterministic decision-making systems is more pressing than ever. Semantic Router addresses this need by using semantic vector spaces to make fast, reliable decisions while still allowing integration with LLMs when needed. Its flexibility, speed, and determinism make it a valuable tool for developers looking to build the next generation of AI systems.

As LLMs grow and diversify, tools such as Semantic Router will be critical to ensuring that agent systems can perform, scale, and provide consistent results. This will help developers find new ways to use AI in their workflows.

Reference link: https://thenewstack.io/semantic-router-and-its-role-in-designing-agentic-workflows/