Do You Understand the Problems That the Introduction of Cache Brings to the Business?

1. Problems caused by the introduction of cache

After the business system introduced cache, the architecture changed from the original two-tier architecture to a three-tier architecture:

This brings up three problems that need to be solved, namely cache reading, cache updating and cache elimination.

1. Cache Read

Cache reading is relatively simple. When querying data, the cache is queried first. If the cache hits, the data is read from the cache. If the cache does not hit, the database is queried and the cache is updated.

2. Cache Update

When updating the cache, there are several strategies for the order of updating the storage and updating the cache:

1) Update the database first and then update the cache

First of all, this solution will have thread safety issues:

For example, if thread A and thread B update data at the same time, the following execution order may occur.

①Thread A updates the database

②Thread B updates the database

③Thread B updates the cache

④Thread A updates the cache

At this time, the data in the database will be inconsistent with the cached data. This is because thread A updates the database first. Perhaps due to abnormal conditions such as the network, thread B updates the database and then updates the cache. After thread B updates the cache, thread A updates the cache, which leads to inconsistency between the database data and the cached data.

Secondly, this solution also has its inapplicable business scenarios:

The first business scenario is that the database has more writes than reads. In this scenario, the strategy of updating the database first and then updating the cache will cause the cache to be frequently updated without being read, greatly wasting server performance.

Another business scenario is that the data in the database is not written directly to the cache, but requires a lot of complex operations to write the results of the operations to the cache. If the strategy of updating the database first and then updating the cache is used in this scenario, it will also cause a waste of server resources.

2) Delete the cache first, then update the database

The solution of deleting the cache first and then updating the database also has thread safety issues. For example, thread A updates the cache while thread B reads the cached data. The following execution order may occur:

①Thread A deletes the cache

②Thread B queries the cache and finds that the desired data is not in the cache

③Thread B queries the old data in the database

④Thread B writes the old data it has queried into the cache

⑤Thread A writes new data to the database

At this point, the data in the database and the data in the cache are inconsistent. If the cache is deleted, the database data and the cache data will also be inconsistent.

3) Update the database first, then delete the cache

First, this method also has a very small probability of inconsistency between database data and cache data. For example, thread A performs a query operation and thread B performs an update operation. The execution order is as follows:

①The cache just expired

②Request A to query the database and obtain the old value in the database

③Request B to write the new value to the database

④Request B to delete the cache

⑤Request A to write the old value found into the cache

If the above sequence occurs, the data in the database and the data in the cache will be inconsistent. However, the probability of inconsistency between the database and the cache is very low when the strategy of updating the database first and then deleting the cache occurs. The reason is that the database writing operation in step ③ takes less time than the database reading operation in step ②, which makes it possible for step ④ to be executed before step ⑤.

However, the speed of database read operations is often much faster than that of write operations, so it is unlikely that step ③ takes less time than step ②. Therefore, it is a recommended practice to update the database first and then delete the cache.

4) Abnormal situations

The above discussion and comparison are based on the case where both the cache deletion and database update operations are successful. Of course, the operation is basically successful when the system is running normally, but what if one of the two operations fails? For example, update the database first and then delete the cache:

- Failed to update the database: This situation is very simple and will not affect the second step operation or data consistency. Just throw an exception directly;

- Failed to update cache: In this case, you need to continue trying to delete the cache until the cache is deleted successfully. This can be done with a message queue, as shown in the following figure:

①Update database data;

② Failed to delete cache data;

③Send the key to be deleted to the message queue;

④ Consume the message yourself and obtain the key that needs to be deleted;

⑤Continue to retry the deletion operation until it succeeds.

3. Cache elimination

There are two main strategies, active elimination and passive elimination:

- Active elimination: Set a TTL time for the key-value pair, and automatically eliminate it when it expires (recommended). This method can achieve the purpose of caching hot data;

- Passive elimination: When the memory reaches the maximum limit, it is eliminated through the LRU and LFU algorithms (not recommended). This method affects cache performance and the cache quality cannot be controlled.

2. Three Mountains of Cache

1. Consistency

Consistency mainly solves the following problems:

1) How to solve the isolation problem with parallel updates

- Serial update: A single Key update sequence needs to be updated serially to ensure timing

- Parallel update: Different keys can be placed in different slots, and parallel updates can be performed in the slot dimension to improve performance

2) How to solve the problem of partial updates during atomic updates

- System linkage: cache & storage real-time synchronization update status, synchronization status through revision

- Partial success: cache update succeeds, storage update fails, HA is triggered, and write success is guaranteed (log idempotence)

3) How to solve the problem of read consistency

- Revision: Each key has a revision, which is used to identify the newness and oldness of data.

- Elimination control: Redis does not eliminate data that has not been updated (Redis does not eliminate data with revision < 4), ensuring that Redis does not cache old versions of data

2. Cache breakdown

Cache penetration refers to accessing data directly by bypassing the cache and accessing the database. There are two possible reasons for this phenomenon:

One is a large number of empty queries, such as hacker attacks; the other is cache pollution, such as caused by a large number of web crawlers.

1) To solve the cache breakdown caused by empty queries, there are two main solutions:

The first is to add a Bloom filter in front of the cache layer. Bloom filter is a data structure, a relatively clever probabilistic data structure, characterized by efficient insertion and query, which can be used to tell you "something definitely does not exist or may exist". Bloom filter is a bit vector or bit array, which looks like this:

If we want to map a value to a Bloom filter, we need to use multiple different hash functions to generate multiple hash values, and set the bit position pointed to by each generated hash value to 1. For example, for the value "baidu" and three different hash functions, hash values 1, 4, and 7 are generated respectively, then the above figure is transformed into:

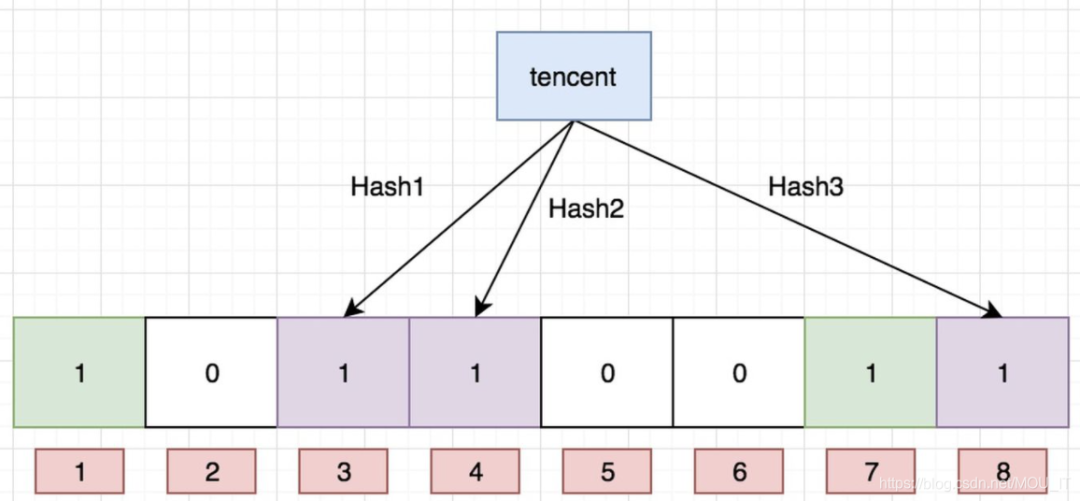

Now we store another value "tencent". If the hash function returns 3, 4, and 8, the graph continues to become:

picture

picture

It is worth noting that the bit 4 is overwritten because the hash function of both values returns this bit. Now if we want to check whether the value "dianping" exists, the hash function returns three values: 1, 5, and 8. As a result, we find that the value of the bit 5 is 0, which means that no value is mapped to this bit. Therefore, we can say with certainty that the value "dianping" does not exist.

If we need to check whether the value "baidu" exists, the hash function will definitely return 1, 4, and 7. Then we check and find that the values of these three bits are all 1. Can we say that "baidu" exists? The answer is no, it can only be possible that the value "baidu" exists.

Why is this? The answer is simple, because as more and more values are added, more and more bits will be set to 1. In this way, even if a value "taobao" has not been stored, if the three bits returned by the hash function are all set to 1 by other values, the program will still determine that the value "taobao" exists.

Obviously, if the Bloom filter is too small, all bits will be 1 soon, and any query value will return "may exist", which will not achieve the purpose of filtering. The length of the Bloom filter will directly affect the false alarm rate. The longer the Bloom filter, the lower the false alarm rate. In addition, the number of hash functions also needs to be weighed. The more the number, the faster the Bloom filter bit position is 1, and the lower the efficiency of the Bloom filter; but if there are too few, then our false alarm rate will become higher.

The second method is to add all keys and hot data values to the cache and intercept empty data queries at the cache layer.

2) To solve the cache breakdown problem caused by crawlers, you can set a cache strategy:

- For update operations, immediate caching is required

- For read operations, you can set whether to cache immediately or delay, and whether to cache only when the number of hits reaches a certain number within the specified time window.

3. Cache Avalanche

Cache avalanche refers to the centralized elimination of hot data, where a large number of requests are instantly transmitted to the storage layer, causing the storage layer to overload.

The main cause of cache avalanche is that the TTL mechanism is too simple. The solutions are as follows:

- When setting TTL, add a random time value to the expiration time

- Each access will re-update the TTL. In addition, the business can more accurately specify the hot data cache time.