Cache design: What is the key to a good cache design?

A cache is a place to store data temporarily. When a user queries data, the first step is to look in the cache. If the data is found there, it is used directly; if not, the query has to go back to the data's original location. A cache is therefore essentially a way of trading space for time: it speeds up data access by keeping an extra copy of the data.
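
The lookup flow described above can be sketched in a few lines of Python. This is only a minimal illustration: `LookupCache` and `slow_source` are hypothetical names, and the plain dictionary stands in for whatever storage a real cache would use.

```python
from typing import Callable, Dict


class LookupCache:
    """Check the cache first; on a miss, go back to the original source."""

    def __init__(self, load_from_source: Callable[[str], str]) -> None:
        self._load = load_from_source
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self._store:
            # Cache hit: use the stored copy directly.
            self.hits += 1
            return self._store[key]
        # Cache miss: fetch from the original location and keep a copy.
        self.misses += 1
        value = self._load(key)
        self._store[key] = value
        return value


def slow_source(key: str) -> str:
    # Hypothetical stand-in for the original data location (e.g. a database).
    return f"value-for-{key}"


cache = LookupCache(slow_source)
first = cache.get("user:1")   # miss: loaded from slow_source
second = cache.get("user:1")  # hit: served from the cache
```

The extra copy held in `_store` is the "space" being spent to save the time of calling `slow_source` again.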

However, with the continuous development of distributed and cloud computing technologies, data storage has changed enormously, and different storage technologies differ greatly in price and performance. So when you design software for performance, a poorly designed multi-level cache can waste money and still fail to deliver the performance gains you were hoping for.

The road to cache design

I will start with two questions to help you understand when cache design is called for. Then, by comparing the characteristics of different types of data, I will discuss how to design a cache well.

OK, first question: in Internet application services, is the purpose of caching only to increase access speed? I don't think so. In a distributed system, the cache mechanism is an important trade-off in system-level performance design. For example, when a database is under heavy load and close to becoming the system bottleneck, we can use caching to spread that load to other data stores. Here the main purpose of the cache is load balancing, not faster access.

The second question: a large system contains many types of data. Do you need to plan a cache mechanism for each of them? Not at all. In real business scenarios the system holds a great deal of business data, and you cannot design and implement a cache for every type. For one thing, the software cost is too high; for another, it is unlikely to bring much performance benefit. So before starting the cache design, you first need to identify which data accesses have the greatest impact on performance. So how should we identify which data needs a cache mechanism?

Once you have identified the data that needs a cache, you may find that its characteristics vary widely. If you apply the same cache design to all of it, you are unlikely to get the best performance. So here I summarize three types of data that call for a cache mechanism: immutable data, weakly consistent data, and strongly consistent data. If you understand the differences between these three types and how to design a cache for each, you will have mastered the core of cache design.

Okay, let’s take a closer look at it.

Immutable data

First is immutable data. This is data that never changes, or that does not change for a long period of time and can therefore be treated as immutable. It is the first candidate for caching, and there is a lot of it in real business scenarios. For example, static web pages and static resources in Web services, the mapping between column data and keys in database tables, and business startup configuration can all be considered immutable data.

Moreover, immutable data makes distributed consistency extremely easy to achieve. We can store it with any storage method and on any storage node, so the cache implementation can be very flexible and relatively simple. In Java, for example, you can use an in-memory cache library such as Caffeine, or simply a built-in data structure, as the cache.

Also note that when you design a cache for immutable data, entries can either never expire or expire after a period of time. With time-based expiration, you need to trade off cache capacity against access speed according to your specific business needs.
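
As a rough illustration of caching immutable data in memory, here is a minimal Python sketch built on the standard library's `functools.lru_cache`. The `column_mapping` function and the data it returns are hypothetical. Note that `lru_cache` has no time-based expiration, so this only shows the "never expire" option, with `maxsize` standing in for the capacity side of the trade-off.

```python
from functools import lru_cache
from typing import Tuple

load_calls = 0  # counts how often the slow loader actually runs


@lru_cache(maxsize=128)  # bounded capacity; maxsize=None would never evict
def column_mapping(table: str) -> Tuple[str, ...]:
    """Hypothetical loader for a table's column names (immutable data)."""
    global load_calls
    load_calls += 1
    # Stand-in for an expensive lookup against the real schema.
    return ("id", f"{table}_name", "created_at")


first = column_mapping("orders")   # runs the loader
second = column_mapping("orders")  # served from the cache
info = column_mapping.cache_info()
```

Returning a tuple rather than a list keeps the cached value itself immutable, so one caller cannot accidentally change what another caller sees.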

Weakly consistent data

The second type is weakly consistent data. This is data that changes frequently, but for which the business does not demand strict consistency. In other words, it is acceptable for different users to see different versions of the data at the same moment. Because the consistency requirement is relatively low, the cache only needs to achieve eventual consistency. This type of data is also common in practice, for example historical business analysis data or the results of some search queries. Even if the most recent data is missing, the impact is small.

There is also a quick way to identify this type of data: most data that is read from a database replica node belongs to it, because replication lag means that many replica nodes cannot satisfy strong consistency. For weakly consistent data we usually use time-based cache invalidation. And because consistency is weak, you are free to choose the storage technology, such as an in-memory cache or a distributed database cache. You can even design a multi-level cache based on load-balancing scheduling.
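
Time-based invalidation for weakly consistent data might look like the following minimal sketch. `TtlCache` is a hypothetical class, the 60-second lifetime is an arbitrary illustrative value, and the clock is injectable only so that the example can simulate the passage of time.

```python
import time
from typing import Callable, Dict, Tuple


class TtlCache:
    """Serve possibly stale values until their time-to-live runs out."""

    def __init__(
        self,
        loader: Callable[[str], str],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (expiry timestamp, cached value)
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> str:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # still fresh enough for this business
        value = self._loader(key)  # expired or missing: reload
        self._entries[key] = (now + self._ttl, value)
        return value


fake_now = [0.0]            # simulated clock, in seconds
source = {"report": "v1"}   # stand-in for the original data
cache = TtlCache(lambda k: source[k], ttl_seconds=60, clock=lambda: fake_now[0])

a = cache.get("report")     # miss: loads "v1"
source["report"] = "v2"     # the original data changes
b = cache.get("report")     # within the TTL: still the stale "v1"
fake_now[0] = 61.0
c = cache.get("report")     # TTL expired: reloads "v2"
```

The stale read in the middle is exactly the inconsistency that this type of data is allowed to tolerate.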

Strongly consistent data

The third type is strongly consistent data. This is data that changes frequently and for which the business demands extremely high data consistency. That is, once the data changes, every user should see the updated data anywhere in the system.

For this type of data I usually do not recommend using a cache, because caching it is more complicated and can easily introduce new problems. For example, if users can directly submit and modify the data and the cached copy is not updated at the same time, the data becomes inconsistent, which can lead to serious business failures.

However, in some special business scenarios, for example when individual pieces of data are accessed extremely frequently, we still need a caching mechanism to improve performance further.

Therefore, for this type of strongly consistent data, you need to pay special attention to the following two points when designing a cache mechanism:

First, the cache for this type of data must be updated synchronously with every modification. In other words, every data modification must change the cache and the database together.

Second, accurately identify how long cached data can be used within a specific business process. Some cached data (such as data needed by several steps of one REST request) can only be used within a single business process and must not be reused across business processes.
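
The first point, updating the cache and the database together, can be sketched as below. This is a simplified single-process illustration under stated assumptions: `WriteThroughStore` is a hypothetical class, a dictionary stands in for the database, and a lock makes the two updates appear as one step. A real distributed system would need a stronger mechanism than an in-process lock.

```python
import threading
from typing import Dict, Optional


class WriteThroughStore:
    """Every write updates the database and the cache in one step."""

    def __init__(self, database: Dict[str, str]) -> None:
        self._db = database               # stand-in for the real database
        self._cache: Dict[str, str] = {}
        self._lock = threading.Lock()

    def read(self, key: str) -> Optional[str]:
        with self._lock:
            if key in self._cache:
                return self._cache[key]
            value = self._db.get(key)
            if value is not None:
                self._cache[key] = value
            return value

    def write(self, key: str, value: str) -> None:
        with self._lock:
            # Database first, then cache, both under the same lock so that
            # no reader in this process can observe one without the other.
            self._db[key] = value
            self._cache[key] = value


db = {"balance:42": "100"}
store = WriteThroughStore(db)
before = store.read("balance:42")   # loads "100" into the cache
store.write("balance:42", "80")     # updates database and cache together
after = store.read("balance:42")    # sees "80", not the old cached value
```

For the second point, one simple option is to create a fresh cache object at the start of each request and discard it when the request ends, so that cached values can never leak into another business process.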

Well, the above are the cache design ideas for three typical types of data.

What you need to note here is that the purpose of using cache must be performance optimization. Therefore, you also need to use an evaluation model to analyze whether the cache has achieved the goal of performance optimization.

So what kind of evaluation model is it? Here is its formula: AMAT = Thit + MR * MP. AMAT (Average Memory Access Time) is the average time it takes to access data; Thit is the access time when the cache is hit; MR is the miss rate, that is, the fraction of accesses that are not found in the cache; MP is the miss penalty, that is, the extra time the system spends after a miss going back to the original location to fetch the data.

You may notice that the difference between AMAT and the original data access time is the speed improvement the cache brings. When a cache is used improperly, for example when the miss rate is very high, AMAT can exceed the original access time, so adding the cache actually slows data access down. Next, I will use real cache design cases to show you how to use a cache correctly and improve system performance more effectively.
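
To make the model concrete, here is a small Python calculation of AMAT for two made-up scenarios. All the numbers are illustrative assumptions, not measurements, and for simplicity the miss penalty is assumed to equal the uncached access time.

```python
import math


def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """AMAT = Thit + MR * MP, with all times in the same unit."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


ORIGINAL_MS = 20.0  # assumed time to read the data without any cache

# A well-chosen cache: fast hits and few misses.
good = amat(hit_time=1.0, miss_rate=0.1, miss_penalty=ORIGINAL_MS)

# A poorly chosen cache: slower hits and almost every access misses.
bad = amat(hit_time=2.0, miss_rate=0.95, miss_penalty=ORIGINAL_MS)

print(f"good cache: {good:.1f} ms, bad cache: {bad:.1f} ms, "
      f"no cache: {ORIGINAL_MS:.1f} ms")
```

In the first scenario the average access time drops from 20 ms to about 3 ms. In the second it rises to about 21 ms, which is worse than having no cache at all.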

Typical usage scenarios for cache design

Before I start, I should point out that real business offers many scenarios for cache design. My aim here is mainly to make the method of cache design clear to you.

So I will use two typical scenarios to help you understand how caches are used.

How to design cache for static pages?

In Web application services, one important scenario is caching static pages. A static page here means a page that looks the same to every user and usually does not change unless the site is redeployed, such as the home page of most company websites. Static pages usually receive a large number of concurrent visits. Without caching, users will see long response delays and the backend service will come under heavy load.

So for static pages, when we use caching technology, we can reduce the transmission delay of page data on the network by placing the static cache closer to the user.

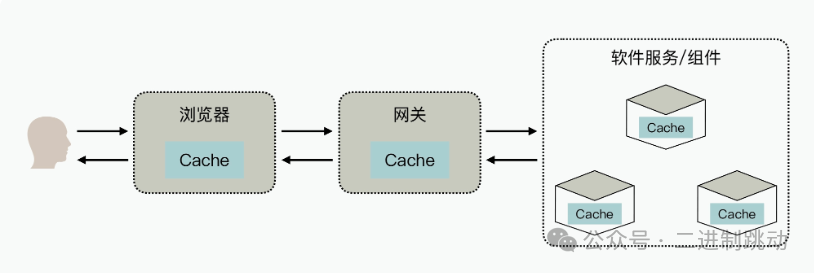

Now, let's look at a diagram of a cache design for static pages:

picture

picture

As shown in the figure above, for static web pages you can first cache inside the backend service instance, so that the page is not regenerated on every request. Since static web pages are immutable data, a memory-level or file-level cache is enough.

For static pages with a large number of visits, you can further reduce the pressure on the backend service by placing the pages at the gateway and using a third-party framework such as OpenResty to cache them there.

In addition, many static pages and the static resource files they reference should also use the browser cache to improve performance further. Note that I am not suggesting a three-layer cache for every static page. The point is that, at the software design stage, you should normally consider how static pages will be cached.
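
Two of these layers, the in-memory cache in the backend instance and the browser cache, can be sketched together in Python. The function names and the one-hour lifetime are hypothetical, and the gateway layer is not shown. The browser side relies on the standard HTTP `Cache-Control` response header.

```python
from functools import lru_cache
from typing import Dict, Tuple

render_calls = 0  # counts how often the page is actually generated


@lru_cache(maxsize=None)
def render_home_page() -> bytes:
    """Hypothetical page generator; cached in memory after the first call."""
    global render_calls
    render_calls += 1
    return b"<html><body>Welcome</body></html>"


def serve_home_page(max_age_seconds: int = 3600) -> Tuple[Dict[str, str], bytes]:
    """Return response headers and body for the static home page."""
    body = render_home_page()  # backend layer: in-memory cache
    headers = {
        "Content-Type": "text/html; charset=utf-8",
        # Browser layer: allow the response to be reused for max_age_seconds.
        "Cache-Control": f"public, max-age={max_age_seconds}",
    }
    return headers, body


headers1, body1 = serve_home_page()
headers2, body2 = serve_home_page()  # page is not regenerated
```

Because the page only changes on redeployment, the in-memory copy never needs to expire while the process is running.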

How does the backend service design a multi-level cache mechanism for the database?

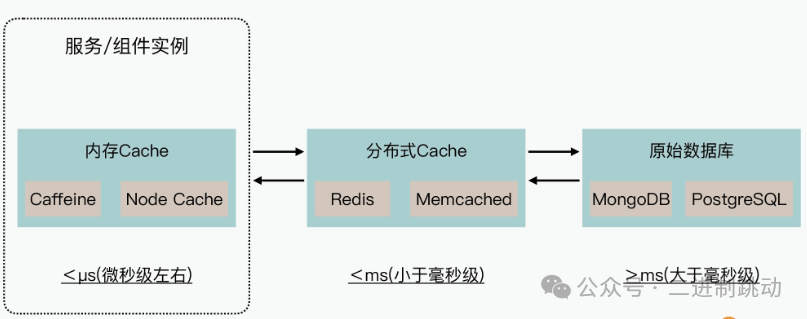

Another typical caching scenario is database caching. Today's databases are usually distributed and large in scale. It takes a certain period of time to query and analyze large-scale data. Therefore, we can first identify the data in these calculation results that can use the cache mechanism, and then use the cache to improve access speed. The following is a schematic diagram of the database cache mechanism:

picture

picture

As the figure shows, both the memory-level cache and the distributed cache can hold the results of data computation and analysis. The access speeds of caches at different levels differ, though. A memory-level cache can respond in microseconds or even less; a distributed cache usually responds in under a millisecond; a native database query or analysis usually takes more than a millisecond.

Therefore, when planning the cache mechanism, follow the principles I introduced earlier: identify the data type first, and then select and design the cache implementation.
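
A multi-level lookup of this kind can be sketched as follows. This is a simplified illustration: `MultiLevelCache` is a hypothetical class, plain dictionaries stand in for both the memory-level cache and the distributed cache, and a lambda stands in for the database query. Invalidation is left out to keep the example short.

```python
from typing import Callable, Dict, List


class MultiLevelCache:
    """Look in the local cache, then the shared cache, then the database."""

    def __init__(
        self,
        local: Dict[str, str],
        shared: Dict[str, str],
        query_database: Callable[[str], str],
    ) -> None:
        self._local = local      # per-instance, in-memory level
        self._shared = shared    # stand-in for a distributed cache
        self._query = query_database
        self.trace: List[str] = []  # records which level answered

    def get(self, key: str) -> str:
        if key in self._local:
            self.trace.append("local")
            return self._local[key]
        if key in self._shared:
            self.trace.append("shared")
            value = self._shared[key]
            self._local[key] = value  # promote to the faster level
            return value
        self.trace.append("database")
        value = self._query(key)
        self._shared[key] = value
        self._local[key] = value
        return value


shared: Dict[str, str] = {}
instance_a = MultiLevelCache({}, shared, lambda k: f"result({k})")
instance_b = MultiLevelCache({}, shared, lambda k: f"result({k})")

instance_a.get("daily-sales")  # answered by the database
instance_a.get("daily-sales")  # answered by instance A's local cache
instance_b.get("daily-sales")  # answered by the shared cache
```

The second service instance never touches the database, which shows how the shared level takes load off the database even when the local level is cold.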

When optimizing access speed with a cache, we mainly care about the speedup that each cache level brings. Note that different cache levels can also live inside a single database.

For example, in a performance optimization project I helped design, the cache strategy was to use another collection in MongoDB as a cache for query and analysis results. So when designing a cache, focus on the different types of data and on the performance evaluation model for each cache level, rather than on the database alone. Only then can you design a cache solution that performs really well.