LLM context window exceeds 2 million tokens! No architectural changes or complex fine-tuning required, easily extends 8x

Large language models (LLMs) often pursue longer "context windows". However, because of the high cost of fine-tuning, the scarcity of long texts, and the catastrophic values introduced by new token positions, the context windows of current models do not exceed 128k tokens.

Recently, researchers from Microsoft Research proposed a new method, LongRoPE, which for the first time extends the context window of pre-trained LLMs to 2048k tokens. It requires only 1000 fine-tuning steps at a training length of 256k, while maintaining performance in the original short context window.

Paper link: https://arxiv.org/abs/2402.13753

Code link: https://github.com/microsoft/LongRoPE

LongRoPE mainly contains three key innovation points:

1. It identifies and exploits two non-uniformities in positional interpolation through an efficient search. This provides a better initialization for fine-tuning and enables an 8x extension without fine-tuning;

2. It introduces a progressive extension strategy: first fine-tune the LLM at a 256k length, then perform a second positional interpolation on the fine-tuned extended LLM to reach a 2048k context window;

3. It readjusts LongRoPE at an 8k length to recover performance in the short context window.
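The progressive strategy can be checked with simple arithmetic. The sketch below assumes LLaMA2's original 4k (4096-token) window and uses the 256k and 2048k stage lengths from the list above. `extension_ratios` is a hypothetical helper, not part of the LongRoPE code.

```python
# Hypothetical helper (not from the LongRoPE codebase): per-stage extension ratios.
def extension_ratios(original: int, stages: list[int]) -> list[float]:
    """Ratio of each stage's context length to the previous stage's length."""
    ratios = []
    prev = original
    for target in stages:
        ratios.append(target / prev)
        prev = target
    return ratios


K = 1024
base_window = 4 * K           # LLaMA2 pre-training context window (assumed 4096 tokens)
stages = [256 * K, 2048 * K]  # stage 1: fine-tune to 256k; stage 2: interpolate to 2048k

print(extension_ratios(base_window, stages))  # [64.0, 8.0]
print(stages[-1] / base_window)               # 512.0
```

Under these assumptions, the second stage is only an 8x jump on top of an already fine-tuned 256k model. This matches the 8x factor that item 1 reports reaching without fine-tuning.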

Extensive experiments on various tasks on LLaMA2 and Mistral demonstrate the effectiveness of this approach.

Models extended by LongRoPE retain the original architecture, with only minor modifications to the positional embedding, so most existing optimizations can be reused.

Non-uniformity in position interpolation

The Transformer model requires explicit position information, usually in the form of positional embeddings that represent the order of input tokens.

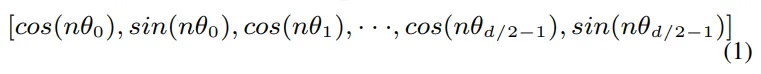

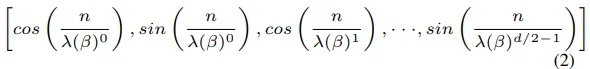

The positional embedding used in this article is based mainly on RoPE. For a token at position index n, its RoPE encoding can be simplified as follows:

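$$\mathbf{x}_n = \left[\cos(n\theta_0),\ \sin(n\theta_0),\ \cos(n\theta_1),\ \sin(n\theta_1),\ \cdots,\ \cos(n\theta_{d/2-1}),\ \sin(n\theta_{d/2-1})\right]$$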

Here, $d$ is the embedding dimension, $n\theta_i$ is the rotation angle of the token at position $n$, and $\theta_i = \theta^{-2i/d}$ is the rotation frequency. In RoPE, the default base value of $\theta$ is 10000.
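To make these definitions concrete, here is a minimal sketch that computes the frequencies θ_i and the simplified per-position RoPE vector. The function names are hypothetical. In real Transformer implementations, these angles rotate pairs of query/key components rather than being used as a standalone vector.

```python
import math


# Hypothetical helper: RoPE frequencies theta_i = base ** (-2i/d).
def rope_frequencies(d: int, base: float = 10000.0) -> list[float]:
    """theta_i = base ** (-2i/d) for i = 0, ..., d/2 - 1."""
    return [base ** (-2 * i / d) for i in range(d // 2)]


# Hypothetical helper: the simplified RoPE vector for one position.
def rope_encoding(n: int, d: int, base: float = 10000.0) -> list[float]:
    """[cos(n*theta_0), sin(n*theta_0), ..., cos(n*theta_{d/2-1}), sin(n*theta_{d/2-1})]."""
    out = []
    for theta_i in rope_frequencies(d, base):
        angle = n * theta_i  # rotation angle of the token at position n
        out.extend([math.cos(angle), math.sin(angle)])
    return out


print(rope_frequencies(8))        # decreasing frequencies, starting at 1.0
print(len(rope_encoding(5, 8)))   # 8
```

Low-index dimensions rotate quickly (θ_0 = 1), while high-index dimensions rotate slowly. This is why interpolation methods can treat different dimensions differently.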

Inspired by NTK and YaRN, the researchers noticed that both methods gain performance from nonlinear embeddings. The gains come especially from treating the different frequencies across RoPE dimensions differently, with specialized interpolation and extrapolation.

However, these nonlinear schemes rely heavily on hand-designed rules.

This naturally leads to two questions:

1. Is the current position interpolation optimal?

2. Are there unexplored nonlinearities?

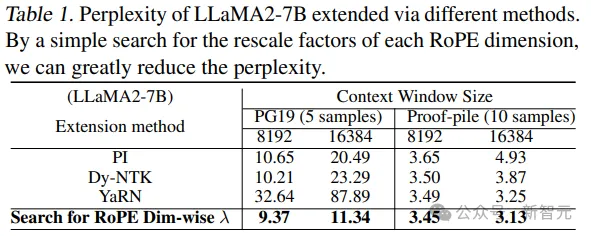

To answer these questions, the researchers used evolutionary search to find better non-uniform positional interpolations for LLaMA2-7B. The search was guided by perplexity, computed on 5 random samples from the PG19 validation set.
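The search procedure is not spelled out here, so the following is only a generic elitist evolutionary loop over per-dimension rescale factors that minimizes a fitness score. `evolve`, its parameters, and the toy fitness are all hypothetical. In the setting above, the fitness would be LLaMA2-7B's perplexity on the 5 PG19 samples. LongRoPE's actual search operators and constraints are described in the paper and are not reproduced here.

```python
import random


# Hypothetical sketch of an elitist evolutionary search (not LongRoPE's code).
def evolve(fitness, dim, pop_size=16, n_parents=4, generations=30,
           low=1.0, high=8.0, sigma=0.3, seed=0):
    """Minimize `fitness` over vectors of per-dimension rescale factors in [low, high]."""
    rng = random.Random(seed)

    def clip(x):
        return min(high, max(low, x))

    # Random initial population of candidate factor vectors.
    pop = [[rng.uniform(low, high) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness)        # lower score (e.g. perplexity) is better
        parents = pop[:n_parents]    # keep the best candidates unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            parent = rng.choice(parents)
            # Mutate: add Gaussian noise to every factor, then clip to range.
            children.append([clip(x + rng.gauss(0.0, sigma)) for x in parent])
        pop = parents + children
    return min(pop, key=fitness)


# Toy stand-in for "perplexity on 5 PG19 samples": distance to made-up factors.
target = [1.0, 1.5, 3.0, 6.0]


def toy_ppl(lambdas):
    return sum((a - b) ** 2 for a, b in zip(lambdas, target))


best = evolve(toy_ppl, dim=4)
print([round(x, 2) for x in best], round(toy_ppl(best), 4))
```

Because the best candidates are always carried over, the best score never gets worse from one generation to the next. The trade-off is that the population can converge early if `sigma` is too small.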

Through empirical analysis, the researchers summarized several main findings.

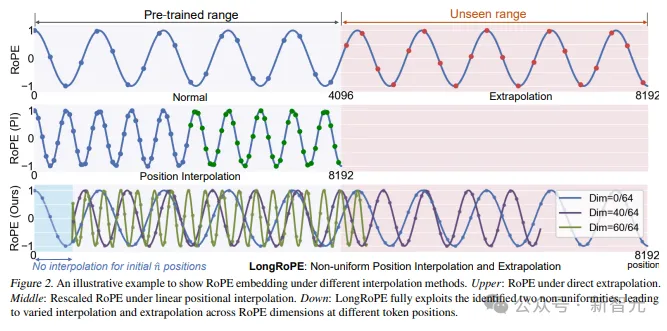

Finding 1: RoPE dimensions exhibit substantial non-uniformity, which current positional interpolation methods cannot handle effectively.

The researchers searched for the optimal rescale factor λ for each RoPE dimension in Equation 2.
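Equation 2 is not reproduced here, so the sketch below assumes a common form in which the rotation angle of dimension i is divided by its own rescale factor λ_i (λ_i = 1 means no interpolation). `rescaled_angles` is a hypothetical helper. Setting every λ_i to the same value s recovers linear PI.

```python
# Hypothetical helper: per-dimension rescaled RoPE angles (assumed form of Equation 2).
def rescaled_angles(n: float, d: int, lambdas: list[float],
                    base: float = 10000.0) -> list[float]:
    """Angle n * theta_i / lambda_i for each of the d/2 RoPE frequencies."""
    if len(lambdas) != d // 2:
        raise ValueError("need exactly one rescale factor per frequency (d/2)")
    return [n * base ** (-2 * i / d) / lam for i, lam in enumerate(lambdas)]


# Uniform factors behave like PI: position 8 with lambda = 4 everywhere
# gets the same angles as position 2 with no interpolation.
pi_like = rescaled_angles(8, 8, [4.0] * 4)
plain = rescaled_angles(2, 8, [1.0] * 4)
print(all(abs(a - b) < 1e-12 for a, b in zip(pi_like, plain)))  # True

# Non-uniform factors (made-up values) interpolate each dimension differently.
print(rescaled_angles(8, 8, [1.0, 1.5, 3.0, 4.0]))
```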

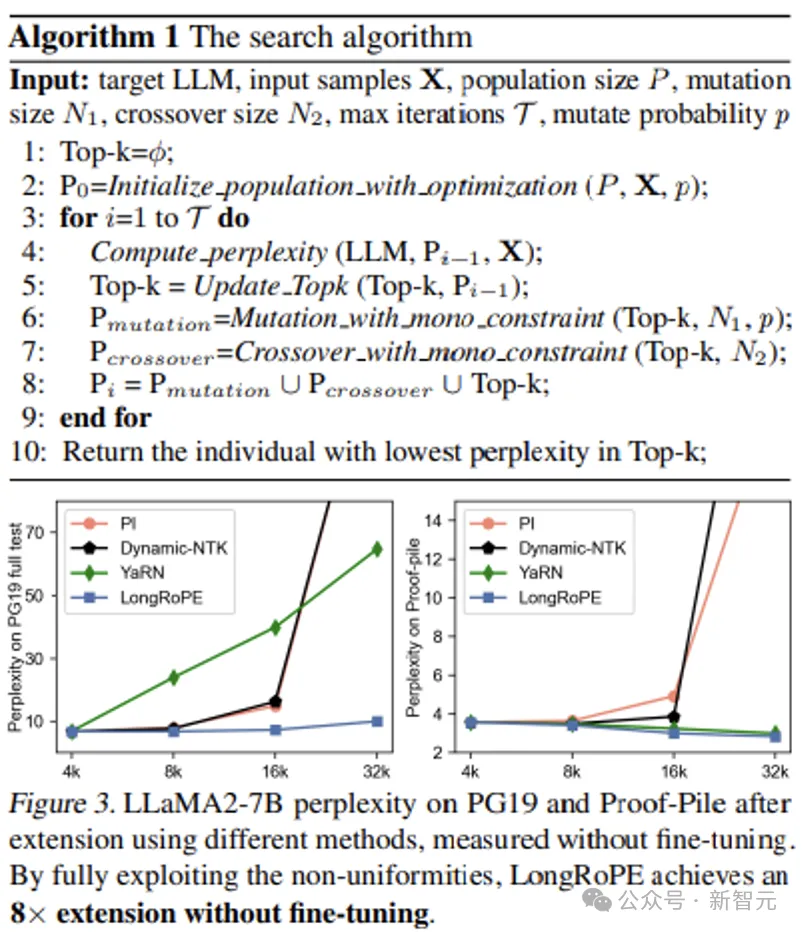

The researchers compared the perplexity of LLaMA2-7B under different methods on the PG19 and Proof-pile test sets, without fine-tuning.

The results show that the searched solutions deliver significant improvements. This indicates that current linear (PI, Positional Interpolation) and non-uniform (Dynamic-NTK and YaRN) interpolation methods are suboptimal.

It is worth noting that YaRN performs worse than PI and NTK on PG19 because, without fine-tuning, it cannot reach the target context window length.

For example, at a context size of 8k, YaRN's perplexity spikes after 7k.

After the search, the rescale factors λ in Equation 2 become non-uniform. This differs from the fixed scale s in PI, the formula-based calculation in NTK, and the group-wise calculation in YaRN.
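To see how a searched non-uniform λ differs from these fixed rules, it helps to write PI and NTK as per-dimension factors. The sketch assumes the static NTK-aware formula base' = base · s^(d/(d−2)), which is equivalent to dividing θ_i by s^(2i/(d−2)). Dynamic-NTK instead recomputes s from the current sequence length, and YaRN's group-wise rule is not shown. The function names are hypothetical.

```python
# Hypothetical helpers: per-dimension interpolation factors implied by PI and NTK.
def pi_factors(d: int, s: float) -> list[float]:
    # PI: every frequency is interpolated by the same fixed scale s.
    return [float(s)] * (d // 2)


def ntk_factors(d: int, s: float) -> list[float]:
    # Static NTK-aware scaling: raising the base to base * s**(d / (d - 2))
    # divides theta_i by s**(2i / (d - 2)). The highest frequency (i = 0) is
    # left as-is (pure extrapolation); the lowest (i = d/2 - 1) is scaled by s.
    return [s ** (2 * i / (d - 2)) for i in range(d // 2)]


d, s = 8, 4.0
print(pi_factors(d, s))   # [4.0, 4.0, 4.0, 4.0]
print(ntk_factors(d, s))  # rises from 1.0 to 4.0
```

Both rules are fixed functions of s and i. The searched λ, by contrast, can take any value per dimension, which is the non-uniformity described in Finding 1.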

In both 8k and 16k context windows, these non-uniform factors significantly improve LLaMA2's language modeling performance (i.e., perplexity) without fine-tuning. The main reason is that the resulting positional embeddings effectively preserve the original RoPE, especially in the key dimensions. This makes it easier for the LLM to distinguish nearby token positions.

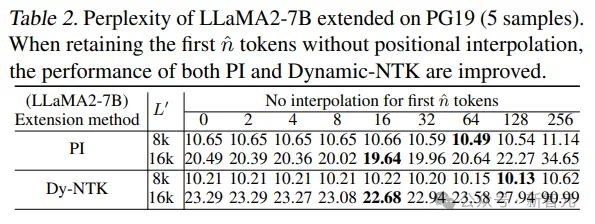

Finding 2: RoPE for the initial tokens in the input sequence should be interpolated less.

For the initial n tokens of the input sequence, the researchers hypothesized that RoPE should apply less interpolation. These tokens receive large attention scores and are therefore crucial to the attention layers, as observed in StreamingLLM and LM-Infinite.

To verify this, the researchers used PI and NTK to extend the context window to 8k and 16k, while keeping the first n (0, 2, ..., 256) tokens uninterpolated. When n = 0, this reduces to the original PI and NTK.
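For PI, the setup above can be sketched as follows. Positions below the cutoff keep their original index, while the rest are divided by the scale s. `pi_positions` is a hypothetical helper, and it names the number of initial tokens `n_hat` to avoid clashing with the position index.

```python
# Hypothetical helper: linear PI positions with the first n_hat tokens left uninterpolated.
def pi_positions(seq_len: int, s: float, n_hat: int = 0) -> list[float]:
    """Positions fed to RoPE under linear PI with scale s."""
    return [float(n) if n < n_hat else n / s for n in range(seq_len)]


print(pi_positions(6, 2))           # [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]  (plain PI)
print(pi_positions(6, 2, n_hat=2))  # [0.0, 1.0, 1.0, 1.5, 2.0, 2.5]
```

With `n_hat = 0`, the function reduces to plain PI. Otherwise, the first tokens keep their exact original positions, so the mapping jumps at the cutoff: in the second example, positions 1 and 2 both map to 1.0.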

Two results can be observed in the above table:

1. Leaving the initial tokens uninterpolated does improve performance.

2. The optimal number of initial tokens n depends on the target extension length.

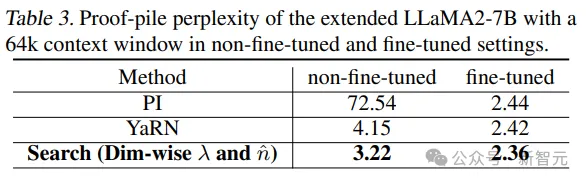

Finding 3: Non-uniform positional interpolation can effectively extend the LLM context window in both fine-tuned and non-fine-tuned settings.

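It has been shown that, without fine-tuning, the searched non-uniform positional interpolation significantly improves extension performance at 8k and 16k. Longer extensions, however, require fine-tuning.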

The researchers therefore fine-tuned LLaMA2-7B to a 64k context window using the searched RoPE.

The results show that this method significantly outperforms PI and YaRN, both before and after fine-tuning LLaMA2-7B. The main reason is that it makes effective use of non-uniform positional interpolation, minimizes information loss, and provides a better initialization for fine-tuning.

Inspired by these findings, the researchers proposed LongRoPE. It introduces an efficient search algorithm that fully exploits these two non-uniformities and extends the LLM context window to 2 million tokens.

Please refer to the original paper for the formal algorithm.

Experimental results

The researchers applied LongRoPE to the LLaMA2-7B and Mistral-7B models and evaluated their performance from three aspects:

1. Perplexity of the extended-context LLMs on long documents;

2. The passkey retrieval task, which measures a model's ability to retrieve a simple passkey from a large amount of irrelevant text;

3. Standard LLM benchmarks within a 4096-token context window.
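The passkey task can be illustrated with a small prompt generator. The template below is a hypothetical sketch: the filler sentences, the wording, and the helper names are assumptions modeled on the commonly used format, not taken from the LongRoPE evaluation code. Longer contexts are built by repeating more filler, and accuracy is the fraction of prompts for which the model's answer contains the key.

```python
import random

# Hypothetical filler text repeated to pad the context with irrelevant content.
FILLER = "The grass is green. The sky is blue. The sun is yellow. Here we go. There and back again. "


# Hypothetical helper: hide a random 5-digit passkey at a random spot in the filler.
def make_passkey_prompt(n_filler: int, seed: int = 0) -> tuple[str, int]:
    rng = random.Random(seed)
    passkey = rng.randint(10000, 99999)
    parts = [FILLER] * n_filler
    needle = f"The pass key is {passkey}. Remember it. {passkey} is the pass key. "
    parts.insert(rng.randint(0, n_filler), needle)
    prompt = ("There is important info hidden inside a lot of irrelevant text. "
              "Find it and memorize it. "
              + "".join(parts)
              + "What is the pass key? The pass key is")
    return prompt, passkey


def is_correct(model_answer: str, passkey: int) -> bool:
    # Count a retrieval as correct if the passkey appears in the model's answer.
    return str(passkey) in model_answer


prompt, key = make_passkey_prompt(n_filler=50)
print(len(prompt), key)
```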

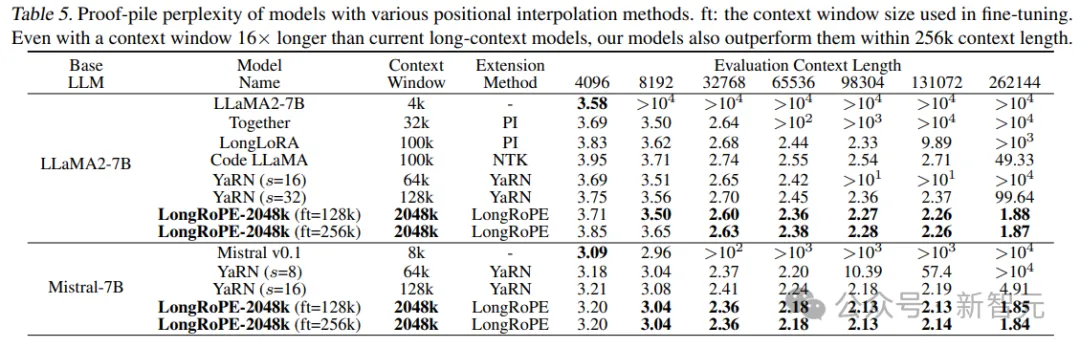

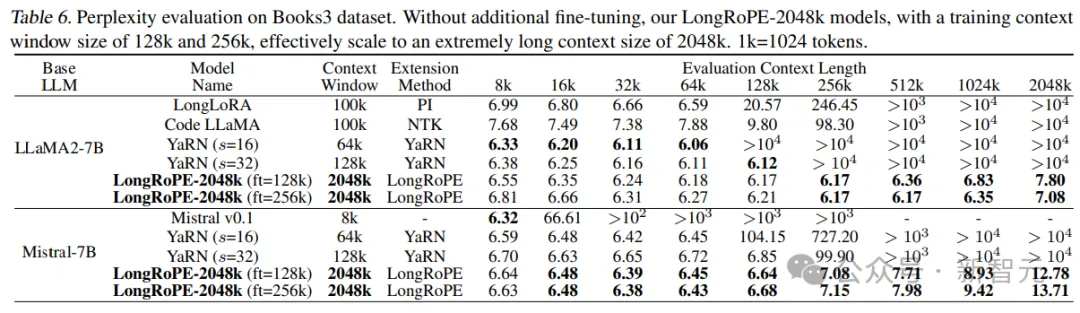

Long sequence language modeling in the 256k range

Perplexity of LLaMA2 and Mistral extended by different interpolation methods on Proof-pile and PG19.

Two key conclusions can be drawn from the experimental results:

1. As the evaluation length grows from 4k to 256k, the extended models show an overall downward trend in perplexity, indicating that they can make use of longer context;

2. The LongRoPE-2048k models have a context window 16x longer, a setting in which performance at shorter context lengths is usually hard to maintain. Even so, they still outperform state-of-the-art baseline models within a 256k context length.
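Since all of these comparisons use perplexity, here is the standard definition as a small sketch: the exponential of the average negative log-likelihood that a model assigns to the actual next tokens. The input, a list of per-token natural-log probabilities, is assumed to come from the model being evaluated.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """exp(mean negative log-likelihood) over the scored tokens (natural log)."""
    if not token_logprobs:
        raise ValueError("need at least one scored token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


# A model that assigns probability 0.5 to every token has perplexity 2;
# one that assigns 0.25 has perplexity 4. Lower is better.
print(perplexity([math.log(0.5)] * 4))
print(perplexity([math.log(0.25)] * 4))
```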

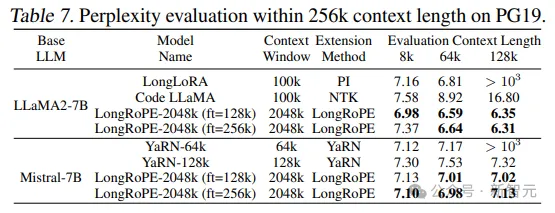

Long sequence language modeling beyond 2000k

To evaluate effectiveness on extremely long documents, the researchers used the Books3 dataset.

For evaluation efficiency, 20 books were randomly selected, each longer than 2048k tokens, and evaluated with a 256k sliding window.
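Sliding-window evaluation can be sketched as a schedule of windows over a long token sequence. In the generator below, each window feeds up to `window` tokens to the model, but only tokens not already scored count toward the loss. `sliding_windows` is a hypothetical helper, and the stride is an assumed parameter that the article does not state.

```python
# Hypothetical helper: window schedule for sliding-window perplexity evaluation.
def sliding_windows(num_tokens: int, window: int, stride: int):
    """Yield (begin, end, first_scored) triples.

    The model reads tokens[begin:end]; only tokens[first_scored:end] count
    toward the loss, so every token is scored exactly once while seeing up
    to `window` tokens of context.
    """
    if not 0 < stride <= window:
        raise ValueError("stride must be in (0, window]")
    prev_end = 0
    for begin in range(0, num_tokens, stride):
        end = min(begin + window, num_tokens)
        yield begin, end, prev_end
        prev_end = end
        if end == num_tokens:
            break


print(list(sliding_windows(10, window=4, stride=2)))
# [(0, 4, 0), (2, 6, 4), (4, 8, 6), (6, 10, 8)]
```

For a 2048k-token book with a 256k window, one would call `sliding_windows(2048 * 1024, 256 * 1024, stride)` and combine the per-window losses, for example with the perplexity definition given earlier. A smaller stride gives each scored token more left context, at the cost of more forward passes.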

As can be seen from the results, LongRoPE successfully extends the context window of LLaMA2-7B and Mistral-7B to 2048k, while also achieving perplexity comparable to or better than the baseline at shorter lengths of 8k-128k.

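A notable performance difference between the 2048k LLaMA2 and Mistral models can also be observed. Mistral outperforms the baselines at shorter lengths, but its perplexity reaches 7 at lengths beyond 256k.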

LLaMA2 performs as expected: perplexity decreases as the context grows longer, with slight increases at 1024k and 2048k.

Furthermore, on LLaMA2, LongRoPE-2048k performs better with a fine-tuning length of 256k than with 128k. This is mainly because of the smaller secondary extension ratio (8x versus 16x).

In contrast, Mistral performs better when fine-tuned with a 128k window size. For Mistral's 128k and 256k fine-tuning, the researchers followed YaRN's settings and used a 16k training length. This limited Mistral's ability to extend its context window further after fine-tuning.