GPT-4 Turbo reclaims the throne and ChatGPT gets a free upgrade! Math surges 10%, long-context performance dominates

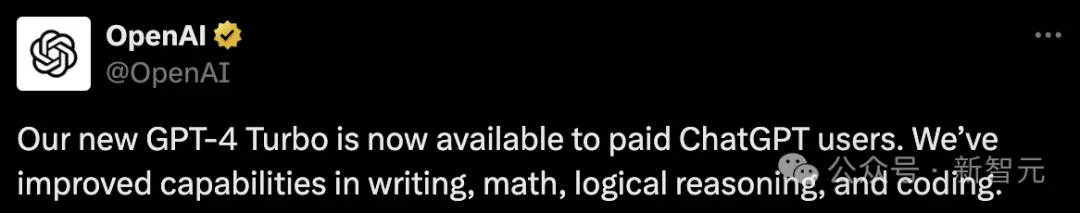

Powered by GPT-4 Turbo, ChatGPT now has improved writing, math, logical reasoning, and coding capabilities.

The editor ran a quick test and found that ChatGPT's most recent data now extends to April.

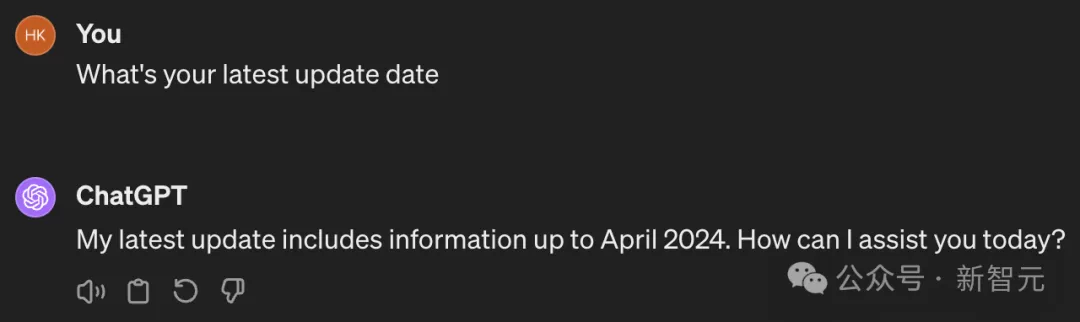

According to benchmark results, GPT-4 Turbo's math ability has improved significantly over the previous generation.

Unsurprisingly, the new GPT-4 Turbo once again topped the large-model leaderboard today.

Even Sam Altman himself said, "GPT-4 is now smarter and more pleasant to use."

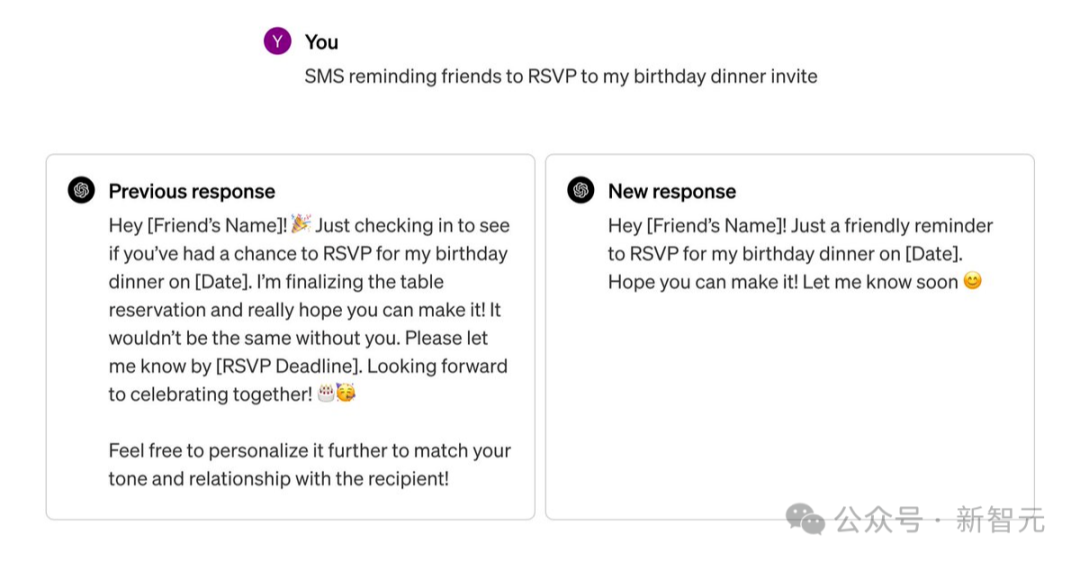

In addition, according to OpenAI, GPT-4 Turbo's replies are now more direct, less verbose, and more conversational.

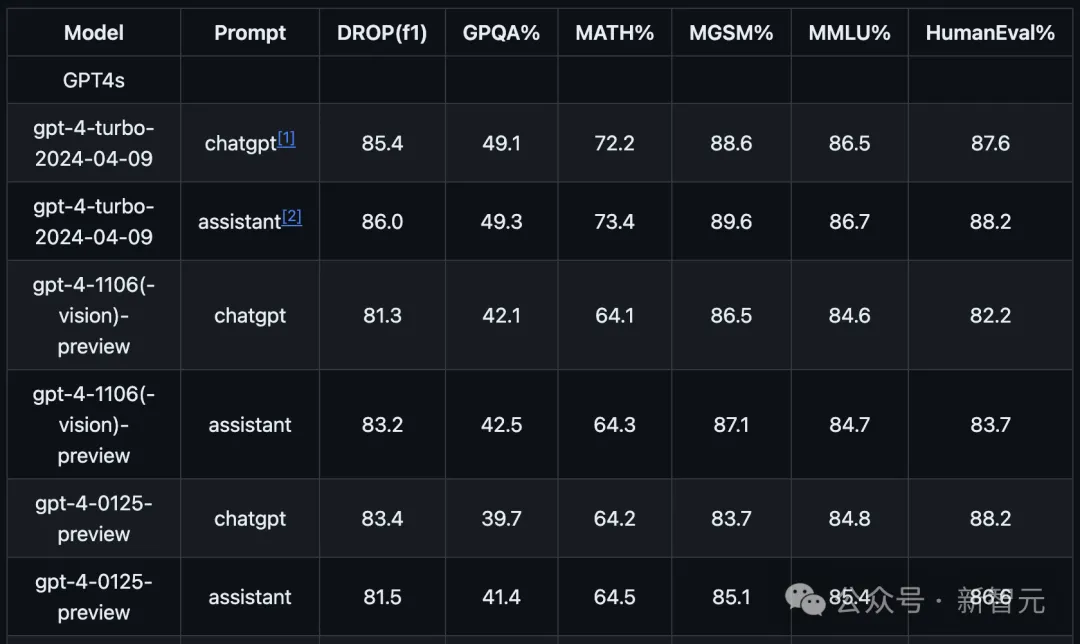

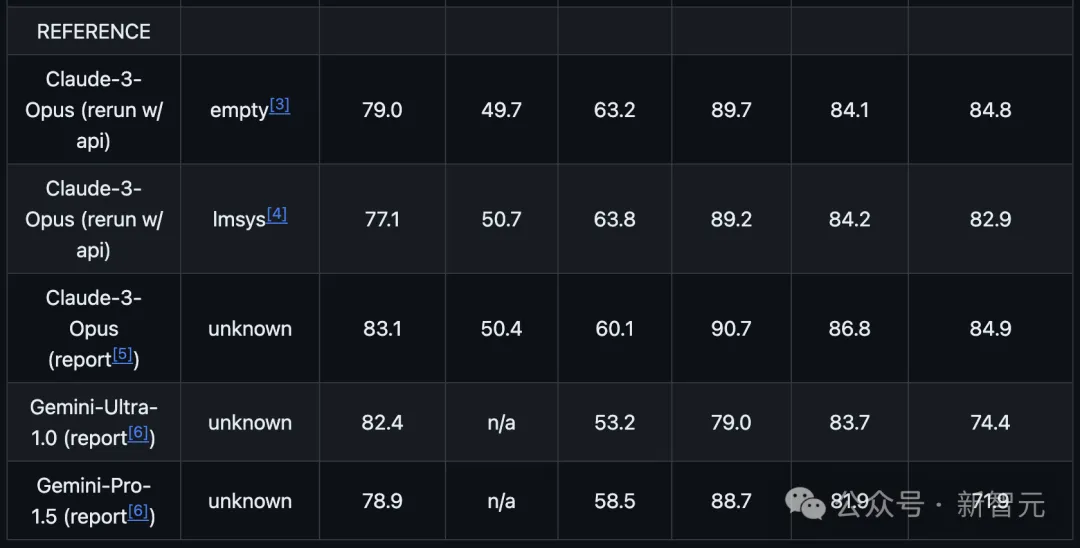

So how does GPT-4 Turbo perform on benchmarks? Let's take a look.

Math performance improved by nearly 10%

On its official public GitHub, OpenAI released the latest evaluation results for gpt-4-turbo-2024-04-09.

The model was evaluated mainly on the following seven benchmarks:

- MMLU (Measuring Massive Multitask Language Understanding)

- MATH (Measuring Mathematical Problem Solving With the MATH Dataset)

- GPQA (A Graduate-Level Google-Proof Q&A Benchmark)

- DROP (A Reading Comprehension Benchmark Requiring Discrete Reasoning Over Paragraphs)

- MGSM (Multilingual Grade School Math Benchmark, from "Language Models are Multilingual Chain-of-Thought Reasoners")

- HumanEval (Evaluating Large Language Models Trained on Code)

- MMMU (A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI)

In this GitHub repository, OpenAI mainly uses a zero-shot, chain-of-thought (CoT) setting with simple instructions such as "solve the following multiple-choice question."

This prompting approach more realistically reflects how the model performs in actual use.
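To make the zero-shot CoT setup concrete, here is a minimal Python sketch of how such a multiple-choice evaluation could be wired up. The template wording, the "Answer: X" convention, and the helper names `build_prompt`, `extract_answer`, and `score` are illustrative assumptions, not OpenAI's actual code; the point is only that the prompt contains no solved examples (zero-shot) and asks the model to reason before committing to an answer (CoT).

```python
import re
from typing import List, Optional

# Assumed template: one plain instruction, no worked examples (zero-shot),
# and a request to reason first (chain-of-thought) before a final answer line.
TEMPLATE = (
    "Solve the following multiple-choice question. Think step by step, then end "
    "your response with a line of the form 'Answer: X', where X is one of A, B, C, D.\n\n"
    "{question}\n\n"
    "A) {a}\nB) {b}\nC) {c}\nD) {d}"
)

# Hypothetical answer convention: the letter after "Answer:" (case-sensitive).
ANSWER_RE = re.compile(r"Answer\s*:\s*([ABCD])")


def build_prompt(question: str, choices: List[str]) -> str:
    """Fill the zero-shot template; expects exactly four answer choices."""
    if len(choices) != 4:
        raise ValueError("expected exactly four answer choices")
    a, b, c, d = choices
    return TEMPLATE.format(question=question, a=a, b=b, c=c, d=d)


def extract_answer(response: str) -> Optional[str]:
    """Take the last 'Answer: X' so the reasoning text before it is ignored."""
    matches = ANSWER_RE.findall(response)
    return matches[-1] if matches else None


def score(responses: List[str], gold: List[str]) -> float:
    """Accuracy: share of responses whose extracted letter matches the gold letter."""
    correct = sum(extract_answer(r) == g for r, g in zip(responses, gold))
    return correct / len(gold)
```

With this setup, a reply ending in "Answer: B" is graded as B no matter how much reasoning precedes it. Parsing only the final answer line is one way to let the model think out loud while still grading automatically.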

The specific results are as follows:

The latest gpt-4-turbo shows a significant improvement over the previous GPT-4 models.

Its math performance in particular improved by nearly 10%.

Overall, the new model essentially outperforms Claude 3 Opus and Gemini 1.5 Pro across the board.

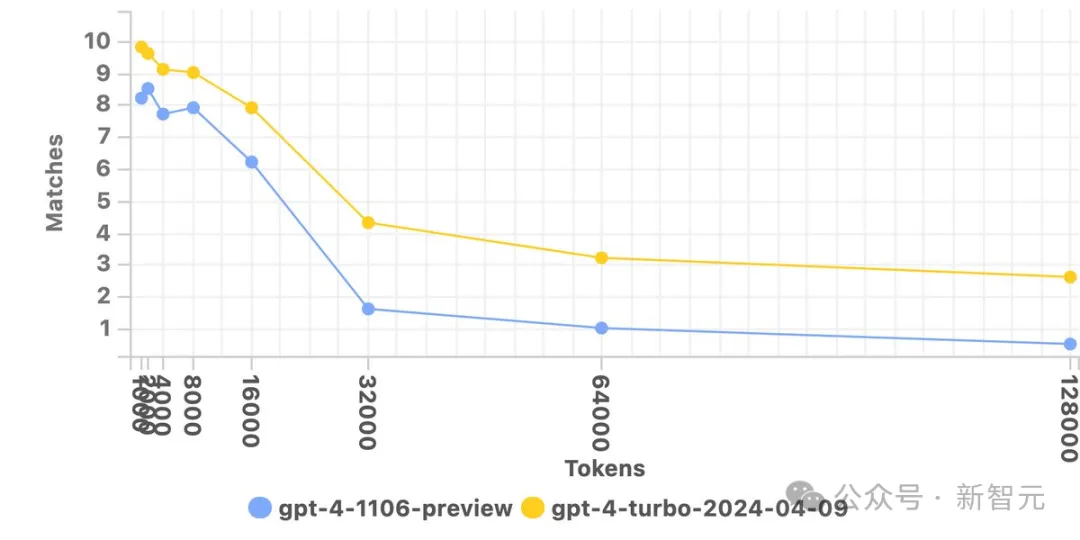

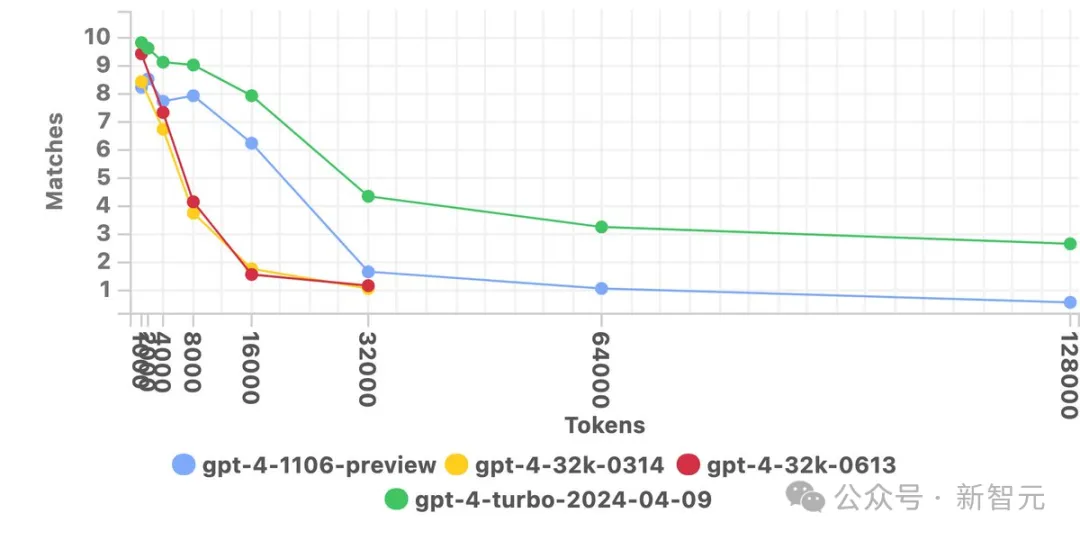

Needle in a haystack: 4.3 times better than the original GPT-4

Likewise, in the needle-in-a-haystack test, the latest gpt-4-turbo outperformed the earlier 1106-preview across the board.

As is well known, the longer the context, the harder the task for the model.

At 64k tokens, gpt-4-turbo performs on par with the preview version at 26k tokens.
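For readers unfamiliar with the test, the needle-in-a-haystack procedure can be sketched roughly as follows: a short fact (the "needle") is buried at a chosen depth inside long filler text, the model is asked to retrieve it, and this is repeated over a grid of context lengths and depths. This Python sketch is an illustration under stated assumptions, not the harness behind the results above: `ask_model` stands in for a hypothetical model call, and context length is counted in filler sentences rather than real tokens.

```python
from typing import Callable, Dict, List, Tuple


def build_haystack(filler: List[str], needle: str, depth: float) -> str:
    """Insert the needle at a relative depth: 0.0 = start of the text, 1.0 = end."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = round(depth * len(filler))
    return " ".join(filler[:pos] + [needle] + filler[pos:])


def retrieved(answer: str, expected: str) -> bool:
    """Crude pass/fail check: does the answer mention the expected fact?"""
    return expected.lower() in answer.lower()


def sweep(
    ask_model: Callable[[str], str],  # hypothetical: prompt in, model reply out
    filler: List[str],
    needle: str,
    question: str,
    expected: str,
    lengths: List[int],   # haystack sizes in filler sentences (a stand-in for tokens)
    depths: List[float],  # relative needle positions to try
) -> Dict[Tuple[int, float], bool]:
    """Run one retrieval trial per (length, depth) cell of the grid."""
    results: Dict[Tuple[int, float], bool] = {}
    for n in lengths:
        for d in depths:
            haystack = build_haystack(filler[:n], needle, d)
            prompt = f"{haystack}\n\nUsing only the text above, answer: {question}"
            results[(n, d)] = retrieved(ask_model(prompt), expected)
    return results
```

Each `(length, depth)` cell of the result corresponds to one cell of a needle-in-a-haystack chart; comparing models then comes down to how many cells still pass as the context grows.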

Looking back, GPT-4 was first released about a year ago.

In the 32k configuration, the latest gpt-4-turbo performs about 4.3 times better than the original GPT-4.

Incidentally, the maximum context that model could handle back then was only 32k tokens.

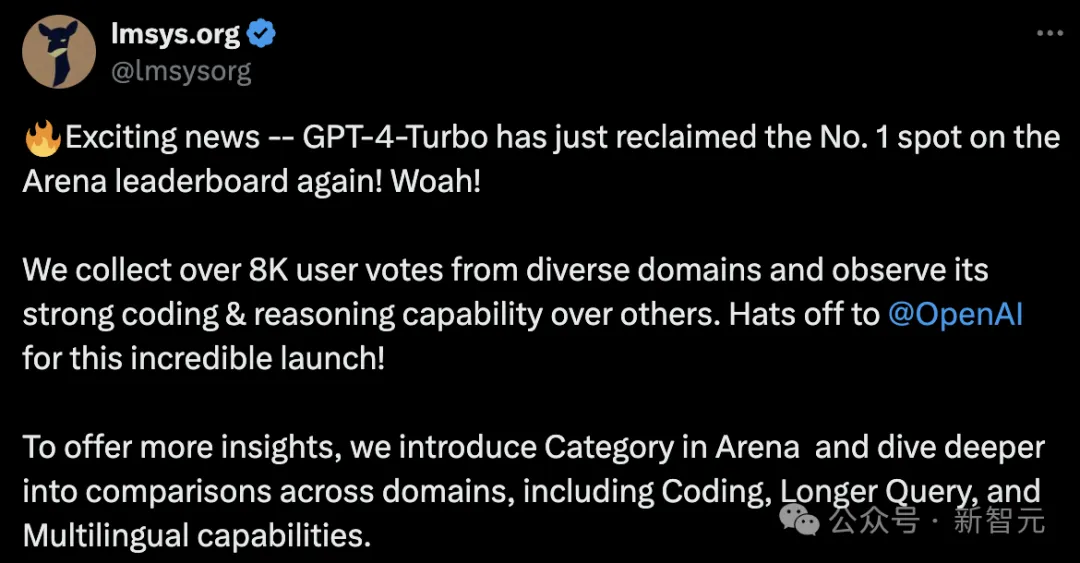

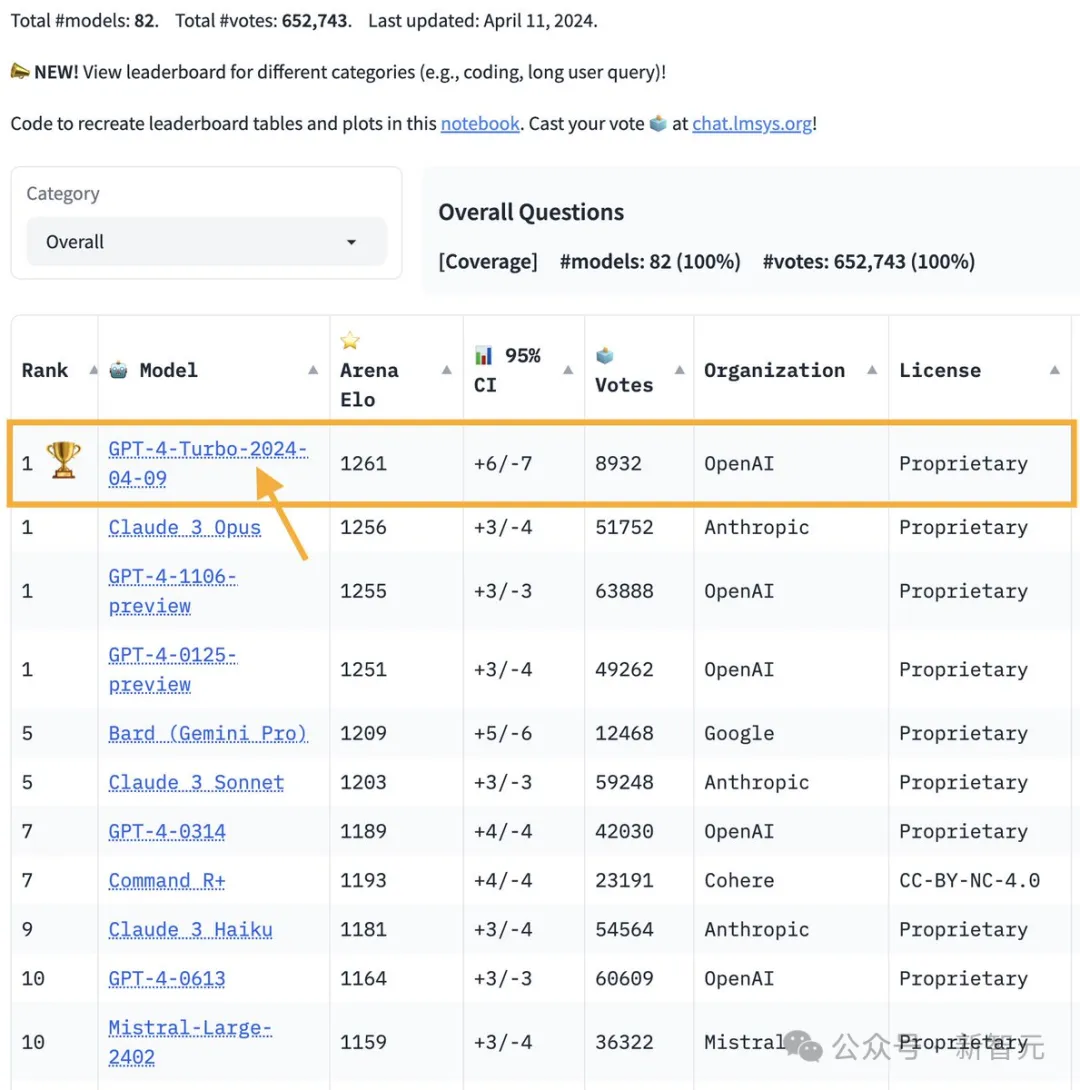

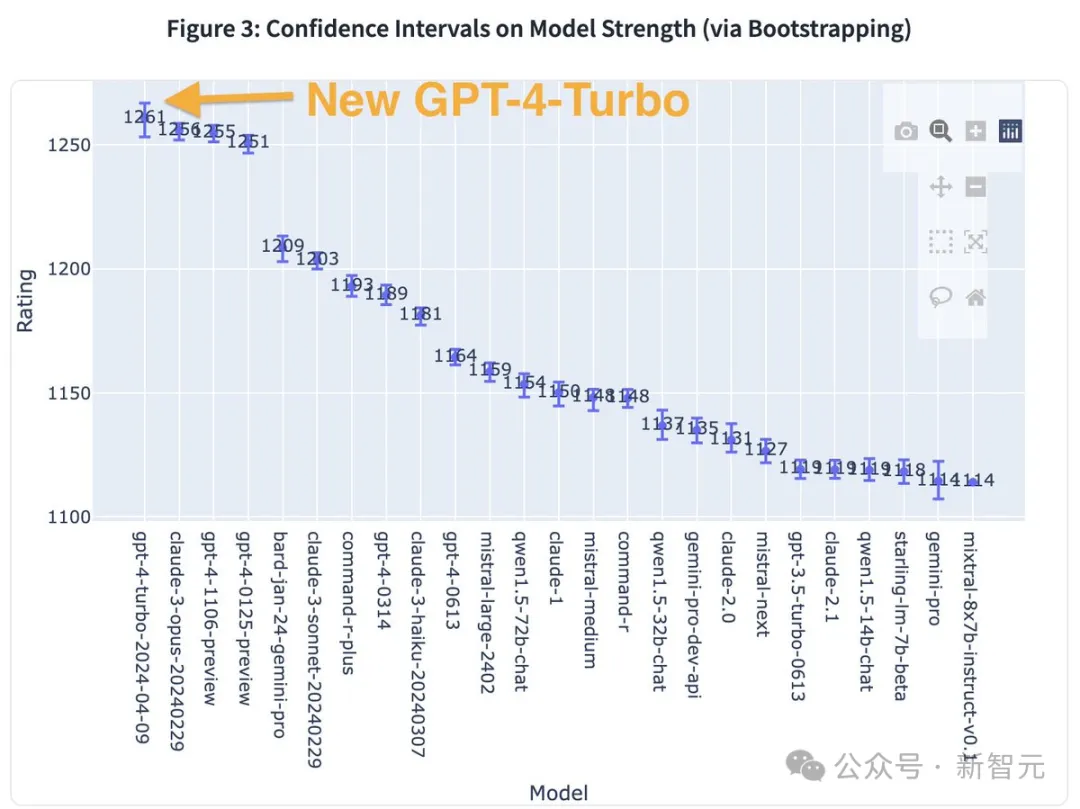

GPT-4 Turbo is back on the throne

Not long ago, Anthropic's most powerful model, Claude 3 Opus, dominated nearly every major leaderboard.

Today, however, OpenAI's new gpt-4-turbo knocked it out of first place.

According to the latest results from the LMSYS Chatbot Arena (the "LLM ranked matches"), GPT-4-Turbo has once again overtaken Claude 3 to claim first place.

LMSYS Org collected more than 8,000 human votes across multiple domains and found that GPT-4-Turbo outperformed the other models in coding and reasoning.

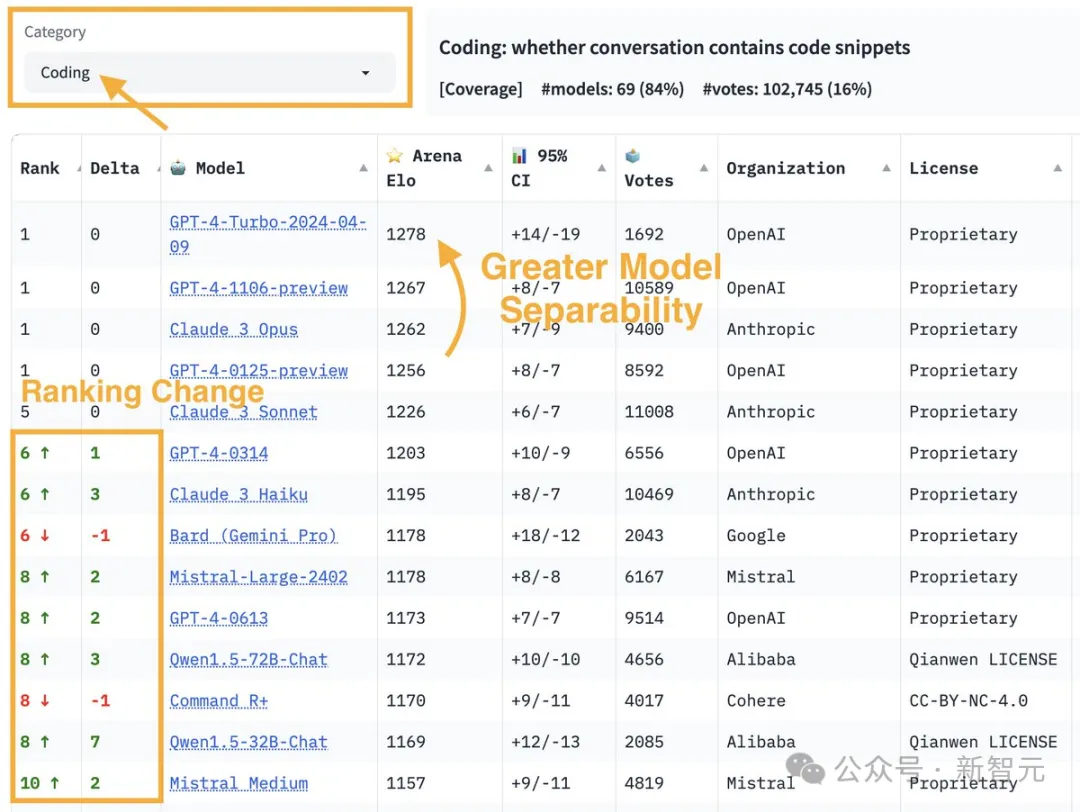

To enable deeper analysis, the researchers introduced a "category" feature in the Arena.

This feature allows finer-grained comparisons across areas such as coding, long queries, and multilingual ability.

The researchers also tagged every conversation in the coding category that contained code snippets. On this subset, GPT-4-Turbo performs even more strongly.

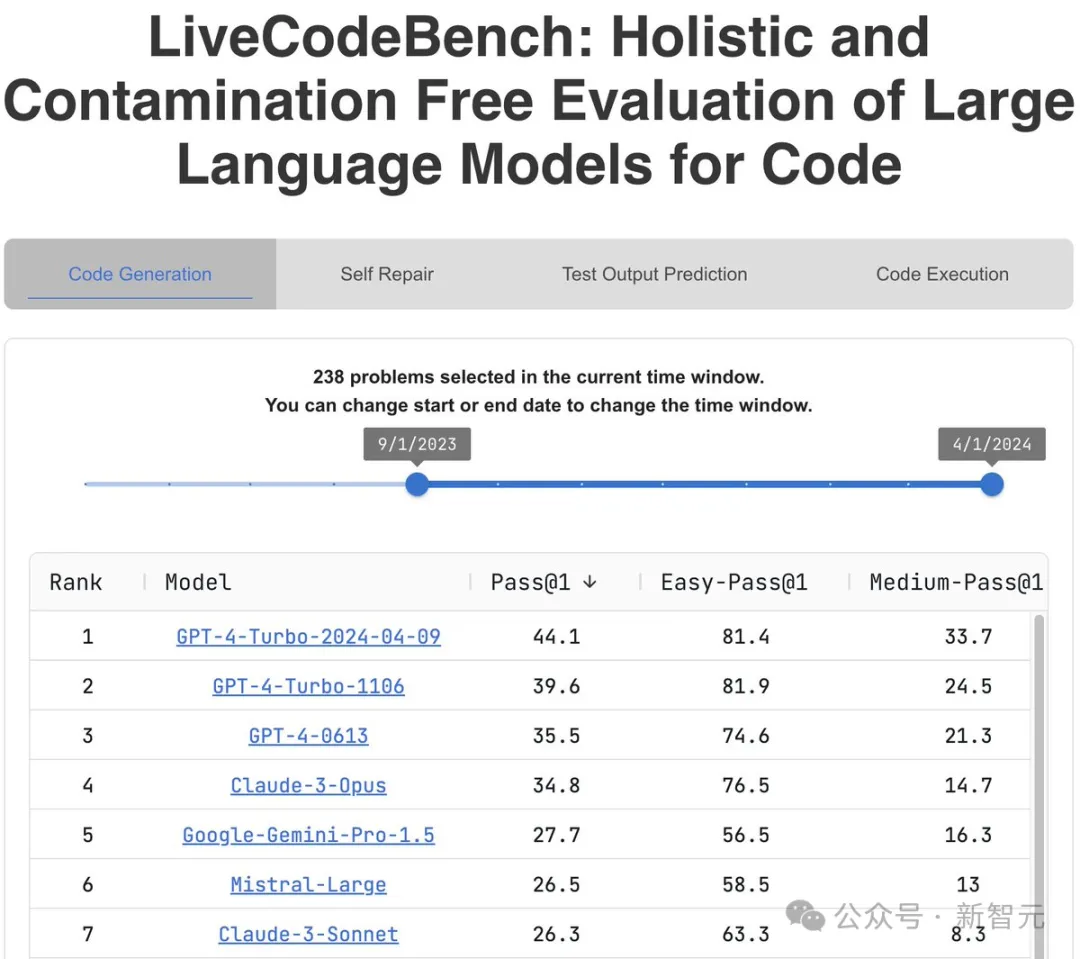
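The article does not say how LMSYS decided that a conversation "contained code snippets." As a rough illustration only, a tagger could look for Markdown code fences or for lines that start the way common code statements do. The heuristics and the names `has_code` and `is_coding_conversation` below are assumptions for this sketch, and they will misfire in both directions (a prose line beginning with "import " counts as code, while unfenced code in other styles is missed).

```python
import re
from typing import List

# A Markdown code fence, assembled from single backticks so this snippet
# never contains a literal fence of its own.
FENCE = "`" * 3

# Hypothetical heuristic: a line that starts like a common code statement.
CODE_LINE_RE = re.compile(
    r"^\s*(def |class |import |from \S+ import |#include|function |for\s*\(|while\s*\()",
    re.MULTILINE,
)


def has_code(message: str) -> bool:
    """True if a single message contains a fenced block or a code-like line."""
    return FENCE in message or CODE_LINE_RE.search(message) is not None


def is_coding_conversation(messages: List[str]) -> bool:
    """Tag the whole conversation as coding if any turn contains code."""
    return any(has_code(m) for m in messages)
```

Tagging at the conversation level (any turn with code) rather than per message keeps follow-up turns such as "now make it faster" in the coding bucket.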

Similarly, Naman Jain found that the new GPT-4-Turbo improved by a remarkable 4.5 points on LiveCodeBench, a benchmark that includes competitive programming problems.

Problems of this kind are highly challenging for current LLMs, and this OpenAI update has clearly improved the model's reasoning considerably.

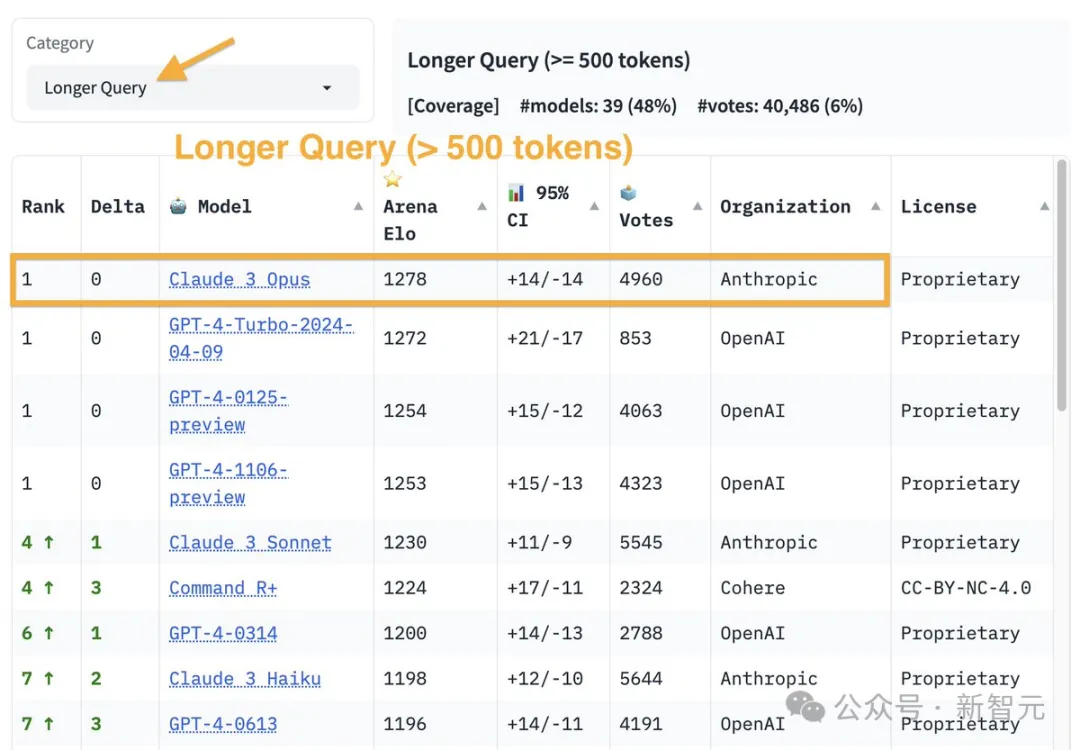

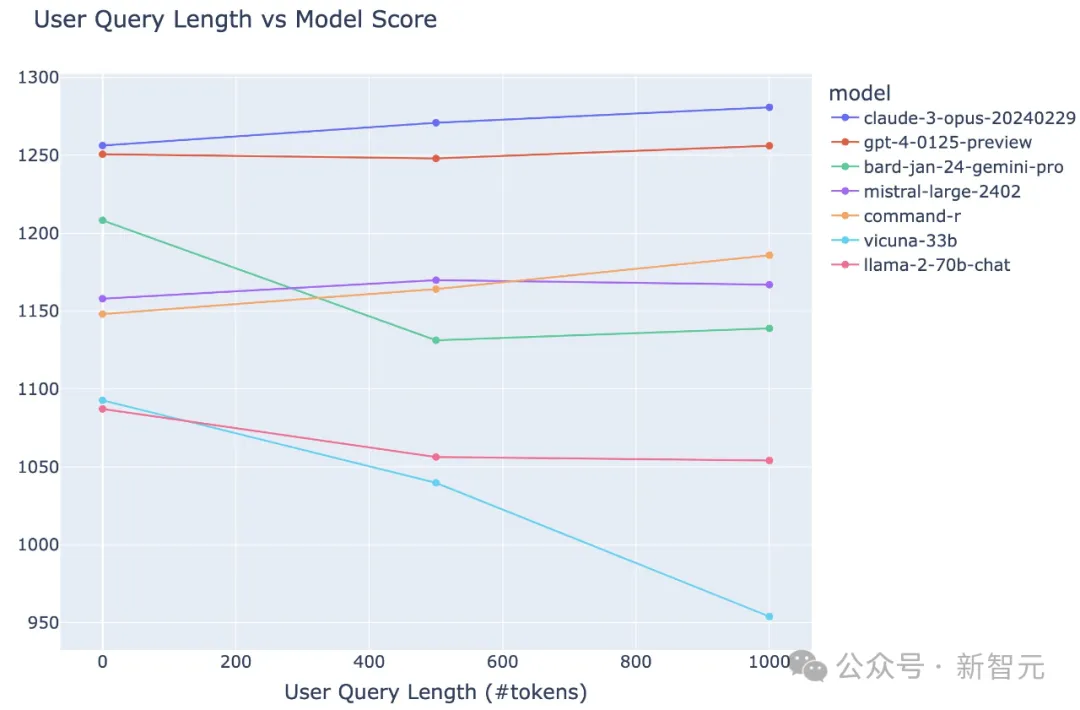

In the long-query category (prompts longer than 500 tokens), Claude 3 Opus performs best.

Somewhat unexpectedly, Command R/R+ also scores very high in this area.

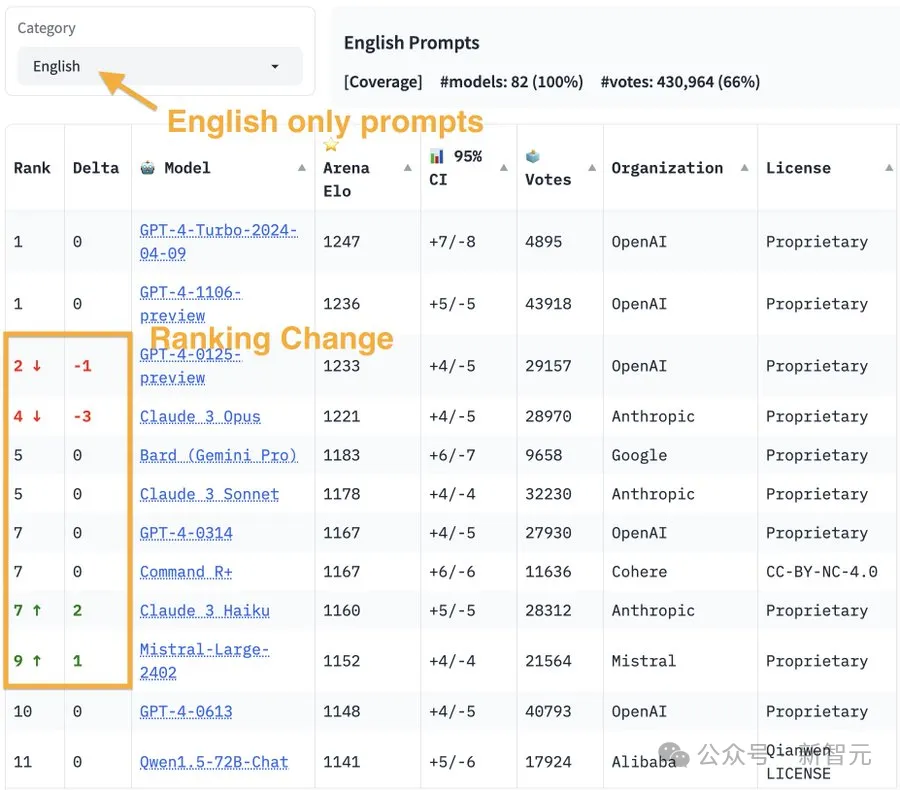

Interestingly, when only English prompts are counted, the rankings differ slightly from the overall leaderboard.

In this category, the three GPT-4-Turbo versions still lead.

The difference arises because, as the user base has grown, usage has shifted from English alone to many languages, including Chinese.

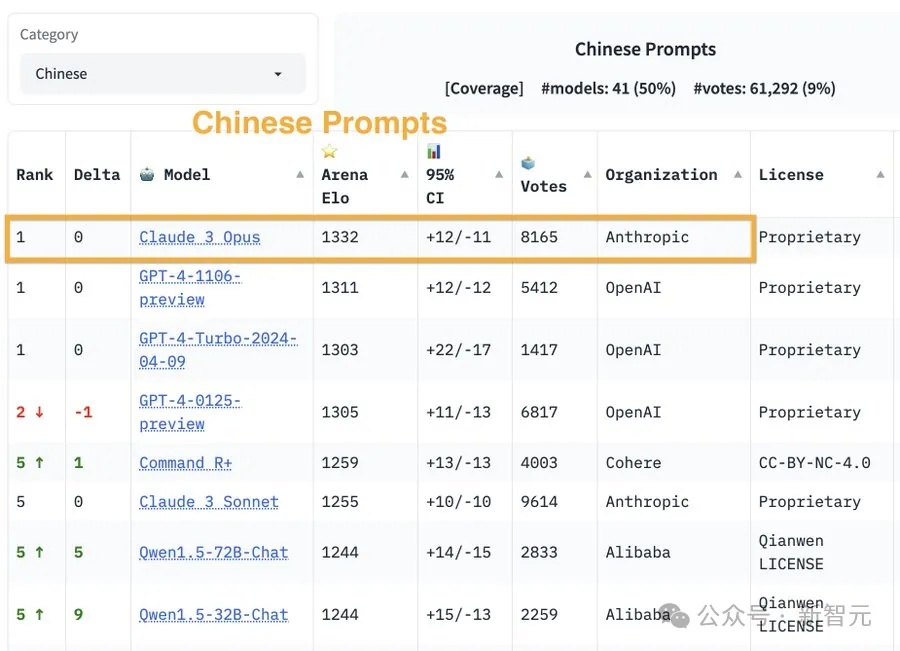

Model performance also varies by language.

For example, on Chinese prompts, Claude 3 Opus ranks first.

Here are the confidence intervals (CIs) for the model scores:

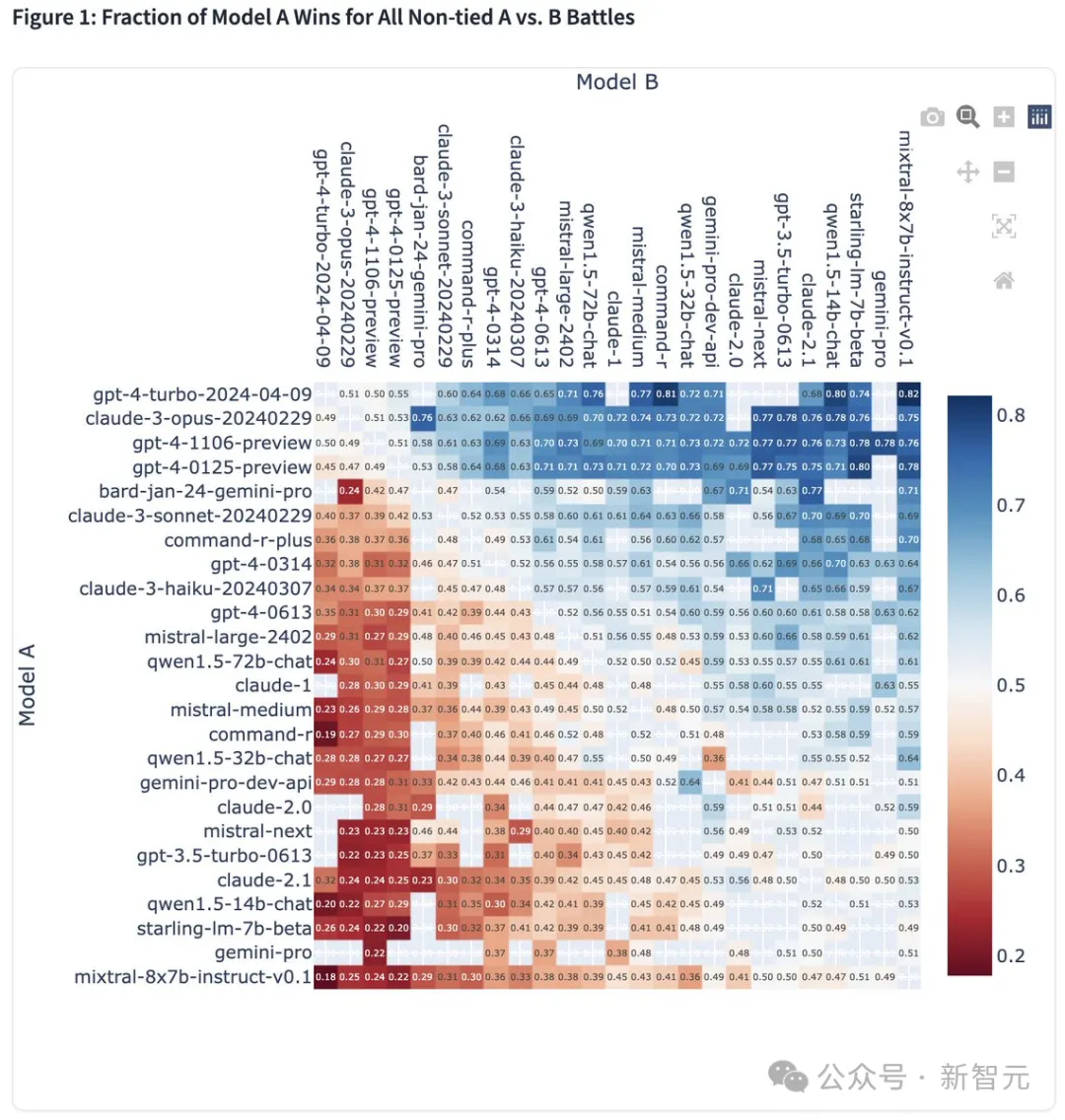
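Confidence intervals like these are commonly obtained by bootstrapping: resample the human votes with replacement many times, recompute every model's rating on each resample, and read off percentiles. The Python sketch below assumes a simple online Elo update (K = 4, starting rating 1000) and a hypothetical `(model_a, model_b, winner)` record format; LMSYS's own pipeline may fit ratings differently (for example with a Bradley-Terry model), so treat this as an illustration of the idea rather than a reproduction of the leaderboard.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record format: (model_a, model_b, winner), winner in {"a", "b", "tie"}.
Battle = Tuple[str, str, str]
SCORE_A = {"a": 1.0, "b": 0.0, "tie": 0.5}


def compute_elo(battles: List[Battle], k: float = 4.0, init: float = 1000.0) -> Dict[str, float]:
    """Online Elo (assumed K and start rating): update both ratings after each vote."""
    ratings: Dict[str, float] = defaultdict(lambda: init)
    for a, b, winner in battles:
        expected_a = 1.0 / (1.0 + 10 ** ((ratings[b] - ratings[a]) / 400.0))
        delta = k * (SCORE_A[winner] - expected_a)
        ratings[a] += delta  # zero-sum: whatever A gains, B loses
        ratings[b] -= delta
    return dict(ratings)


def bootstrap_ci(
    battles: List[Battle], rounds: int = 200, alpha: float = 0.05, seed: int = 0
) -> Dict[str, Tuple[float, float]]:
    """Percentile bootstrap: resample battles with replacement, re-rate, take quantiles."""
    rng = random.Random(seed)
    samples: Dict[str, List[float]] = defaultdict(list)
    for _ in range(rounds):
        resampled = [rng.choice(battles) for _ in range(len(battles))]
        for model, rating in compute_elo(resampled).items():
            samples[model].append(rating)
    cis: Dict[str, Tuple[float, float]] = {}
    for model, values in samples.items():
        values.sort()
        lo = values[int(alpha / 2 * (len(values) - 1))]
        hi = values[int((1 - alpha / 2) * (len(values) - 1))]
        cis[model] = (lo, hi)
    return cis
```

Because online Elo depends on the order of votes, resampling also shuffles that order, which is part of what the interval captures. Non-overlapping intervals suggest a real gap between two models; heavily overlapping ones mean their relative ranking is not settled.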

And here is the overall win-rate heatmap:
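A heatmap like this can be computed directly from raw battle records. The minimal Python sketch below assumes a hypothetical `(model_a, model_b, winner)` record format and that ties are excluded from the win rate; it illustrates the idea and is not LMSYS's code.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record format: (model_a, model_b, winner), winner in {"a", "b", "tie"}.
Battle = Tuple[str, str, str]


def win_rate_matrix(battles: List[Battle]) -> Dict[str, Dict[str, float]]:
    """matrix[x][y] = share of non-tied x-vs-y battles that x won."""
    wins: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    games: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for a, b, winner in battles:
        if winner == "tie":
            continue  # assumption: ties count in neither numerator nor denominator
        if winner not in ("a", "b"):
            raise ValueError(f"unknown winner label: {winner!r}")
        games[a][b] += 1
        games[b][a] += 1
        if winner == "a":
            wins[a][b] += 1
        else:
            wins[b][a] += 1
    return {x: {y: wins[x][y] / n for y, n in row.items()} for x, row in games.items()}
```

Because ties are dropped, `matrix[x][y] + matrix[y][x]` is 1 for any pair with at least one decisive battle, so cells on opposite sides of the diagonal are complementary.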

References: