From guitar rock to electronic music: Meta open-sources MAGNeT, a new non-autoregressive audio generation model that is 7 times faster

In the AIGC field of generating audio (or music) from text, Meta has recently published new research and open-sourced it.

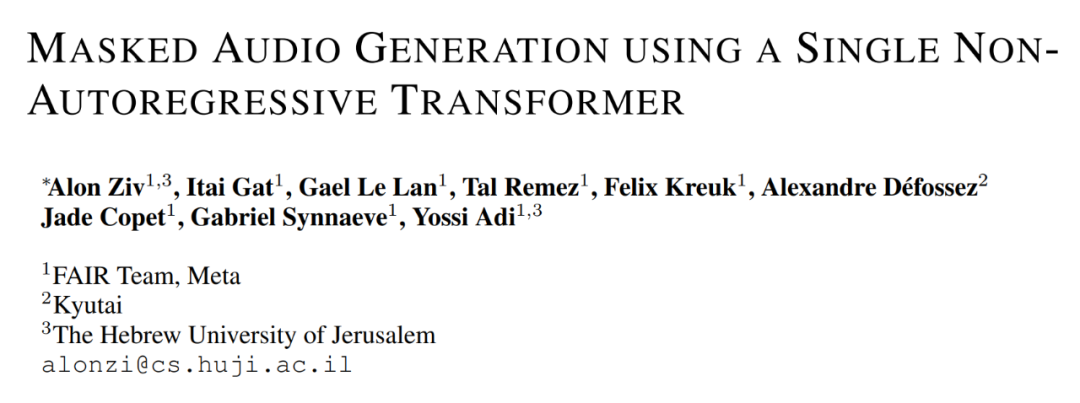

A few days ago, in the paper "Masked Audio Generation using a Single Non-Autoregressive Transformer", researchers from Meta's FAIR team, Kyutai, and the Hebrew University introduced MAGNeT, a masked generative sequence modeling method that operates directly on multiple streams of audio tokens. The biggest difference from previous work is that MAGNeT generates audio with a single-stage, non-autoregressive transformer.

- Paper address: https://arxiv.org/pdf/2401.04577.pdf

- GitHub address: https://github.com/facebookresearch/audiocraft/blob/main/docs/MAGNET.md

Specifically, during training the model predicts spans of masked tokens, with the masking rate obtained from a masking scheduler; during inference, the output sequence is constructed gradually over several decoding steps. To further improve the quality of the generated audio, the researchers propose a novel rescoring method that uses an external pre-trained model to rescore and rank MAGNeT's predictions, which are then used in subsequent decoding steps.

In addition, the researchers explored a hybrid version of MAGNeT that fuses autoregressive and non-autoregressive models: it generates the first few seconds autoregressively and decodes the rest of the sequence in parallel.

Judging from the generated results, MAGNeT performs very well on text-to-audio and text-to-music tasks, with quality comparable to SOTA autoregressive baselines while being 7 times faster.

You can listen to samples of the generated music.

Overview of the MAGNeT method

Figure 1 below shows a schematic of MAGNeT. MAGNeT is a non-autoregressive masked language model for audio generation. Conditioned on a semantic representation, it operates on several streams of discrete audio tokens obtained from EnCodec. On the modeling side, the researchers made core modifications in several areas: the masking strategy, restricted context, the sampling mechanism, and model rescoring.

Looking first at the masking strategy, the researchers evaluated span lengths between 20ms and 200ms and found that a span length of 60ms gave the best overall performance. They sample a masking rate γ(i) from the scheduler and compute the corresponding average number of spans to mask. In addition, for computational efficiency, they also use non-overlapping spans.

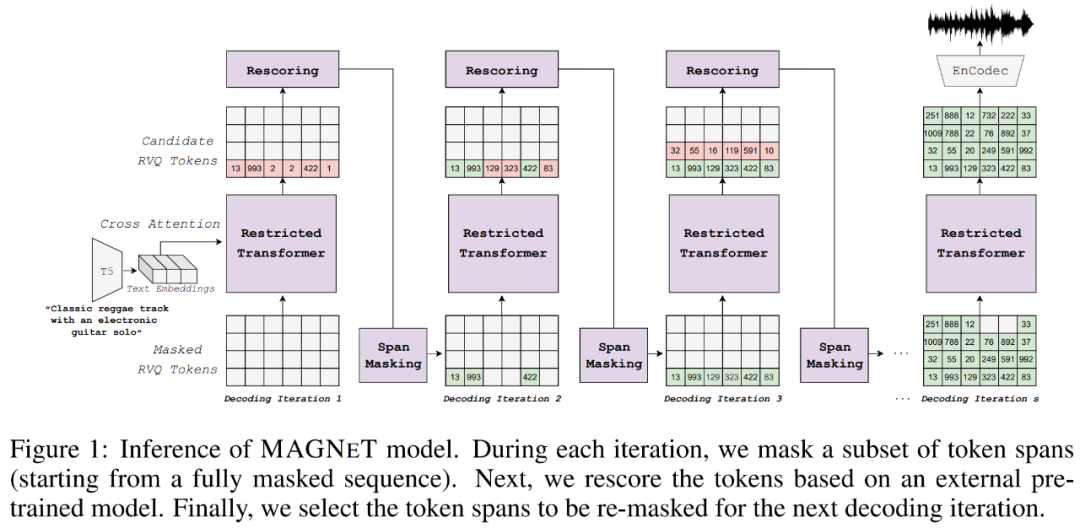
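The span masking described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes a 50 Hz token rate (so a 60ms span covers 3 tokens), aligns spans to a fixed grid so that they never overlap, and uses a hypothetical helper name `span_mask`.

```python
import math
import random

def span_mask(seq_len, mask_rate, span_len, rng):
    """Return a list of booleans where True marks a masked position.

    Assumption: the sequence is cut into a fixed grid of non-overlapping
    spans of `span_len` tokens, and whole spans are masked until roughly
    `mask_rate` of the spans are covered.
    """
    num_spans = math.ceil(seq_len / span_len)
    num_masked = min(num_spans, max(1, round(mask_rate * num_spans)))
    chosen = rng.sample(range(num_spans), num_masked)
    mask = [False] * seq_len
    for s in chosen:
        start = s * span_len
        for t in range(start, min(start + span_len, seq_len)):
            mask[t] = True
    return mask

# Assumed token rate: 50 tokens per second, i.e. 20 ms per token.
FRAME_RATE_HZ = 50
SPAN_LEN = round(0.060 * FRAME_RATE_HZ)  # a 60 ms span is 3 tokens

mask = span_mask(seq_len=30, mask_rate=0.5, span_len=SPAN_LEN,
                 rng=random.Random(0))
print(sum(mask), "of", len(mask), "tokens masked")
```

Masking whole spans rather than isolated tokens stops the model from trivially copying a masked token from its immediate neighbors, which is the motivation for searching over span lengths in the first place.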

Next comes the restricted context. The researchers analyzed EnCodec and restricted the context of the codebooks accordingly. Specifically, the audio encoder consists of a multi-layer convolutional network followed by a final LSTM block. An analysis of EnCodec's receptive field shows that the convolutional network has a receptive field of about 160ms, while the effective receptive field including the LSTM block is about 180ms. The researchers evaluated the model's receptive field empirically by shifting an impulse over time and measuring the magnitude of the encoded vector in the middle of the sequence.

Figure 3 below shows this process. Although an LSTM has unbounded memory in theory, its observed effective memory is limited.

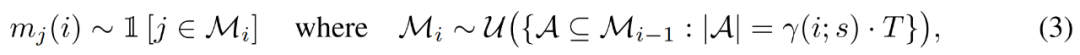
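One way to picture a restricted context is as a banded attention mask in which each position may only attend to positions within a fixed temporal distance. The sketch below is only an illustration under stated assumptions: the 200ms window (rounded up from the roughly 180ms receptive field above), the 50 Hz token rate, and the function name `restricted_attention_mask` are all hypothetical choices, not values taken from the article.

```python
def restricted_attention_mask(seq_len, frame_rate_hz, window_ms):
    """Return a seq_len x seq_len matrix of booleans.

    Entry [i][j] is True when query position i may attend to key position j,
    i.e. when the two positions are at most `window_ms` apart in time.
    """
    # Integer arithmetic avoids floating-point rounding surprises.
    max_dist = window_ms * frame_rate_hz // 1000
    return [[abs(i - j) <= max_dist for j in range(seq_len)]
            for i in range(seq_len)]

# Assumed values: 50 tokens per second and a 200 ms window (10 tokens).
allowed = restricted_attention_mask(seq_len=20, frame_rate_hz=50, window_ms=200)
print(sum(allowed[0]), "positions visible from position 0")
```

In a transformer, such a matrix would typically be passed to self-attention as a mask so that scores outside the band are ignored.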

Finally, there is model inference, which includes sampling and classifier-free guidance annealing. Sampling follows equation (3) of the paper, which uses uniform sampling to select spans from the previous set of masked spans. In practice, the researchers use the model's confidence at iteration i as a scoring function to rank all candidate spans, and they select the least probable spans to be masked again.
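The confidence-based selection can be sketched as follows. This is a simplified illustration, not the paper's exact scoring rule: it assumes a span's score is the mean confidence of its tokens, that spans lie on a fixed non-overlapping grid, and that trailing tokens that do not fill a whole span are ignored. The function name `lowest_confidence_spans` is hypothetical.

```python
def lowest_confidence_spans(token_conf, span_len, num_to_mask):
    """Return the indices of the `num_to_mask` least confident spans.

    token_conf[t] is the probability the model assigned to the token it
    predicted at position t. Assumption: a span is scored by the mean
    confidence of its tokens.
    """
    num_spans = len(token_conf) // span_len
    scores = []
    for s in range(num_spans):
        chunk = token_conf[s * span_len:(s + 1) * span_len]
        scores.append(sum(chunk) / len(chunk))
    # Rank spans from least to most confident and keep the first few.
    ranked = sorted(range(num_spans), key=lambda s: scores[s])
    return sorted(ranked[:num_to_mask])

conf = [0.9, 0.8, 0.9, 0.2, 0.3, 0.1, 0.7, 0.6, 0.8, 0.4, 0.5, 0.3]
print(lowest_confidence_spans(conf, span_len=3, num_to_mask=2))  # prints [1, 3]
```

At each decoding step the selected spans would be masked again and re-predicted, while the more confident spans are kept, so the sequence fills in progressively.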

For token prediction, the researchers use classifier-free guidance. During training, they optimize the model both conditionally and unconditionally; during inference, they sample from a distribution obtained as a linear combination of the conditional and unconditional probabilities.
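A minimal sketch of classifier-free guidance with an annealed coefficient is shown below. It rests on several assumptions that are not stated in the article: the combination is applied to logits (log-domain scores) before a softmax, the coefficient is annealed linearly over decoding steps, and the start and end values of 10.0 and 1.0 are purely illustrative. The function names are hypothetical.

```python
import math

def guidance_coef(step, num_steps, lam_start, lam_end):
    """Linearly anneal the guidance coefficient from lam_start to lam_end."""
    if num_steps <= 1:
        return lam_start
    frac = step / (num_steps - 1)
    return lam_start + (lam_end - lam_start) * frac

def guided_distribution(cond_logits, uncond_logits, lam):
    """Mix conditional and unconditional logits, then normalize.

    mixed = lam * cond + (1 - lam) * uncond, followed by a softmax.
    With lam = 1 this reduces to the purely conditional distribution.
    """
    mixed = [lam * c + (1.0 - lam) * u
             for c, u in zip(cond_logits, uncond_logits)]
    peak = max(mixed)  # subtract the max for numerical stability
    exps = [math.exp(m - peak) for m in mixed]
    total = sum(exps)
    return [e / total for e in exps]

# Illustrative values only: strong guidance early, weaker guidance later.
for step in range(5):
    lam = guidance_coef(step, num_steps=5, lam_start=10.0, lam_end=1.0)
    probs = guided_distribution([2.0, 0.5, 0.1], [1.0, 1.0, 1.0], lam)
    print(step, round(lam, 2), [round(p, 3) for p in probs])
```

A coefficient above 1 pushes the distribution toward tokens that the text condition favors; lowering it over the decoding steps relaxes that pressure as more of the sequence is fixed.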

Experiments and results

In the experiments, the researchers evaluated MAGNeT on text-to-music and text-to-audio generation tasks. They used exactly the same music generation training data as Copet et al. (2023) and the same audio generation training data as Kreuk et al. (2022a).

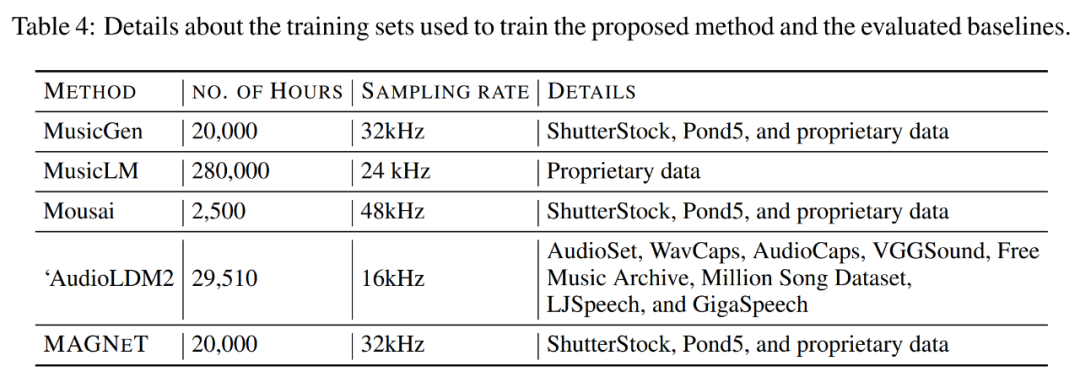

Table 4 below shows details of the training set used to train MAGNeT as well as other baseline methods including MusicGen, MusicLM and AudioLDM2.

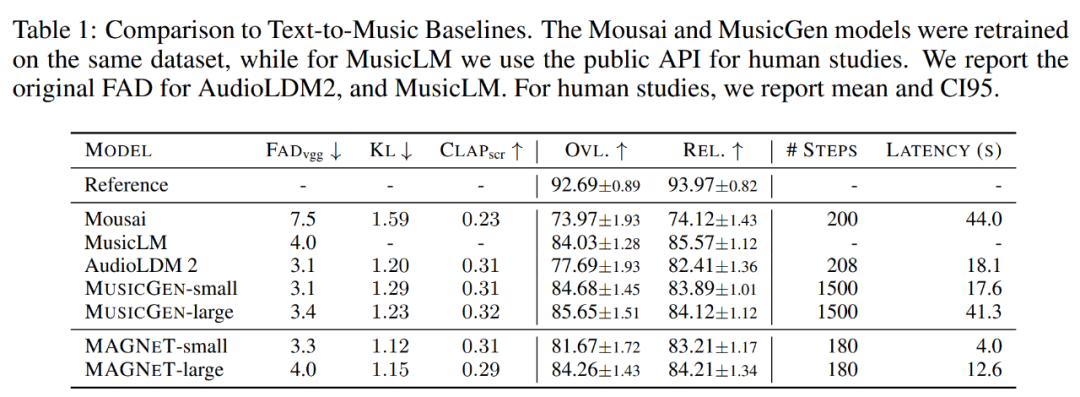

Table 1 below compares MAGNeT with other baseline methods on the text-to-music generation task. The evaluation dataset is MusicCaps. MAGNeT's performance is comparable to that of MusicGen, which uses autoregressive modeling, while MAGNeT is much faster in terms of both generation speed (latency) and decoding.

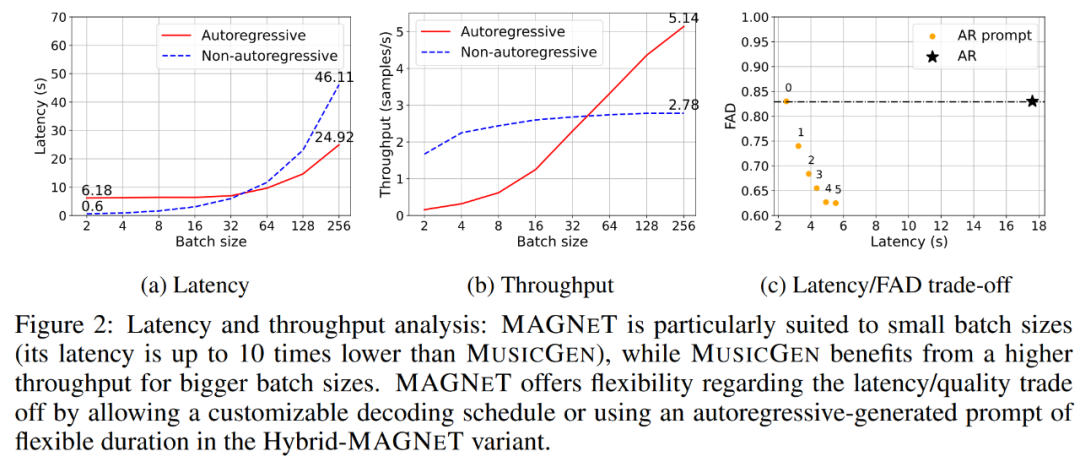

Figure 2a below shows that, compared with the autoregressive baseline (red curve), the non-autoregressive model (blue dashed line) benefits from parallel decoding and performs particularly well at small batch sizes, with latency as low as 600ms for a single generated sample, one-tenth that of the autoregressive baseline. MAGNeT therefore has great potential in interactive applications that require low-latency preprocessing. In addition, the non-autoregressive model generates faster than the baseline until the batch size reaches 64.

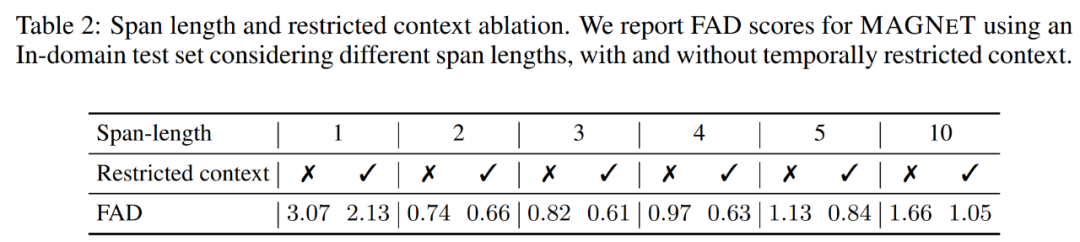

Table 2 below shows the ablation experiments for span length and restricted context. The researchers report the FAD (Fréchet Audio Distance) scores of MAGNeT with different span lengths, with and without time-restricted context, using the in-domain test set.
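For readers unfamiliar with the metric, FAD is commonly defined as the Fréchet distance between two Gaussians fitted to audio embeddings of reference and generated samples. The standard form is given below as background; the article does not specify which embedding model is used, and the symbols $\mu_r, \Sigma_r$ (reference statistics) and $\mu_g, \Sigma_g$ (generated statistics) are notation introduced here.

$$
\mathrm{FAD} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

Lower values mean the generated audio is statistically closer to the reference set.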

Please refer to the original paper for more technical details and experimental results.