The back end is a bit curled? Off to the client!

The back end is a bit curled? Off to the client!

Hello everyone, I am Xiaolin.

Among Internet positions, it can be said that back-end development is the most popular and attracts the most applicants, but the client development next door has very few investors. Back-end students will be recruited by the client department for interviews, so if they fail to pass the exam, Students who want to enter a big factory can try client development. The interview is relatively less stressful, and the salary and benefits are the same as back-end development.

Today I will share an interview experience with a Kuaishou client. The classmate’s technology stack is C++ backend, but the interview will not ask about the backend content. It will mainly focus on Cpp+operating system+network protocol+algorithm. Compared with The back-end needs to prepare less back-end components such as mysql, redis, message queue, etc., but the depth of the calculation basics will be deeper.

On the third side, it is still a bit difficult to directly write out the two scene code questions by hand. .

Quick side

An introduction to congestion control

When the network is congested, if you continue to send a large number of data packets, it may cause data packet delay, loss, etc. At this time, TCP will retransmit the data, but a retransmission will cause a heavier burden on the network, which will lead to greater With delays and more packet losses, this situation will enter a vicious cycle and be continuously amplified....

Therefore, TCP cannot ignore what is happening on the network. It is designed to be a selfless protocol. When the network is congested, TCP will sacrifice itself and reduce the amount of data sent.

Therefore, there is congestion control. The purpose of control is to prevent the "sender"'s data from filling the entire network.

In order to regulate the amount of data to be sent on the "sender", a concept called "congestion window" is defined.

Congestion control mainly consists of four algorithms:

- slow start

- congestion avoidance

- congestion occurs

- Quick recovery

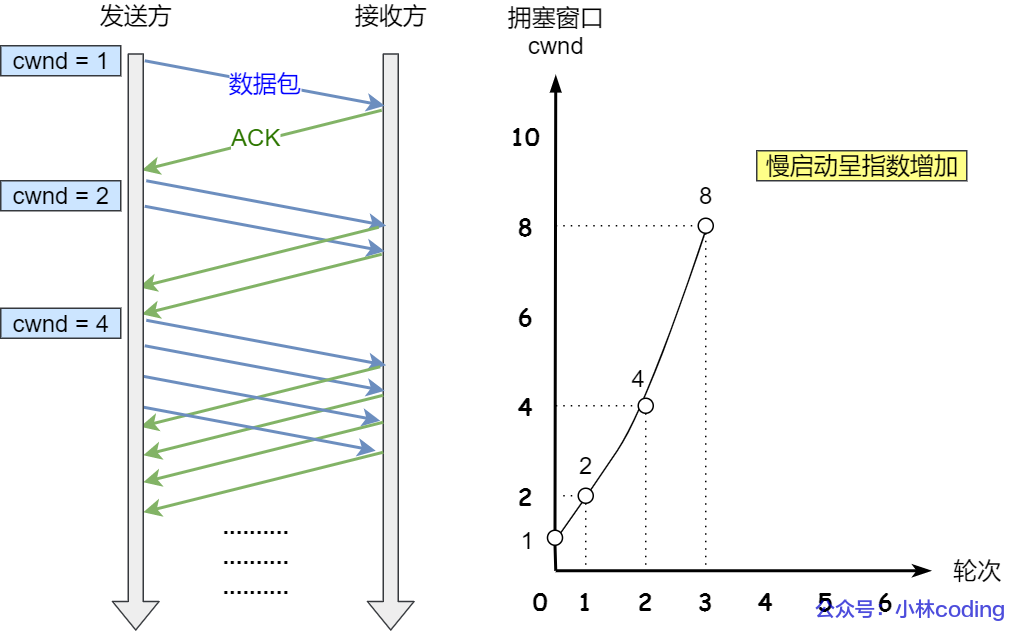

slow start

After TCP has just established a connection, it first goes through a slow start process. This slow start means to increase the number of data packets sent little by little. If a large amount of data is sent as soon as it starts, isn't this adding congestion to the network? ?

The slow-start algorithm just needs to remember one rule: every time the sender receives an ACK, the size of the congestion window cwnd will be increased by 1.

It is assumed here that the congestion window cwnd and the sending window swnd are equal. Here is an example:

- After the connection is established, cwnd = 1 is initially initialized, indicating that data of MSS size can be transmitted.

- When an ACK confirmation response is received, cwnd is increased by 1, so 2 can be sent at a time

- After receiving 2 ACK confirmation responses, cwnd is increased by 2, so 2 more ACKs can be sent than before, so 4 can be sent this time

- When these 4 ACK confirmations arrive, the cwnd of each confirmation increases by 1, and the cwnd of the 4 confirmations increases by 4, so 4 more can be sent than before, so 8 can be sent this time.

The change process of the slow start algorithm is as follows:

slow start algorithm

slow start algorithm

It can be seen that with the slow start algorithm, the number of packets sent increases exponentially.

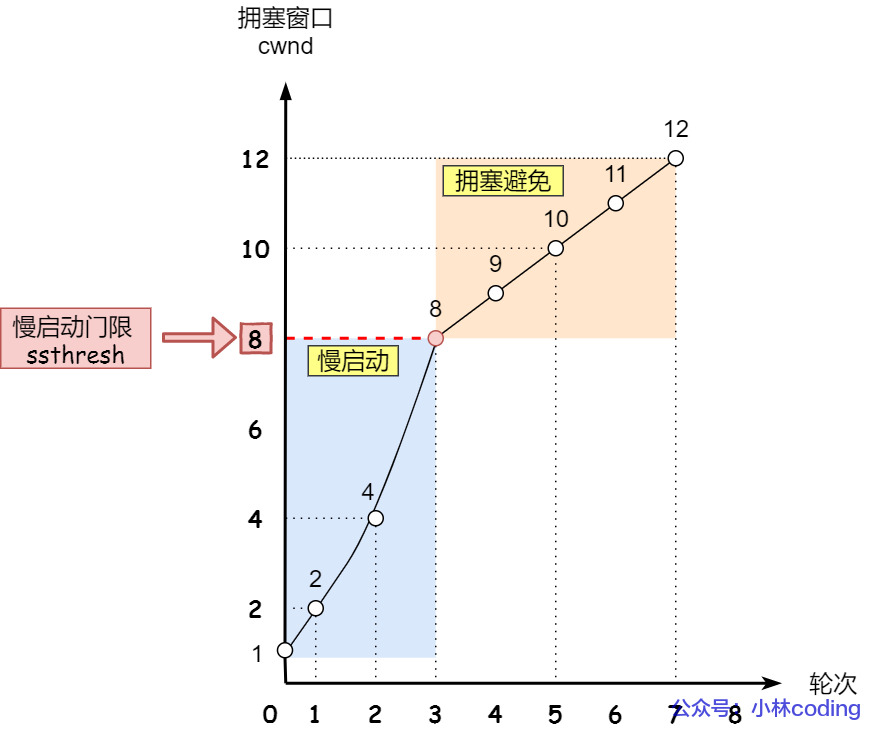

When will the slow start end?

There is a state variable called ssthresh (slow start threshold).

- When cwnd < ssthresh, the slow start algorithm is used.

- When cwnd >= ssthresh, the "congestion avoidance algorithm" will be used.

congestion avoidance

When the congestion window cwnd "exceeds" the slow start threshold ssthresh, it will enter the congestion avoidance algorithm.

Generally the size of ssthresh is 65535 bytes.

Then after entering the congestion avoidance algorithm, its rule is: whenever an ACK is received, cwnd increases by 1/cwnd.

Continuing from the previous slow start example, now assume that ssthresh is 8:

- When 8 ACK response confirmations arrive, each confirmation increases by 1/8, and the cwnd of the 8 ACK confirmations increases by 1 in total, so this time 9 MSS-sized data can be sent, which becomes a linear increase.

The change process of the congestion avoidance algorithm is as follows:

congestion avoidance

congestion avoidance

Therefore, we can find that the congestion avoidance algorithm changes the exponential growth of the original slow start algorithm into linear growth, which is still in the growth stage, but the growth rate is slower.

After it continues to grow like this, the network will slowly enter a congestion situation, so packet loss will occur. At this time, the lost data packets need to be retransmitted.

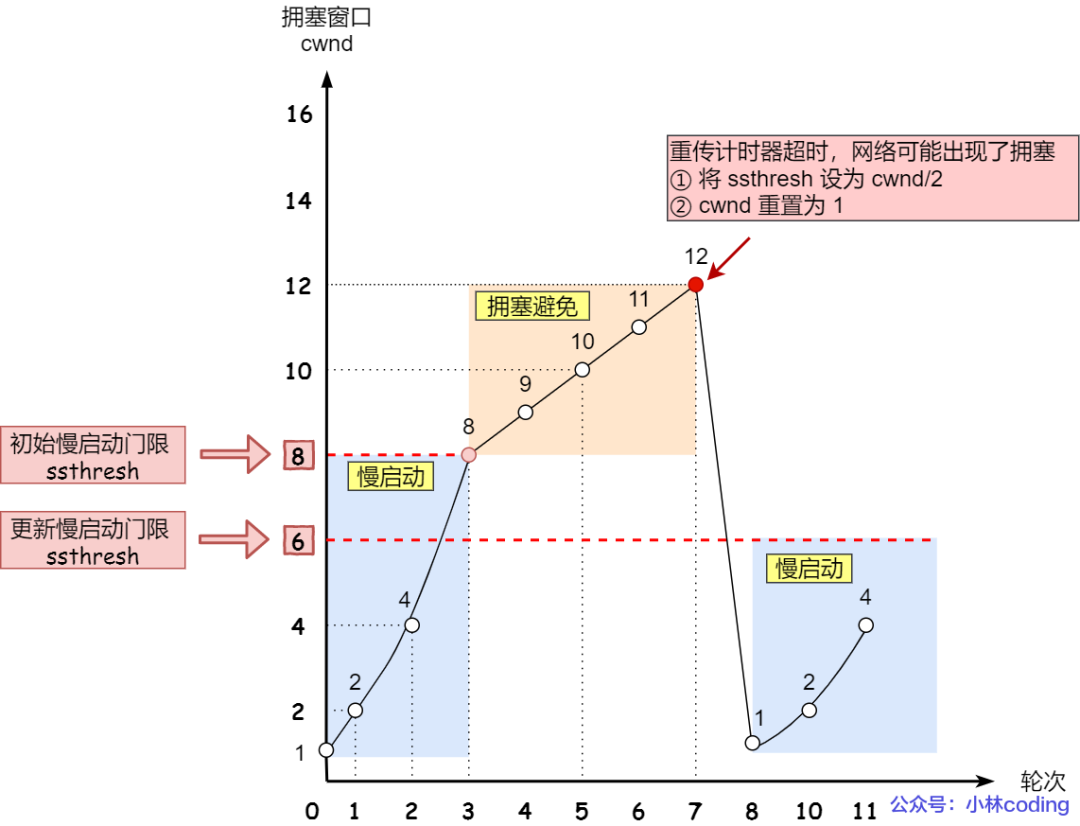

When the retransmission mechanism is triggered, the "congestion occurrence algorithm" is entered.

congestion occurs

When network congestion occurs, data packets will be retransmitted. There are two main retransmission mechanisms:

- Timeout retransmission

- Fast retransmission

When a "timeout retransmission" occurs, the congestion generation algorithm will be used.

At this time, the values of ssthresh and cwnd will change:

- ssthresh is set to cwnd/2,

- cwnd is reset to 1 (restores to the cwnd initialization value, I assume here that the cwnd initialization value is 1)

The changes in the congestion generation algorithm are as follows:

Congested sending - timeout retransmission

Congested sending - timeout retransmission

Then, slow start is restarted, which will suddenly reduce the data flow. This is really a case of "timeout retransmission" and it will immediately return to before liberation. But this method is too radical and the reaction is very strong, which will cause network lag.

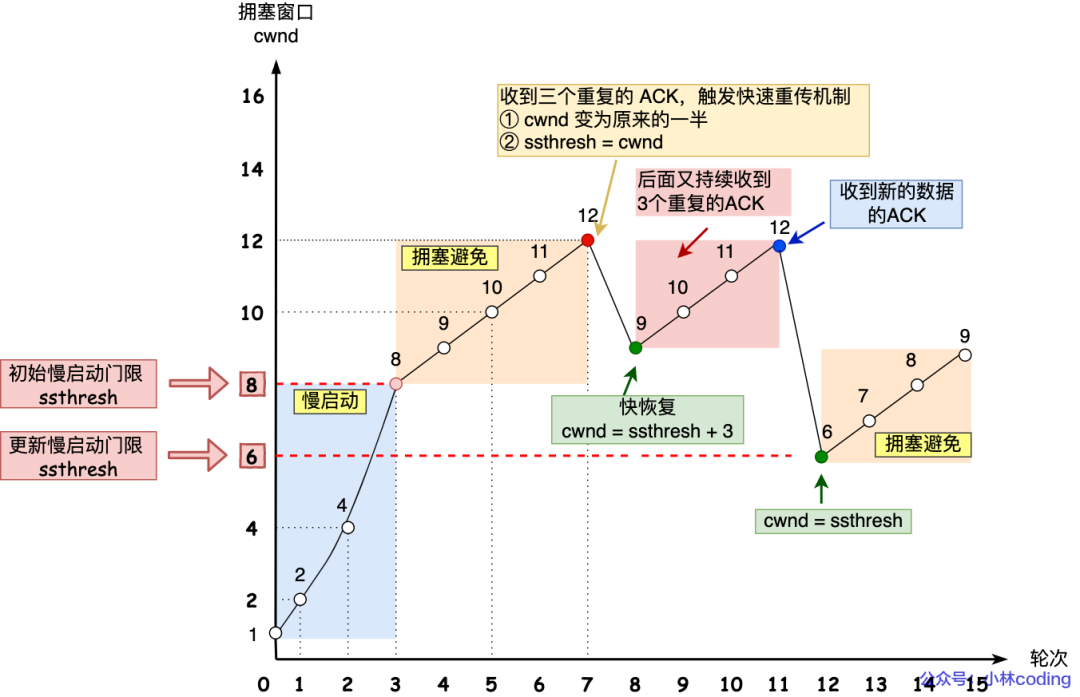

There is a better way. We talked about the "fast retransmission algorithm" earlier. When the receiver discovers that an intermediate packet has been lost, it sends the ACK of the previous packet three times, so the sender will quickly retransmit without waiting for timeout before retransmitting.

TCP thinks this situation is not serious, because most of it is not lost, only a small part is lost, then ssthresh and cwnd change as follows:

- cwnd = cwnd/2, that is, set to half of the original value;

- ssthresh = cwnd;

- Enter the fast recovery algorithm

Quick recovery

The fast retransmission and fast recovery algorithms are generally used at the same time. The fast recovery algorithm believes that if you can still receive 3 duplicate ACKs, it means that the network is not that bad, so there is no need to be as strong as the RTO timeout.

As mentioned before, before entering fast recovery, cwnd and ssthresh have been updated:

- cwnd = cwnd/2, that is, set to half of the original value;

- ssthresh = cwnd;

Then, enter the fast recovery algorithm as follows:

- Congestion window cwnd = ssthresh + 3 (3 means confirmation that 3 packets were received);

- Retransmit lost packets;

- If a duplicate ACK is received again, cwnd is increased by 1;

- If after receiving the ACK of new data, set cwnd to the value of ssthresh in the first step, the reason is that the ACK confirms the new data, indicating that the data from the duplicated ACK has been received, and the recovery process has ended. You can return to the state before recovery, that is, enter the congestion avoidance state again;

The changing process of the fast recovery algorithm is as follows:

Fast retransmission and fast recovery

Fast retransmission and fast recovery

That is to say, it does not return to before liberation overnight like "timeout retransmission", but it is still at a relatively high value, and then increases linearly.

What is the difference between http/https?

- HTTP is the Hypertext Transfer Protocol, and information is transmitted in clear text, which poses security risks. HTTPS solves the insecurity shortcomings of HTTP and adds the SSL/TLS security protocol between the TCP and HTTP network layers so that messages can be encrypted and transmitted.

- HTTP connection establishment is relatively simple, and HTTP message transmission can be carried out after the TCP three-way handshake. After the TCP three-way handshake, HTTPS still needs to carry out the SSL/TLS handshake process before it can enter encrypted message transmission.

- The default ports of the two are different. The default port number of HTTP is 80 and the default port number of HTTPS is 443.

- The HTTPS protocol requires applying for a digital certificate from a CA (certificate authority) to ensure that the server's identity is trustworthy.

HTTPS four times encryption process?

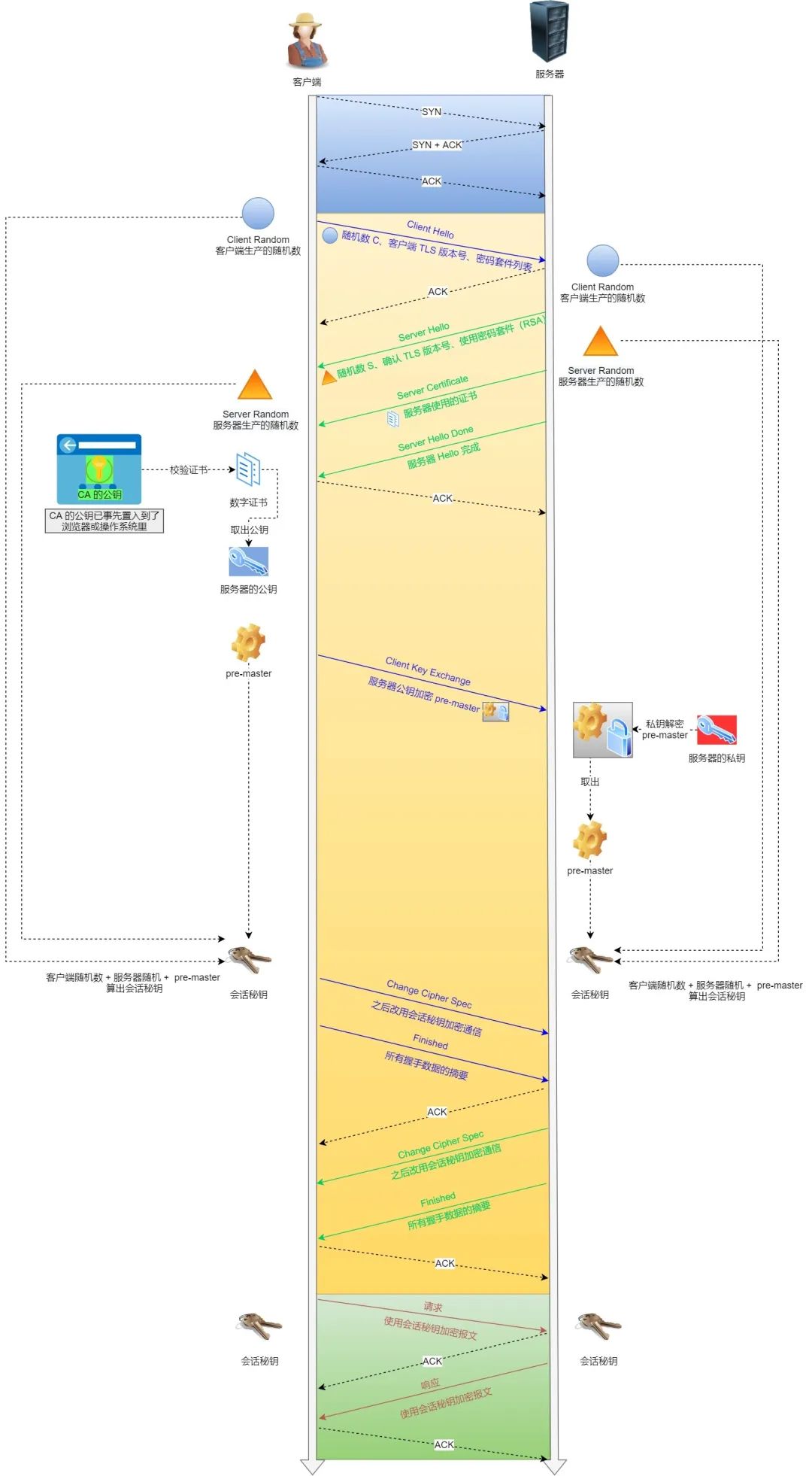

The TLS handshake process based on the RSA algorithm is relatively easy to understand, so here we use this to show you the TLS handshake process, as shown below:

HTTPS connection establishment process

HTTPS connection establishment process

Detailed process of establishing TLS protocol:

1.ClientHello

First, the client initiates an encrypted communication request to the server, which is a ClientHello request.

In this step, the client mainly sends the following information to the server:

(1) TLS protocol version supported by the client, such as TLS version 1.2.

(2) The random number produced by the client (Client Random) is later used to generate one of the conditions for the "session key".

(3) List of cipher suites supported by the client, such as RSA encryption algorithm.

2.SeverHello

After receiving the client's request, the server sends a response to the client, which is SeverHello. The content of the server's response is as follows:

(1) Confirm the TLS protocol version. If the browser does not support it, turn off encrypted communication.

(2) The random number produced by the server (Server Random) is also one of the conditions used later to produce the "session key".

(3) Confirmed cipher suite list, such as RSA encryption algorithm.

(4) Server’s digital certificate.

3. Client response

After receiving the server's response, the client first confirms the authenticity of the server's digital certificate through the CA public key in the browser or operating system.

If there is no problem with the certificate, the client will take out the server's public key from the digital certificate, then use it to encrypt the message and send the following information to the server:

(1) A random number (pre-master key). The random number will be encrypted by the server's public key.

(2) Notification of change of encryption communication algorithm, indicating that subsequent messages will be encrypted with the "session key".

(3) Client handshake end notification indicates that the client's handshake phase has ended. This item also summarizes the occurrence data of all previous contents for server-side verification.

The random number in the first item above is the third random number in the entire handshake phase and will be sent to the server, so this random number is the same on both the client and the server.

The server and client have these three random numbers (Client Random, Server Random, pre-master key), and then use the encryption algorithm negotiated by both parties to each generate a "session key" for this communication.

4. The server’s final response

After receiving the third random number (pre-master key) from the client, the server calculates the "session key" for this communication through the negotiated encryption algorithm.

Then, send the final message to the client:

(1) Notification of change of encryption communication algorithm, indicating that subsequent messages will be encrypted with the "session key".

(2) Server handshake end notification indicates that the server's handshake phase has ended. This item also summarizes the occurrence data of all previous content for client verification.

At this point, the entire TLS handshake phase is over. Next, the client and server enter encrypted communication, completely using the ordinary HTTP protocol, but using the "session key" to encrypt the content.

What is the difference between get and post?

- According to the RFC specification, the semantics of GET is to obtain specified resources from the server. This resource can be static text, pages, pictures, videos, etc. The parameter position of the GET request is generally written in the URL. The URL stipulates that it can only support ASCII, so the parameters of the GET request only allow ASCII characters, and the browser will have restrictions on the length of the URL (the HTTP protocol itself does not do anything about the URL length. Regulation).

- According to the RFC specification, the semantics of POST is to process the specified resource based on the request load (message body). The specific processing method varies depending on the resource type. The position of the data carried in the POST request is generally written in the message body. The data in the body can be in any format, as long as the client and the server negotiate well, and the browser does not limit the body size.

What is the difference between process threads?

- Resource occupation: Each process has an independent address space, file descriptor and other system resources. The resources between processes are independent of each other, and threads share the address space and resources of the process to which they belong, including file descriptors, signal processing, etc. .

- Scheduling and switching: Process is the basic unit for system scheduling and resource allocation, and switching between processes is relatively expensive. Threads are executed within the process, and the cost of thread switching is relatively small.

- Communication and synchronization: Communication methods between processes include pipes, message queues, shared memory, etc. Inter-process communication is relatively complex. The memory space of the process is shared between threads, and communication and synchronization can be achieved by directly reading and writing shared data.

What resources does the thread have and what is stored in the stack?

Threads have some specific resources in the operating system, including:

- Thread Control Block (TCB): used to save thread status information, such as program counter (Program Counter, PC), register value, thread ID, thread priority, etc.

- Stack: Each thread has its own stack space, which is used to save local variables of function calls, function return addresses, and other temporary data. The stack is private to the thread, and the stacks between different threads are independent of each other.

- Registers: Threads will use registers during execution, including general-purpose registers (such as EAX, EBX, etc.), program counter (PC), etc. The register saves the current execution status of the thread.

- Shared resources: Threads can share resources of the process to which they belong, such as open files, signal handlers, etc. These resources can be shared and accessed between threads.

In the stack, the following contents are mainly stored:

- Local variables of function calls: When a function is called, its local variables are saved on the stack. These local variables will be destroyed after the function execution ends.

- Return address of the function: After a function is executed, it needs to return to the address where the function was called. The return address will be saved on the stack so that the function can return correctly after execution.

- Temporary data during function calling: During function execution, some temporary data may need to be saved, such as function parameters, intermediate calculation results, etc. These data will be saved on the stack.

What about pushing on the stack when a function is called?

When the function is called, the following stack operations are performed:

- Saving the return address: Before the function call, the calling instruction will push the address of the next instruction (that is, the address that needs to continue execution after the function call) onto the stack so that the function can correctly return to the call point after the function is executed.

- Save the caller's stack frame pointer: Before the function call, the calling instruction will push the current stack frame pointer (that is, the caller's stack pointer) onto the stack so that the caller's execution state can be restored after the function is executed.

- Passing parameters: When a function is called, the parameter values are pushed onto the stack in turn. These parameter values can be accessed through the stack inside the function.

- Allocate local variable space: When a function is called, space will be allocated for local variables, and these local variables will be saved on the stack. The stack pointer is moved accordingly to accommodate the new local variable space.

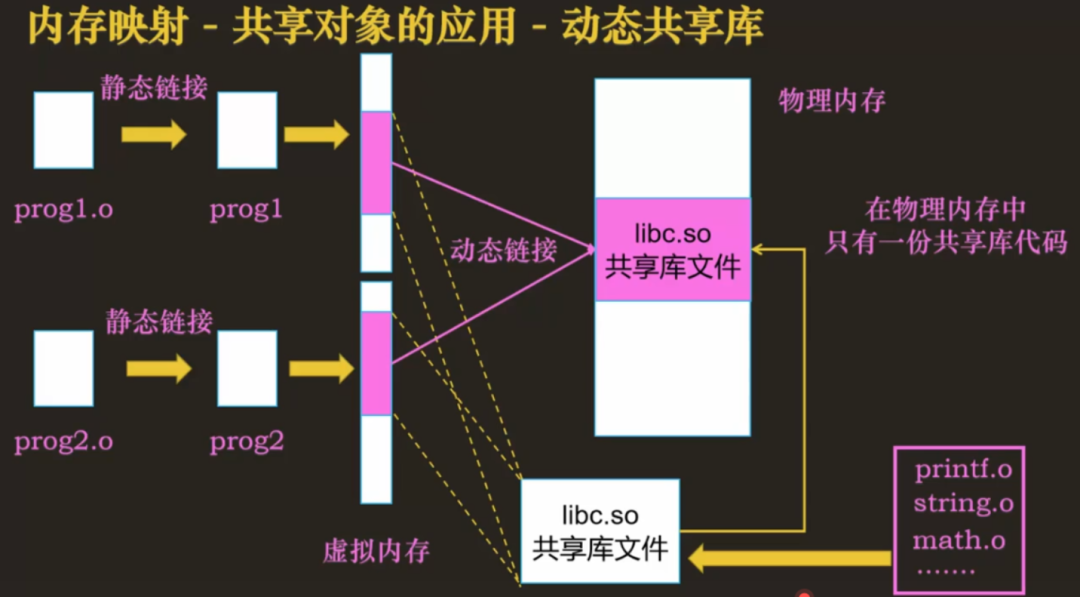

What is the difference between static link library and dynamic link library?

- Linking method: The static link library will be completely copied to the executable file when compiling and linking, and becomes part of the executable file; while the dynamic link library will only include a reference to the library in the executable file when compiling and linking. In fact, The library files are dynamically loaded by the operating system at run time.

- File size: Static link libraries will increase the size of the executable file because the library code is completely copied into the executable file; dynamic link libraries will not increase the size of the executable file because the library code is will be loaded.

- Memory usage: The static link library will be completely loaded into the memory at runtime, occupying a fixed memory space; while the dynamic link library will be loaded at runtime and can be shared between multiple processes to reduce memory usage.

- Scalability: Dynamic link libraries are more scalable and can replace or add new library files without modifying the executable file, while static link libraries need to be recompiled and linked.

How is the dynamic link library loaded into memory?

By using mmap to map the library directly into the address space of each process, although each process thinks that the library is loaded in its own address space, in fact there is only one copy of the library in the memory. mmap is so magical and dynamic. The link library is linked.

picture

picture

An introduction to virtual memory

- First, virtual memory can make the process use more running memory than the physical memory size. Because the program runs in compliance with the principle of locality, the CPU has an obvious tendency to repeatedly access the memory. For those memories that are not frequently used, we can Swap it out outside of physical memory, such as the swap area on the hard disk.

- Second, since each process has its own page table, the virtual memory space of each process is independent of each other. Processes have no way to access the page tables of other processes, so these page tables are private, which solves the problem of address conflicts between multiple processes.

- Third, in addition to the physical address, the page table entries in the page table also have some bits that mark attributes, such as controlling the read and write permissions of a page, marking whether the page exists, etc. The operating system provides better security when it comes to memory access.

what is interrupt

In an operating system, an interrupt refers to an event triggered by hardware or software that temporarily suspends the currently executing program and switches to the execution of the interrupt-related handler. Interrupts can be internal, such as a division error or an out-of-bounds access, or external, such as an input/output request from a hardware device or a clock interrupt.

The role of interrupts is to provide a mechanism to process and respond to various events, such as processing input/output requests from hardware devices, handling exceptions, clock scheduling, etc. When an interrupt occurs, the operating system determines the interrupt handler to be executed based on the interrupt type, and resumes the original program execution after handling the interrupt.

An interrupt handler can perform a series of operations, such as saving the context of the current process, handling interrupt events, communicating with the device, scheduling other processes, etc. By using interrupts, the operating system can achieve multitasking, respond to external events in real time, and improve system reliability and stability.

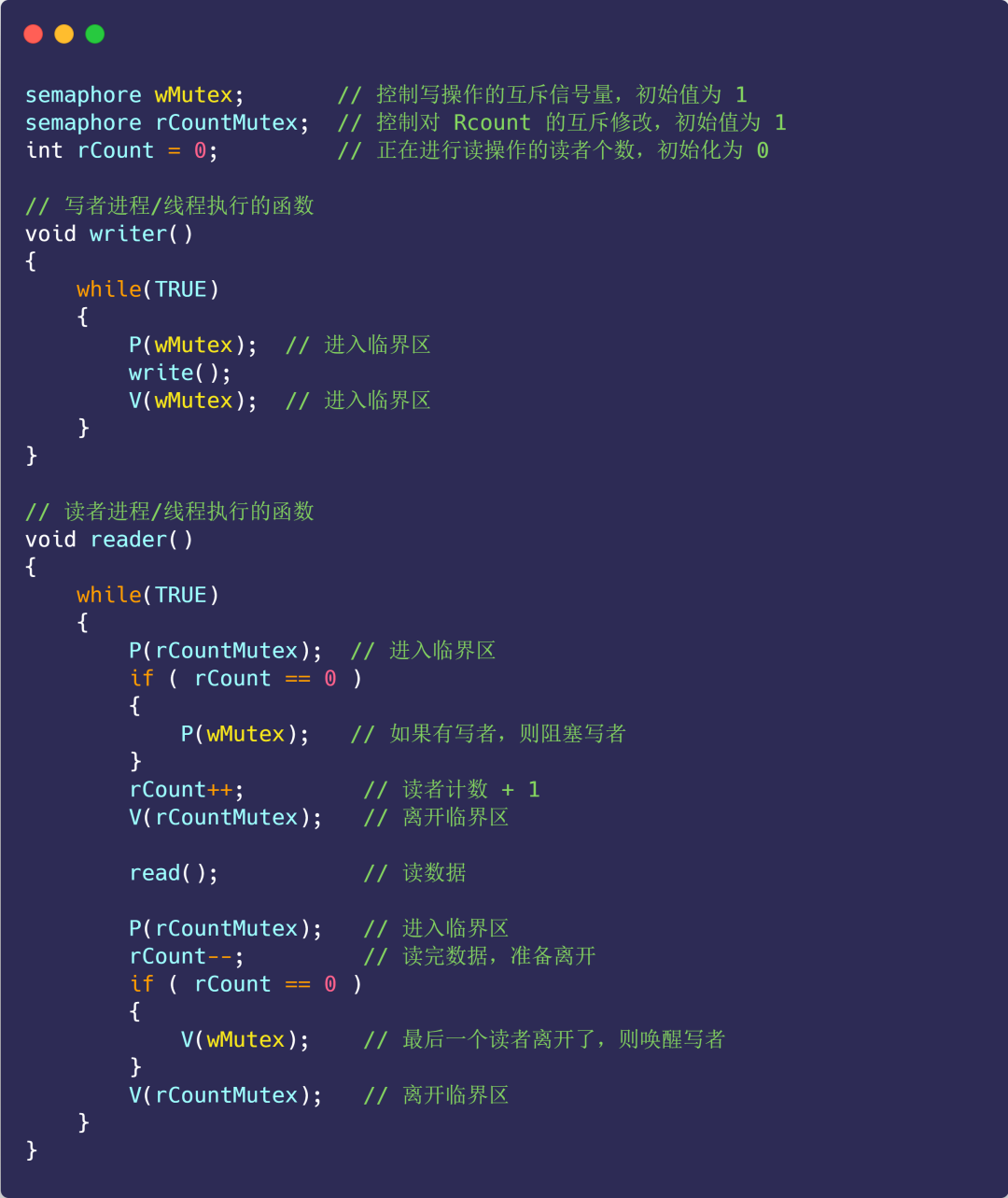

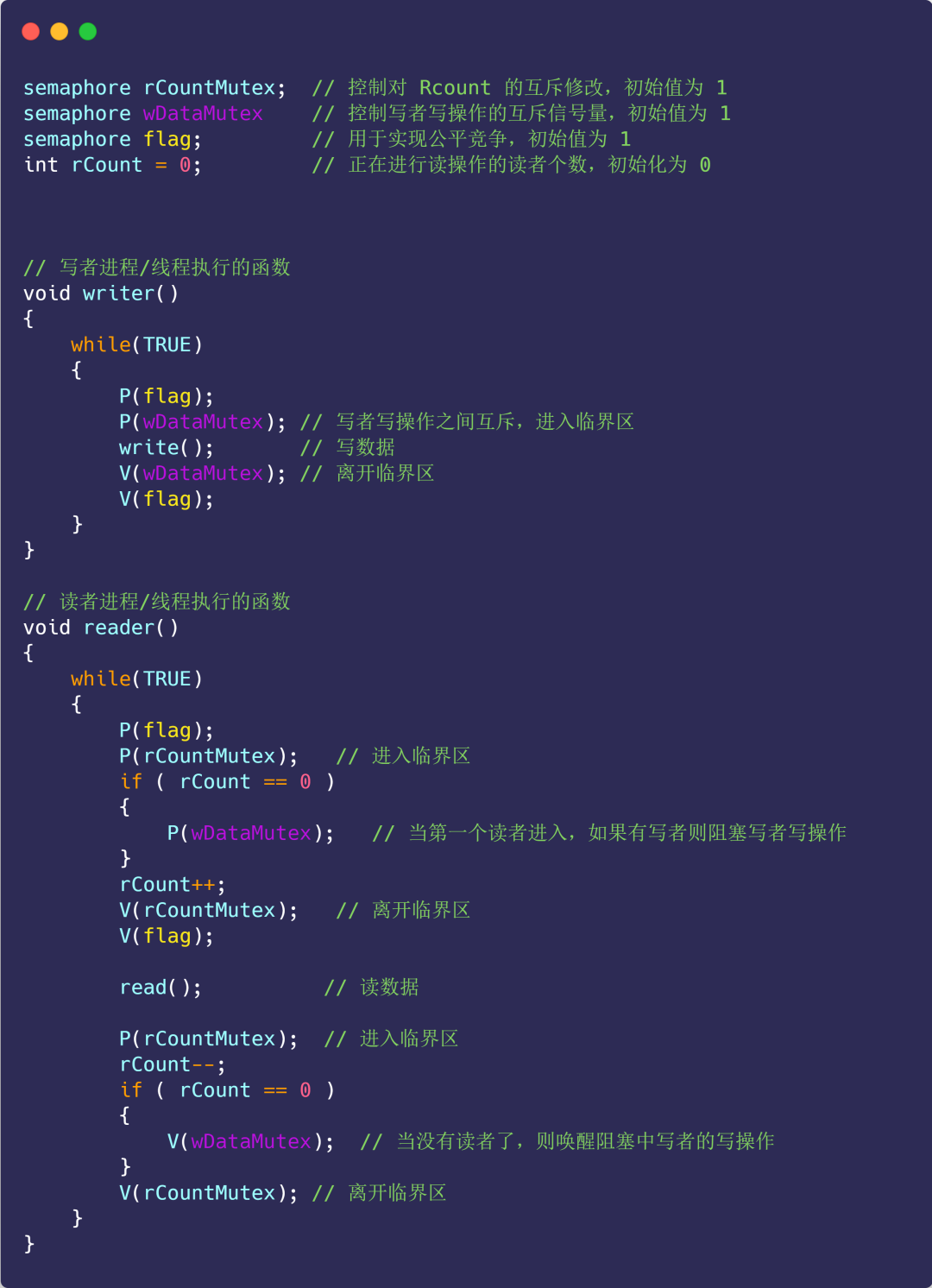

Operating system lock, implement a read-write lock by yourself

Readers will only read the data and will not modify the data, while writers can both read and modify the data.

Reader-Writer Problem Description:

- "Read-read" allows: multiple readers are allowed to read at the same time

- "Read-Write" are mutually exclusive: readers can read when there is no writer, and writers can write when there are no readers.

- "Write-Write" is mutually exclusive: the writer can write only when there are no other writers.

Next, several solutions are proposed for analysis.

Option One

Use semaphore to try to solve:

- Semaphore wMutex: a mutually exclusive semaphore that controls write operations, with an initial value of 1;

- Reader count rCount: The number of readers performing read operations, initialized to 0;

- Semaphore rCountMutex: controls the mutually exclusive modification of the rCount reader counter, with an initial value of 1;

Next let’s look at the implementation of the code:

picture

picture

The above implementation is a reader-first strategy, because as long as a reader is reading, subsequent readers can enter directly. If readers continue to enter, the writer will be in a hungry state.

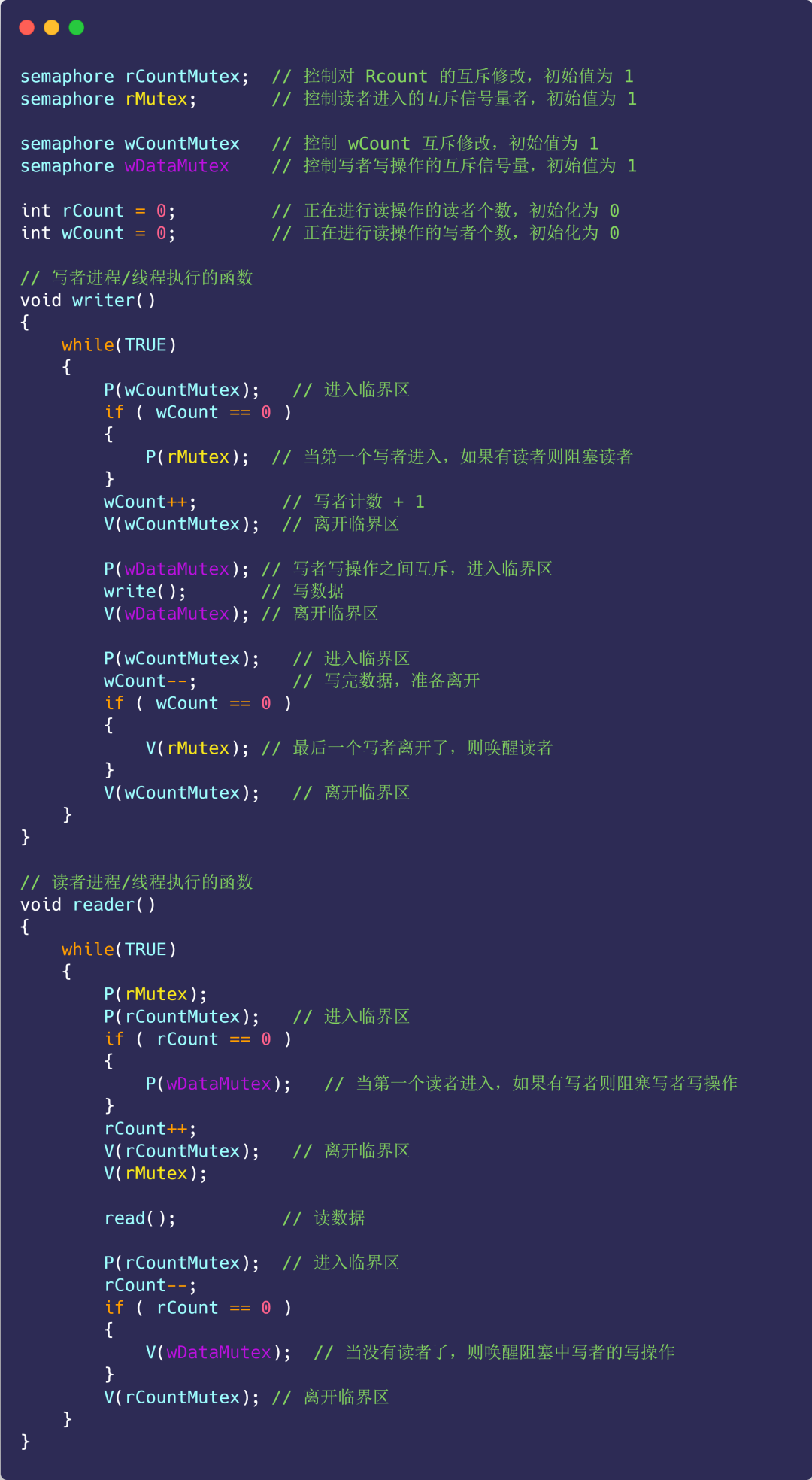

Option II

Since there is a reader-first strategy, there is also a writer-first strategy:

- As long as there is a writer ready to write, the writer should perform the write operation as soon as possible, and subsequent readers must block;

- If a writer keeps writing, readers are hungry;

Based on option 1, add the following variables:

- Semaphore rMutex: a mutually exclusive semaphore that controls reader entry, with an initial value of 1;

- Semaphore wDataMutex: a mutually exclusive semaphore that controls the writer's writing operation, with an initial value of 1;

- Writer count wCount: records the number of writers, the initial value is 0;

- Semaphore wCountMutex: controls the mutually exclusive modification of wCount, with an initial value of 1;

The specific implementation is as follows:

Note that the role of rMutex here is that multiple readers begin to read data, and they all enter the reader queue. At this time, a writer comes. After executing P(rMutex), subsequent readers cannot enter again because they are blocked on rMutex. Reader queue, and when writers arrive, they can all enter the writer queue, thus ensuring that writers have priority.

At the same time, after the first writer executes P(rMutex), he cannot start writing immediately. He must wait until all readers who have entered the reader queue have completed the reading operation, and then wake up the writer's writing operation through V(wDataMutex).

third solution

Since both the reader-first strategy and the writer-first strategy will cause starvation, let's implement a fair strategy.

Fair strategy:

- The priority is the same;

- Writers and readers have mutually exclusive access;

- Only one writer can access the critical section;

- Multiple readers can access critical resources simultaneously;

Specific code implementation:

picture

picture

After reading the code, I wonder if you have any questions, why does adding a semaphore flag achieve fair competition?

Comparing the reader priority strategy of Scheme 1, we can find that in reader priority, as long as a reader arrives later, the reader can enter the reader queue, and the writer must wait until no readers arrive.

The arrival of no readers will cause the reader queue to be empty, that is, rCount==0. Only then can the writer enter the critical section to perform writing operations.

The function of the flag here is to prevent this special permission of the reader (the special permission is that as long as the reader arrives, he can enter the reader queue).

For example: some readers start to read data, and they all enter the reader queue. At this time, a writer comes and performs the P(falg) operation, so that subsequent readers are blocked on the flag and cannot enter the reader queue. This will cause The reader queue gradually becomes empty, that is, rCount decreases to 0.

This writer cannot start writing immediately (because the reader queue is not empty at this time), and will be blocked on the semaphore wDataMutex. After all readers in the reader queue have finished reading, the last reader process executes V(wDataMutex) to wake up just now The writer continues to write.

Algorithm questions

- Binary tree level order traversal constructs a binary tree test

Kuaishou Ermian

The concept of pointer and reference value passing

- Passing by value means copying the value of a parameter and passing it to a function or method for operation. In value transfer, modification of parameters by a function or method does not affect the original variable value.

- Pointer reference refers to passing the memory address of a parameter to a function or method, so that the function or method can directly access and modify the value of the original variable. In a pointer reference, modifications to parameters by a function or method are directly reflected in the original variable.

Why does the forced conversion of int double string cause precision loss?

- Integer to floating point: Integer types are represented exactly, while floating point types are represented approximately, with a fixed number of significant digits. When converting an integer to a floating point number, a loss of precision can occur because floating point numbers cannot accurately represent all the digits of an integer.

- Floating point to integer: The floating point type has a fractional part and an exponent part, while the integer type can only represent integer values. When converting a floating point number to an integer, the fractional part is discarded, potentially resulting in a loss of precision.

- String to numeric type: String represents data in text form, while numeric type represents data in numeric form. When converting a string to a numeric type, if the string cannot be parsed into a valid numeric value, or if the value represented by the string exceeds the range of the target type, precision may be lost or erroneous results may be produced.

What is void*?

void* is a general pointer type, known as "untyped pointer". It can be used to represent a pointer to any type because void* pointers do not specify a specific data type.

Since void* is untyped, it cannot directly perform dereference operations, nor can it perform pointer operations. When using void* pointers, they need to be converted to specific pointer types before operations can be performed.

Void* pointers are often used when common operations between different types are required, such as passing pointer parameters of any type in a function or used in dynamic memory allocation.

How to convert malloc parameter list void* into int*?

You can use type conversion to convert a void* pointer into an int* pointer. The following is an example code that converts a void* pointer to an int* pointer:

void* voidPtr = malloc(sizeof(int)); // 分配内存并返回void*指针

int* intPtr = (int*)voidPtr; // 将void*指针转化为int*指针

// 现在可以通过intPtr指针访问int类型的数据

*intPtr = 42;- 1.

- 2.

- 3.

- 4.

- 5.

In the above example, the malloc function is used to allocate the memory required to store an int type data, and a void* pointer is returned. Then, assign the void* pointer to the intPtr variable by converting it to an int* pointer. Now, data of type int can be accessed and manipulated through intPtr pointer.

Algorithm questions

- Find a number from an array that is larger than the left side and smaller than the right side

Kuaishou three sides

- n threads print numbers in order

- Design a small snake game, snake data structure, snake body update and collision detection

I didn’t ask about the three questions and the eight-part question. I came directly to two scenario code questions, which focused more on practical operations. It was too difficult.