Exploration on the application of metropolitan area RDMA in computing power network in home broadband network

1. Dilemmas of traditional TCP/IP network transmission

1.1 The traditional Ethernet end-to-end transmission system overhead is too high

When describing the relationship between software and hardware in the communication process, we usually divide the model into user space, kernel, and hardware.

User space and the kernel actually share the same physical memory, but for security reasons Linux divides memory into user space and kernel space. User-level code has no permission to read or modify kernel-space memory; it can only enter kernel mode through system calls. The memory management mechanism of Linux is relatively complex.

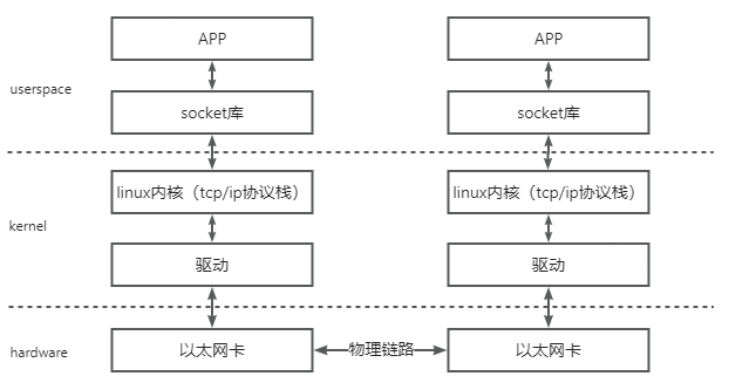

A typical communication process based on traditional Ethernet can be layered as shown in the figure.

The data flow of this model is roughly as shown in the figure above. The data first has to be copied from user space to kernel space. This copy is performed by the CPU, which copies the data block from user space into the socket buffer in kernel space. The software TCP/IP protocol stack in the kernel then adds the headers and checksums of each layer. Finally, the network card copies the data out of memory by DMA and sends it to the peer network card over the physical link.

The receiving side does exactly the reverse: the hardware copies the packet into memory by DMA, the CPU parses and verifies the packet layer by layer, and the data is finally copied to user space. The key point is that the CPU is involved in the entire data path: copying data between user space and kernel space, assembling and parsing packets, and so on. When the amount of data is large, this places a heavy burden on the CPU.

In a traditional network, "node A sends a message to node B" actually means "a piece of data in the memory of node A is moved to the memory of node B over the network link". On both the sending and the receiving side, this process requires the CPU to direct and control it, including network card control, interrupt handling, and message encapsulation and parsing.

In the figure above, the data in the user-space memory of the node on the left has to be copied by the CPU into a kernel-space buffer before the network card can access it. Along the way the data passes through the software TCP/IP protocol stack, which adds the headers and check codes of each layer, such as the TCP header and the IP header. The network card then copies the data from the kernel into its own internal buffer by DMA, processes it, and sends it to the peer over the physical link.

After the peer receives the data, it performs the reverse process: the data is copied by DMA from the network card's internal storage into a kernel-space buffer, the CPU parses it through the TCP/IP protocol stack, extracts the payload, and copies it to user space. Even with DMA, therefore, this process still depends heavily on the CPU.
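The send and receive path described above can be illustrated with a minimal sketch over the loopback interface. The helper names `receive_all` and `send_over_loopback` are hypothetical and chosen only for this illustration; the comments mark where the CPU-driven copies between user space and the kernel socket buffer take place.

```python
import socket
import threading


def receive_all(server_sock, received):
    """Accept one connection and read until the peer closes it."""
    conn, _ = server_sock.accept()
    with conn:
        chunks = []
        while True:
            # System call: the kernel copies bytes from its socket buffer
            # into a user-space bytes object (CPU copy, kernel -> user).
            data = conn.recv(4096)
            if not data:
                break
            chunks.append(data)
    received.append(b"".join(chunks))


def send_over_loopback(payload: bytes) -> bytes:
    """Send payload through the kernel TCP/IP stack and return what arrived."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server.listen(1)
    received = []
    worker = threading.Thread(target=receive_all, args=(server, received))
    worker.start()
    with socket.create_connection(server.getsockname()) as client:
        # System call(s): the kernel copies the user buffer into the socket
        # buffer (CPU copy, user -> kernel) and then builds TCP/IP headers.
        client.sendall(payload)
    worker.join()
    server.close()
    return received[0]


if __name__ == "__main__":
    echoed = send_over_loopback(b"hello" * 1000)
    print(len(echoed), "bytes crossed the user/kernel boundary twice")
```

Every byte here crosses the user/kernel boundary once on each side, which is the CPU work that the rest of the article aims to remove.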

1.2 The TCP protocol itself has natural shortcomings in long fat network (LFN) scenarios

- Two major characteristics of a long fat pipe for TCP

① The transmission delay (sending delay) is very small: packets are sent and received very quickly, so a large amount of data can be put onto the network in a very short time.

② The propagation delay is very large: once a packet has been put onto the network, it takes a long time (compared with the sending delay) to reach the receiving end.

- Impact of LFN on TCP performance

① The bandwidth-delay product of an LFN is very large (data is sent very quickly but takes a long time to propagate to the other end), so a large number of packets are in flight on the path at any moment. TCP flow control stops sending when the window reaches 0. However, the window size field in the original TCP header is 16 bits, so the maximum window is 65535 bytes, which limits the unacknowledged data a sender can have outstanding to 65535 bytes, or 65535*8/1024/1024 ≈ 0.5 Mbit. Assuming the sending speed is fast enough, in a network with a propagation delay of 100 milliseconds the sender has already sent the last bit of the window before the first bit arrives at the receiver; the window then becomes 0 and sending stops. The sender has to wait at least 100 milliseconds for the window advertisement sent back by the receiver before the window opens and it can continue sending. At most 0.5 Mbit can therefore be sent per 100 milliseconds, which means that only about 5 Mbps of bandwidth can be used and the network cannot be fully utilized. The window scale option was proposed for this reason, to advertise a larger window.
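The arithmetic behind the 5 Mbps ceiling can be checked with a short sketch. It assumes the sender can emit one full window per 100 ms wait, as in the example above; the function names are hypothetical, and the maximum shift of 14 is the limit RFC 7323 sets for the window scale option.

```python
MAX_UNSCALED_WINDOW = 65535   # bytes, from the 16-bit window field
MAX_WINDOW_SHIFT = 14         # largest window scale shift allowed by RFC 7323


def window_limited_throughput_bps(window_bytes: int, wait_seconds: float) -> float:
    """Upper bound on throughput when one window is sent per wait period."""
    return window_bytes * 8 / wait_seconds


def bandwidth_delay_product_bytes(link_bps: float, wait_seconds: float) -> float:
    """Bytes that must be in flight to keep the link busy for the whole wait."""
    return link_bps * wait_seconds / 8


if __name__ == "__main__":
    wait = 0.100  # 100 ms, the delay used in the text
    limit = window_limited_throughput_bps(MAX_UNSCALED_WINDOW, wait)
    print(f"unscaled window ceiling: {limit / 1e6:.2f} Mbps")

    needed = bandwidth_delay_product_bytes(1e9, wait)
    print(f"window needed to fill 1 Gbps: {needed / 1e6:.1f} MB")

    scaled = MAX_UNSCALED_WINDOW << MAX_WINDOW_SHIFT
    print(f"largest scaled window: {scaled} bytes")
```

Under these assumptions, filling a 1 Gbps path with a 100 ms wait needs roughly 12.5 MB in flight, far beyond the 65535-byte unscaled window but within reach of a scaled one.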

② The high latency of LFN can drain the pipe

Under TCP congestion control, a lost packet pushes the connection into congestion handling. Even if it enters fast recovery because of duplicate ACKs, the congestion window is halved. If it enters slow start because of a timeout, the congestion window becomes 1. In either case the amount of data the sender is allowed to send is greatly reduced, which drains the pipe and causes throughput to drop sharply.
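To give a feel for how long the pipe stays drained, here is a minimal sketch of window regrowth. It assumes a simplified Reno-style model that is not taken from the article: the window is counted in segments, slow start doubles it each RTT up to `ssthresh`, congestion avoidance adds one segment per RTT, and no further loss occurs. The function name `rtts_to_recover` is hypothetical.

```python
def rtts_to_recover(target_cwnd: int, start_cwnd: int, ssthresh: int) -> int:
    """Count loss-free RTTs until the congestion window is back at target_cwnd."""
    cwnd = start_cwnd
    rtts = 0
    while cwnd < target_cwnd:
        if cwnd < ssthresh:
            # Slow start: double per RTT, but do not overshoot ssthresh.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: one extra segment per RTT.
            cwnd += 1
        rtts += 1
    return rtts


if __name__ == "__main__":
    full_window = 1000   # segments in flight before the loss (illustrative)
    rtt = 0.100          # seconds, matching the 100 ms example

    after_fast_recovery = rtts_to_recover(full_window, full_window // 2, full_window // 2)
    after_timeout = rtts_to_recover(full_window, 1, full_window // 2)

    print(f"fast recovery: {after_fast_recovery} RTTs = {after_fast_recovery * rtt:.1f} s")
    print(f"timeout:       {after_timeout} RTTs = {after_timeout * rtt:.1f} s")
```

In this model the recovery time is proportional to the RTT, so the same single loss costs far more on a long path than on a short one.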

③ LFN is not conducive to TCP RTT measurement

In the TCP protocol, each connection has only one RTT timer, and only one segment at a time is used for RTT measurement. TCP cannot start the next RTT measurement until the data that started the current timing has been ACKed. On a long fat pipe the propagation delay is very large, so each RTT measurement cycle is very long and samples are taken only rarely.

④ LFN can cause sequence number wraparound, so the receiving end cannot order segments

Sending on a long fat pipe is very fast (small sending delay). TCP uses a 32-bit unsigned sequence number to identify each byte of data, and defines the maximum segment lifetime (MSL) to limit how long a segment can survive in the network. However, because the sequence number space is limited, sequence numbers are reused after 4294967296 bytes have been transmitted. If the network is so fast that the sequence numbers wrap around in less than one MSL, two different segments with the same sequence number can exist in the network at the same time, and the receiver cannot distinguish their order. On a gigabit network (1000 Mb/s), it takes only about 34 seconds to transmit 4294967296 bytes.
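The 34-second figure can be reproduced with a short calculation. The sketch below assumes the link is driven at full line rate and uses the 2-minute MSL that RFC 793 specifies; the function names are hypothetical.

```python
SEQ_SPACE_BYTES = 2 ** 32   # 4294967296 bytes covered by a 32-bit sequence number
MSL_SECONDS = 120           # maximum segment lifetime given in RFC 793 (2 minutes)


def seq_wrap_seconds(link_bps: float) -> float:
    """Time to send the whole sequence space at the given line rate."""
    return SEQ_SPACE_BYTES * 8 / link_bps


def wraps_within_msl(link_bps: float, msl_seconds: float = MSL_SECONDS) -> bool:
    """True if sequence numbers can be reused while old segments may still be alive."""
    return seq_wrap_seconds(link_bps) < msl_seconds


if __name__ == "__main__":
    for name, rate in [("100 Mb/s", 100e6), ("1 Gb/s", 1e9), ("10 Gb/s", 10e9)]:
        print(f"{name}: wraps in {seq_wrap_seconds(rate):.1f} s, "
              f"within one MSL: {wraps_within_msl(rate)}")
```

At 100 Mb/s the wrap takes longer than one MSL, while at 1 Gb/s and above it does not, which is why the problem only appears on fast links.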

2. The overall framework of RDMA

2.1 Basic principles

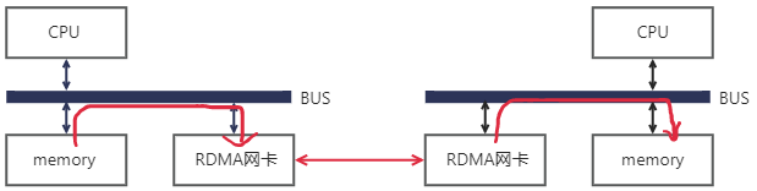

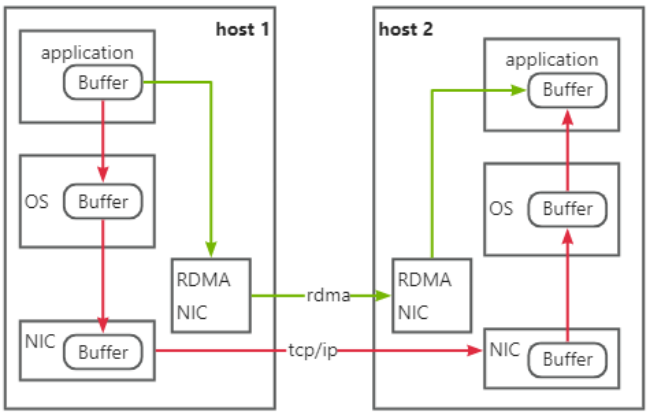

RDMA (Remote Direct Memory Access) lets the local node "directly" access the memory of a remote node. "Direct" here means that remote memory can be read and written much like local memory, bypassing the complex TCP/IP protocol stack of traditional Ethernet. The remote end is not aware of the access, and most of the read and write work is done by hardware rather than software. With RDMA, the process can be represented by the following schematic diagram:

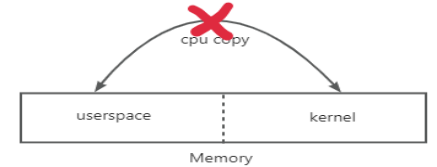

The goal is the same, copying a piece of data from local memory to remote memory, but with RDMA the CPUs at both ends hardly take part in the data transfer (they participate only in the control plane). The local network card copies the data from user-space memory into its internal storage by DMA, and the hardware then assembles the headers of each layer and sends the packet to the peer network card over the physical link. After the peer's RDMA network card receives the data, it strips each layer's headers and check codes and copies the data directly into user-space memory by DMA. In other words, RDMA moves server application data directly from memory to the smart network card (which implements the RDMA protocol in hardware), and the network card hardware performs the RDMA packet encapsulation, freeing up the operating system and the CPU.

2.2 Core advantages

1) Zero Copy: Data does not have to be copied into the operating system kernel and have its packet headers processed there, so transmission latency is significantly reduced.

2) Kernel Bypass: The data path does not involve the operating system kernel and contains no cumbersome header processing logic, which both reduces latency and saves a large amount of CPU resources.

3) Protocol Offload: RDMA communication can read and write memory without the remote node's CPU taking part, because message encapsulation and parsing are moved into hardware. In traditional Ethernet communication, by contrast, the CPUs of both parties must parse the messages at every layer. If the amount of data is large and interactions are frequent, this is a considerable cost for the CPU, and the computing resources it consumes could have been used for more valuable work.

Compared with traditional Ethernet, RDMA achieves both higher bandwidth and lower latency, so it is useful in bandwidth-sensitive scenarios such as the exchange of massive data sets and in latency-sensitive scenarios such as data synchronization between multiple computing nodes.

2.3 Basic classification of RDMA networks

Currently, there are roughly three types of RDMA networks: InfiniBand, RoCE (RDMA over Converged Ethernet) and iWARP (Internet Wide Area RDMA Protocol, that is, RDMA over TCP). RDMA was originally exclusive to the InfiniBand network architecture, which ensures reliable transmission at the hardware level. RoCE and iWARP are both Ethernet-based RDMA technologies.

1) InfiniBand

InfiniBand is a network designed specifically for RDMA. It was proposed by the IBTA (InfiniBand Trade Association) in 2000 and specifies a complete set of layers from the link layer to the transport layer (not the transport layer of the traditional OSI seven-layer model, but a layer located above it). It mainly uses cut-through forwarding to reduce forwarding delay and a credit-based flow control mechanism to ensure no packet loss. However, IB has an unavoidable cost disadvantage: because it is not compatible with existing Ethernet, enterprises that want to deploy it must purchase new supporting switching equipment in addition to IB-capable network cards.

2) RoCE

There are two versions of RoCE. RoCEv1 is implemented on the Ethernet link layer; its network layer still uses the IB specification, so it can only be transmitted within an L2 domain. RoCEv2 uses UDP+IP as the network layer and carries RDMA over UDP, so its packets can be routed and it can be deployed in Layer 3 networks.
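The difference between the two versions can be made concrete by listing the header stack each one places in front of the RDMA payload. The sketch below is illustrative only: it assumes untagged Ethernet frames, IPv4 without options for RoCEv2, a bare Base Transport Header with no extended headers, and it leaves out the Ethernet FCS. The names `ROCE_V1`, `ROCE_V2_IPV4`, `header_overhead` and `is_ip_routable` are hypothetical.

```python
# (layer name, size in bytes) in on-the-wire order, before the RDMA payload.
ROCE_V1 = [
    ("Ethernet (EtherType 0x8915)", 14),
    ("IB GRH", 40),          # InfiniBand Global Route Header as network layer
    ("IB BTH", 12),          # InfiniBand Base Transport Header
]

ROCE_V2_IPV4 = [
    ("Ethernet (EtherType 0x0800)", 14),
    ("IPv4", 20),
    ("UDP (dst port 4791)", 8),
    ("IB BTH", 12),
]

ICRC_BYTES = 4  # invariant CRC appended after the payload in both versions


def header_overhead(layers) -> int:
    """Bytes added around the payload, excluding the Ethernet FCS."""
    return sum(size for _, size in layers) + ICRC_BYTES


def is_ip_routable(layers) -> bool:
    """A stack can cross L3 routers only if it carries an IP header."""
    return any(name.startswith(("IPv4", "IPv6")) for name, _ in layers)


if __name__ == "__main__":
    for label, stack in [("RoCEv1", ROCE_V1), ("RoCEv2", ROCE_V2_IPV4)]:
        print(f"{label}: {header_overhead(stack)} bytes overhead, "
              f"IP routable: {is_ip_routable(stack)}")
```

Only the RoCEv2 stack contains an IP header, which is what allows ordinary routers to forward it between subnets.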

RoCE can be considered a "low-cost solution" for IB. Deploying a RoCE network requires dedicated RDMA-capable smart network cards but no dedicated switches or routers; ordinary Ethernet devices are sufficient, provided they support technologies such as ECN and PFC to reduce the packet loss rate. Its network construction cost is the lowest of the three RDMA network types.

3) iWARP

The transport layer is the iWARP protocol. iWARP implements RDMA on top of the TCP layer of the standard Ethernet TCP/IP stack and supports L2/L3 transmission. In large-scale networks, the many TCP connections consume a lot of CPU, so it is rarely deployed.

iWARP only requires the network card to support RDMA and does not require dedicated switches and routers. The network construction cost is between InfiniBand and RoCE.

2.4 Implementation comparison

InfiniBand technology is advanced, but its price is high, and its application is largely limited to high-performance computing (HPC). With the emergence of RoCE and iWARP, the cost of using RDMA has fallen, which has promoted the adoption of RDMA technology.

Using these three types of RDMA networks in high-performance storage and computing data centers can significantly reduce data transmission delays and provide higher CPU resource availability for applications.

Among them, InfiniBand brings ultimate performance to data centers, with transmission latency as low as 100 nanoseconds, an order of magnitude lower than that of Ethernet equipment.

RoCE and iWARP bring very high cost performance to data centers. They carry RDMA over Ethernet and take full advantage of RDMA's high performance and low CPU usage, while keeping network construction costs low.

RoCE, which is based on UDP, performs better than iWARP, which is based on TCP. Combined with the flow control technology of lossless Ethernet, it addresses RDMA's sensitivity to packet loss. RoCE networks are already widely used in high-performance data centers across many industries.

3. Exploration on the application of RDMA in home broadband networks

Under the comprehensive promotion of national strategies such as "Network Power, Digital China, and Smart Society", the digital, networked, and intelligent digital home has become the embodiment of the smart city concept at the family level. In the "14th Five-Year Plan" and the 2035 Vision Goals, the digital home is positioned as an important part of building a "new picture of a better digital life". With the support of the new generation of information technology, the digital home is steadily evolving into the smart home, completing the transformation from "digital" to "smart". China's smart home market is expanding year by year, and China has become the world's largest consumer market for smart homes, accounting for about 50% to 60% of the global market (data source: CSHIA, Aimee Data, National Bureau of Statistics). According to CCID Consulting's research, China's smart home market will reach 1.57 trillion yuan in 2030, with a compound annual growth rate (CAGR) of 14.6% from 2021 to 2030.

Accompanying the rapid development of the home broadband market is:

① The contradiction between the undifferentiated, best-effort service approach of home broadband and the need for business differentiation and deterministic network quality

Current broadband access does not differentiate between the network connections of different services and serves all of them on a best-effort basis. When congestion occurs, all services have the same priority and are handled with the same policy. However, services differ in their network quality requirements and in their sensitivity to delay and packet loss. Some delay-sensitive services, such as games and cloud computers, need deterministic network guarantees to ensure user experience; when congestion and packet loss occur, their user experience declines sharply, so such services require a different handling policy during congestion.

② The contradiction between bandwidth improvement and degradation of experience in long and fat pipeline scenarios

According to statistics from the Ministry of Industry and Information Technology, more than 94% of users nationwide have broadband with speeds above 100Mbps. However, users still have problems with lags and slow download speeds when accessing long-distance content. The reason is not insufficient access bandwidth, but the natural shortcomings of the underlying TCP protocol congestion control algorithm in the long fat pipe (LFN) scenario. TCP is a protocol from decades ago and can no longer adapt to the current network status and application needs. New protocols and algorithms are urgently needed to ensure business experience in long and fat pipeline scenarios.

To sum up, from the perspective of industry trends, amid the wave of computing power upgrades in home broadband networks, RDMA can achieve a deeper integration of computing and network than TCP. Data is transferred directly from the memory of one computer to that of another without the intervention of either operating system and without time-consuming processing by the processor, ultimately achieving high bandwidth, low latency and low resource usage.