An article to help you understand what CDN is

When we open the browser to access the page, it is actually a process of continuously sending HTTP requests. The client sends HTTP requests and the server returns a response.

The client here can be a browser or code written in Python, while the server is an application written using a Web framework.

Speaking of which, here is a related point. Many Python developers cannot tell the difference between WSGI, uwsgi, uWSGI, and Nginx, so let's summarize them.

WSGI

WSGI stands for Web Server Gateway Interface. It is not a server, a Python module, a framework, or any other piece of software. It is only a specification that describes how a Web server communicates with Web applications (programs written using Web frameworks).

Services written based on Web frameworks need to run on the Web server. Although these frameworks themselves come with a small Web server, it is only used for development and testing.
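To make the specification concrete, here is a minimal sketch of the two sides WSGI describes. The `application` callable is what a Web framework ultimately provides, and `call_app` is a hypothetical helper (not part of any library) that plays the role of the Web server by building the `environ` dictionary and collecting the response.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """A minimal WSGI application: a callable taking (environ, start_response)."""
    body = f"Hello from {environ['PATH_INFO']}".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]  # WSGI requires an iterable of bytes


def call_app(app, path):
    """Hypothetical stand-in for the Web server side of the interface."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the other required WSGI keys
    captured = {}

    def start_response(status, response_headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = response_headers

    body = b"".join(app(environ, start_response))
    return captured["status"], body
```

For local experiments, the same `application` can be served with the development server in the standard library's `wsgiref.simple_server`. In production, a server such as uWSGI takes over the role of `call_app`.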

uWSGI

uWSGI is a Web server that implements WSGI, uwsgi, HTTP and other protocols. Therefore, if we deploy services written using the Web framework on uWSGI, we can directly provide external services.

Nginx

It is also a Web server, but it provides more functions than uWSGI, such as reverse proxying, load balancing, caching static resources, and better handling of HTTP requests, which uWSGI either lacks or is not good at.

Therefore, after deploying the Web service on uWSGI, another layer of Nginx is usually placed in front of it. At this point uWSGI no longer exposes an HTTP service; it exposes a TCP socket that speaks the uwsgi protocol instead. Since uWSGI now talks only to Nginx, this is faster than HTTP, and Nginx is the one that exposes the HTTP service to the outside world.

uwsgi

uwsgi is the protocol used for communication between Nginx and uWSGI. This is what we mean when we say that uWSGI is connected to Nginx.

After Nginx receives a user request, if the request is for static resources such as images, it can return directly. If a dynamic resource is requested, the request will be forwarded to uWSGI, and then uWSGI will call the corresponding Web service for processing. After the processing is completed, the result will be handed over to Nginx, and Nginx will return it to the client.

uWSGI and Nginx can interact because they both support the uwsgi protocol. Nginx has a module for this, HttpUwsgiModule (named ngx_http_uwsgi_module in the current Nginx documentation), which is used to talk to the uWSGI server.

Back to the topic, we know that HTTP is a request-response model. When using a browser to open a page, the browser and the target server are the two endpoints of the HTTP protocol.

So the question is, must the request sent by the browser go directly to the specified target server? Can it stop somewhere else first? The answer is yes, and that place is the proxy.

In the HTTP protocol, a proxy is a link between the requester and the responder. As a transfer station, it can forward both the client's request and the server's response. There are many types of proxies; the common ones are:

- Anonymous proxy: The proxied machine is completely hidden, and all the outside world sees is the proxy server.

- Transparent proxy: As the name suggests, it is transparent and open during the transmission process. The outside world knows both the proxy and the client.

- Forward proxy: Close to the client and sends requests to the server on behalf of the client.

- Reverse proxy: Close to the server and returns responses to the client on behalf of the server.

Regarding forward proxy and reverse proxy, let’s give two more examples to explain.

Forward proxy

Suppose you want to borrow something from B, but B does not agree, so you ask A to borrow it from B and then pass it on to you. A here plays the role of a proxy, and specifically a forward proxy, because it is A who actually borrows from B.

If A does not mention that the thing is for you when borrowing from B, then A is an anonymous proxy, because B does not know you exist. If A tells B that it was actually you who sent him, then A is a transparent proxy: B knows A and also knows you.

The VPN we usually use is a forward proxy. When you try to access Google but are rejected, you can ask the VPN to access it for you. As far as Google is concerned, it is the VPN that sends requests to it, not you.
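The difference between an anonymous and a transparent forward proxy can be sketched as a difference in what the proxy tells the server. The example below assumes the widely used `X-Forwarded-For` header as the way a transparent proxy reveals the client. `forward_headers` is a hypothetical helper for illustration, and real proxies may use other headers or behave differently.

```python
def forward_headers(client_headers, client_ip, transparent):
    """Return the headers a forward proxy would send to the target server.

    transparent=True  -> reveal the client through X-Forwarded-For
    transparent=False -> anonymous: remove any trace of the client
    """
    upstream = {}
    previous = None
    for name, value in client_headers.items():
        if name.lower() == "x-forwarded-for":
            previous = value  # an earlier proxy already recorded a client
        else:
            upstream[name] = value
    if transparent:
        upstream["X-Forwarded-For"] = (
            f"{previous}, {client_ip}" if previous else client_ip
        )
    return upstream
```

In the borrowing analogy, `transparent=True` is A telling B who really wants the item, and `transparent=False` is A keeping quiet about it.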

Reverse proxy

A reverse proxy is also very simple. For example, when we access Baidu, there may be thousands of servers providing the service behind it, but we do not access them directly. We access the reverse proxy server instead.

The address that www.baidu.com resolves to is the proxy server, which forwards our request to a real server. Nginx is a very good reverse proxy server: it can balance the load across all the real servers behind it and forward each request to an appropriate one, which is the so-called load balancing.

Another example: Xiao Ming contacts a tutoring agency, hoping it can send someone to help with foreign language tutoring. The agency is a reverse proxy, and it forwards Xiao Ming's request to one of its tutors.

Therefore, both forward proxy and reverse proxy are proxies, and the core difference lies in whom they act for: the forward proxy acts for the client and is responsible for sending requests to the server; the reverse proxy acts for the server and is responsible for returning responses to the client.

Since the proxy inserts an intermediate layer into the transmission process, it can do many things, such as:

- Load balancing: evenly distribute access requests to multiple machines to achieve access clustering

- Content cache: temporarily stores upstream and downstream data to reduce the pressure on the backend

- Security protection: Hide IP addresses, use WAF and other tools to resist network attacks, and protect the machines behind the proxy

- Data processing: Provides additional functions such as compression and encryption
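As an illustration of the first item, load balancing, here is a minimal sketch of round-robin selection, one of the simplest ways to spread requests evenly. `RoundRobinProxy` and the backend addresses are hypothetical. A real reverse proxy such as Nginx offers this and other strategies through configuration rather than code like this.

```python
import itertools


class RoundRobinProxy:
    """Hands out backends in a fixed rotation so each gets an equal share."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(list(backends))

    def pick_backend(self):
        # Each call returns the next backend, wrapping around at the end.
        return next(self._cycle)


proxy = RoundRobinProxy(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
picks = [proxy.pick_backend() for _ in range(4)]
```

After four requests the rotation has wrapped around, so the fourth request goes to the same backend as the first.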

After understanding the above content, you can clearly understand what CDN is.

CDN stands for Content Delivery Network, also called a content distribution network. It applies the caching and proxy technology of the HTTP protocol to respond to client requests on behalf of the origin server. Therefore, a CDN is also a proxy, and it usually plays the role of both transparent proxy and reverse proxy.

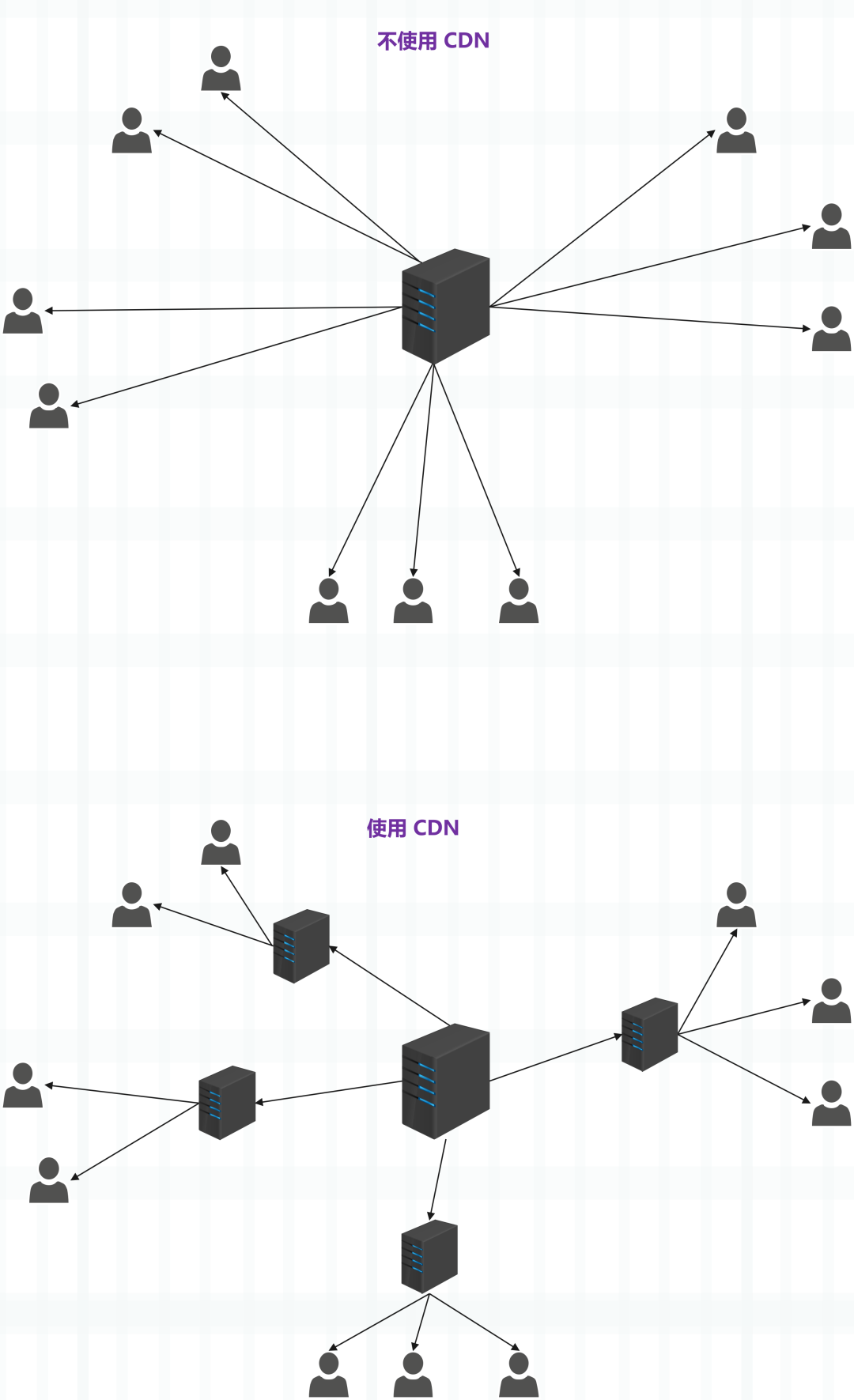

To put it bluntly, it can cache the data of the origin site, so that the browser's request does not have to travel all the way to the origin server, and the response can be obtained directly halfway. If the CDN's scheduling algorithm is excellent, it can find the node closest to the user and greatly shorten the response time.

In today's era of information explosion, users are less and less patient. There is a saying that if a page does not respond within 4 seconds of being opened, the user will close it. Therefore, every service provider wants its service to respond fast enough to retain users.

The time it takes for a user to make a request and receive a response depends not only on the network bandwidth, but also on the transmission distance. For example, if the server is in Guangdong but the user is in Beijing, the geographical distance causes noticeable latency, and the long transmission path also increases the chance of packet loss, which can interrupt the connection.

So CDN was born. It is an application service specifically responsible for solving the problem of slow network access caused by long distances. Its original core concept is to cache content near the end user. Isn't the origin site far away from the user? It doesn't matter. Just build a cache server close to the user and put a copy of the content from the origin site here.

Subsequently, users in Beijing access the cache server in Beijing, and users in Shanghai access the cache server in Shanghai. Yes, this is the core idea of CDN, but setting up a cache server requires a lot of money. Many companies generally do not do this themselves, but purchase existing CDN services.

Many CDN vendors have invested large sums of money, established data centers in major hub cities across the country and even around the world, deployed a large number of nodes with high storage and bandwidth, and built a dedicated network. This network is cross-operator and cross-regional. Although it is divided into multiple small networks internally, they are connected by high-speed dedicated lines. It is a true information highway on which there is basically no network congestion.

With a high-speed network transmission channel, CDN will distribute the content of the origin site. It will use caching proxy technology and use push or pull methods to cache the content of the origin site to each node on the network level by level. Since the whole process is equivalent to distributing content through the network, it is called CDN, which is content distribution network.

Specifically, a CDN deploys many cache servers (also called CDN nodes). When a user accesses a website, global load balancing directs the user's request to the nearest CDN node (called an edge node), and that cache server responds to the request. This saves the time cost of traveling long distances and achieves network acceleration.

So what kind of content can CDN accelerate? In other words, what content should the CDN node store?

In the CDN field, content is actually resources in the HTTP protocol, such as hypertext, pictures, videos, etc. Resources are divided into two categories: static resources and dynamic resources according to whether they can be cached.

- Static resources: The data content is static and remains the same whenever you access it, such as pictures and audio.

- Dynamic resources: The data content changes dynamically, that is, the content is calculated and generated by the background service, and may change every time you visit, such as the inventory of goods, the number of fans on Weibo, etc.

Obviously, only static resources can be accelerated by caching and served from a nearby node, while dynamic resources can only be generated by the origin site in real time, so caching them is meaningless. However, if a dynamic resource does not change within a period of time, you can specify the Cache-Control field in the response header to allow caching for a short period. During that period it effectively becomes a static resource and can be accelerated by CDN caching.
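The role of Cache-Control can be sketched as a freshness check that a cache node might run before serving a stored copy. This is a simplified illustration only. `max_age_seconds` and `is_fresh` are hypothetical helpers, and the sketch looks only at `max-age`, `no-store`, `no-cache` and `private`, ignoring other directives such as `s-maxage` and revalidation.

```python
def max_age_seconds(cache_control):
    """Extract max-age from a Cache-Control header value, or None if absent."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_fresh(cache_control, age_seconds):
    """True if a shared cache may serve a copy that is age_seconds old."""
    directives = {d.strip().lower() for d in cache_control.split(",")}
    # Simplification: treat these as "do not serve from a shared cache".
    # (no-cache really means "revalidate first", which this sketch skips.)
    if directives & {"no-store", "no-cache", "private"}:
        return False
    max_age = max_age_seconds(cache_control)
    return max_age is not None and age_seconds < max_age
```

For example, a product inventory response sent with `Cache-Control: public, max-age=60` would be served from the node for up to a minute, after which the node has to go back to the origin.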

CDN: We do not produce content, we only serve as a porter of content.

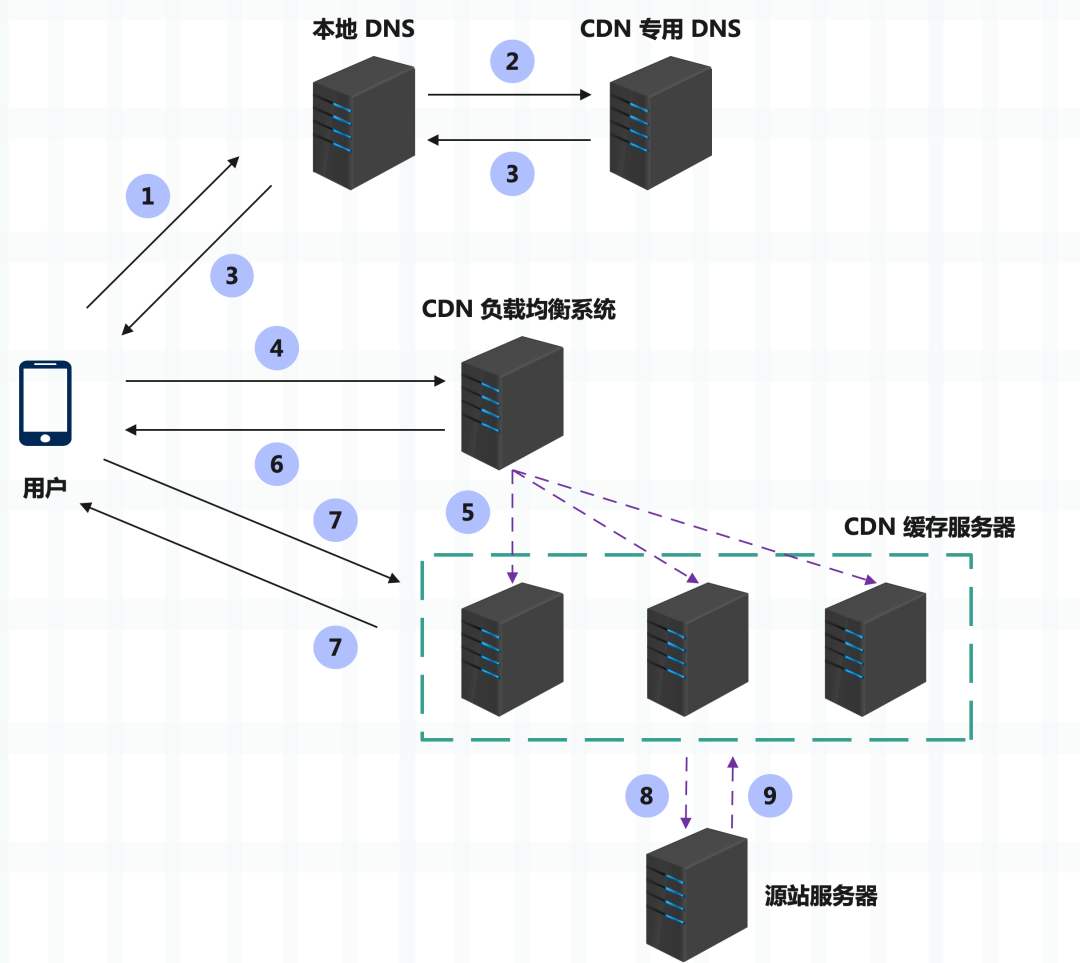

So far, we know what a CDN is, and let's take a look at how it works. A CDN has two key components: global load balancing and a caching system.

Global load balancing

Global Server Load Balancing, generally referred to as GSLB, is the brain of the CDN. Its main responsibility is to select the best node in the CDN's private network to serve each user request. The problem it solves is how to find the nearest node, that is, the edge node, and in doing so it load-balances the entire CDN network.

The most common way to implement GSLB is DNS load balancing, although GSLB does it in a slightly more complicated way than ordinary DNS resolution.

First of all, when there is no CDN, the authoritative DNS returns the actual IP address of the origin server, and the browser can connect directly after receiving the DNS resolution result. Once a CDN is introduced, things are different. The authoritative DNS no longer returns an IP address, but a CNAME (Canonical Name) alias record that points to the CDN's GSLB.
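The extra lookup step introduced by the CNAME record can be sketched with a small in-memory table instead of real DNS queries. All domain names and addresses below are made up for illustration, and `resolve` is a hypothetical helper, not a real resolver API.

```python
# Hypothetical records: every name and address here is invented.
RECORDS = {
    "www.example.com": ("CNAME", "www.example.com.cdn-gslb.example.net"),
    "www.example.com.cdn-gslb.example.net": ("A", "198.51.100.20"),
}


def resolve(name, records, max_hops=8):
    """Follow CNAME records until an A record is found; return (chain, ip)."""
    chain = [name]
    for _ in range(max_hops):
        record_type, value = records[name]
        if record_type == "A":
            return chain, value
        name = value  # CNAME: the alias has to be looked up again
        chain.append(name)
    raise RuntimeError("CNAME chain too long")


chain, ip = resolve("www.example.com", RECORDS)
```

Here `chain` records that the lookup passed through the alias owned by the CDN before any IP address was returned. In a real CDN the final answer is not a fixed table entry but is computed per request by the GSLB.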

In effect, the authoritative DNS is saying: I cannot give you the IP of the origin server; what I am giving you is the GSLB, and you need to ask it again. Because no IP address has been obtained yet, the local DNS sends another request to the GSLB, thus entering the CDN's global load balancing system, which starts intelligent scheduling based on the following principles:

- Look at the user's IP address, check the table to find its geographical location, and find the relatively nearest edge node. For example, if the IP is in Beijing, find an edge node in Beijing;

- Look at the operator's network the user is on and find edge nodes on the same network. Because there is more than one edge node, it is better to choose one on the same network;

- Check the load of edge nodes and find nodes with lighter loads;

- Consider node health status, service capabilities, bandwidth, response time, etc.

GSLB combines these factors, uses a complex algorithm, and finally finds the most suitable edge node, and returns the IP address of this node to the user, so that the user can access the CDN's caching proxy nearby.
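The way these factors combine can be sketched as a scoring function over candidate nodes. This is only an illustration under simple assumptions: the `EdgeNode` fields, the weights and the node names are all invented, and real GSLB algorithms are proprietary and far more elaborate.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    name: str
    region: str
    carrier: str
    load: float    # 0.0 (idle) to 1.0 (saturated)
    healthy: bool


def pick_edge_node(nodes, user_region, user_carrier):
    """Return the healthy node with the highest score (weights are illustrative)."""
    def score(node):
        s = 0.0
        if node.region == user_region:
            s += 2.0   # geographic proximity counts most in this sketch
        if node.carrier == user_carrier:
            s += 1.0   # same operator network
        s += 1.0 - node.load   # lighter load scores higher
        return s

    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise LookupError("no healthy edge node available")
    return max(candidates, key=score)


nodes = [
    EdgeNode("bj-1", "Beijing", "telecom", 0.9, True),
    EdgeNode("bj-2", "Beijing", "unicom", 0.2, True),
    EdgeNode("sh-1", "Shanghai", "telecom", 0.1, True),
    EdgeNode("bj-3", "Beijing", "telecom", 0.0, False),
]
chosen = pick_edge_node(nodes, "Beijing", "telecom")
```

With these weights, a Beijing user on the telecom network gets `bj-1` despite its heavy load, because region and carrier outweigh load, and the idle but unhealthy `bj-3` is never considered. Changing the weights changes the trade-off.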

Caching system

The caching system is another key component of CDN, which is equivalent to the heart of CDN. If the service capability of the cache system is insufficient and cannot meet user needs well, then it will be useless no matter how excellent the GSLB scheduling algorithm is.

However, the resources on the Internet are endless. No matter how powerful the CDN manufacturer is, it is impossible to cache all resources. Therefore, the caching system can only selectively cache the most commonly used resources, which leads to the emergence of two key concepts in CDN: hit and return to origin.

A hit means that the resource accessed by the user happens to be in the cache system and can be returned directly to the user. Returning to the origin is just the opposite. There is no data in the cache. The proxy must first synchronize the data from the origin site.
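Hit and return to origin can be sketched with a small cache of limited capacity. The sketch assumes least-recently-used (LRU) eviction as the way to keep the most commonly used resources. The article does not say which policy real CDNs use, and `EdgeCache` and `fetch_from_origin` are hypothetical names.

```python
from collections import OrderedDict


class EdgeCache:
    """A capacity-limited cache that counts hits and returns to origin."""

    def __init__(self, capacity, fetch_from_origin):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin  # callable(url) -> content
        self.store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, url):
        if url in self.store:
            self.hits += 1
            self.store.move_to_end(url)  # mark as most recently used
            return self.store[url]
        # Miss: return to origin, then keep a copy for later requests.
        self.misses += 1
        content = self.fetch_from_origin(url)
        self.store[url] = content
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used
        return content
```

Because the capacity is limited, a resource that was cached earlier can be evicted and cause another return to origin later, which is why storage capacity matters for the hit rate.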

Correspondingly, the two indicators for measuring CDN service quality are the hit rate and the return-to-origin rate. Each is calculated by dividing the number of hits, or the number of returns to origin, by the total number of visits. Obviously, for a good CDN, the higher the hit rate and the lower the return-to-origin rate, the better. Today's commercial CDN hit rates are above 90%, which is equivalent to amplifying the service capabilities of the origin site by more than 10 times.
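The arithmetic behind the "10 times" figure can be checked with a short calculation. It assumes every visit is either a hit or a return to origin, so that the origin only sees the misses. `cdn_rates` is a hypothetical helper name.

```python
def cdn_rates(hits, back_to_origin):
    """Return (hit_rate, origin_rate, amplification) from raw counts."""
    total = hits + back_to_origin
    if total == 0:
        raise ValueError("no requests recorded")
    hit_rate = hits / total
    origin_rate = back_to_origin / total
    # The origin handles only the misses, so it effectively serves
    # total / back_to_origin user requests per request it actually receives.
    amplification = total / back_to_origin if back_to_origin else float("inf")
    return hit_rate, origin_rate, amplification


hit_rate, origin_rate, amplification = cdn_rates(hits=90, back_to_origin=10)
```

With 90 hits out of 100 visits, the origin handles only 10 requests, so its capacity is effectively multiplied by 10. A hit rate above 90% pushes the factor above 10.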

So how can we improve the hit rate and reduce the return-to-origin rate as much as possible?

First of all, we must work hard on the storage system to store as much content as possible.

Secondly, the cache can be divided into levels: a first-level cache and a second-level cache. The first-level cache has a higher configuration and is directly connected to the origin site. The second-level cache has a lower configuration and is directly connected to the user. When returning to the origin, the second-level cache asks only the first-level cache, and only when the first-level cache does not have the content either does the request go to the origin site. In this way, the fan-in at the origin is reduced, which effectively reduces real returns to origin.
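The effect of the two levels on fan-in can be sketched as follows. The caches here are unbounded dictionaries to keep the example short, and `CacheTier`, `build_two_tier` and the node names are hypothetical.

```python
class CacheTier:
    """A cache level that asks its upstream only when it lacks the content."""

    def __init__(self, name, upstream):
        self.name = name
        self.upstream = upstream  # callable(url) -> content
        self.store = {}
        self.upstream_fetches = 0

    def get(self, url):
        if url not in self.store:
            self.upstream_fetches += 1
            self.store[url] = self.upstream(url)
        return self.store[url]


def build_two_tier(origin, second_level_names):
    """One first-level cache in front of the origin, many second-level caches."""
    first_level = CacheTier("first-level", origin)
    second_level = {
        name: CacheTier(name, first_level.get) for name in second_level_names
    }
    return first_level, second_level
```

If three second-level nodes each receive a first request for the same resource, each of them misses once, but the first-level cache contacts the origin only once. Without the first level, the origin would have been contacted three times.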

Although CDN has many advantages, it is not a panacea.

If it is real-time data of dynamic user interaction, it is difficult to cache it in a CDN.

In addition, to protect their data privacy, many companies do not allow third-party CDN vendors to cache their data and only allow their own CDN to do so, which limits how much a commercial CDN can help them.

And the most critical thing is that if you build your own CDN, it will be very expensive. Therefore, most companies will not build it themselves, but choose specialized CDN vendors. But even renting a CDN service costs a lot of money. The more regions you have, the more money you will spend.

CDN and edge computing

Internet companies use CDN to trade storage space for low network latency, but many communications companies also favor CDN, with the purpose of trading storage space for network bandwidth. By pushing services down toward the edge of the network (service sinking), the traffic pressure on the upper backbone network is reduced, hardware expansion is avoided, and network construction costs are lowered. If a large amount of business traffic keeps crossing the backbone network, the backbone will be overwhelmed and will have to keep expanding its capacity. But if this traffic is handled at the lower layers, the bandwidth pressure on the backbone network is naturally alleviated.

Many operators have moved their CDNs to prefectural and municipal levels to reduce pressure and improve user experience. Speaking of this, you should think of edge computing. Many people think that CDN and edge computing are very similar, because CDN is the prototype of edge computing.

All along, with the continuous improvement of network capabilities, content resources and computing capabilities are constantly moving up to the cloud computing center. Through the core cloud computing center, services are provided to all terminal nodes. However, as the number of users increases, the user's area and the computing center may be far away. So no matter where the computing center is set up, or how powerful its capabilities are, it cannot overcome the physical distance obstacle.

So people thought, can the data not be uploaded to the computing center, but transferred to the edge of the network, where the data is input (such as IoT devices), and then calculated directly? So there is edge computing, which does not send data to a computing center for processing, but processes the data near the data source.

So there are still differences between CDN and edge computing:

- CDN is responsible for optimizing data delivery; edge computing is responsible for optimizing data processing

- CDN is usually used for static content that users interact with, such as web pages, images, and videos; edge computing is used for applications that require fast data processing, such as real-time data analysis.

- CDN will place content close to users; edge computing will place computing and data processing as close as possible to where the data is generated.

But the two can complement each other. For example, an edge computing device can use a CDN to deliver content efficiently while processing data at the edge of the network.

In addition, a CDN can also use edge computing. By placing the code and data for computing dynamic resources on CDN nodes, dynamic resources can be produced within the CDN without going back to the origin.