Uncovering the secrets of gRPC: Unlocking lightning-fast communication capabilities

RPC — remote procedure call

Wikipedia defines a remote procedure call (RPC) in distributed computing as a computer program causing a procedure (subroutine) to execute in a different address space, usually on another computer on a shared network, as if it were a normal (local) procedure call, without the programmer explicitly coding the details of the remote interaction.

Simply put, it's a way for one computer program to ask another program to perform a certain task, even if they are on different computers. It's a bit like calling a function in your program, although it's executing on a different machine. It's a procedure call that behaves as if it's on the same machine but actually isn't, and the RPC library/framework is responsible for abstracting all this complexity.

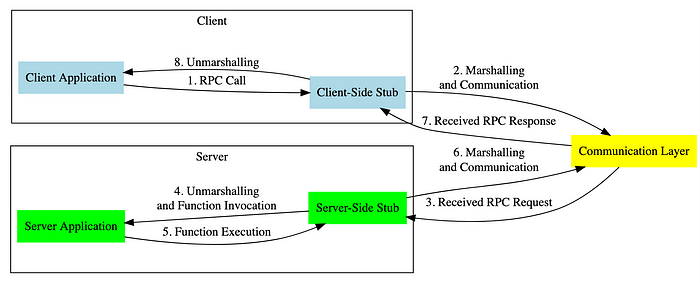

RPC process

The RPC framework hides the underlying transport (TCP or UDP), the serialization format (XML, JSON, or binary), and the communication details. Service callers can invoke a remote service provider just as they would call a local interface, without having to handle the details and mechanics of the call themselves.
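To make the idea concrete, here is a minimal sketch of what an RPC framework does behind the scenes. It is not how any particular library is implemented: the `CalculatorService`, `Stub`, and `handle_request` names are made up for illustration, JSON stands in for the serialization format, and a plain function call stands in for the network.

```python
import json

# Hypothetical service implementation living on the "remote" side.
class CalculatorService:
    def add(self, a, b):
        return a + b

def handle_request(service, payload: bytes) -> bytes:
    """Server side: decode the request, run the method, encode the reply."""
    request = json.loads(payload.decode("utf-8"))
    method = getattr(service, request["method"])
    result = method(*request["args"])
    return json.dumps({"result": result}).encode("utf-8")

class Stub:
    """Client side: turns a method call into a serialized request."""
    def __init__(self, transport):
        # transport is any callable that takes request bytes and returns reply bytes;
        # in a real framework this would be a TCP or HTTP connection.
        self._transport = transport

    def __getattr__(self, name):
        def call(*args):
            payload = json.dumps({"method": name, "args": args}).encode("utf-8")
            reply = self._transport(payload)
            return json.loads(reply.decode("utf-8"))["result"]
        return call

service = CalculatorService()
stub = Stub(lambda payload: handle_request(service, payload))
print(stub.add(2, 3))  # prints 5
```

The caller only ever sees `stub.add(2, 3)`; everything between the call and the result is the part the framework abstracts away.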

REST

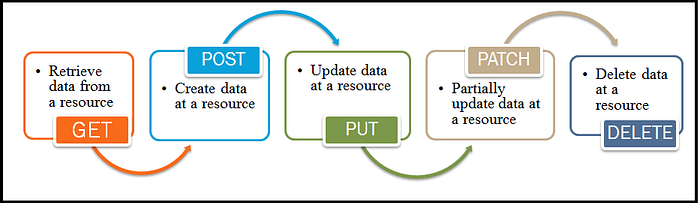

REST stands for Representational State Transfer and is a mature architectural style for designing web applications. RESTful APIs perform CRUD (Create, Read, Update, Delete) operations on resources, which are usually identified by URLs, using HTTP requests. REST APIs are known for their simplicity and their use of standard HTTP methods such as GET, POST, PUT, and DELETE.

HTTP

HTTP (Hypertext Transfer Protocol) is the basis for data communication on the World Wide Web. It defines how messages are formatted and transmitted, and how web servers and browsers respond to various commands. HTTP has evolved over time, with different versions offering various features and improvements:

- HTTP/1.0: This early version of HTTP was very simple and lacked many modern features. In HTTP/1.0, each request requires a new TCP connection by default, which is inefficient.

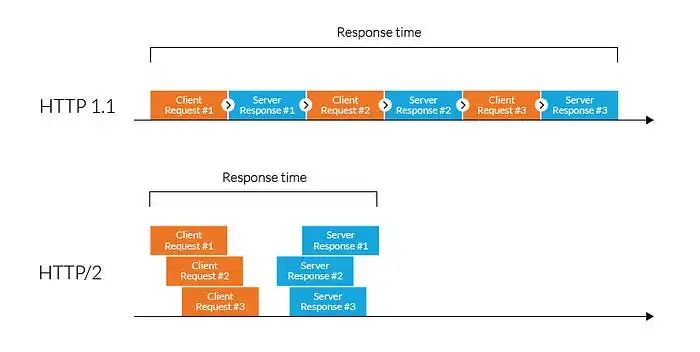

- HTTP/1.1: HTTP/1.1 improves upon HTTP/1.0 by making persistent (keep-alive) connections the default, allowing multiple requests and responses to be sent over a single TCP connection and thereby reducing latency. However, it still has some limitations, such as the "head-of-line blocking" problem, where one slow request can block subsequent requests on the same connection, causing a "waterfall" effect.

- HTTP/2: HTTP/2 introduces a binary framing mechanism that allows multiplexing, request prioritization, and header compression. These enhancements significantly improve the efficiency and speed of network communications. It removes head-of-line blocking at the HTTP level by allowing multiple concurrent streams within a single connection, although a lost packet can still stall every stream at the TCP level.

- HTTP/3: The latest version, HTTP/3, further improves performance by using the QUIC transport protocol, which runs over UDP instead of TCP. It focuses on reducing latency, especially on unreliable networks or in the presence of high packet loss. HTTP/3 is designed to be more flexible and efficient than its predecessors.
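The difference between the blocking behaviour of HTTP/1.1 and the multiplexing of HTTP/2 can be illustrated with a toy model. This is only a sketch under simplifying assumptions: each response is a made-up number of equally sized frames, one frame is sent per time unit, and streams are served in simple round-robin order (real HTTP/2 scheduling also depends on priorities and flow control).

```python
from collections import deque

def sequential(responses):
    """One response at a time: each must finish before the next one starts."""
    clock, done = 0, {}
    for name, frames in responses:
        clock += frames
        done[name] = clock
    return done

def multiplexed(responses):
    """Round-robin, one frame per stream, interleaved on a single connection."""
    queue = deque(responses)
    clock, done = 0, {}
    while queue:
        name, frames = queue.popleft()
        clock += 1  # send one frame of this stream
        if frames == 1:
            done[name] = clock  # that was the stream's last frame
        else:
            queue.append((name, frames - 1))
    return done

# Hypothetical workload: (response name, number of frames it needs).
responses = [("slow", 6), ("fast-a", 1), ("fast-b", 2)]
print(sequential(responses))   # {'slow': 6, 'fast-a': 7, 'fast-b': 9}
print(multiplexed(responses))  # {'fast-a': 2, 'fast-b': 5, 'slow': 9}
```

In the sequential case the two small responses wait behind the slow one; with interleaved frames they complete early, while the total transfer time stays the same.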

Now that we understand the terms, let's start!

What is gRPC?

gRPC (what does the "g" stand for here?) is an inter-process communication technology that allows you to connect, call, manipulate and debug distributed heterogeneous applications as easily as making a local function call.

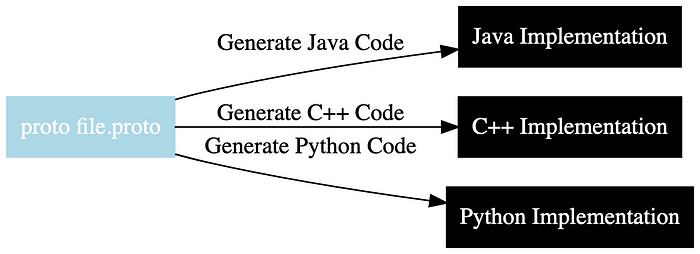

When you develop a gRPC application, the first thing to do is to define a service interface. The service interface definition contains information about how to consume the service, which remote methods the consumer is allowed to call, what method parameters and message formats to use when calling these methods, and so on. The language we specify in the service definition is called the Interface Definition Language (IDL).

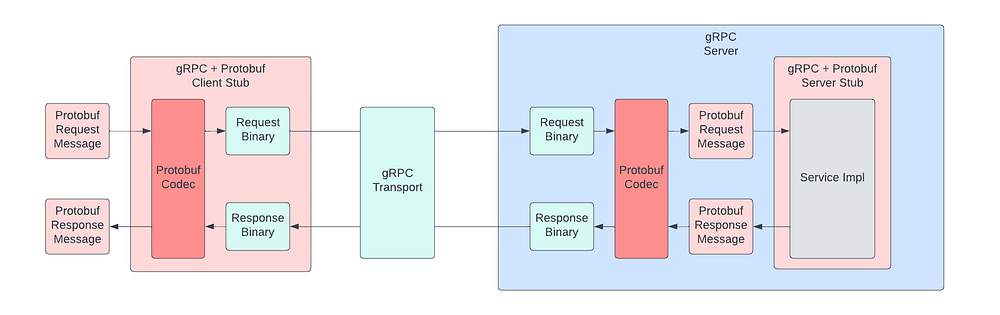

gRPC uses protocol buffers as the IDL that defines the service interface. Protocol buffers are a language-agnostic, platform-neutral, extensible mechanism for serializing structured data.

gRPC architecture

What gives gRPC its lightning-fast performance? Here's what's going on inside:

HTTP/2: Published in 2015 as the successor to HTTP/1.1, HTTP/2 provides multiplexing, allowing multiple requests and responses to share a single connection and improving efficiency.

- Request/response multiplexing: Thanks to HTTP/2's binary framing, gRPC can carry many concurrent requests and responses over a single connection instead of opening a new connection for each call.

- Header compression: HTTP/2's HPACK scheme compresses headers, reducing per-request overhead. Coupled with gRPC's efficient encoding, this makes for excellent performance.
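To see how binary framing makes multiplexing possible, the following sketch packs and parses the 9-byte HTTP/2 frame header (24-bit payload length, 8-bit type, 8-bit flags, and a 31-bit stream identifier). It is an illustration only, not a usable HTTP/2 implementation: the helper names `pack_frame` and `unpack_frames` are invented here, and settings, flow control, and HPACK are all left out.

```python
import struct

DATA = 0x0        # frame type code for DATA frames
END_STREAM = 0x1  # flag marking the last frame of a stream

def pack_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Prefix the payload with a 9-byte frame header."""
    length = len(payload)
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,  # 24-bit length split into 8 + 16 bits
        frame_type,
        flags,
        stream_id & 0x7FFFFFFF,         # top bit is reserved
    )
    return header + payload

def unpack_frames(buffer: bytes):
    """Yield (type, flags, stream_id, payload) for each frame in the buffer."""
    offset = 0
    while offset < len(buffer):
        hi, lo, frame_type, flags, stream_id = struct.unpack_from(">BHBBI", buffer, offset)
        length = (hi << 16) | lo
        start = offset + 9
        yield frame_type, flags, stream_id & 0x7FFFFFFF, buffer[start:start + length]
        offset = start + length

# Frames from two streams interleaved on one connection.
wire = (
    pack_frame(DATA, 0, 1, b"hello ")
    + pack_frame(DATA, END_STREAM, 3, b"ping")
    + pack_frame(DATA, END_STREAM, 1, b"world")
)

# The receiver reassembles each stream using the stream identifier.
streams = {}
for frame_type, flags, stream_id, payload in unpack_frames(wire):
    streams[stream_id] = streams.get(stream_id, b"") + payload
print(streams)  # {1: b'hello world', 3: b'ping'}
```

Because every frame carries its stream identifier, frames belonging to different calls can be mixed freely on the wire and still be sorted out on arrival.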

Protobuf: the secret weapon

One of the key factors in gRPC's efficiency is protocol buffers, or Protobuf for short. Protobuf defines the data structures and the function contracts. Both client and server must use the same Protobuf definitions; this is how they understand each other. Protobuf plays three main roles within the gRPC framework: defining data structures, specifying service interfaces, and improving transmission efficiency through compact serialization and deserialization.
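To get a feel for why the binary encoding is compact, here is a small hand-rolled sketch of how Protobuf encodes a single integer field on the wire (a tag followed by a varint). In practice you would use the generated code from the Protobuf compiler instead; the function names and the `id` field below are made up for illustration, and the sketch handles non-negative integers only.

```python
import json

def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer 7 bits at a time, low bits first."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(low7)
            return bytes(out)

def encode_int_field(field_number: int, value: int) -> bytes:
    """Encode one integer field as tag + value (wire type 0 means varint)."""
    tag = (field_number << 3) | 0
    return encode_varint(tag) + encode_varint(value)

binary = encode_int_field(1, 150)               # field number 1, value 150
text = json.dumps({"id": 150}).encode("utf-8")  # the same data as JSON

print(binary.hex(), len(binary))  # 089601 3
print(text, len(text))            # b'{"id": 150}' 11
```

The field name never travels over the wire, only its number, which is part of why both sides need the same Protobuf definition to interpret the bytes.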

What are the advantages of gRPC?

Besides having a very cute mascot, gRPC offers several distinctive advantages:

- Efficiency of inter-process communication: Instead of text formats such as JSON or XML, gRPC communicates using a binary protocol based on protocol buffers, which improves speed. It is built on HTTP/2, making it one of the most efficient inter-process communication technologies.

- Well-defined service interfaces and patterns: gRPC encourages a contract-first approach, in which you define the service interface first and work out the implementation details afterwards. This makes for a simple, consistent, reliable, and scalable application development experience.

- Strong typing and multi-language support: gRPC uses protocol buffers to define services, clearly specifying the data types communicated between applications. This helps improve stability and reduce runtime and interoperability errors. Additionally, gRPC can be seamlessly integrated with a variety of programming languages, providing developers with the flexibility to choose their preferred language.

- Bidirectional streaming and built-in features: gRPC natively supports client-side, server-side, and bidirectional streaming, simplifying the development of streaming services and clients. It also has built-in support for key features such as authentication, encryption, resiliency (including deadlines and timeouts), metadata exchange, compression, load balancing, and service discovery.

- Cloud Native Integration and Maturity: As a Cloud Native Computing Foundation (CNCF) project, gRPC integrates well with modern frameworks and technologies, making it a popular choice for service-to-service communication. Projects in CNCF, such as Envoy, support gRPC, and many monitoring tools, including Prometheus, work effectively with gRPC applications. Additionally, gRPC has been extensively tested at Google and is widely adopted.
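The streaming modes mentioned in the list above can be pictured with plain Python functions and generators. This is only an analogy that runs without the gRPC library: the function names are hypothetical, integers stand in for Protobuf messages, and real gRPC handlers in Python also receive a context argument that is omitted here.

```python
from typing import Iterable, Iterator

def unary(request: int) -> int:
    """One request in, one response out."""
    return request * 2

def server_streaming(request: int) -> Iterator[int]:
    """One request in, a stream of responses out."""
    for i in range(request):
        yield i

def client_streaming(requests: Iterable[int]) -> int:
    """A stream of requests in, one response out."""
    return sum(requests)

def bidirectional(requests: Iterable[int]) -> Iterator[int]:
    """Responses are produced while requests are still arriving."""
    for request in requests:
        yield request * 2

print(unary(21))                         # 42
print(list(server_streaming(3)))         # [0, 1, 2]
print(client_streaming(iter([1, 2, 3]))) # 6
print(list(bidirectional(iter([1, 2])))) # [2, 4]
```

Since generators are lazy, the bidirectional version emits each response as soon as its request has been read, which mirrors how both sides of a bidirectional stream can send messages independently.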