Using DDC to build AI networks? It may be just a beautiful illusion

ChatGPT, AIGC, large models... A string of dazzling terms has emerged, and the commercial value of AI has drawn wide public attention. As training models grow in scale, the data center network that supports AI computing power has also become a hot topic. Improving compute efficiency, building high-performance networks... Major vendors are each showing what they can do, working hard to open a new "F1 track" for AI networking on the broad map of the Ethernet industry.

In this AI arms race, DDC has made a high-profile appearance and, seemingly overnight, has become synonymous with revolutionary technology for building high-performance AI networks. But is it really as good as it looks? Let us analyze it in detail and judge calmly.

DDC dates from 2019; in essence, it replaces chassis routers with box routers

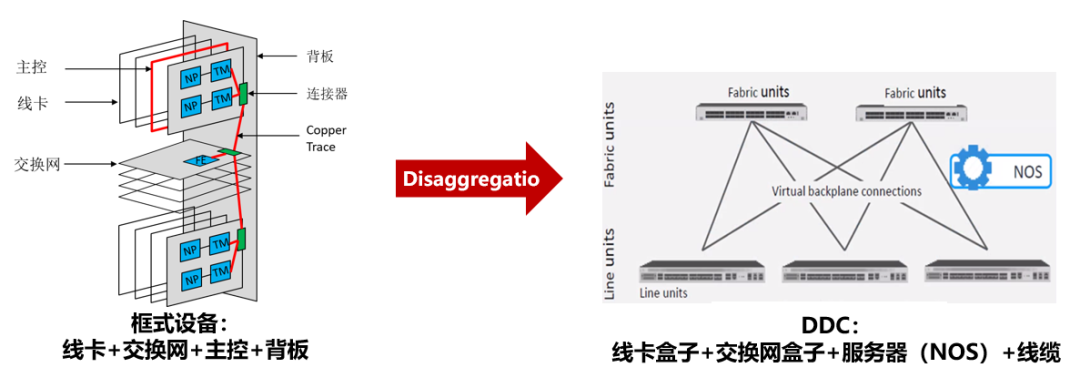

With the rapid growth of DCN (data center network) traffic, the need to upgrade DCI (data center interconnect) networks has become increasingly urgent. However, the expansion capability of a DCI chassis router is limited by the size of the chassis. The equipment also consumes a lot of power, so expanding a chassis places high demands on cabinet power and heat dissipation, and the retrofit cost is high. Against this background, in 2019 AT&T submitted a specification for box routers based on merchant silicon to the OCP and proposed the concept of DDC (Distributed Disaggregated Chassis). Put simply, DDC uses a cluster of several low-power box devices to replace the hardware units of a chassis (frame-type) device, such as service line cards and fabric boards, and the box devices are interconnected through cables. The entire cluster is managed by a centralized or distributed NOS (Network Operating System), with the aim of breaking through the performance and power bottlenecks of a single DCI chassis.

Advantages claimed by DDC include:

Break through the expansion limit of chassis equipment: expansion is achieved through multi-device clusters and is not limited by chassis size;

Reduce single-point power consumption: multiple low-power box devices are deployed in a distributed manner, which avoids concentrated power draw and lowers the power and heat dissipation requirements of the cabinet;

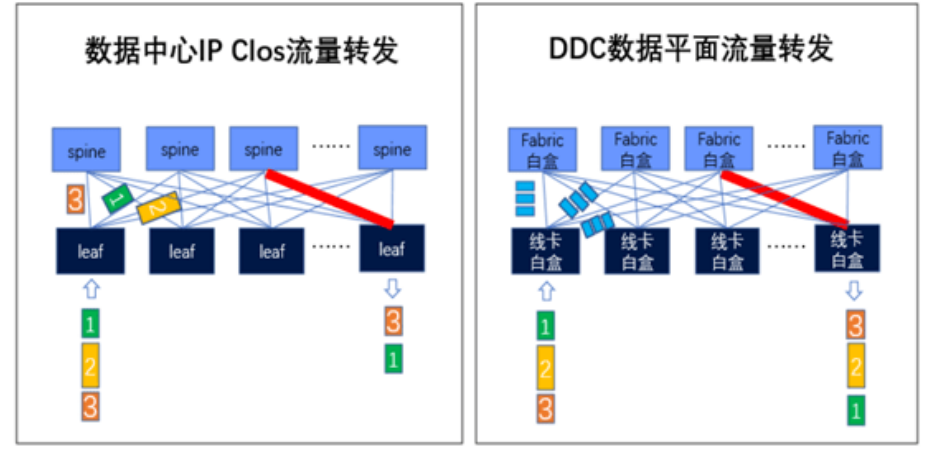

Improve bandwidth utilization: in contrast to the hash-based forwarding of a traditional Ethernet network, DDC uses cell switching and balances load per cell, which helps improve bandwidth utilization;

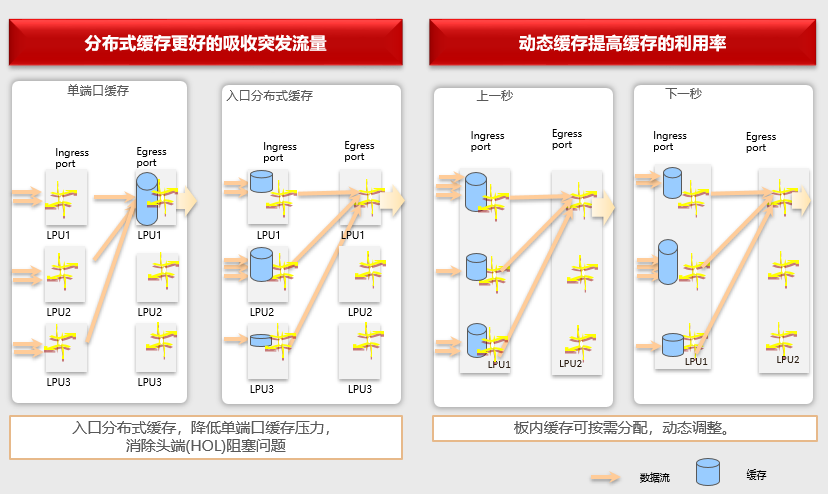

Alleviate packet loss: the device's large buffers are used to meet the high convergence-ratio requirements of DCI scenarios. First, VOQ (Virtual Output Queue) technology assigns packets received from the network to different virtual output queues. A credit mechanism then confirms that the receiving end has enough buffer space before these packets are sent, thereby reducing packet loss caused by egress congestion.
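
The VOQ-plus-credit behaviour described above can be illustrated with a minimal Python sketch. The class names (`EgressPort`, `IngressDevice`), the slot-based buffer model, and the rule of one credit per packet are all assumptions made for illustration; real DDC silicon implements this in hardware with its own credit units and schedulers.

```python
from collections import deque


class EgressPort:
    """Egress side (hypothetical model): finite buffer, one credit per free slot."""

    def __init__(self, buffer_slots):
        self.free_slots = buffer_slots

    def grant_credit(self):
        # Grant a credit only when a buffer slot is actually free.
        if self.free_slots > 0:
            self.free_slots -= 1
            return True
        return False

    def drain(self, n=1):
        # Packets leave the egress port, freeing buffer slots.
        self.free_slots += n


class IngressDevice:
    """Ingress side: one virtual output queue (VOQ) per egress port."""

    def __init__(self, egress_ports):
        self.egress_ports = egress_ports
        self.voqs = {name: deque() for name in egress_ports}

    def receive(self, packet, egress_name):
        # Packets wait at the ingress instead of being pushed blindly.
        self.voqs[egress_name].append(packet)

    def schedule(self):
        """Send queued packets only while the target egress grants credits."""
        sent = []
        for name, queue in self.voqs.items():
            while queue and self.egress_ports[name].grant_credit():
                sent.append((name, queue.popleft()))
        return sent


ports = {"A": EgressPort(buffer_slots=2), "B": EgressPort(buffer_slots=1)}
ingress = IngressDevice(ports)
for i in range(4):
    ingress.receive(f"pkt{i}", "A")   # four packets contend for egress A
ingress.receive("pkt4", "B")

first_round = ingress.schedule()      # A has only two free slots
ports["A"].drain(1)                   # one slot frees up on A
second_round = ingress.schedule()     # exactly one more packet may go to A
```

In this model the packets that exceed egress A's buffer are held in the ingress VOQ rather than dropped at the egress, and the queue for A does not block the packet destined for B.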

In DCI scenarios, the DDC solution was only a flash in the pan

The idea looks perfect, but putting it into practice has not been smooth sailing. DriveNets' Network Cloud is the industry's first and only commercial DDC solution, and its full software suite runs on generic white-box routers. So far, however, no clear sales case has been seen in the market. AT&T, the proposer of the DDC architecture, began a limited (grayscale) rollout of DDC on its self-built IP backbone in 2020, but little has been heard since. Why did this splash make so few waves? The reason lies in four major defects of DDC.

Defect 1: Unreliable equipment control plane

The components of a chassis device interconnect their control and management planes over a PCIe bus, with highly integrated and highly reliable hardware, and the device uses dual main control boards to keep its management and control plane highly reliable. DDC instead interconnects devices with fragile, "replace when broken" modules and cables to build a multi-device cluster and carry the cluster's management and control plane. Although this breaks through the scale limit of a chassis, such an unreliable interconnect brings great risk to the management and control plane. Even when just two devices are stacked, failures can cause problems such as split brain and out-of-sync table entries. For DDC, with its unreliable management and control plane, such problems are even more likely.

Defect 2: Highly complex device NOS

The SONiC community has already designed a distributed forwarding chassis based on the VOQ architecture and is iteratively extending and revising it to support DDC. Although white-box devices have indeed been deployed in many cases, few have taken on a "white chassis". Building a disaggregated "white chassis" requires not only managing and synchronizing the state and table entries of multiple devices in the cluster, but also systematically handling practical scenarios such as version upgrades, rollbacks, and hot patches. DDC raises the complexity of the cluster's NOS dramatically. At present there are no mature commercial cases in the industry, and the development risk is high.

Defect 3: Lack of operations and maintenance tooling

Networks are inherently unreliable, so Ethernet networks have developed many features and tools for maintenance and fault location, such as the familiar INT (In-band Network Telemetry) and MOD (Mirror on Drop). These tools can monitor specific flows, identify the characteristics of flows that lose packets, and help locate and troubleshoot faults. However, a DDC cell is only a slice of a packet and carries no five-tuple information such as the IP addresses, so it cannot be associated with a specific service flow. Once packet loss occurs inside a DDC, current operations and maintenance methods cannot locate where the loss happened, and maintenance tooling is seriously lacking.
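
To make the visibility gap concrete, here is a small Python sketch of packet slicing. The `Cell` header layout (`src_device`, `dst_device`, `seq`) and the 64-byte cell size are hypothetical, chosen only for illustration and not taken from any vendor's fabric format. The sketch also assumes that fabric elements forward on the cell header alone and never parse the data carried inside a cell.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Packet:
    # (src_ip, dst_ip, protocol, src_port, dst_port), known at the ingress.
    five_tuple: tuple
    payload: bytes


@dataclass(frozen=True)
class Cell:
    # Hypothetical fabric header: device-level addressing only.
    src_device: int
    dst_device: int
    seq: int
    chunk: bytes


def slice_into_cells(packet, src_device, dst_device, cell_size=64):
    """Cut a packet's bytes into fixed-size cells (the last one may be shorter)."""
    data = packet.payload
    return [
        Cell(src_device, dst_device, offset // cell_size, data[offset:offset + cell_size])
        for offset in range(0, len(data), cell_size)
    ]


def describe_drop(cell):
    """Everything a fabric element could report about a dropped cell."""
    return {"src_device": cell.src_device, "dst_device": cell.dst_device, "seq": cell.seq}


pkt = Packet(("10.0.0.1", "10.0.0.2", 6, 1234, 80), b"x" * 200)
cells = slice_into_cells(pkt, src_device=1, dst_device=7)
cell_header_fields = [f.name for f in fields(Cell)]
drop_report = describe_drop(cells[2])
```

Under these assumptions, the drop report names a device pair and a sequence number but nothing that identifies the flow, which is why flow-oriented tools have nothing to key on inside the fabric.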

Defect 4: Increased cost

To break through the chassis size limit, DDC must interconnect the cluster's devices through high-speed cables and modules. This costs far more than a chassis device, where line cards and fabric boards are interconnected through PCB traces and high-speed links, and the larger the scale, the higher the interconnection cost.

At the same time, although DDC reduces the concentration of power consumption at a single point, the overall power consumption of a DDC cluster interconnected through cables and modules is higher than that of a chassis device. For chips of the same generation, and assuming the DDC cluster devices are interconnected by modules, the cluster's power consumption is 30% higher than that of a chassis device.

No reheating of leftovers: the DDC solution is not suitable for AI networks either

The immaturity and incompleteness of the DDC solution led to its sad exit from the DCI scene. Yet it is now being revived on the AI tailwind. The author believes DDC is not suitable for AI networks either, as analyzed in detail below.

Two core requirements of AI networks: high throughput and low latency

The traffic carried by an AI network is characterized by a small number of flows, each with high bandwidth. The traffic is also uneven, and many-to-one or many-to-many patterns (All-to-All and All-Reduce) occur frequently. As a result, uneven load, low link utilization, and packet loss caused by frequent congestion are likely, and computing power cannot be fully released.

DDC solves only the hash problem, and brings many defects of its own

DDC uses cell switching: it slices packets into cells and sends them round-robin across links according to reachability information. The traffic load is spread fairly evenly over every link, so bandwidth is fully utilized and the hash imbalance problem is largely solved. Beyond this, however, DDC still has four major defects in AI scenarios.
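
The difference between per-flow hashing and per-cell round-robin can be sketched in a few lines of Python. This is a toy model under stated assumptions: the CRC32 hash, the 256-byte cell size, the function names, and the four example flows are all illustrative, and real switches use their own hash functions and cell formats.

```python
import zlib


def hash_balance(flows, num_links):
    """Per-flow hashing: every byte of a flow lands on the same link."""
    load = [0] * num_links
    for five_tuple, size in flows:
        link = zlib.crc32(repr(five_tuple).encode()) % num_links
        load[link] += size
    return load


def cell_spray(flows, num_links, cell_size=256):
    """Cell switching: slice each flow into cells and send them round-robin."""
    load = [0] * num_links
    next_link = 0
    for _, size in flows:
        remaining = size
        while remaining > 0:
            cell = min(cell_size, remaining)   # the last cell may be shorter
            load[next_link] += cell
            next_link = (next_link + 1) % num_links
            remaining -= cell
    return load


# Four large flows (1 MiB each) over eight links: few flows, high bandwidth.
flows = [
    ((f"10.0.0.{i}", f"10.0.1.{i}", 17, 4791, 4791), 1024 * 1024)
    for i in range(4)
]
hashed = hash_balance(flows, num_links=8)
sprayed = cell_spray(flows, num_links=8)
```

With only four flows, hashing can occupy at most four of the eight links no matter how the hash falls, while the round-robin cell model puts the same number of bytes on every link.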

Defect 1: It requires specific hardware, and the closed private network is not general-purpose

The cell switching and VOQ technologies in the DDC architecture depend on specific hardware chips. None of the equipment in today's DCN networks can be reused. Ethernet has developed rapidly thanks to its plug-and-play convenience, generality, and standardization. DDC depends on specific hardware and builds a closed private network through a proprietary switching protocol, so it is not general-purpose.

Defect 2: The large-buffer design increases network cost and is not suitable for large-scale DCN networking

If the DDC solution enters the DCN, it bears not only the high interconnection cost but also the cost of the chip's large buffer. DCN networks currently use small-buffer devices, with a maximum of only 64 MB, whereas the DDC solution, which originated in DCI scenarios, typically uses chips with gigabytes of HBM. Compared with DCI, a large-scale DCN is more sensitive to network cost.

Defect 3: Static network latency increases, which does not fit AI scenarios

The goal of a high-performance AI network is to release computing power by shortening job completion time. DDC's large buffers hold packets, which inevitably increases the static latency of hardware forwarding. Cell switching adds further forwarding latency through packet slicing, encapsulation, and reassembly. According to comparative test data, DDC increases forwarding latency by 1.4 times compared with a traditional Ethernet network.

Defect 4: As data center scale grows, DDC's unreliability problem worsens

In a DCI scenario, DDC only has to replace a chassis device. To enter the DCN, DDC must form a much larger cluster, covering at least one network POD. This means the components of this stretched "chassis" are farther apart. That in turn places higher demands on the reliability of the cluster's management and control plane, on synchronized management of the device NOS, and on POD-level operations and maintenance. DDC's various defects will multiply.

DDC is at most a transitional solution

Of course, no problem is insoluble. If some constraints are accepted, this specific scenario can easily become a stage on which the major vendors "show off their skills". But networks value reliability, simplicity, and efficiency, and dislike complexity. Especially in the current climate of "reducing staff and increasing efficiency", the cost of implementing DDC really must be weighed.

In AI scenarios, network load sharing has in many cases already been solved by global static or dynamic orchestration of forwarding paths. In the future it can also be solved by endpoint NICs that support packet spraying and out-of-order reassembly. DDC is therefore at best a short-term transitional solution.
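
As a rough illustration of the endpoint-based alternative, the following Python sketch sprays the packets of one flow across several paths and restores their order at the receiver. The function and class names, the per-packet sequence numbers, and the simulated arrival order are assumptions made for this example; it does not describe any particular NIC's implementation.

```python
def spray(packets, num_paths):
    """Sender side: spread consecutive packets of one flow across paths."""
    paths = [[] for _ in range(num_paths)]
    for seq, payload in enumerate(packets):
        paths[seq % num_paths].append((seq, payload))
    return paths


class ReorderBuffer:
    """Receiver side: hold early packets and release payloads in sequence order."""

    def __init__(self):
        self.expected = 0     # next sequence number to deliver
        self.pending = {}     # out-of-order packets waiting for a gap to fill

    def receive(self, seq, payload):
        self.pending[seq] = payload
        released = []
        while self.expected in self.pending:
            released.append(self.pending.pop(self.expected))
            self.expected += 1
        return released


paths = spray(list("abcdef"), num_paths=3)

# Simulate skewed path latency: path 2 arrives first, then path 0, then path 1.
arrivals = paths[2] + paths[0] + paths[1]

receiver = ReorderBuffer()
delivered = []
for seq, payload in arrivals:
    delivered.extend(receiver.receive(seq, payload))
```

Here load is spread per packet over ordinary Ethernet paths, and the cost of restoring order is paid at the endpoint rather than inside a proprietary fabric.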

Digging deeper, the driving force behind DDC may be DNX

Finally, let's talk about Broadcom, the mainstream network chip company. Two of its product families are most familiar: StrataXGS and StrataDNX. StrataXGS has stayed on the high-bandwidth, low-cost route, quickly launching small-buffer, high-bandwidth chips, and continues to lead in DCN market share. StrataDNX bears the cost of a large buffer, carries on the legend of VOQ plus cell switching, and hopes that DDC's entry into the data center will extend its life. There seem to be no such deployments in North America. Domestic DDC deployments may be DNX's last lifeline.

Today, the supply of much hardware, such as GPUs, is restricted to some extent in our country. Do we really need DDC? Let's give domestically developed devices more of a chance!