4 ways to ensure service availability in the face of burst traffic

Foreword

You may have had this experience: an online system is suddenly hit by a large amount of traffic. It may be a hacker attack, or the business volume may simply be far greater than you estimated. If the system has no protective measures, the load becomes too high, system resources are gradually exhausted, and interface responses get slower and slower until the service becomes unavailable. This in turn exhausts the resources of the upstream systems that call your interface, and finally leads to an avalanche across the whole system. That is a catastrophic outcome, so what can be done about it?

When facing this kind of sudden traffic, the core idea is to give priority to core services and to the vast majority of users. There are four common coping methods: downgrading, circuit breaking, rate limiting, and queuing. I will explain them one by one below.

1. Downgrade

Downgrading means that the system reduces the functionality of certain services or interfaces. It may provide only part of a function or stop a function completely, so that core functions are guaranteed first.

For example, during the midnight rush of Taobao's Double 11 sale, you will find that the product return function cannot be used. As another example, a forum can be downgraded so that users can view posts but not create them, or can view posts and comments but not post comments.

There are two common ways to implement downgrade:

- System backdoor downgrade

Put simply, the system reserves a backdoor for downgrade operations. For example, the system provides a downgrade URL, and accessing this URL is equivalent to executing a downgrade instruction. The specific instruction can be passed in through URL parameters. This scheme carries security risks, so security measures such as a password are usually added to the URL as well.

A system backdoor is cheap to implement. Its main disadvantage is that when there are many servers, each one has to be operated on individually, which is inefficient.
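A minimal sketch of such a backdoor might look like the following. The `/admin/downgrade` path, the `feature`, `action` and `token` parameters, and the feature names are all hypothetical, chosen only for illustration; a real system would also want HTTPS and proper authentication rather than a shared password.

```python
import hmac
from urllib.parse import parse_qs, urlparse

# Hypothetical shared secret and in-memory switch table (assumptions for this sketch).
ADMIN_TOKEN = "change-me"
downgraded = set()  # features currently switched off on this server


def handle_downgrade_url(url: str) -> bool:
    """Apply a downgrade instruction carried in URL parameters.

    Returns True if the instruction was accepted, False otherwise.
    """
    parsed = urlparse(url)
    if parsed.path != "/admin/downgrade":
        return False
    params = parse_qs(parsed.query)
    token = params.get("token", [""])[0]
    # Constant-time comparison of the password carried in the URL.
    if not hmac.compare_digest(token.encode(), ADMIN_TOKEN.encode()):
        return False
    feature = params.get("feature", [""])[0]
    action = params.get("action", [""])[0]
    if not feature or action not in ("off", "on"):
        return False
    if action == "off":
        downgraded.add(feature)      # downgrade: switch the feature off
    else:
        downgraded.discard(feature)  # recover: switch the feature back on
    return True


def is_available(feature: str) -> bool:
    """Business code checks this before serving a non-core feature."""
    return feature not in downgraded
```

Because the switch table lives in each server's memory, the URL has to be called once per server, which is exactly the inefficiency described above.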

- Independent downgrading system

To address the shortcomings of the backdoor approach, the downgrade operation can be split out into an independent system that supports more complex features such as permission management and batch operations.

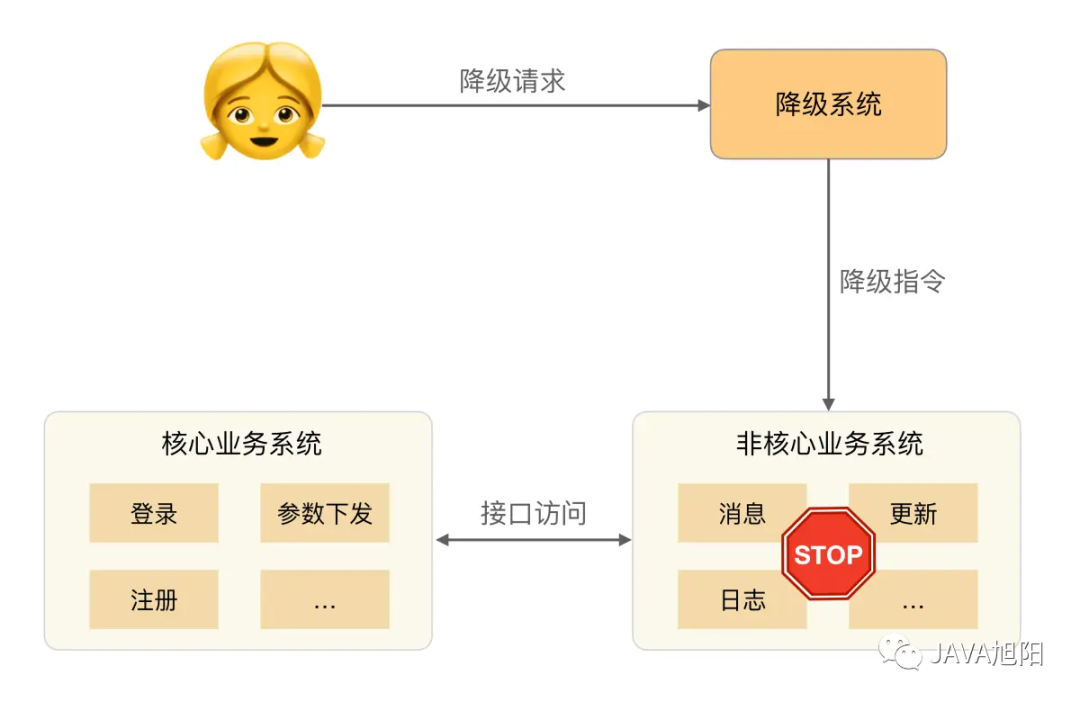

The basic structure is as follows:

2. Circuit breaking

Circuit breaking means that when a rule is met, for example 60% of requests returning errors within 1 minute, the system stops calling an external interface. This prevents the failure of an external interface from causing a sharp drop in the system's own processing capacity, or an outright failure.

Circuit breaking and downgrading are easy to confuse because, judging by the names alone, both seem to mean disabling a certain function. Their purposes differ, though: downgrading deals with failures of the system itself, while circuit breaking deals with failures of the external systems it depends on.
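To make the "60% errors within 1 minute" rule concrete, here is a rough sketch of a breaker built around it. The `min_calls` and `open_seconds` parameters are assumptions added so the example is usable (the rule above does not specify them), and the half-open probing state that production libraries typically implement is left out for brevity.

```python
import time
from collections import deque


class CircuitBreaker:
    def __init__(self, error_ratio=0.6, window_seconds=60.0, min_calls=10,
                 open_seconds=30.0, clock=time.monotonic):
        self.error_ratio = error_ratio        # trip when failures/total reaches this
        self.window_seconds = window_seconds  # sliding window for the statistics
        self.min_calls = min_calls            # assumption: ignore tiny samples
        self.open_seconds = open_seconds      # assumption: cool-down before retrying
        self.clock = clock
        self.results = deque()  # (timestamp, succeeded) pairs inside the window
        self.opened_at = None   # time the breaker tripped, or None while closed

    def allow(self):
        """Return True if a call to the external interface may go ahead."""
        now = self.clock()
        if self.opened_at is not None:
            if now - self.opened_at < self.open_seconds:
                return False
            # Cool-down elapsed: close the breaker and start counting afresh.
            self.opened_at = None
            self.results.clear()
        return True

    def record(self, succeeded):
        """Record one call result and trip the breaker if the rule is met."""
        now = self.clock()
        self.results.append((now, succeeded))
        while self.results and now - self.results[0][0] > self.window_seconds:
            self.results.popleft()
        total = len(self.results)
        failures = sum(1 for _, ok in self.results if not ok)
        if total >= self.min_calls and failures / total >= self.error_ratio:
            self.opened_at = now

    def call(self, func, fallback):
        """Run func through the breaker; use fallback when open or on error."""
        if not self.allow():
            return fallback()
        try:
            result = func()
        except Exception:
            self.record(False)
            return fallback()
        self.record(True)
        return result
```

While the breaker is open, `call` never touches the failing dependency, so its timeouts can no longer tie up the caller's threads.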

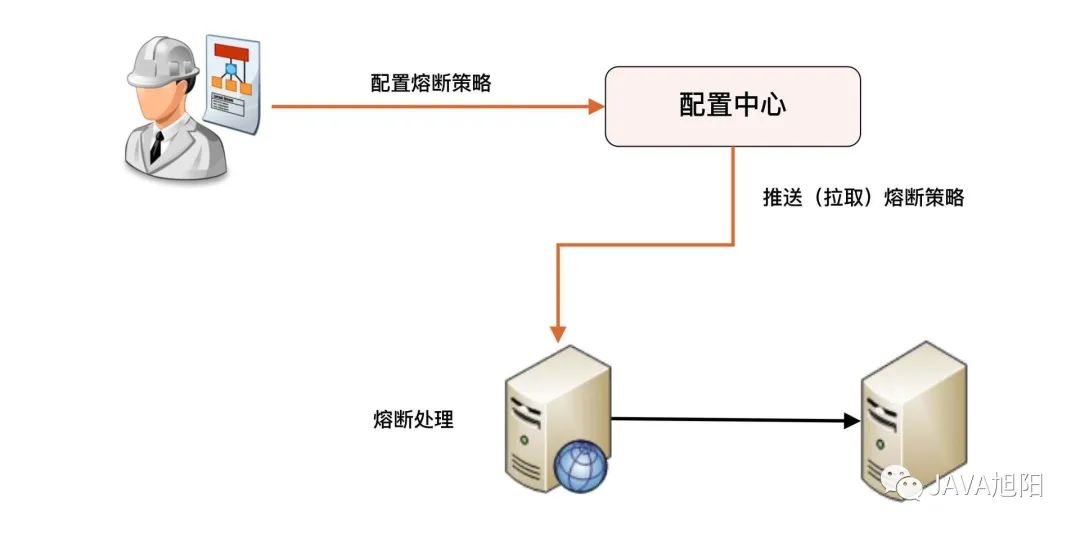

There are two mainstream implementations of service circuit breaking: Spring Cloud Netflix Hystrix and Alibaba's Sentinel. Our company's project uses Sentinel.

- Hystrix is an open-source library for handling latency and fault tolerance in distributed systems. In a distributed system, calls to many dependencies will inevitably fail through timeouts, exceptions, and so on. Hystrix ensures that a problem in one dependency does not bring down the whole service, which avoids cascading failures and improves the stability of the distributed system.

- Sentinel is an open-source, lightweight, highly available flow control component for distributed service architectures, developed by the Alibaba middleware team. It takes traffic as its entry point and helps users protect service stability across multiple dimensions such as flow control, circuit breaking, and system load protection.

3. Rate limiting

Every system has an upper limit on the load it can serve. When traffic exceeds that limit, the system may freeze or crash, which is why downgrading and rate limiting exist. Rate limiting means that under high concurrency, or a sudden spike in concurrency, the system sacrifices some requests or delays processing them in order to keep the overall service stable and available.

Rate limiting is generally implemented inside the system. Common methods fall into two categories: request-based rate limiting and resource-based rate limiting.

- Request-based rate limiting

Request-based rate limiting approaches the problem from the perspective of external access requests. There are two common methods.

The first is to limit the total amount, that is, to cap the cumulative value of some indicator. A common case is limiting the total number of users served by the system. For example, a live broadcast room limits the total number of users to 1 million, and once that limit is reached new users cannot enter; or a flash sale has only 100 products and caps the number of participating users at 10,000, so every user after the first 10,000 is rejected directly.

The second is to limit the amount per time window, that is, to cap some indicator within a period of time. For example, only 10,000 users are allowed to access within 1 minute, or the peak request rate is capped at 100,000 per second.
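Both methods come down to a counter and a comparison, as this sketch suggests. The class and method names are made up for illustration, the time-based limiter uses a simple fixed window (one of several possible windowing schemes), and neither class is thread-safe as written.

```python
import time


class TotalLimiter:
    """Caps a cumulative count, e.g. total participants in a flash sale."""

    def __init__(self, max_total):
        self.max_total = max_total
        self.admitted = 0

    def try_admit(self):
        if self.admitted >= self.max_total:
            return False  # cap reached: reject directly
        self.admitted += 1
        return True


class FixedWindowLimiter:
    """Caps the count per time window, e.g. 10,000 users per minute."""

    def __init__(self, max_per_window, window_seconds=60.0, clock=time.monotonic):
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        self.clock = clock
        self.window_start = clock()
        self.count = 0

    def try_admit(self):
        now = self.clock()
        if now - self.window_start >= self.window_seconds:
            # A new window has begun: reset the counter.
            self.window_start = now
            self.count = 0
        if self.count >= self.max_per_window:
            return False
        self.count += 1
        return True
```

A request handler would call `try_admit()` first and return a "busy, try again later" response whenever it yields `False`.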

Whether the limit is on the total or on a time window, both are easy to implement. The main problem in practice is that a suitable threshold is hard to find. For example, the system is set to allow 10,000 users per minute but in fact cannot cope with 6,000; or the system is under little pressure at 10,000 users per minute yet has already started to discard user requests.

Even when a suitable threshold is found, request-based rate limiting still depends on the hardware. For example, a 32-core machine and a 64-core machine have very different processing power, so their thresholds differ. Some engineers may think the threshold can be derived by simple arithmetic on hardware specifications, but in practice this does not work: the business processing performance of a 64-core machine is not 2 times that of a 32-core machine. It may be 1.5 times, or even only 1.1 times.

One way to find a reasonable threshold is performance stress testing. However, stress tests cover a limited set of scenarios, and a function that the tests did not cover may turn out to put heavy pressure on the system. Another way is gradual tuning: set an initial threshold, go online and observe how the system runs, and adjust the threshold if it proves unreasonable.

Based on the above analysis, threshold-based access limiting is better suited to systems with relatively simple business functions, such as load balancers, gateways, and flash sale systems.

- Resource-based rate limiting

Request-based rate limiting looks at the system from the outside, while resource-based rate limiting looks from the inside: it identifies the key resources that affect performance within the system and limits their use. Common internal resources include connections, file handles, threads, and request queues.

For example, in a server implemented with Netty, each incoming request is first put into a queue, and business threads read requests from the queue for processing. The maximum queue length is 10,000, and further requests are rejected once the queue is full. Another option is to limit CPU load or usage, rejecting new requests when CPU usage exceeds 80%.
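The two examples above can be sketched together in a few lines. This is not Netty code: it uses Python's standard `queue.Queue` as a stand-in for the request queue, and `cpu_usage` is a hypothetical probe (a callable returning a value between 0.0 and 1.0) that a real system would back with an actual metrics source.

```python
import queue


class BoundedRequestQueue:
    def __init__(self, max_length=10000, cpu_threshold=0.80,
                 cpu_usage=lambda: 0.0):
        self.requests = queue.Queue(maxsize=max_length)
        self.cpu_threshold = cpu_threshold
        self.cpu_usage = cpu_usage  # hypothetical probe, injected by the caller

    def offer(self, request):
        """Try to accept a request; return False if it must be rejected."""
        if self.cpu_usage() > self.cpu_threshold:
            return False  # CPU is the constrained resource right now
        try:
            self.requests.put_nowait(request)
        except queue.Full:
            return False  # queue is the constrained resource right now
        return True

    def take(self):
        """Called by a business thread; raises queue.Empty if nothing waits."""
        return self.requests.get_nowait()
```

Note that nothing here counts requests per minute: the limit follows whatever the queue and CPU can actually absorb at that moment.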

Resource-based rate limiting reflects the current pressure on the system more accurately than request-based rate limiting. There are two main difficulties in designing it: determining which resources are key, and determining the thresholds for them.

This is usually also an iterative tuning process: at design time, choose a key resource and threshold by reasoning, then test and verify, then go online and observe. If the choice proves unreasonable, tune it again.

4. Queuing

Everyone should be familiar with queuing. When you buy a train ticket on 12306, it tells you that you are in a queue, and after you wait for a while the ticket is locked and you can pay. At the end of the year, when huge numbers of people in China are buying tickets, 12306 copes through this queuing mechanism. The disadvantage is that the user experience is not as good.

Because queuing has to buffer a large number of business requests temporarily, and a single system cannot buffer that much data, queuing is generally implemented by an independent system, for example using a message queue such as Kafka to buffer user requests.

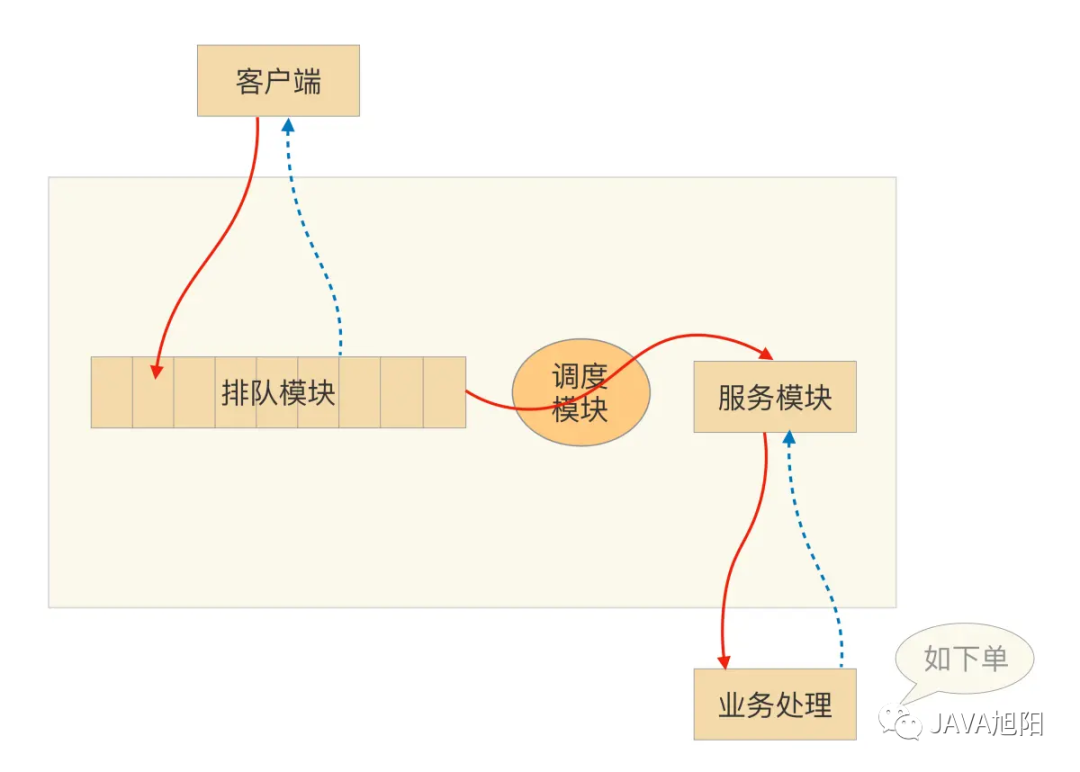

- Queuing module

Responsible for receiving users' flash sale requests and storing them in first-in, first-out order. Each product in the flash sale has its own queue, and the queue size can be set according to the number of units of that product on sale (optionally with some margin).

- Scheduling module

Responsible for dynamically scheduling requests from the queuing module to the service module. It continuously checks the service module, and as soon as processing capacity is free, it takes the user request at the head of the queue and dispatches it to the service module. The scheduling module acts as an intermediary, but it does more than pass requests along: it also regulates the system's processing capacity, since the rate at which requests are pulled from the queuing module can be adjusted dynamically according to the actual processing capacity of the service module.

- Service module

Responsible for invoking the real business logic to process the request, returning the result, and calling the queuing module's interface to write the result back.
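How the three modules cooperate can be sketched in a single process. This is only an illustration: in-memory deques stand in for a message queue such as Kafka, the class and method names are invented for the example, and `free_slots` is a fixed number standing in for a real probe of the service module's spare capacity.

```python
from collections import deque


class QueuingModule:
    def __init__(self, max_length):
        self.max_length = max_length
        self.queues = {}   # product_id -> FIFO deque of waiting user ids
        self.results = {}  # (product_id, user_id) -> result written back

    def enqueue(self, product_id, user_id):
        q = self.queues.setdefault(product_id, deque())
        if len(q) >= self.max_length:
            return False  # queue for this product is full
        q.append(user_id)
        return True

    def pop_head(self, product_id):
        q = self.queues.get(product_id)
        return q.popleft() if q else None

    def write_back(self, product_id, user_id, result):
        self.results[(product_id, user_id)] = result


class ServiceModule:
    def __init__(self, queuing, free_slots):
        self.queuing = queuing
        self._free_slots = free_slots  # stand-in for a real capacity probe

    def free_slots(self):
        return self._free_slots

    def process(self, product_id, user_id):
        result = f"order created for {user_id}"  # placeholder business logic
        self.queuing.write_back(product_id, user_id, result)


class SchedulingModule:
    def __init__(self, queuing, service):
        self.queuing = queuing
        self.service = service

    def dispatch_once(self, product_id):
        """Move requests from the queue head to the service module,
        taking no more than its currently free capacity."""
        dispatched = 0
        for _ in range(self.service.free_slots()):
            user_id = self.queuing.pop_head(product_id)
            if user_id is None:
                break
            self.service.process(product_id, user_id)
            dispatched += 1
        return dispatched
```

A real scheduler would run `dispatch_once` in a loop, and the pull rate would rise or fall with whatever `free_slots()` reports at each round.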

Summary

Finally, we use a table to summarize the above four means of ensuring high service availability.