Regarding "computing power", this article is worth reading

In today’s article, let’s talk about computing power.

Over the past two years, computing power has become a popular concept in the ICT industry. It appears constantly in news reports and in speeches by industry leaders.

So, what exactly is computing power? What categories does computing power include and what are their uses? What is the current state of global computing power?

Next, Xiaozaojun will walk you through it in detail.

What is computing power

Taken literally, computing power is just what the name says: the ability to compute.

More specifically, computing power is the ability to process information and data in order to produce a target result.

We humans have this ability too. Calculation goes on at every moment of our lives, and the brain is a powerful computing engine. Most of the time we calculate without tools, through verbal and mental arithmetic. That kind of computing power is limited, though, so in complex situations we turn to tools. In ancient times those tools were straw ropes and stones. Later, as civilization advanced, we gained more practical tools such as counting rods (small sticks used for calculation) and the abacus, and our computing power kept improving.

In the 1940s came a computing revolution. In February 1946, ENIAC, the world's first general-purpose electronic digital computer, was unveiled, marking humanity's formal entry into the era of digital electronic computing.

ENIAC, 1946

Later, with the emergence and development of semiconductor technology, we entered the chip era. Chips have become the main carrier of computing power.

The world's first integrated circuit (chip), 1958

Time goes on.

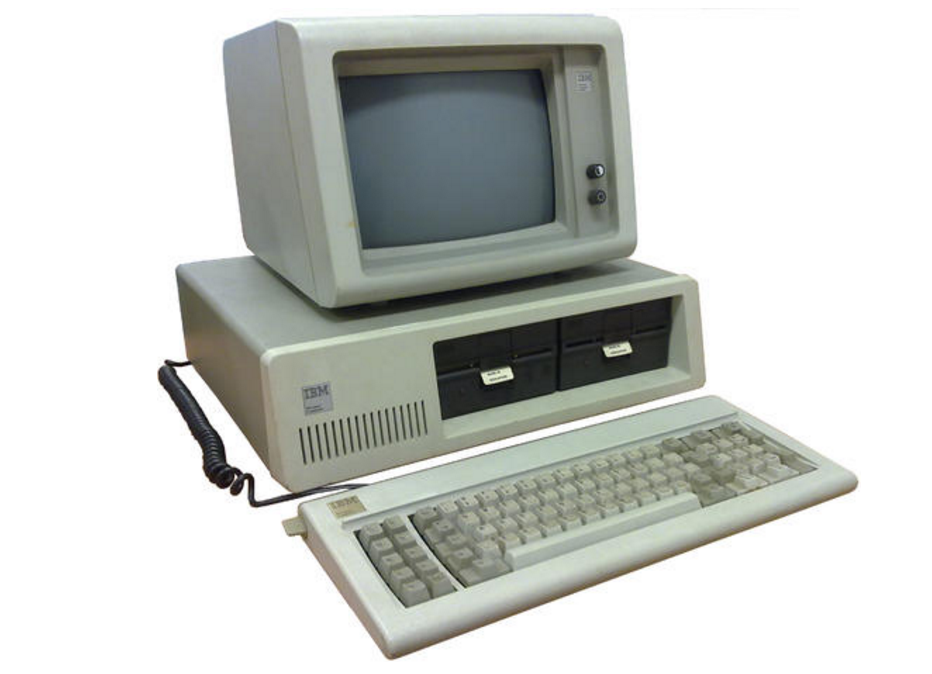

By the 1970s and 1980s, chip technology had come a long way under Moore's Law. Chip performance kept improving while chip size kept shrinking. Eventually computers were miniaturized, and the PC (personal computer) was born.

The first IBM PC (IBM 5150), 1981

The birth of the PC was a milestone. IT computing power no longer served only a handful of large enterprises with mainframes; it reached ordinary households and small and medium-sized businesses. The PC opened the door to an information age for everyone and drove the spread of informatization across society. With it, people could feel directly how IT computing power improved quality of life and productivity. The PC also laid the groundwork for the later boom of the Internet.

After the turn of the 21st century, computing power went through another great change, marked by the emergence of cloud computing.

Cloud computing

Before cloud computing, people were constrained by the limited computing power of single-point computing, in which one mainframe or one PC completes every computing task on its own. They had already tried distributed architectures such as grid computing, which breaks a huge computing task into many small ones and hands them to different computers.

Cloud computing is a new take on distributed computing. Its essence is to package and pool large numbers of scattered computing resources so as to deliver computing power with higher reliability, higher performance, and lower cost. Specifically, in cloud computing, software gathers computing resources such as central processing units (CPUs), memory, hard disks, and graphics cards (GPUs) into a virtual, effectively infinitely scalable "computing resource pool".

When users need computing power, the "computing resource pool" dynamically allocates resources to them, and they pay only for what they use.
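To make the idea of on-demand allocation and pay-as-you-go billing concrete, here is a minimal sketch. It is a toy model only: the `ResourcePool` class, its method names, and the flat per-core-hour price are all hypothetical, and real cloud platforms schedule and bill in far more elaborate ways.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Toy model of a cloud 'computing resource pool' (all names are hypothetical)."""
    total_cpu_cores: int
    price_per_core_hour: float  # assumed flat price, for illustration only
    allocated: dict = field(default_factory=dict)  # user -> cores currently in use

    def free_cores(self) -> int:
        """Cores not currently handed out to any user."""
        return self.total_cpu_cores - sum(self.allocated.values())

    def allocate(self, user: str, cores: int) -> bool:
        """Grant cores on demand if the pool has enough free capacity."""
        if cores <= 0 or cores > self.free_cores():
            return False
        self.allocated[user] = self.allocated.get(user, 0) + cores
        return True

    def release(self, user: str, hours_used: float) -> float:
        """Return the user's cores to the pool and bill only for the time used."""
        cores = self.allocated.pop(user, 0)
        return cores * hours_used * self.price_per_core_hour


pool = ResourcePool(total_cpu_cores=64, price_per_core_hour=0.25)
granted = pool.allocate("alice", 16)           # alice asks for 16 cores
bill = pool.release("alice", hours_used=3.0)   # she is billed for 16 cores x 3 hours
print(granted, bill, pool.free_cores())
```

Once the user releases the cores, they go back into the pool for someone else to use, which is where the cost advantage over idle self-owned hardware comes from.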

Compared with buying their own equipment, building their own server rooms, and handling operations and maintenance themselves, cloud computing offers users a clear cost advantage.

A cloud computing data center

Once computing power moved to the cloud, the data center became its main carrier, and the scale of human computing power began a new leap.

Classification of computing power

Cloud computing and data centers emerged because the steady deepening of informatization and digitization created strong demand for computing power across society.

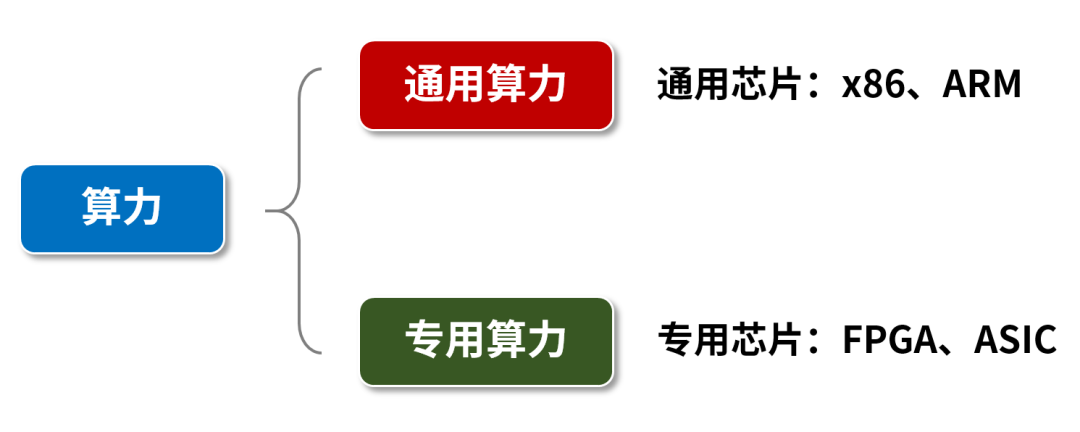

This demand comes from the consumer sector (mobile Internet, video streaming, online shopping, ride-hailing, O2O, and so on), from industry (manufacturing, transportation and logistics, finance and securities, education, healthcare, and so on), and from urban governance (Smart City, Yizhengtong, City Brain, and so on).

Different applications and needs call for different algorithms, and different algorithms place different requirements on computing power. Generally, we divide computing power into two categories: general-purpose computing power and dedicated computing power.

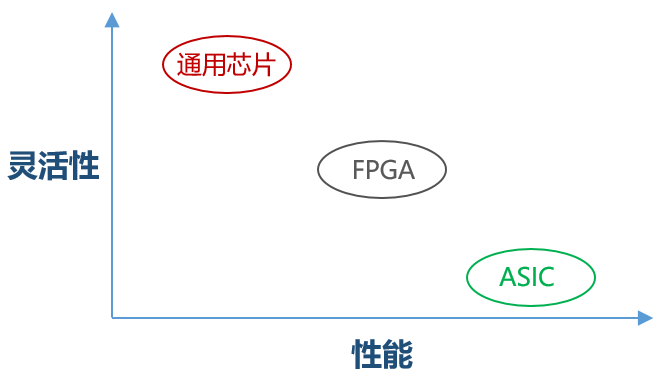

You have probably heard that the chips that deliver computing power are divided into general-purpose chips and special-purpose chips. CPUs such as x86 processors are general-purpose chips. They can handle diverse tasks flexibly, but at the cost of higher power consumption.

Special-purpose chips mainly means FPGAs and ASICs.

An FPGA (field-programmable gate array) is a programmable integrated circuit. Its internal logic structure can be changed through hardware programming, while its software is deeply customized to perform specialized tasks.

An ASIC is an application-specific integrated circuit. As the name suggests, it is a chip custom-built for one purpose, with most of its algorithms hard-wired in silicon.

An ASIC performs a specific computing function. Its role is narrow, but its energy consumption is very low. An FPGA sits between a general-purpose chip and an ASIC.

Let's take Bitcoin mining as an example.

In the early days, people mined with PCs (x86 general-purpose chips). As mining grew more difficult, that computing power was no longer enough, so miners turned to graphics cards (GPUs). Then the graphics cards consumed so much energy that the value of the mined coins could not cover the electricity bill, and miners moved on to clusters of FPGAs and ASICs.

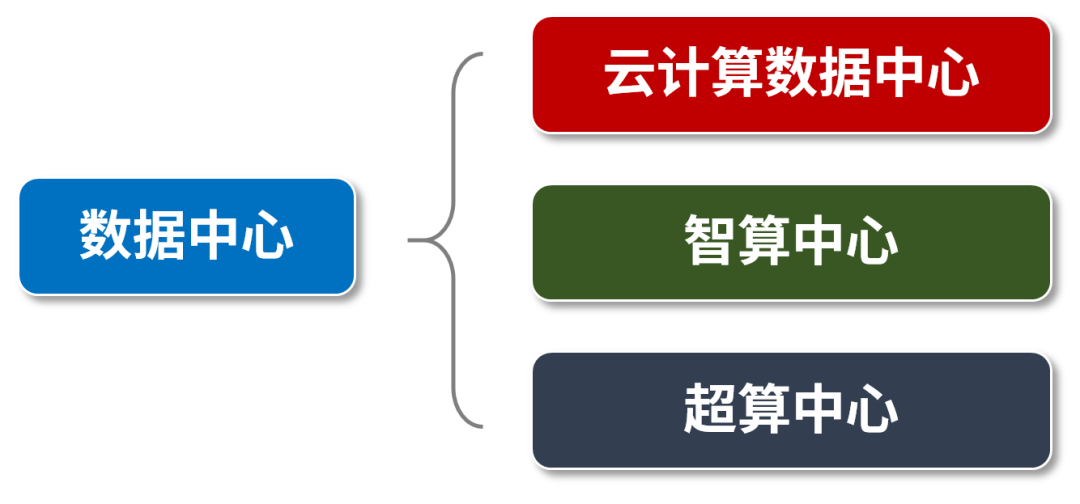

In the data center, computing tasks are likewise divided into basic general-purpose computing and high-performance computing (HPC).

HPC computing is further subdivided into three categories:

Scientific computing: physics and chemistry, meteorology and environmental protection, life sciences, oil exploration, astronomy, etc.

Engineering computing: computer-aided engineering, computer-aided manufacturing, electronic design automation, electromagnetic simulation, etc.

Intelligent computing: artificial intelligence (AI) computing, including machine learning, deep learning, data analysis, etc.

You have probably heard of scientific computing and engineering computing. These specialized research fields generate huge amounts of data and demand extremely high computing power.

Take oil and gas exploration as an example. Put simply, it amounts to giving the Earth's surface a CT scan. The raw data from a single project often exceeds 100 TB and may even exceed 1 PB. Data on that scale needs massive computing power behind it.

Intelligent computing deserves special attention. AI is a direction the whole of society is focused on, and every field is studying how to apply and deploy it.

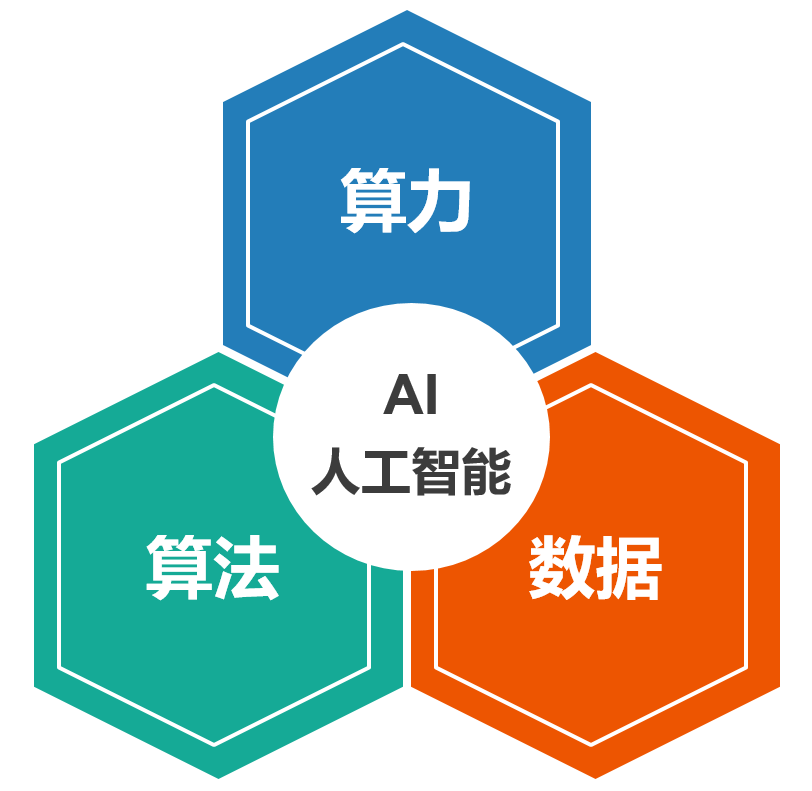

The three core elements of artificial intelligence are computing power, algorithms and data.

We all know that AI is a heavy consumer of computing power; it practically "eats" it. AI computing involves large numbers of matrix and vector multiplications and additions. These operations are highly specialized, so the CPU is a poor fit for them.

In real-world applications, people mainly use GPUs and the dedicated chips mentioned above. The GPU in particular is the workhorse of today's AI computing power. Although it is a graphics processor, a GPU has far more cores (arithmetic logic units) than a CPU. It is well suited to sending the same instruction stream to many cores in parallel, each working on different input data, which is how it gets through the huge numbers of simple operations in graphics processing or big data processing. GPUs are therefore better suited to compute-intensive, highly parallel tasks such as AI computing.

In recent years, strong demand for AI computing has led the country to build many intelligent computing centers, that is, data centers dedicated to intelligent computing.
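To see why matrix work suits many-core hardware, consider a small sketch of matrix multiplication in plain Python. It is illustrative only: the function name `matmul_count_ops` is made up here, and real AI frameworks run this kind of loop on GPUs rather than in interpreted code. The point is that every output element is an independent chain of multiply-adds, so the same instructions can run on many cores at once with different data.

```python
def matmul_count_ops(a, b):
    """Multiply two matrices (lists of rows) and count the multiply-add operations."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    multiply_adds = 0
    for i in range(n):
        for j in range(m):
            # out[i][j] depends only on row i of a and column j of b,
            # so all n * m output elements could be computed in parallel.
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
                multiply_adds += 1
            out[i][j] = acc
    return out, multiply_adds


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
product, ops = matmul_count_ops(a, b)
print(product, ops)
```

For two n-by-n matrices the count grows as n cubed, which is why large models need so much computing power.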

Chengdu Intelligent Computing Center (picture from the Internet)

Besides intelligent computing centers, there are now many supercomputing centers. They house supercomputers such as Tianhe-1, built specifically to take on large-scale scientific and engineering computing tasks.

(The picture comes from the Internet)

The data centers we usually see are mostly cloud computing data centers.

Their workloads are mixed: basic general-purpose computing, high-performance computing, and a great deal of heterogeneous computing (computing that uses processors with different instruction sets at the same time). As demand for high-performance computing grows, the share of dedicated computing chips is gradually increasing. The TPUs, NPUs, and DPUs that have become popular in the past few years are all dedicated chips.

The "computing power offloading" you often hear about does not mean deleting computing power. It means moving many computing tasks (such as virtualization, data forwarding, compression and storage, encryption and decryption) from the CPU to chips such as NPUs and DPUs, reducing the CPU's computational burden.

In recent years, alongside basic general computing power, intelligent computing power, and supercomputing power, the scientific community has also begun to talk about frontier computing power, mainly quantum computing, photonic computing, and the like, which deserve attention.

Measure of computing power

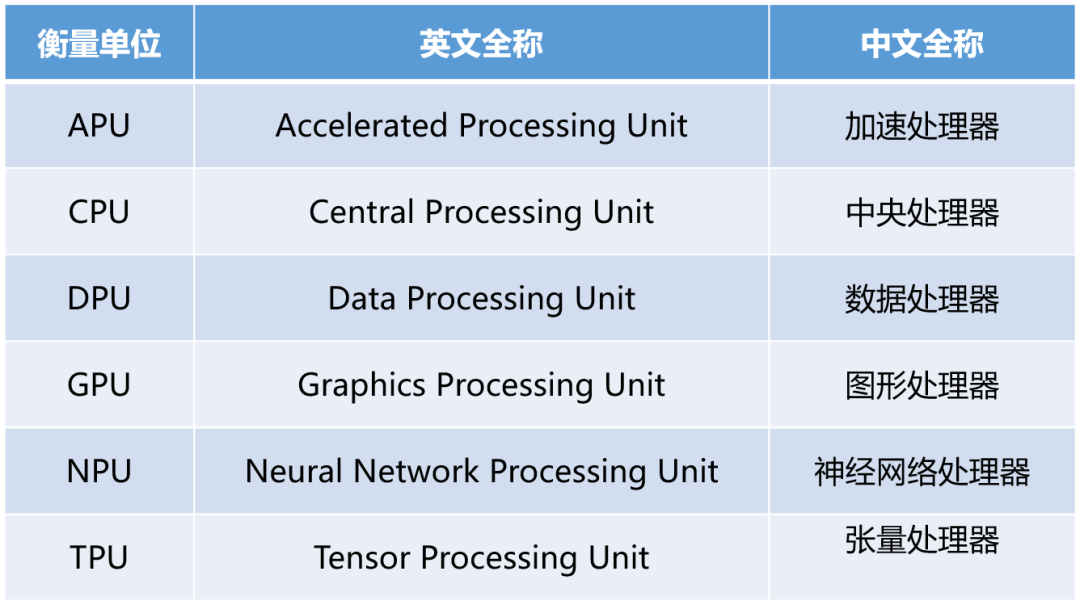

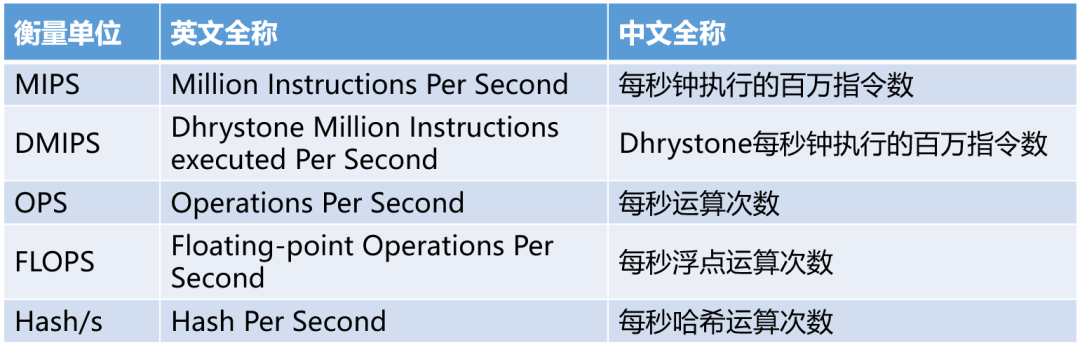

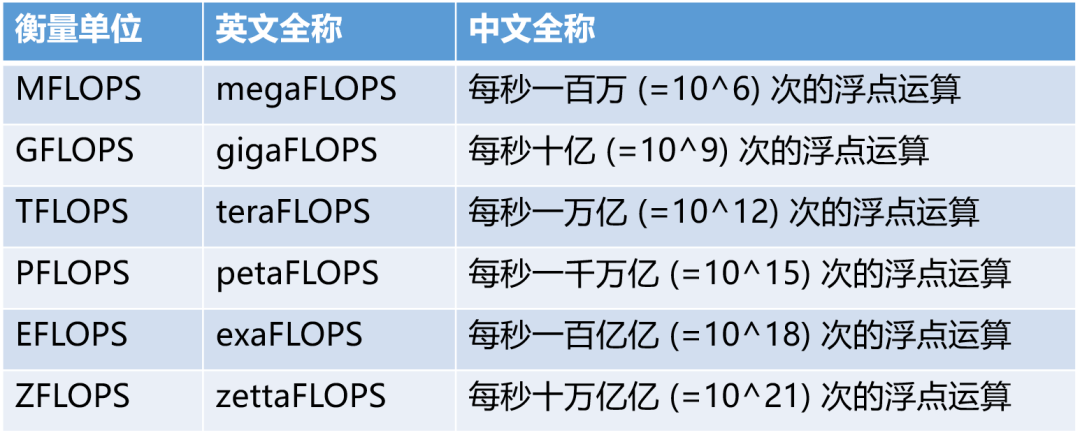

Since computing power is a "capability", there are naturally metrics and benchmark units for measuring it. The units most people know are FLOPS, TFLOPS, and so on.

In fact, there are many other metrics for computing power, such as MIPS, DMIPS, and OPS.

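FLOPS stands for floating-point operations per second. MFLOPS, GFLOPS, TFLOPS, PFLOPS, and so on are FLOPS at different orders of magnitude, each step being a factor of 1,000: 1 MFLOPS = 10^6 FLOPS, 1 GFLOPS = 10^9 FLOPS, 1 TFLOPS = 10^12 FLOPS, 1 PFLOPS = 10^15 FLOPS, 1 EFLOPS = 10^18 FLOPS, and 1 ZFLOPS = 10^21 FLOPS.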
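Because the prefixes are just powers of ten, converting between them is simple arithmetic. The sketch below shows one way to do it. The helper names `to_flops` and `convert` are invented for this example, and the two sample values are figures quoted later in this article.

```python
# Power of ten for each FLOPS prefix (standard SI prefixes).
FLOPS_EXPONENTS = {"": 0, "K": 3, "M": 6, "G": 9, "T": 12, "P": 15, "E": 18, "Z": 21}


def to_flops(value, unit):
    """Convert a value in a unit such as 'EFLOPS' to plain FLOPS."""
    unit = unit.upper()
    if not unit.endswith("FLOPS"):
        raise ValueError(f"not a FLOPS unit: {unit}")
    prefix = unit[: -len("FLOPS")]
    return value * 10 ** FLOPS_EXPONENTS[prefix]


def convert(value, from_unit, to_unit):
    """Convert between two FLOPS units, for example EFLOPS to ZFLOPS."""
    return to_flops(value, from_unit) / to_flops(1, to_unit)


# 135 EFLOPS expressed in ZFLOPS, and 10,000 GFLOPS expressed in TFLOPS.
total_zflops = convert(135, "EFLOPS", "ZFLOPS")
per_capita_tflops = convert(10_000, "GFLOPS", "TFLOPS")
print(total_zflops, per_capita_tflops)
```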

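Floating-point numbers come in different precisions, such as FP16 (half precision), FP32 (single precision), and FP64 (double precision), so a FLOPS figure should always state which precision it refers to.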
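The difference between these formats is easy to see with Python's standard `struct` module, whose `e`, `f`, and `d` format codes pack a number as IEEE 754 half, single, and double precision. The sketch below stores the same value in each format and reads it back; the helper name `round_trip` is made up for this example.

```python
import struct


def round_trip(value, fmt):
    """Store a Python float in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


x = 0.1
fp16 = round_trip(x, "<e")  # half precision, 2 bytes
fp32 = round_trip(x, "<f")  # single precision, 4 bytes
fp64 = round_trip(x, "<d")  # double precision, 8 bytes

for name, fmt, stored in [("FP16", "<e", fp16), ("FP32", "<f", fp32), ("FP64", "<d", fp64)]:
    print(name, struct.calcsize(fmt), "bytes, error:", abs(stored - x))
```

Fewer bits mean a larger rounding error but less data to move and compute, which is why AI workloads often accept FP16 while scientific computing tends to want FP64.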

Computing power varies enormously from one kind of device to another. To help you appreciate the difference, Xiaozaojun has put together a comparison table:

Earlier we mentioned general computing, intelligent computing, and supercomputing. The trend is that intelligent computing power and supercomputing power are growing far faster than general computing power. According to GIV forecasts, by 2030 general computing power (FP32) will grow tenfold to 3.3 ZFLOPS, while AI computing power (FP16) will grow 500-fold to 105 ZFLOPS.
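These forecasts also tell us roughly where things stand today: dividing each 2030 target by its growth multiple gives the implied starting point. The sketch below does only that arithmetic. The function name is hypothetical, and the two results are not directly comparable because one is measured in FP32 and the other in FP16.

```python
def implied_baseline(target_zflops, growth_multiple):
    """Starting value implied by a target and an 'N-fold' growth figure."""
    return target_zflops / growth_multiple


general_baseline = implied_baseline(3.3, 10)   # general computing power, FP32
ai_baseline = implied_baseline(105, 500)       # AI computing power, FP16

print(f"general computing power today: about {general_baseline:.2f} ZFLOPS (FP32)")
print(f"AI computing power today: about {ai_baseline:.2f} ZFLOPS (FP16)")
```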

The current state and future of computing power

As early as 1961, John McCarthy, the "father of artificial intelligence", set out the goal of utility computing. He argued: "One day computing may be organized as a public utility, just as the telephone system is a public utility". Today his vision has become reality. In the digital wave, computing power has become a basic public resource like water and electricity, and data centers and communication networks have become important public infrastructure. This is the result of more than half a century of hard work by the IT and communications industries.

For human society as a whole, computing power is no longer just a technical concept. It has risen to the level of economics and philosophy, becoming the core productive force of the digital economy era and the cornerstone of society's digital and intelligent transformation.

Our individual lives, the operation of factories and businesses, and the work of government departments all depend on computing power. Key fields such as national security, national defense, and basic scientific research need massive computing power too. Computing power determines how fast the digital economy develops and how far society's intelligent transformation can go. According to data jointly released by IDC, Inspur Information, and the Global Industry Research Institute of Tsinghua University, every 1-point increase in the computing power index raises the digital economy and GDP by 3.5‰ and 1.8‰ respectively.
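To get a feel for what these per-mille figures mean, here is a small worked calculation. It assumes the effect scales linearly with the number of index points, which the source does not state, and the GDP of 1,000 units and the 5-point rise are made-up inputs used purely for illustration.

```python
# Per-point effects quoted above, converted from per mille to fractions.
DIGITAL_ECONOMY_PER_POINT = 3.5 / 1000
GDP_PER_POINT = 1.8 / 1000


def estimated_uplift(base_value, index_points, per_point):
    """Estimated increase, assuming a linear effect per index point (an assumption)."""
    return base_value * index_points * per_point


# Hypothetical economy: GDP of 1,000 units, computing power index up by 5 points.
gdp_uplift = estimated_uplift(1000, 5, GDP_PER_POINT)
print(f"estimated GDP increase: about {gdp_uplift:.1f} units")
```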

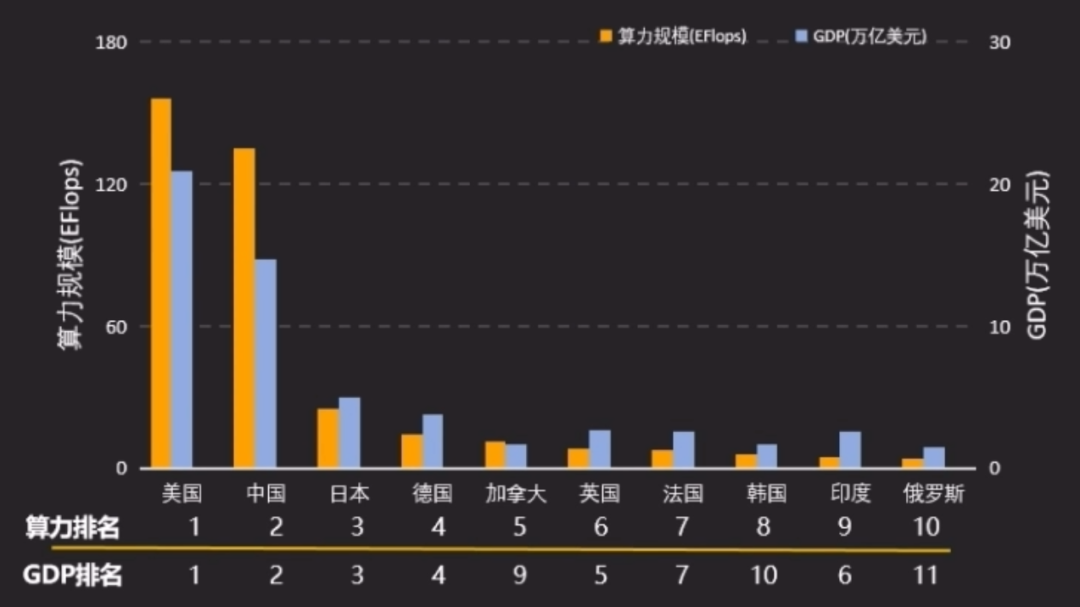

Across countries, the scale of computing power shows a significant positive correlation with the level of economic development. The greater a country's computing power, the higher its level of economic development tends to be.

Ranking of computing power and GDP of countries in the world (Source: Chi Jiuhong, speech at Huawei Computing Times Summit)

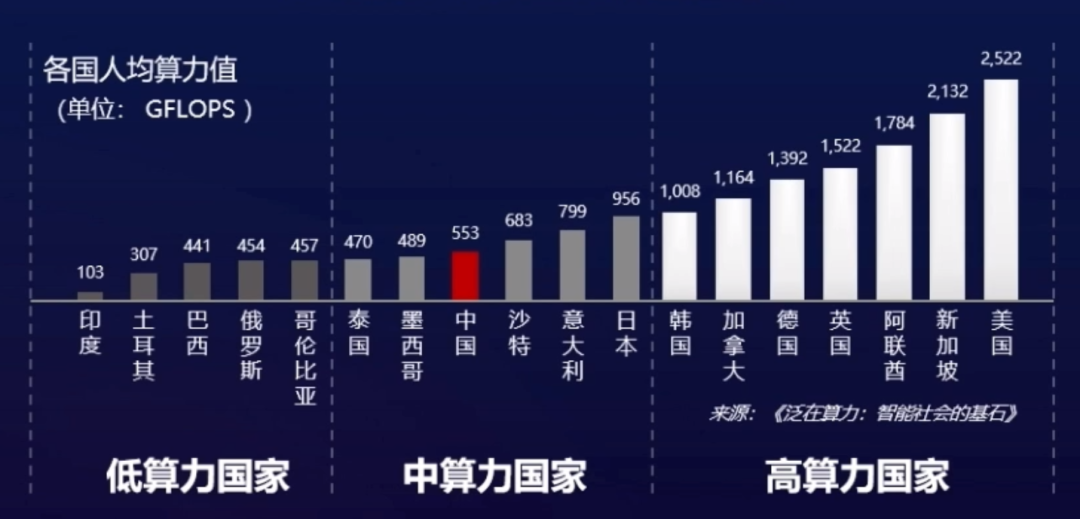

In the field of computing power, competition among countries is becoming increasingly fierce. In 2020, China's total computing power reached 135 EFLOPS, up 55% year on year and about 16 percentage points above the global growth rate. In absolute terms, our computing power currently ranks second in the world. Per capita, however, we hold no advantage and sit only at the level of mid-tier computing power countries.

Comparison of per capita computing power among countries in the world (Source: Tang Xiongyan, speech at Huawei Computing Times Summit)

Especially in core computing technologies such as chips, there is still a large gap between us and developed countries. Many chokepoint technologies remain unsolved, which seriously affects the security of our computing power and, in turn, national security. There is still a long way to go, and we need to keep working hard.

Recently, rivals have once again set their sights on lithography machines (picture from the Internet)

In the society of the future, informatization, digitization, and intelligence will accelerate further. The arrival of the Internet of Everything, the rollout of huge numbers of smart IoT terminals, and the deployment of AI scenarios will generate an unimaginable amount of data, which will further drive demand for computing power.

According to Roland Berger's forecast, from 2018 to 2030 computing power demand for autonomous driving will grow 390-fold and demand for smart factories 110-fold, while per capita computing power demand in major countries will grow 20-fold, from less than 500 GFLOPS today to 10,000 GFLOPS in 2035. According to the Inspur Artificial Intelligence Research Institute, global computing power will reach 6.8 ZFLOPS by 2025, a 30-fold increase over 2020.
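Multiples like "390-fold" are easier to grasp as yearly growth rates. The sketch below converts each forecast into the constant annual rate that would compound to the quoted multiple. It assumes steady growth across the whole period, which is a simplification, and the function name is made up for this example.

```python
def annual_growth_rate(multiple, years):
    """Constant yearly growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1 / years) - 1


# (growth multiple, number of years), taken from the forecasts quoted above.
forecasts = {
    "autonomous driving, 2018-2030": (390, 2030 - 2018),
    "smart factories, 2018-2030": (110, 2030 - 2018),
    "global computing power, 2020-2025": (30, 2025 - 2020),
}

rates = {name: annual_growth_rate(m, y) for name, (m, y) in forecasts.items()}
for name, rate in rates.items():
    print(f"{name}: about {rate:.0%} per year")
```

A 30-fold rise in five years works out to computing power nearly doubling every year.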

A new round of computing power revolution is accelerating.

Epilogue

Computing power is a vital resource, yet there are still many problems with how we use it.

Utilization and the balance of distribution are two examples. According to IDC data, the small, scattered pools of computing power that enterprises run themselves are currently only 10%-15% utilized, which is a great waste.

Moore's Law has been slowing since 2015, and the growth in computing power per unit of energy has gradually fallen behind the growth in data volume. While we keep tapping the computing potential of chips, we must also think about how computing power is scheduled as a resource. So how do we schedule computing power? Can existing communication network technology meet its scheduling needs?

Stay tuned for the next installment: What exactly is a "computing network"?