A thought-provoking report on a major communication failure

Beginning at 1:35 a.m. local time on July 2, a large-scale communication failure hit the mobile network of Japanese operator KDDI. Across Japan, users were unable to make calls or send and receive text messages, and data communication slowed down.

The incident was wide in scope and long in duration, affecting 39.15 million users. Service was not largely restored until the afternoon of July 4, causing great inconvenience and losses across Japanese society. It was also the largest network failure in KDDI's history.

After the failure, KDDI executives promptly held a press conference, bowed in apology to the many individual and corporate users who were badly affected, and said they would consider compensation for losses.

So what caused this large-scale communication failure? KDDI's report makes for thought-provoking reading.

Fault cause 1: Core router cutover failure

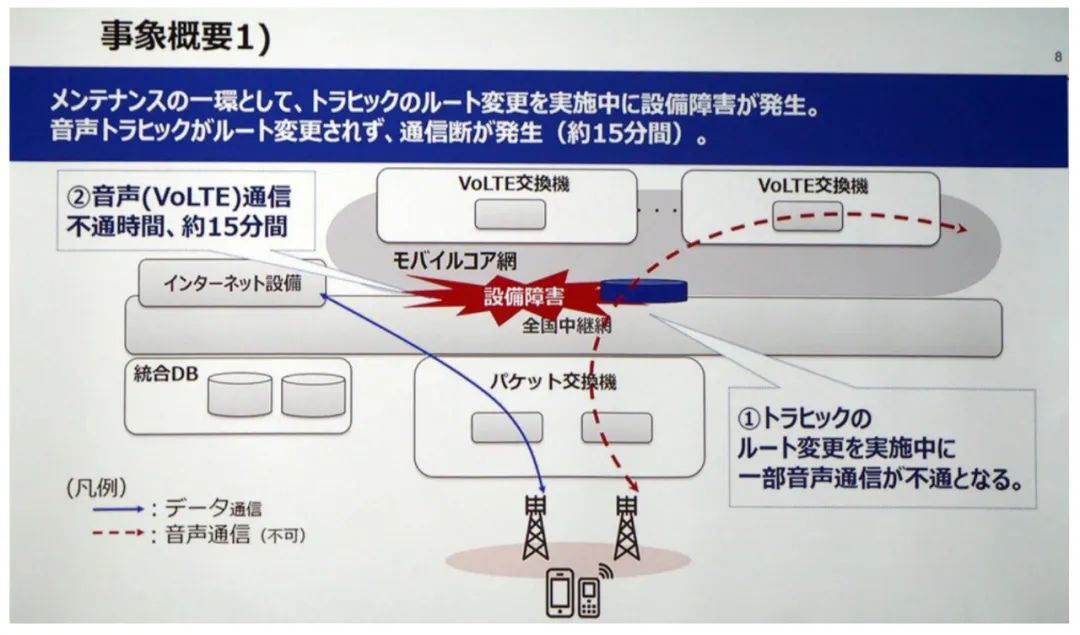

In the early morning of July 2, KDDI had engineers cut over a core router connecting the nationwide mobile core network and the relay network, replacing the old core router with a new product.

Unfortunately, every telecom engineer's worst nightmare came true: the cutover failed. While the core router was being replaced, the new router suffered an unexplained failure.

Everyone in telecom knows that the core router sits at the heart of the network and acts as the "transportation hub" for all of it. It is not only powerful and expensive, it must also run stably at all times. Once a problem occurs, it may affect the whole network and millions or even tens of millions of users.

For this reason, a core router cutover is like replacing the "heart" of a living person. It is a very challenging job, and it places extremely high demands on the maturity, stability, and interoperability of the new product.

But KDDI dropped the ball on exactly this kind of work that demands extreme caution, and the consequences were serious:

Because the new core router could not correctly route the voice traffic to the VoLTE switching node, some VoLTE voice services were interrupted for 15 minutes.

Fault cause 2: Signaling storm overwhelms VoLTE network

A core router cutover that fails is a scene hard to even imagine. You can feel the cold sweat through the screen!

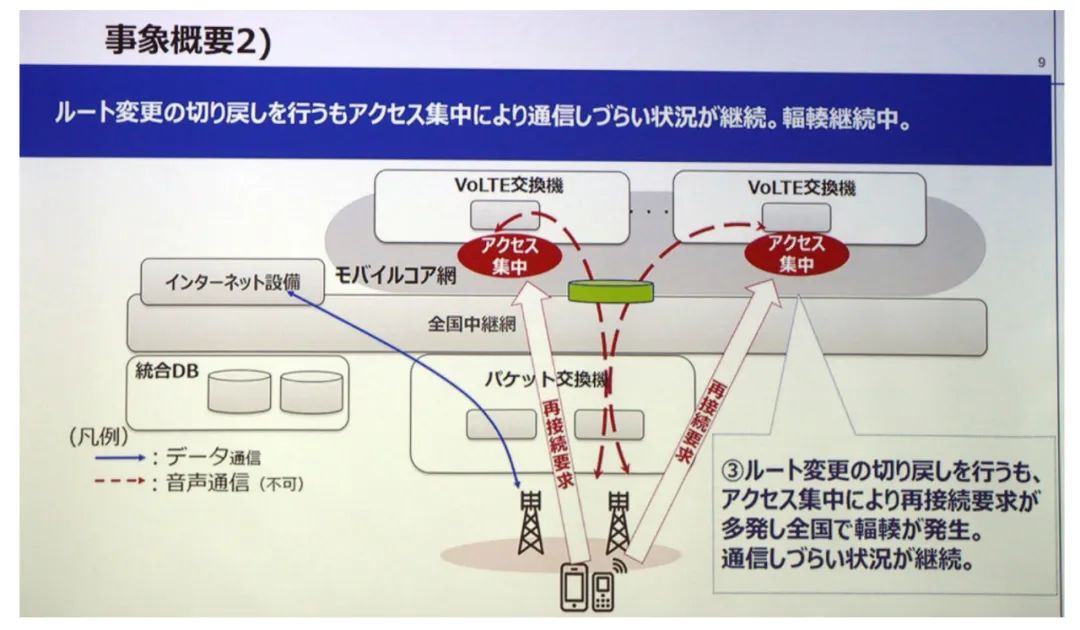

What to do? Roll back immediately. KDDI engineers quickly initiated a fallback, switching the connection back to the old core router at 1:50 a.m. on July 2.

But a bigger problem arose.

After the fallback, "since VoLTE terminals perform location registration every 50 minutes", a large number of terminals sent location registration signaling to the VoLTE switching nodes to reconnect to the network. This massive, concentrated burst of signaling quickly congested the VoLTE switching nodes, making VoLTE communication impossible for a large number of users.

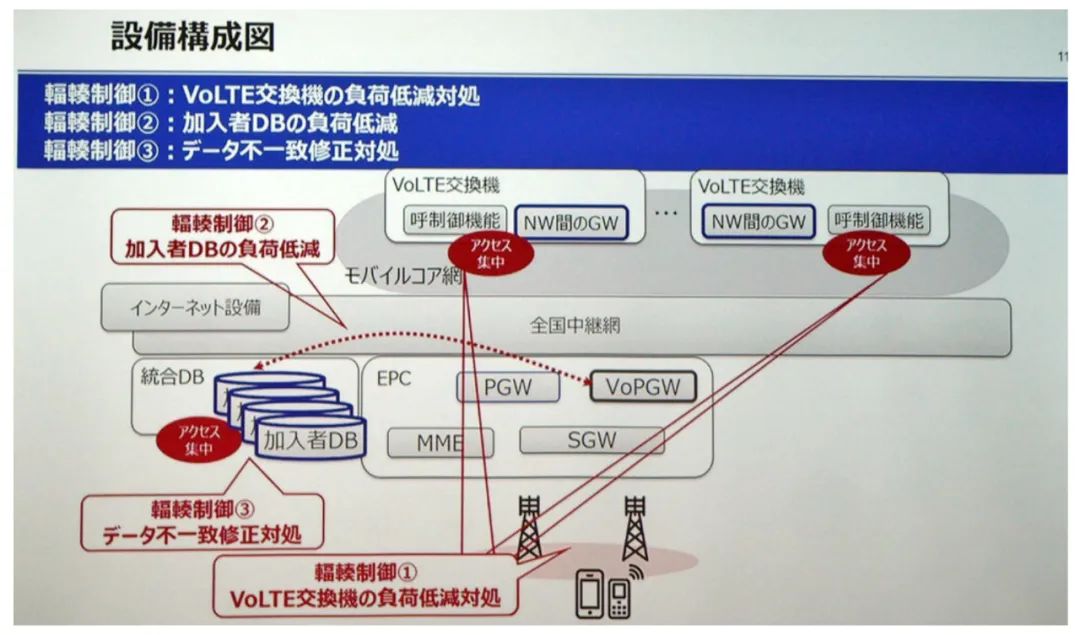
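To get a feel for why a concentrated burst of registrations is so much worse than the same load spread over time, here is a toy queue model. It is only a sketch: the terminal count and node capacity are made-up numbers, and the assumption that every terminal whose registration fell inside the 15-minute interruption retries in the same minute is a simplification, not something KDDI's report states. Only the 50-minute interval and the 15-minute interruption come from the text above.

```python
def simulate_backlog(arrivals_per_min, capacity_per_min):
    """Return the number of queued requests at the end of each minute.

    Requests the node cannot process within a minute stay queued. The model
    ignores timeouts and retries, which would make a real storm worse.
    """
    backlog = 0
    history = []
    for arrivals in arrivals_per_min:
        backlog = max(0, backlog + arrivals - capacity_per_min)
        history.append(backlog)
    return history


TERMINALS = 1_000_000       # hypothetical number of terminals on one node
PERIOD_MIN = 50             # registration interval quoted in the report
OUTAGE_MIN = 15             # length of the VoLTE interruption in the text
CAPACITY_PER_MIN = 40_000   # hypothetical processing capacity of the node

# Normal operation: registrations are spread evenly over the 50 minutes.
steady_rate = TERMINALS // PERIOD_MIN
steady = [steady_rate] * PERIOD_MIN

# Assumed storm: registrations missed during the outage all arrive in the
# first minute after the fallback, on top of the normal flow.
missed = steady_rate * OUTAGE_MIN
storm = [steady_rate + missed] + [steady_rate] * (PERIOD_MIN - 1)

steady_backlog = simulate_backlog(steady, CAPACITY_PER_MIN)
storm_backlog = simulate_backlog(storm, CAPACITY_PER_MIN)
drain_minutes = next(i + 1 for i, b in enumerate(storm_backlog) if b == 0)

print("peak backlog, steady:", max(steady_backlog))
print("peak backlog, storm: ", max(storm_backlog))
print("minutes to drain storm:", drain_minutes)
```

With these illustrative figures the node never queues anything in normal operation, yet the burst leaves a backlog of 280,000 requests that takes 15 minutes to clear, even though the node has twice the capacity it needs for the steady load.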

At the same time, the mobile network has a "user database" that stores users' subscription data and location information. Because the VoLTE switching nodes were congested, "the location information registered in the user database cannot be reflected on the VoLTE switch", which created a data mismatch. This too left many users unable to make calls or communicate.

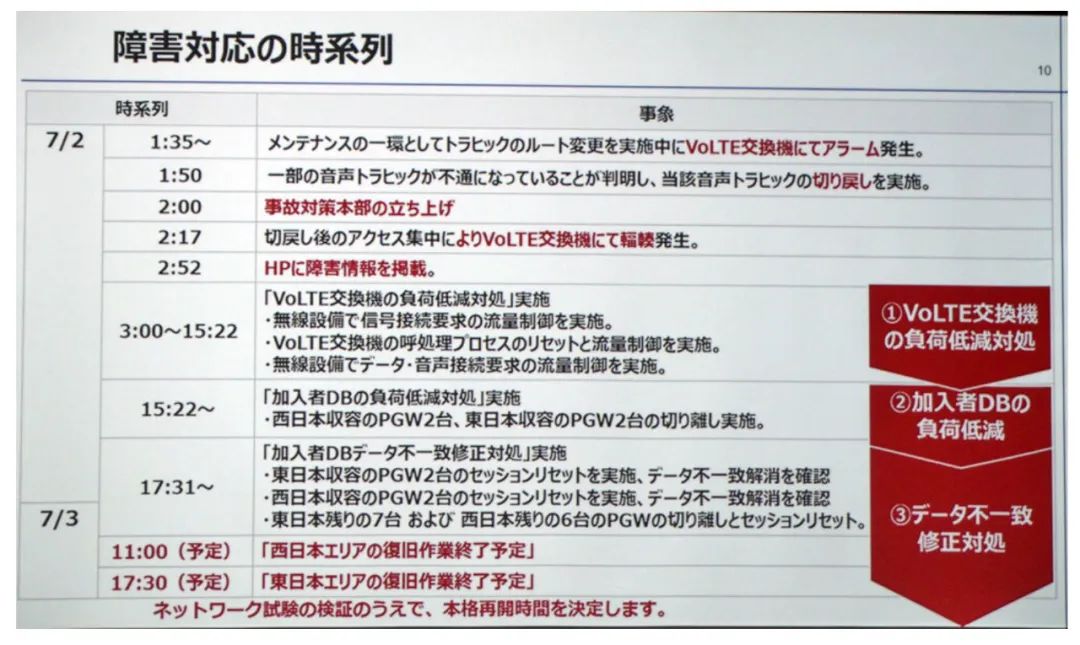

In response, after 3:00 a.m. on July 2, KDDI began applying flow control on both the wireless side and the VoLTE core network side, and reduced the load on the user database by disconnecting the PGW to relieve network congestion. It also used "session reset" measures to resolve the data inconsistencies in the user database.

The flow control, in turn, made it difficult to connect data sessions and voice calls nationwide.

KDDI then began intensive network recovery work. At 11:00 a.m. on July 3, KDDI announced that repair work in western Japan was basically complete. At 5:30 p.m., eastern Japan was basically complete as well. But some users still had difficulty with data communication and voice calls.
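The trade-off of flow control, protecting the overloaded nodes at the cost of turning users away, can be illustrated with a token bucket, a common generic rate-limiting technique. This is only a sketch of the idea: the report does not say which mechanism KDDI used, and the `TokenBucket` class, its parameters, and the traffic figures are all hypothetical.

```python
class TokenBucket:
    """Admit about `rate` requests per second, with bursts up to `burst`."""

    def __init__(self, rate, burst):
        self.rate = rate            # tokens added per second
        self.burst = burst          # maximum tokens the bucket can hold
        self.tokens = float(burst)  # start with a full bucket
        self.last = 0.0             # timestamp of the previous request

    def allow(self, now):
        # Refill tokens for the time elapsed since the previous request.
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True   # request is passed on to the core network
        return False      # request is rejected; the terminal must retry


# Hypothetical load: 1000 requests arrive evenly within one second, while
# the limiter admits roughly 100 per second with a burst allowance of 10.
bucket = TokenBucket(rate=100, burst=10)
results = [bucket.allow(now=i / 1000) for i in range(1000)]
admitted = sum(results)
rejected = len(results) - admitted

print("admitted:", admitted, "rejected:", rejected)
```

In this run only about a tenth of the requests get through. The nodes behind the limiter are shielded from the surge, but from the user's side the rejected requests look like exactly the connection difficulties described above.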

It was not until 4 p.m. on July 4, about 62 hours after the failure began, that KDDI said service had basically recovered nationwide.
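The 62-hour figure can be checked from the two timestamps given in this article. The snippet below is just that arithmetic. The year 2022 is an assumption added here, since the text gives only the month, day, and time.

```python
from datetime import datetime

# The year is an assumption; the article only states month, day and time.
start = datetime(2022, 7, 2, 1, 35)     # failure begins, 1:35 a.m. on July 2
restored = datetime(2022, 7, 4, 16, 0)  # nationwide recovery, 4 p.m. on July 4

elapsed = restored - start
hours, remainder = divmod(int(elapsed.total_seconds()), 3600)
minutes = remainder // 60

print(f"{hours} h {minutes} min")
```

The result is 62 hours and 25 minutes, which matches the rounded figure.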

Thought-provoking

This is not the first major network outage of this kind in Japan.

On October 14, 2021, the mobile network of another Japanese operator, NTT DoCoMo, also suffered a major nationwide outage that left a large number of mobile phone users unable to make calls or use data.

That accident was also triggered by a fallback operation after a failed cutover, which set off an explosion of signaling traffic and caused widespread network congestion.

Specifically, NTT DoCoMo ran into a problem while replacing the network equipment that stores user and location information for IoT devices, and immediately initiated a fallback to the old equipment.

However, the fallback caused a large number of IoT terminals to re-send location registration requests to the old equipment. The surging "signaling storm" quickly congested the network and hit the voice and packet-data core equipment of the 3G/4G/5G networks, leaving a large number of users unable to make calls or use data.

Unlike the NTT DoCoMo case, KDDI's failure this time was caused by a failed core router cutover, and it lasted much longer.

But it is worth mentioning that KDDI does not seem to have learned the lessons of DoCoMo.

KDDI has 6 switching centers throughout Japan with a total of 18 VoLTE switching nodes, and the VoLTE switching nodes in the switching centers back each other up. In this incident, the core router cutover interrupted VoLTE service only at the VoLTE switching nodes of one switching center.

"We have done stress tests, because there are redundant backups, even if all terminals within the range of a switching center initiate reconnection requests at the same time, there will be no congestion."

KDDI said, "But for some reason, it turned out to be congestion, and we haven't fully figured out what went wrong."

Hopefully KDDI can eventually identify all the causes of this accident. I also hope the communications industry will not repeat the same mistakes, because the words "major network failure" are truly dreadful for this industry.