Three strategies for deploying machine learning models in MLOps: batch processing, real-time processing, and edge computing

Batch Processing

Batch deployment suits scenarios where real-time decisions are not required and large amounts of data must be processed at scheduled intervals. Instead of reacting to each new data point as it arrives, the model runs at predetermined times over a batch of data collected during the preceding period. Common use cases include nightly risk assessment, customer segmentation, and predictive maintenance.

Advantages:

Batch processing can be scheduled during off-peak hours, optimizing computing resources and reducing costs. It is easier to implement and manage than real-time systems because it does not require continuous data ingestion and instant response capabilities.

It can also handle large data sets, making it ideal for applications such as data warehousing, reporting, and offline analysis.
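To make off-peak scheduling concrete, here is a minimal sketch that computes when the next nightly batch run should start. The 02:00 start time and the function name `next_off_peak_run` are illustrative assumptions; in practice a scheduler such as cron or a workflow orchestrator would usually trigger the job.

```python
from datetime import datetime, time, timedelta


def next_off_peak_run(now: datetime, start: time = time(hour=2)) -> datetime:
    """Return the next datetime at which an off-peak batch job should start.

    Assumption: the off-peak window begins at `start` (02:00 by default).
    The result keeps the same timezone (or lack of one) as `now`.
    """
    candidate = datetime.combine(now.date(), start, tzinfo=now.tzinfo)
    # If today's start time has already passed, schedule the run for tomorrow
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    print(next_off_peak_run(datetime(2024, 5, 1, 14, 30)))  # 2024-05-02 02:00:00
```

A job runner would sleep until the returned time, run the batch, and then ask for the next slot again.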

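Disadvantages:

Results are only as fresh as the most recent run, so there is an inherent delay between when data arrives and when predictions become available. This makes batch processing unsuitable for time-sensitive decisions.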

Use cases:

Fraud Detection: Identifying fraudulent transactions by analyzing historical data.

Predictive Maintenance: Scheduling maintenance tasks based on patterns observed in collected data.

Market Analysis: Analyzing historical sales data to uncover insights and trends.

Example:

Suppose we want to analyze the sentiment of customer reviews on an e-commerce platform. We use a pre-trained sentiment analysis model and periodically apply it to batches of reviews.

```python
import pandas as pd
from transformers import pipeline

# Load pre-trained sentiment analysis model
sentiment_pipeline = pipeline("text-classification", model="distilbert-base-uncased-finetuned-sst-2-english")

# Load customer reviews data, skipping rows without review text
reviews_data = pd.read_csv("customer_reviews.csv")
reviews_data = reviews_data.dropna(subset=["review_text"])

# Perform sentiment analysis in batches
batch_size = 1000
for i in range(0, len(reviews_data), batch_size):
    # .iloc slices by position, which stays correct after dropna() leaves gaps in the index
    batch_reviews = reviews_data["review_text"].iloc[i:i + batch_size].astype(str).tolist()
    # truncation=True keeps long reviews within the model's maximum input length
    batch_sentiments = sentiment_pipeline(batch_reviews, truncation=True)

    # Process and store batch results (printed here for illustration)
    for review, sentiment in zip(batch_reviews, batch_sentiments):
        print(f"Review: {review}\nSentiment: {sentiment['label']}\n")
```

We read the customer review data from a CSV file and process the reviews in batches of 1,000. For each batch, the sentiment analysis pipeline predicts the sentiment (positive or negative) of each review, and the results can then be processed and stored as needed.

The actual output will depend on the contents of the customer_reviews.csv file and the performance of the pre-trained sentiment analysis model.
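The loop above prints each result. A sketch of the "store" step, assuming the CSV may be too large to load at once, could read it in chunks with `pandas.read_csv(..., chunksize=...)` and append predictions to an output file. The function name `score_reviews_in_chunks` and its `predict_fn` argument are hypothetical: `predict_fn` stands in for the model as any callable that maps a list of texts to a list of labels, and the `review_text` column name is taken from the example above.

```python
from typing import Callable, List

import pandas as pd


def score_reviews_in_chunks(
    input_csv: str,
    output_csv: str,
    predict_fn: Callable[[List[str]], List[str]],
    chunk_size: int = 1000,
    text_column: str = "review_text",
) -> int:
    """Score a CSV chunk by chunk and append the results to output_csv.

    `predict_fn` is a hypothetical stand-in for the model. Returns the
    number of rows that were scored and written.
    """
    total = 0
    first_chunk = True
    for chunk in pd.read_csv(input_csv, chunksize=chunk_size):
        # Skip rows with missing text so the model only sees strings
        chunk = chunk.dropna(subset=[text_column])
        if chunk.empty:
            continue
        texts = chunk[text_column].astype(str).tolist()
        chunk = chunk.assign(sentiment=predict_fn(texts))
        # Write the header once, then append later chunks to the same file
        chunk.to_csv(output_csv, mode="w" if first_chunk else "a",
                     header=first_chunk, index=False)
        first_chunk = False
        total += len(chunk)
    return total
```

With the pipeline from the example above, `predict_fn` could be `lambda texts: [r["label"] for r in sentiment_pipeline(texts, truncation=True)]`. Only one chunk is held in memory at a time, which is what lets a batch job scale to files larger than RAM.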

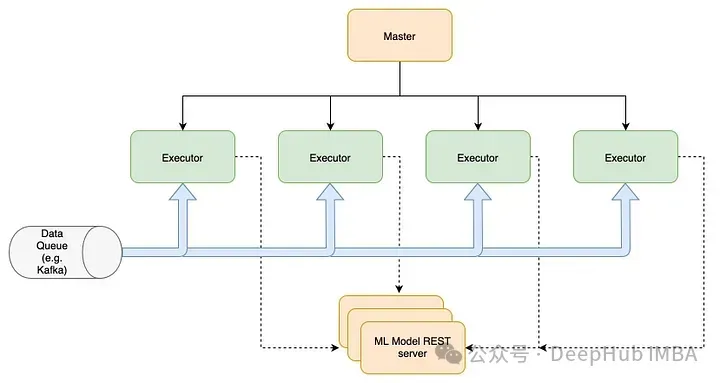

Real-time processing

Real-time deployment processes data as it arrives and returns predictions almost instantly, enabling immediate action. This makes it essential for applications that require an immediate response, such as fraud detection, dynamic pricing, and real-time personalization.

Advantages:

It provides instant feedback, which is critical for time-sensitive applications that need decisions within milliseconds to seconds. It supports dynamic, responsive interactions in applications that serve end users directly, returning responses without perceptible delay, which can improve user engagement and retention. It can also respond quickly to emerging trends or issues, improving operational efficiency and risk management.

Disadvantages:

It requires robust, scalable infrastructure to meet potentially high throughput and low-latency demands, and ensuring uptime and consistent performance can be challenging and costly.
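Because real-time services are usually judged by tail latency rather than average latency, a common first check is to measure the latency distribution of the prediction call against a budget. The sketch below times an arbitrary `predict` callable and reports the 50th and 99th percentiles using the nearest-rank method; the function name `latency_report` and the 100 ms default budget are illustrative assumptions.

```python
import math
import time
from typing import Any, Callable, Iterable


def latency_report(predict: Callable[[Any], Any], inputs: Iterable[Any],
                   budget_ms: float = 100.0) -> dict:
    """Time predict(x) for each input and summarize latency in milliseconds.

    The 100 ms default budget is an assumption; set it from your service-level objective.
    """
    samples = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    if not samples:
        raise ValueError("need at least one input to measure")
    samples.sort()

    def percentile(p: float) -> float:
        # Nearest-rank method: smallest sample with at least p% of samples at or below it
        rank = max(1, math.ceil(p / 100.0 * len(samples)))
        return samples[rank - 1]

    p99 = percentile(99)
    return {"p50_ms": percentile(50), "p99_ms": p99, "within_budget": p99 <= budget_ms}
```

Running a few warm-up calls before measuring avoids counting one-time costs such as model initialization in the reported percentiles.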

Use cases:

Customer Support: Chatbots and virtual assistants that provide instant responses to user queries.

Financial Trading: Algorithmic trading systems that make split-second decisions based on real-time market data.

Smart Cities: Using real-time data for traffic management and public safety monitoring.

Example:

Suppose we want to perform real-time fraud detection on financial transactions. To do this, we deploy a pre-trained fraud detection model and expose it as a web service.

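```python
import numpy as np
from flask import Flask, request, jsonify
from tensorflow.keras.models import load_model

# Load pre-trained fraud detection model
model = load_model("fraud_detection_model.h5")

# Assumed decision threshold for a model with a single sigmoid (probability) output
FRAUD_THRESHOLD = 0.5

# Create Flask app
app = Flask(__name__)


@app.route('/detect_fraud', methods=['POST'])
def detect_fraud():
    data = request.get_json(silent=True)
    if not data or 'transaction_data' not in data:
        return jsonify({'error': "request body must include 'transaction_data'"}), 400
    # Convert the features to a single-row float batch for the model
    transaction_data = np.array(data['transaction_data'], dtype=np.float32).reshape(1, -1)
    prediction = model.predict(transaction_data, verbose=0)
    # Compare the probability with the threshold; bool() would be True for any non-zero value
    fraud_probability = float(prediction[0][0])
    return jsonify({'is_fraud': fraud_probability >= FRAUD_THRESHOLD,
                    'fraud_probability': fraud_probability})


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=8080)
```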

In this example, we use TensorFlow (Keras) to load a pre-trained fraud detection model, then create a Flask web application with a /detect_fraud endpoint that accepts JSON data containing transaction details. For each incoming request, the data is preprocessed, passed to the model, and the service returns a JSON response indicating whether the transaction is fraudulent.
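The endpoint above converts the raw list straight into the model input. A sketch of a fuller preprocessing step, assuming the model was trained on a fixed number of numeric features standardized with a known per-feature mean and standard deviation, could validate the payload and scale it before prediction. `N_FEATURES`, `FEATURE_MEAN`, `FEATURE_STD`, and `preprocess_transaction` are hypothetical; in a real service the statistics would be saved alongside the model at training time.

```python
import numpy as np

# Hypothetical training-time statistics; load the real values saved with the model
N_FEATURES = 3
FEATURE_MEAN = np.array([50.0, 1.0, 12.0], dtype=np.float32)
FEATURE_STD = np.array([25.0, 0.5, 6.0], dtype=np.float32)


def preprocess_transaction(payload: dict) -> np.ndarray:
    """Validate a JSON payload and return a standardized (1, N_FEATURES) batch.

    Raises ValueError for malformed input so the endpoint can return HTTP 400.
    """
    if not isinstance(payload, dict) or "transaction_data" not in payload:
        raise ValueError("payload must contain 'transaction_data'")
    try:
        features = np.asarray(payload["transaction_data"], dtype=np.float32)
    except (TypeError, ValueError) as exc:
        raise ValueError("transaction_data must be numeric") from exc
    if features.shape != (N_FEATURES,):
        raise ValueError(f"expected {N_FEATURES} features, got shape {features.shape}")
    if not np.all(np.isfinite(features)):
        raise ValueError("transaction_data contains NaN or infinite values")
    # Standardize with the same statistics used during training
    return ((features - FEATURE_MEAN) / FEATURE_STD).reshape(1, -1)
```

Inside `detect_fraud`, one could call `preprocess_transaction(request.get_json(silent=True))`, return a 400 response on `ValueError`, and pass the result to `model.predict`. Applying exactly the training-time transformation is what keeps online predictions consistent with offline evaluation.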

To keep the service responsive under varying load, it is typically packaged with a containerization tool such as Docker and deployed on a cloud platform or dedicated servers, where resources can be scheduled and scaled automatically.
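Automatic scaling is usually driven by a simple proportional rule. For instance, the Kubernetes Horizontal Pod Autoscaler computes the desired replica count as ceil(currentReplicas × currentMetricValue / desiredMetricValue) and skips scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that rule to requests per second per replica; the function name, the metric, and the min/max bounds are assumptions, and a real autoscaler also applies stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_rps_per_replica: float,
                     target_rps_per_replica: float, min_replicas: int = 1,
                     max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Proportional scaling rule modeled on the Kubernetes HPA formula.

    Simplified sketch: no stabilization window; bounds and metric are assumptions.
    """
    if current_replicas < 1 or target_rps_per_replica <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_rps_per_replica / target_rps_per_replica
    if abs(ratio - 1.0) <= tolerance:
        # Small deviations are ignored to avoid flapping between sizes
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas each handling 150 requests per second against a target of 100 would scale out to 6.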

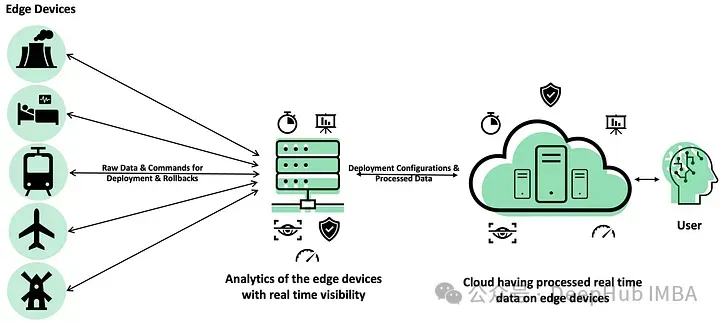

Edge computing

Edge deployment involves running machine learning models on devices at the edge of the network, close to where the data is generated. Processing data locally instead of sending it to a centralized server reduces latency and bandwidth usage. This approach is used when sending data to a central server would be too slow or the data is too sensitive to transmit, for example in self-driving cars and smart cameras.

Advantages:

Processing data locally reduces the need to transmit data back to central servers, saving bandwidth and reducing costs. Processing data close to the source also minimizes latency, which is ideal for applications that require fast response times.

Edge devices can keep operating without network connectivity, ensuring continued functionality even in remote or unstable environments. Sensitive data also stays on the device, minimizing exposure and compliance risks.
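One way an edge device saves bandwidth and keeps raw data local is to run inference on-device and upload only a compact summary. The sketch below assumes per-frame detections are dictionaries with `class` and `score` keys (an assumption, as is the function name `summarize_detections`) and aggregates a window of frames into counts of confident detections per class.

```python
from collections import Counter
from typing import Dict, Iterable, List


def summarize_detections(frames: Iterable[List[dict]], min_score: float = 0.5) -> Dict[str, int]:
    """Count confident detections per class across a window of frames.

    Assumption: each detection is a dict with 'class' and 'score' keys.
    Only this small dictionary would be sent upstream, not the frames themselves.
    """
    counts: Counter = Counter()
    for detections in frames:
        for det in detections:
            if det["score"] >= min_score:
                counts[det["class"]] += 1
    return dict(counts)
```

A summary like `{"person": 2}` is a few bytes, whereas the frames it was computed from would be orders of magnitude larger.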

Disadvantages:

Edge devices usually have less processing power than servers, which may limit the complexity of the models that can be deployed. Deploying and updating models across many edge devices can also be technically challenging, especially when it comes to version management.
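Version management across a fleet often comes down to each device comparing its installed model with a published manifest and verifying a download before swapping it in. A minimal sketch, assuming a manifest with hypothetical `version` and `sha256` fields and dotted integer versions such as "1.4.2":

```python
import hashlib
import os
from pathlib import Path


def parse_version(version: str) -> tuple:
    """Turn '1.4.2' into (1, 4, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def needs_update(installed_version: str, manifest: dict) -> bool:
    """True if the (hypothetical) manifest advertises a newer model version."""
    return parse_version(manifest["version"]) > parse_version(installed_version)


def verify_model_file(path: Path, manifest: dict) -> bool:
    """Check a downloaded model file against the manifest's SHA-256 digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in 1 MiB blocks so large models do not need to fit in memory
        for block in iter(lambda: f.read(1 << 20), b""):
            digest.update(block)
    return digest.hexdigest() == manifest["sha256"]


def install_if_valid(downloaded: Path, target: Path, manifest: dict) -> bool:
    """Replace the active model only if the download verifies."""
    if not verify_model_file(downloaded, manifest):
        return False
    # On POSIX, os.replace is atomic when both paths are on the same filesystem
    os.replace(downloaded, target)
    return True
```

Comparing parsed tuples rather than strings avoids treating "1.10.0" as older than "1.9.3", and the checksum check keeps a truncated or corrupted download from replacing a working model.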

Use cases:

Industrial Internet of Things: Real-time monitoring of machinery in manufacturing plants.

Healthcare: Wearable devices analyze health indicators and provide instant feedback to users.

Self-driving cars: On-board sensor data processing for real-time navigation and decision making.

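As a simple example, we perform real-time object detection on an edge device, using the TensorFlow Lite framework to run a pre-trained object detection model optimized for mobile and embedded hardware. The Python script uses the tflite_runtime package, which targets Linux-based devices such as a Raspberry Pi; on Android, the same model would be deployed through TensorFlow Lite's Android APIs.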

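```python
import cv2
import numpy as np
import tflite_runtime.interpreter as tflite

# Load TensorFlow Lite model
interpreter = tflite.Interpreter(model_path="object_detection_model.tflite")
interpreter.allocate_tensors()

# Get input and output tensors
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()
_, input_height, input_width, _ = input_details[0]['shape']
SCORE_THRESHOLD = 0.5  # assumed confidence cutoff; tune for your model


def preprocess_image(image):
    # Resize the BGR camera frame to the model's input size and convert to RGB
    resized = cv2.resize(image, (int(input_width), int(input_height)))
    input_data = np.expand_dims(cv2.cvtColor(resized, cv2.COLOR_BGR2RGB), axis=0)
    if input_details[0]['dtype'] == np.float32:
        # Assumption: float models expect pixels scaled to [-1, 1]
        input_data = (input_data.astype(np.float32) - 127.5) / 127.5
    return input_data.astype(input_details[0]['dtype'])


def postprocess_output(boxes, classes, scores, frame_height, frame_width):
    # Assumption: SSD-style outputs with normalized [ymin, xmin, ymax, xmax] boxes
    objects = []
    for (ymin, xmin, ymax, xmax), cls, score in zip(boxes, classes, scores):
        if score >= SCORE_THRESHOLD:
            objects.append({
                'bbox': (int(xmin * frame_width), int(ymin * frame_height),
                         int(xmax * frame_width), int(ymax * frame_height)),
                'class': str(int(cls)),  # map class IDs to names with your label file
            })
    return objects


# Function to perform object detection on an image
def detect_objects(image):
    # Preprocess input image and set input tensor
    interpreter.set_tensor(input_details[0]['index'], preprocess_image(image))
    # Run inference
    interpreter.invoke()
    # Assumption: the first three outputs are boxes, class IDs, and scores
    boxes, classes, scores = (interpreter.get_tensor(output_details[i]['index'])[0] for i in range(3))
    # Postprocess output and return detected objects in frame pixel coordinates
    height, width = image.shape[:2]
    return postprocess_output(boxes, classes, scores, height, width)


# Main loop for capturing and processing camera frames
cap = cv2.VideoCapture(0)
while True:
    ret, frame = cap.read()
    if not ret:
        break  # stop if the camera returns no frame
    objects = detect_objects(frame)
    # Draw bounding boxes and labels on the frame
    for obj in objects:
        x1, y1, x2, y2 = obj['bbox']
        cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
        cv2.putText(frame, obj['class'], (x1, y1 - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (36, 255, 12), 2)
    cv2.imshow('Object Detection', frame)
    # Press 'q' to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```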

The script loads a pre-trained object detection model in TensorFlow Lite format, which is optimized for mobile and embedded devices.

In the main loop, frames are continuously captured from the device's camera and passed to the detect_objects function, and bounding boxes and labels are drawn on each frame for the detected objects. The processed frames are then displayed on the device's screen: bounding boxes are drawn in green, and each object's label appears just above the top-left corner of its bounding box. Pressing q exits the loop.

The same model and processing logic can be integrated into Android or iOS applications using the corresponding TensorFlow Lite APIs and libraries.

Choose the right deployment strategy

Choosing the right machine learning model deployment strategy is key to ensuring efficiency and cost-effectiveness. Here are some of the main factors to consider when deciding on a deployment strategy:

1. Response time requirements

- Real-time deployment: Suitable when the application requires instant feedback, such as online recommendation systems, fraud detection, or automated trading systems.

- Batch deployment: Suitable when processing tasks can tolerate delays, such as nightly batch processing for data warehouses and large-scale report generation.

2. Data privacy and security

- Edge deployment: Ideal when data privacy is a major concern or regulations require that data not leave the local device.

- Centralized deployment: An option when the data is not highly sensitive or can be processed in the cloud with appropriate security measures.

3. Available resources and infrastructure

- Resource-limited environments: Edge devices usually have limited computing power and are best suited to simplified or lightweight models.

- Resource-rich environments: Cloud environments with powerful computing resources are suitable for real-time or large-scale batch deployments.

4. Cost considerations

- Cost-sensitive: Batch processing reduces the demand for real-time computing resources, thereby reducing costs.

- Return on investment: Real-time systems, while costly, may provide a higher return on investment due to their fast response times.

5. Maintenance and scalability

- Easy maintenance: Batch systems are relatively easy to maintain because their workload is predictable.

- High scalability required: Real-time systems must handle sudden traffic spikes, which calls for more complex management and automatic scaling.

6. User experience

- Direct interaction with users: Applications where instant responses improve the user experience, such as personalization features in mobile apps, are better suited to real-time deployment.

- Background processing: Batch processing is more appropriate when users do not directly experience processing delays, such as in data analysis and reporting.

By weighing these factors against your specific application scenario and business needs, you can choose the most suitable deployment strategy, which helps optimize performance, control costs, and improve overall efficiency.
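Some of these factors can be condensed into a rough rule of thumb. The sketch below encodes one possible priority order (privacy and connectivity constraints first, then response time) as a function; the `Requirements` fields, the assumed nightly batch interval, and the ordering are illustrative assumptions rather than a definitive decision procedure, and cost, resources, and maintenance still need human judgment.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    max_latency_seconds: float              # how long a prediction may take to arrive
    data_must_stay_on_device: bool = False  # privacy or regulatory constraint
    reliable_connectivity: bool = True      # can devices reach a server dependably?
    batch_interval_seconds: float = 24 * 3600  # assumed nightly batch schedule


def suggest_strategy(req: Requirements) -> str:
    """Return 'edge', 'real-time', or 'batch' using an illustrative priority order."""
    # Privacy rules or unreliable networks rule out central processing
    if req.data_must_stay_on_device or not req.reliable_connectivity:
        return "edge"
    # Results needed sooner than the next scheduled batch require an online service
    if req.max_latency_seconds < req.batch_interval_seconds:
        return "real-time"
    # Otherwise results can wait for the scheduled batch job
    return "batch"
```

For example, a fraud check that must answer within 50 ms on a connected server maps to "real-time", while a report that may take two days maps to "batch".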

Summary

Understanding the differences and applications of batch, real-time, and edge deployment strategies is fundamental to optimizing MLOps. Each approach offers unique advantages tailored to specific use cases, and by evaluating the needs and constraints of your application, you can choose the deployment strategy that best meets your goals, paving the way for successful AI integration and utilization.