DiffMap: The first network to use LDM to enhance HD map construction

01 Background

For autonomous vehicles, high-definition (HD) maps improve both the accuracy of environment perception and the precision of navigation. However, manual mapping is complex and costly. To address this, recent studies integrate map construction into the BEV (bird's-eye view) perception task and treat the construction of a rasterized HD map in BEV space as a segmentation task, which can be understood as adding a segmentation head similar to an FCN (fully convolutional network) after the BEV features are obtained. For example, HDMapNet encodes sensor features through LSS (Lift, Splat, Shoot) and then uses a multi-branch FCN for semantic segmentation, instance detection, and direction prediction to build a map.

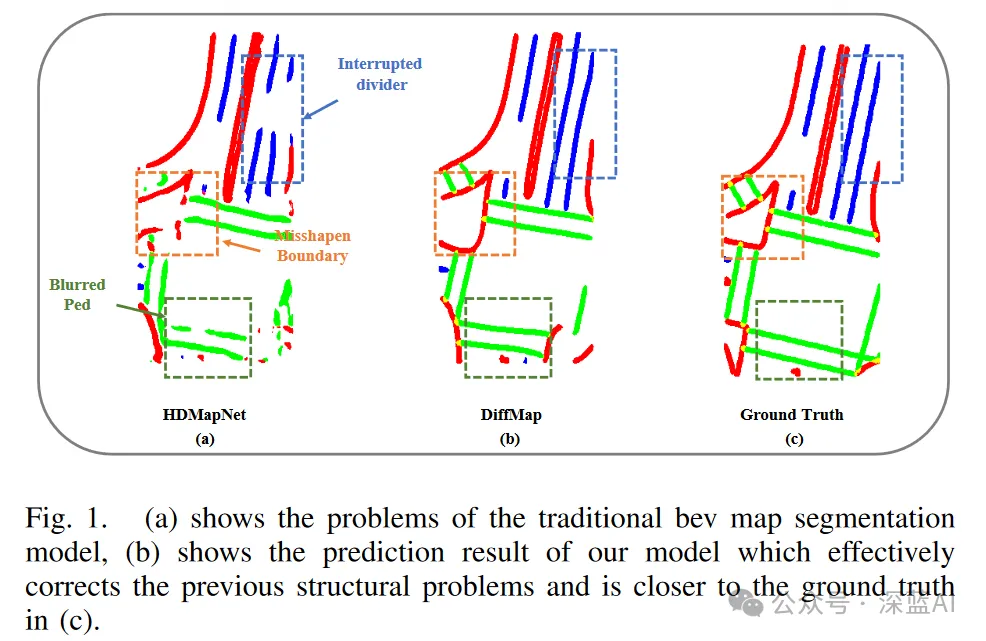

However, such pixel-based classification methods still have inherent limitations. In particular, they may ignore category-specific attributes, which can lead to distorted and interrupted lane dividers, blurred pedestrian crosswalks, and other artifacts and noise, as shown in Figure 1 (a). These problems not only degrade the structural accuracy of the map but may also directly affect the downstream path planning module of the autonomous driving system.

▲Figure 1|Comparison of HDMapNet, DiffMap and GroundTruth

Therefore, it is best for the model to take into account the structural prior information of the HD map, such as the parallelism and straightness of lane lines. Some generative models have such capabilities in capturing the authenticity and inherent characteristics of images. For example, LDM (latent diffusion model) has shown great potential in high-fidelity image generation and has demonstrated its effectiveness in tasks related to segmentation enhancement. In addition, the generation of images can be further guided to meet specific control requirements by introducing control variables. Therefore, applying generative models to capture map structural priors is expected to reduce segmentation artifacts and improve map construction performance.

In this paper, the authors propose the DiffMap network. It is the first to use a modified LDM as an enhancement module that models the structured map prior for existing segmentation models, and it supports plug-and-play use. DiffMap not only learns the map prior through the process of adding and removing noise, but also integrates BEV features as a control signal so that the output matches the current frame observation. Experimental results show that DiffMap generates smoother and more reasonable map segmentation results while greatly reducing artifacts and improving overall map construction performance.

02 Related Work

2.1 Semantic Map Construction

In traditional high-definition (HD) map construction, semantic maps are usually manually or semi-automatically annotated based on LiDAR point clouds. Generally, SLAM-based algorithms are used to build globally consistent maps, and semantic annotations are manually added to the maps. However, this method is time-consuming and labor-intensive, and there are also great challenges in updating maps, which limits its scalability and real-time performance.

HDMapNet proposed a method to dynamically build local semantic maps using on-board sensors. It encodes LiDAR point cloud and surround-view image features into bird's-eye view (BEV) space and decodes them using three different heads, ultimately producing a vectorized local semantic map. SuperFusion focuses on building long-range high-precision semantic maps, using LiDAR depth information to enhance image depth estimation and using image features to guide long-range LiDAR feature prediction. A map detection head similar to that of HDMapNet is then used to obtain the semantic map. MachMap divides the task into polyline detection and polygon instance segmentation, and uses post-processing to refine the masks to obtain the final result. Subsequent research focuses on end-to-end online mapping to directly obtain vectorized HD maps. Building semantic maps dynamically without manual annotation effectively reduces construction costs.

2.2 Diffusion Model for Segmentation and Detection

Denoising Diffusion Probabilistic Models (DDPMs) are a class of generative models based on Markov chains. They have shown excellent performance in areas such as image generation and have gradually been extended to tasks such as segmentation and detection. SegDiff applies the diffusion model to image segmentation, where the UNet encoder is further decoupled into three modules: E, F, and G. Modules G and F encode the input image I and the segmentation map, respectively, and their outputs are combined by addition in E to iteratively refine the segmentation map. DDPMS uses a base segmentation model to generate an initial prediction prior and refines the prior using a diffusion model. DiffusionDet extends the diffusion model to the object detection framework, modeling object detection as a denoising diffusion process from noisy boxes to object boxes.

Diffusion models are also used in the field of autonomous driving, such as MagicDrive, which uses geometric constraints to synthesize street scenes, and MotionDiffuser, which extends the diffusion model to multi-agent motion prediction problems.

2.3 Map Priors

There are currently several methods that enhance model robustness and reduce the uncertainty of on-board sensors by leveraging prior information, including explicit standard-definition (SD) map information and implicit temporal information. MapLite2.0 starts with an SD prior map and combines on-board sensors to infer local HD maps in real time. MapEx and SMERF use SD map data to improve lane perception and topology understanding. SMERF uses a Transformer-based SD map encoder to encode lane lines and lane types, and then computes cross-attention between the SD map information and sensor-based bird's-eye view (BEV) features to integrate the SD map information. NMP provides long-term memory capabilities for autonomous vehicles by combining past map prior data with current perception data. MapPrior combines discriminative and generative models: during the prediction phase, it encodes preliminary predictions from existing models as priors, injects them into the discrete latent space of the generative model, and then uses the generative model to refine the prediction. PreSight uses data from previous trips to optimize city-scale neural radiance fields, generate neural priors, and enhance online perception in subsequent navigation.

03 Method Analysis

3.1 Preliminaries

3.2 Overall Architecture

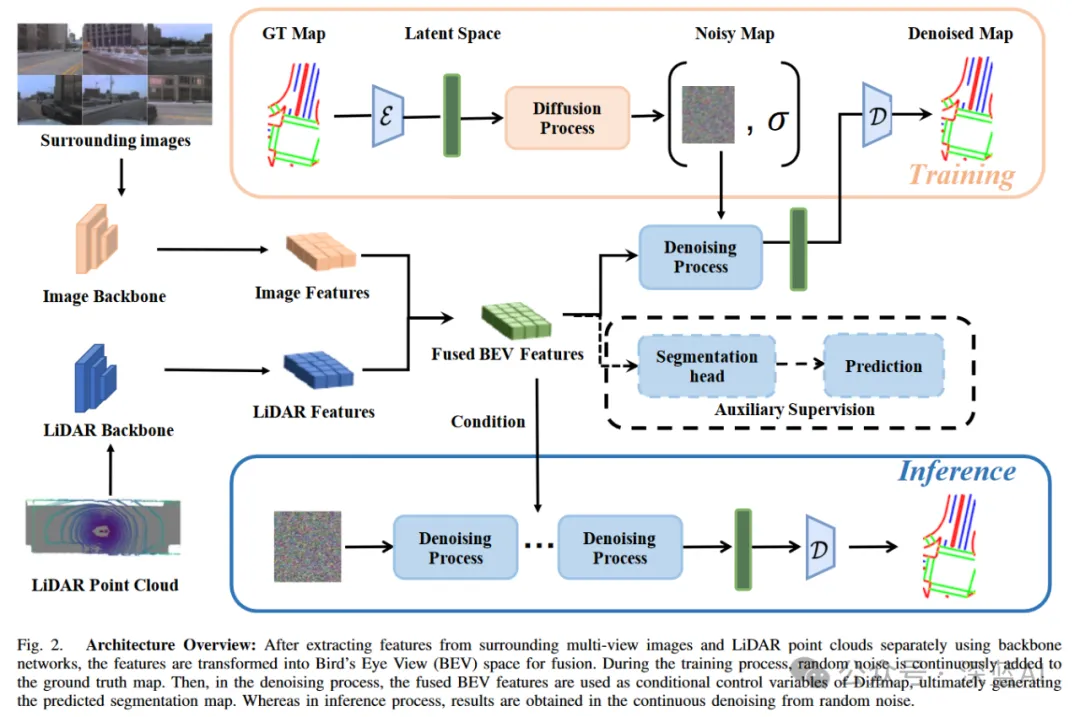

As shown in Figure 2, DiffMap incorporates the diffusion model into the semantic map segmentation model as a decoder. The model takes surrounding multi-view images and LiDAR point clouds as input, encodes them into BEV space, and obtains fused BEV features. DiffMap then decodes these features into the segmentation map. Inside the DiffMap module, the BEV features are used as conditions to guide the denoising process.

▲Figure 2|DiffMap Architecture©️【Deep Blue AI】Compilation

◆Baseline for semantic map construction: The baseline mainly follows the BEV encoder-decoder paradigm. The encoder part is responsible for extracting features from the input data (LiDAR and/or camera data) and converting them into high-dimensional representations. At the same time, the decoder usually acts as a segmentation head to map the high-dimensional feature representation to the corresponding segmentation map. The baseline plays two main roles in the whole framework: supervisor and controller. As a supervisor, the baseline generates segmentation results as auxiliary supervision. At the same time, as a controller, it provides intermediate BEV features as conditional control variables to guide the generation process of the diffusion model.

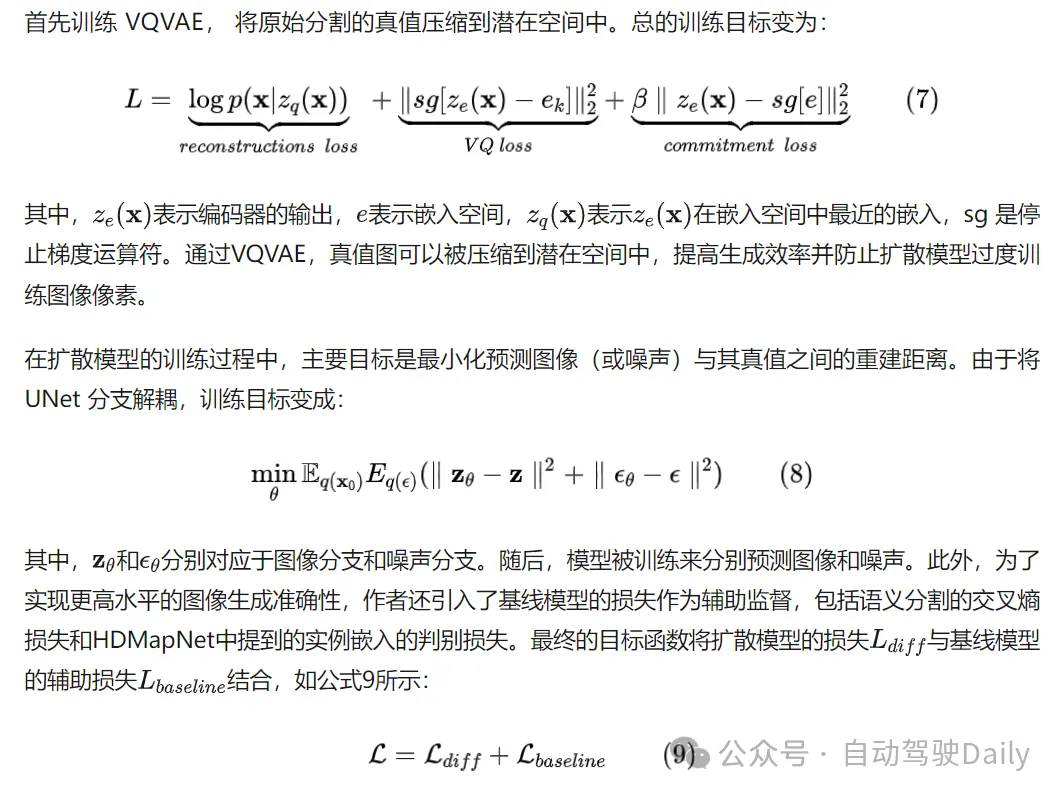

◆DiffMap module: Following LDM, the authors introduce the DiffMap module as a decoder in the baseline framework. LDM mainly consists of two parts: a perceptual image compression module (such as VQVAE) and a diffusion model built on UNet. First, the encoder encodes the map segmentation ground truth into a low-dimensional latent space. Diffusion and denoising are then performed in this latent space, and the decoder finally restores the latent representation to the original pixel space.

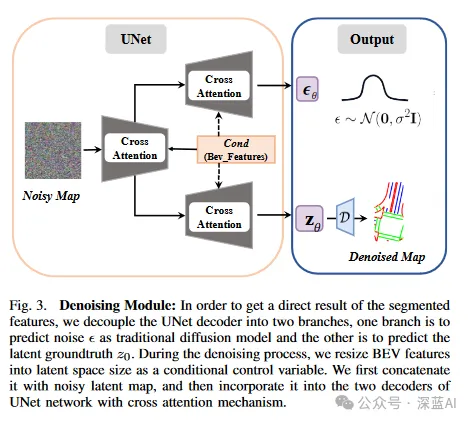

First, noise is added through a diffusion process to obtain a noisy latent map at each time step. Then, in the denoising process, UNet is used as the backbone network for noise prediction. To strengthen the supervision of the segmentation results, and so that the DiffMap model directly provides semantic features for instance-related predictions during training, the authors divide the UNet structure into two branches: one predicts the noise, as in a traditional diffusion model, and the other predicts the latent map in the latent space.
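For reference, the noising step is usually written in the standard DDPM form below. This is a sketch under assumed notation, not necessarily the paper's own: $z_0$ is the clean latent map produced by the encoder, $z_t$ is the noisy latent at time step $t$, and $\beta_t$ is the noise schedule.

```latex
\begin{aligned}
q(z_t \mid z_0) &= \mathcal{N}\!\left(z_t;\ \sqrt{\bar{\alpha}_t}\, z_0,\ (1-\bar{\alpha}_t)\, I\right),
\qquad \bar{\alpha}_t = \prod_{s=1}^{t} (1-\beta_s) \\
z_t &= \sqrt{\bar{\alpha}_t}\, z_0 + \sqrt{1-\bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I)
\end{aligned}
```

Under this form, a network that predicts $\epsilon$ and a network that predicts $z_0$ carry equivalent information, which is consistent with splitting the UNet into a noise branch and a latent-map branch.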

As shown in Figure 3, after the latent map prediction is obtained, it is decoded into the original pixel space as a semantic feature map. Instance predictions are then obtained from it following the method proposed by HDMapNet, which outputs predictions from three heads: semantic segmentation, instance embedding, and lane direction. These predictions are used in the post-processing step to vectorize the map.

▲Figure 3|Denoising module

The whole process is a conditional generation process: the map segmentation result is obtained according to the current sensor input. The result can therefore be modeled as a conditional probability distribution of the map segmentation result given the conditional control variable, namely the BEV feature. The authors fuse the control variable in two ways. First, since the BEV feature and the latent map have the same category and scale in the spatial domain, the BEV feature is resized to the latent space size and the two are concatenated as the input of the denoising process, as shown in Formula 5.
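The concatenation-based fusion can be illustrated with a minimal pure-Python sketch. The nested-list layout (channels × height × width), the nearest-neighbour resizing, and the helper names `resize_nearest` and `concat_condition` are all assumptions made for illustration; the article does not state how the resizing is done.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # channels x height x width


def resize_nearest(feat: FeatureMap, out_h: int, out_w: int) -> FeatureMap:
    """Resize every channel to (out_h, out_w) with nearest-neighbour sampling."""
    in_h, in_w = len(feat[0]), len(feat[0][0])
    return [
        [
            [channel[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)
        ]
        for channel in feat
    ]


def concat_condition(latent: FeatureMap, bev: FeatureMap) -> FeatureMap:
    """Resize the BEV feature to the latent size and stack it along channels."""
    out_h, out_w = len(latent[0]), len(latent[0][0])
    return latent + resize_nearest(bev, out_h, out_w)


# Toy shapes (hypothetical): a 4-channel 2x4 latent and a 3-channel 4x8 BEV feature.
latent = [[[0.0] * 4 for _ in range(2)] for _ in range(4)]
bev = [[[float(r * 8 + c) for c in range(8)] for r in range(4)] for _ in range(3)]

denoiser_input = concat_condition(latent, bev)
print(len(denoiser_input), len(denoiser_input[0]), len(denoiser_input[0][0]))  # 7 2 4
```

The denoiser input keeps the spatial size of the latent and gains the BEV channels, so only the first convolution of the UNet needs to know about the extra channels.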

Second, a cross-attention mechanism is integrated into each layer of the UNet, with the BEV feature as the key/value and the latent map as the query. The formula of the cross-attention module is as follows:
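A hedged sketch of such a module, assuming the standard scaled dot-product cross-attention used in LDM-style UNets. The symbols are assumptions rather than the paper's confirmed notation: $\varphi(z_t)$ is a flattened intermediate UNet representation of the noisy latent, $F$ is the BEV feature, $W_Q$, $W_K$, $W_V$ are learned projections, and $d$ is the key dimension.

```latex
\begin{aligned}
Q &= W_Q\, \varphi(z_t), \qquad K = W_K\, F, \qquad V = W_V\, F \\
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d}}\right) V
\end{aligned}
```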

3.3 Specific Implementation

◆Training:

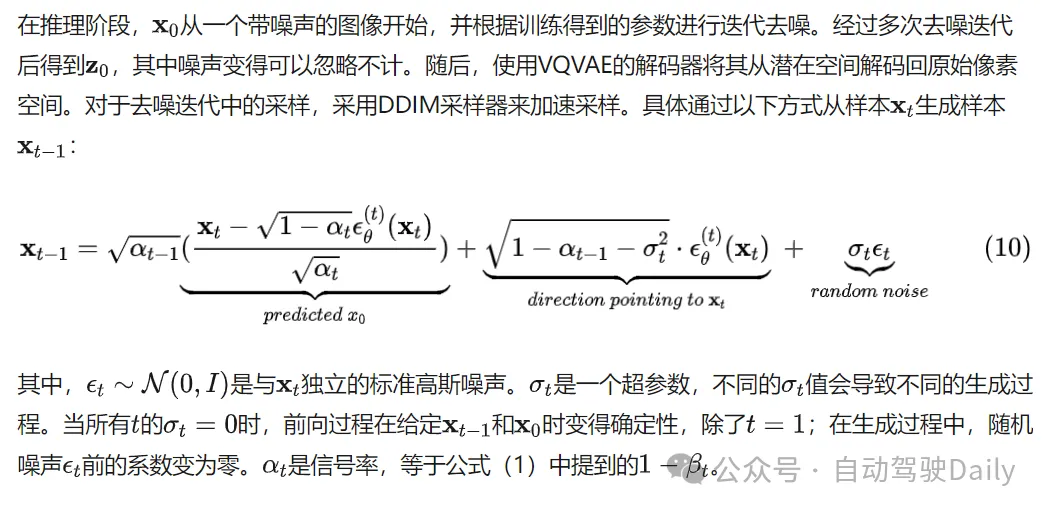

◆Inference:

04 Experiment

4.1 Experimental Details

◆Dataset: DiffMap is validated on the nuScenes dataset. The nuScenes dataset contains multi-view images and point clouds of 1000 scenes, of which 700 scenes are used for training, 150 for validation, and 150 for testing. The nuScenes dataset also contains annotated HD map semantic labels.

◆Architecture: ResNet-101 is used as the backbone network of the camera branch, and PointPillars is used as the backbone network of the LiDAR branch of the model. The segmentation head in the baseline model is an FCN network based on ResNet-18. For the autoencoder, VQVAE is used, which is pre-trained on the nuScenes segmentation map dataset to extract map features and compress the map into a basic latent space. Finally, UNet is used to build the diffusion network.

◆Training details: The VQVAE model is trained for 30 epochs using the AdamW optimizer. The learning rate scheduler is LambdaLR, which decreases the learning rate exponentially with a decay factor of 0.95. The initial learning rate is set to , and the batch size is 8. The diffusion model is then trained from scratch for 30 epochs using the AdamW optimizer with an initial learning rate of 2e-4. The MultiStepLR scheduler is adopted, which scales the learning rate by a factor of 1/3 at the specified milestones (0.7, 0.9, 1.0) during training. Finally, the BEV segmentation result has a resolution of 0.15 m, and the LiDAR point cloud is voxelized. The detection range of HDMapNet is [-30m, 30m]×[-15m, 15m], so the corresponding BEV map size is 400×200, while SuperFusion uses [0m, 90m]×[-15m, 15m] and obtains a map of 600×200. Due to the dimensionality constraints of LDM (a downsampling factor of 8 in both the VAE and the UNet), the semantic ground truth map needs to be padded to a multiple of 64.
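The arithmetic behind these settings can be checked with a short sketch. The helper names are hypothetical, and reading the milestones (0.7, 0.9, 1.0) as fractions of the 30 training epochs is an assumption; the article does not say how they map to epochs.

```python
from typing import Sequence, Tuple


def bev_grid_size(x_range: Tuple[float, float], y_range: Tuple[float, float],
                  resolution: float) -> Tuple[int, int]:
    """Number of BEV cells along x and y for a metric range and cell size."""
    nx = round((x_range[1] - x_range[0]) / resolution)
    ny = round((y_range[1] - y_range[0]) / resolution)
    return nx, ny


def pad_to_multiple(size: int, multiple: int = 64) -> int:
    """Smallest multiple of `multiple` that is >= size (8x VAE times 8x UNet = 64)."""
    return (size + multiple - 1) // multiple * multiple


def exponential_factor(epoch: int, decay: float = 0.95) -> float:
    """Multiplier an exponential-decay lambda would apply to the initial LR."""
    return decay ** epoch


def multistep_lr(initial_lr: float, epoch: int, total_epochs: int = 30,
                 milestones: Sequence[float] = (0.7, 0.9, 1.0),
                 gamma: float = 1 / 3) -> float:
    """Step decay; milestones are ASSUMED to be fractions of total_epochs."""
    passed = sum(1 for m in milestones if epoch >= round(m * total_epochs))
    return initial_lr * gamma ** passed


hdmapnet = bev_grid_size((-30, 30), (-15, 15), 0.15)
superfusion = bev_grid_size((0, 90), (-15, 15), 0.15)
print(hdmapnet, tuple(pad_to_multiple(s) for s in hdmapnet))        # (400, 200) (448, 256)
print(superfusion, tuple(pad_to_multiple(s) for s in superfusion))  # (600, 200) (640, 256)
print(multistep_lr(2e-4, 20), multistep_lr(2e-4, 21))
```

Under the fractional reading, the diffusion model's learning rate drops at epochs 21 and 27, and the padded map sizes are the smallest ones that survive two successive 8x downsamplings without remainder.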

◆Inference details: The prediction is obtained by running 20 denoising steps on the noise map, conditioned on the current BEV features. The average of 3 samplings is used as the final prediction result.
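The sampling-and-averaging procedure can be sketched as follows. This is only an illustration of the control flow: `toy_denoise_step` is a hypothetical stand-in for the BEV-conditioned UNet, the latent is flattened to a plain list, and the real sampler and its noise schedule are not specified in this article.

```python
import random
from typing import Callable, List

Latent = List[float]


def sample_once(denoise_step: Callable[[Latent, int], Latent], size: int,
                num_steps: int, rng: random.Random) -> Latent:
    """Start from Gaussian noise and apply `num_steps` denoising steps."""
    z = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(num_steps)):
        z = denoise_step(z, t)
    return z


def predict(denoise_step: Callable[[Latent, int], Latent], size: int,
            num_steps: int = 20, num_samples: int = 3, seed: int = 0) -> Latent:
    """Average several independent samples into one prediction."""
    rng = random.Random(seed)
    samples = [sample_once(denoise_step, size, num_steps, rng)
               for _ in range(num_samples)]
    return [sum(values) / num_samples for values in zip(*samples)]


# Hypothetical stand-in for the conditioned UNet: each step moves the latent
# halfway toward a fixed target that plays the role of the BEV-conditioned map.
TARGET = [1.0, 0.0, 1.0, 0.0]


def toy_denoise_step(z: Latent, t: int) -> Latent:
    return [0.5 * (zi + ti) for zi, ti in zip(z, TARGET)]


prediction = predict(toy_denoise_step, size=len(TARGET))
print([round(p, 3) for p in prediction])
```

In a real pipeline the denoising callable would close over the current BEV features, and the averaged latent would be passed through the VQVAE decoder to obtain the segmentation map.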

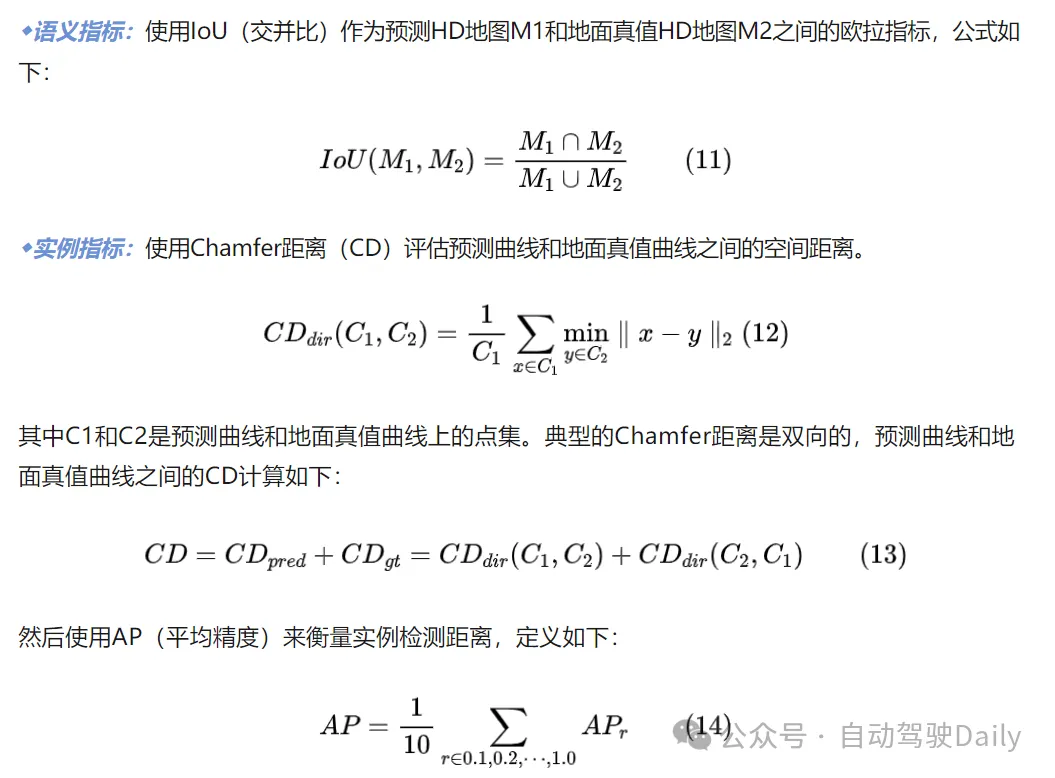

4.2 Evaluation Metrics

The evaluation is mainly conducted on map semantic segmentation and instance detection tasks, and mainly focuses on three static map elements: lane boundaries, lane dividers, and pedestrian crosswalks.
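Segmentation quality for each map element is reported as intersection over union (IoU), the metric named in Table 1. A minimal sketch of the per-class computation on binary masks, assuming nested lists of 0/1 values; returning 1.0 when both masks are empty is a convention chosen here, not one stated in the article.

```python
from typing import List

Mask = List[List[int]]  # binary mask, 1 = pixel belongs to the class


def iou(pred: Mask, target: Mask) -> float:
    """Intersection over union of two binary masks of equal shape."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Convention chosen for this sketch: two empty masks count as a perfect match.
    return inter / union if union else 1.0


pred_mask = [[1, 1, 0],
             [0, 1, 0]]
gt_mask = [[1, 0, 0],
           [0, 1, 1]]
print(iou(pred_mask, gt_mask))  # 0.5
```

Here two pixels are shared and four pixels are covered by at least one mask, so the score is 2/4.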

4.3 Evaluation Results

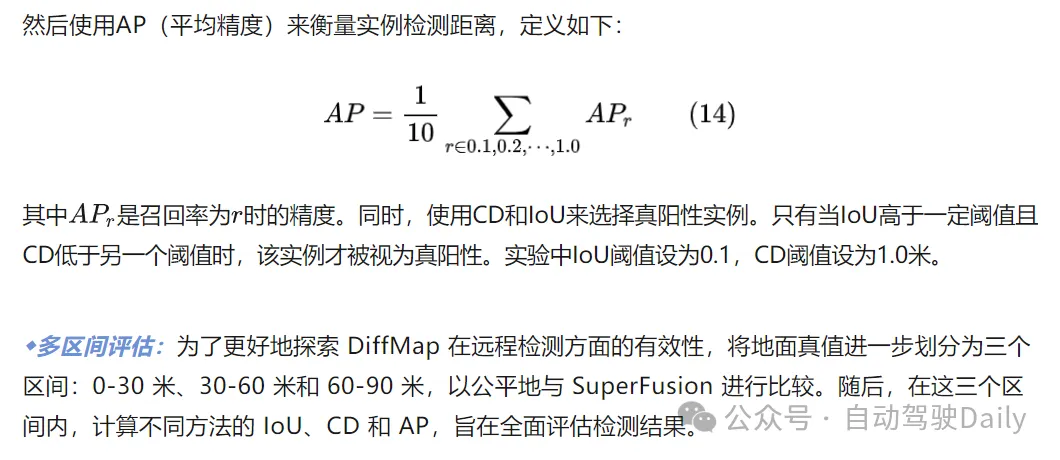

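Table 1 compares the IoU scores for semantic map segmentation. DiffMap shows a significant improvement across all ranges, with the best results on lane dividers and pedestrian crosswalks in particular.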

▲Table 1|IoU score comparison

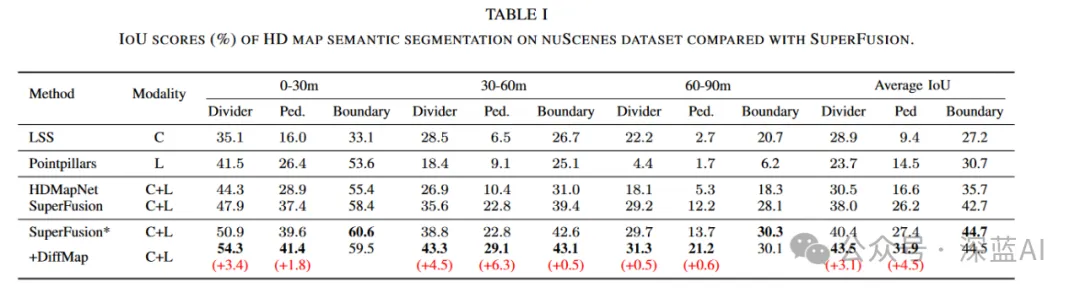

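As shown in Table 2, the DiffMap method also achieves a significant improvement in average precision (AP), which verifies the effectiveness of DiffMap.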

▲Table 2|MAP score comparison

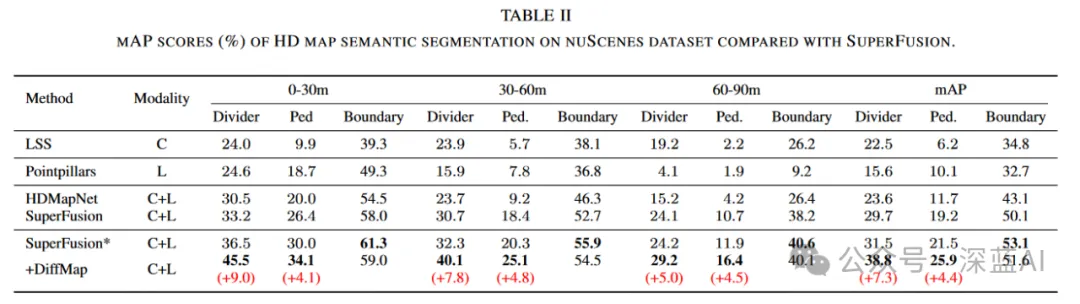

As shown in Table 3, integrating the DiffMap paradigm into HDMapNet improves the performance of HDMapNet, whether it uses only the camera or camera-LiDAR fusion. This shows that the DiffMap method is effective in various segmentation tasks, including long-range and close-range detection. For boundaries, however, DiffMap does not perform well, because the shape of boundaries is not fixed and contains many unpredictable distortions, which makes it difficult to capture prior structural features.

▲Table 3|Quantitative analysis results

4.4 Ablation Experiment

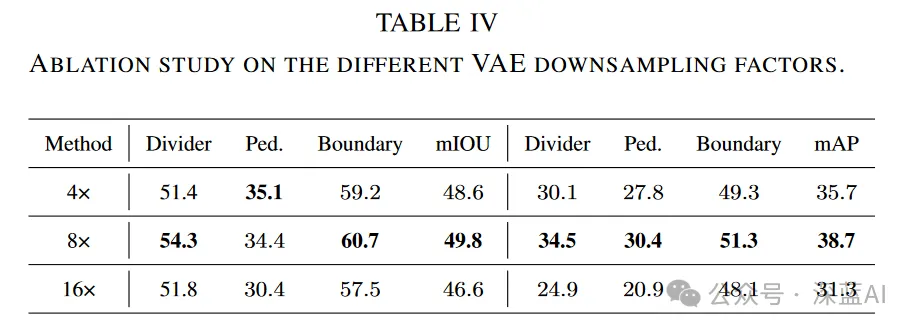

Table 4 shows the impact of different downsampling factors in VQVAE on the detection results. By analyzing the behavior of DiffMap when the downsampling factor is 4, 8, and 16, it can be seen that the best result is achieved when the downsampling factor is set to 8x.

▲Table 4|Ablation experiment results

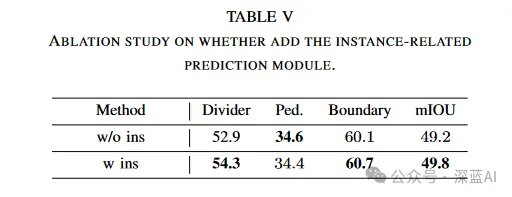

In addition, the authors measured the impact of removing the instance-related prediction module, as shown in Table 5. The experiments show that adding this prediction further improves the IoU.

▲Table 5|Ablation experiment results (whether or not the prediction module is included)

4.5 Visualization

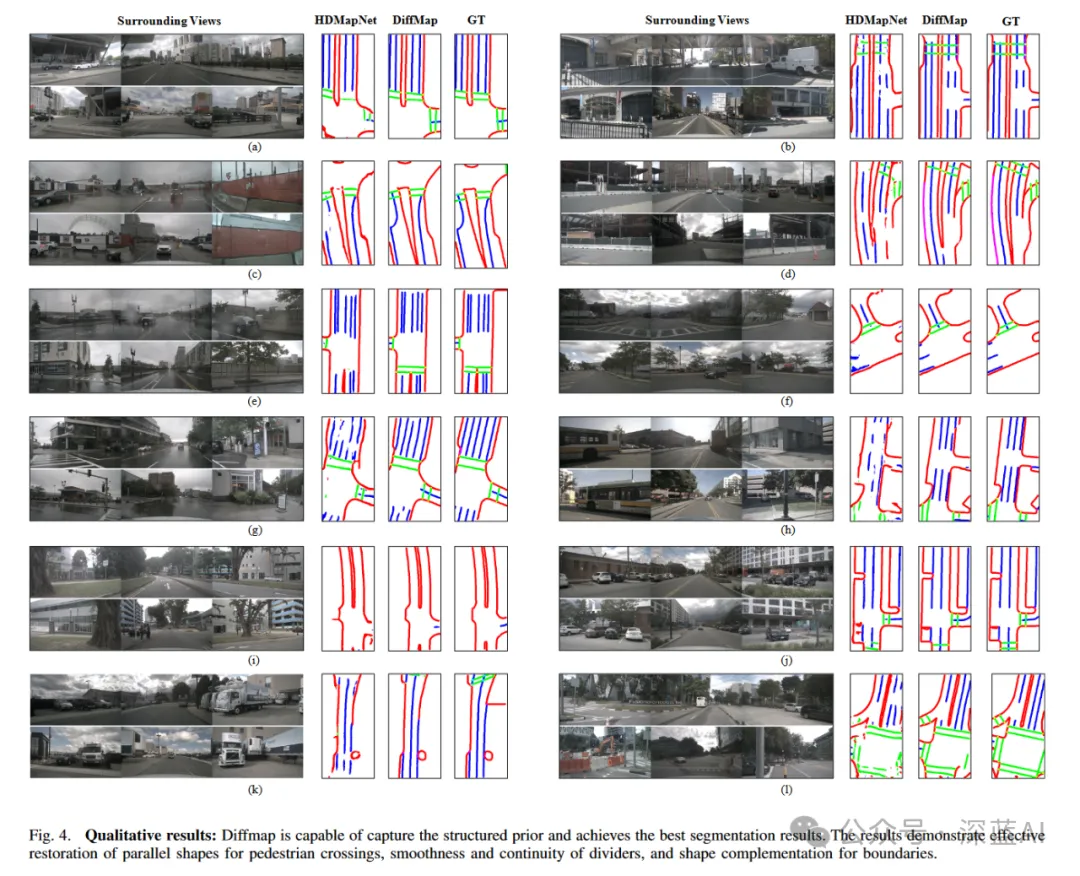

Figure 4 compares DiffMap with the baseline (HDMapNet-fusion) in complex scenes. The segmentation results of the baseline clearly ignore the shape properties and consistency of the map elements. In contrast, DiffMap corrects these problems and produces segmentation output that is well aligned with the map specification. Specifically, in cases (a), (b), (d), (e), (h), and (l), DiffMap effectively corrects the inaccurately predicted crosswalks. In cases (c), (d), (h), (i), (j), and (l), DiffMap completes or removes inaccurate boundaries, making the results closer to the real boundary geometry. In addition, in cases (b), (f), (g), (h), (k), and (l), DiffMap repairs broken lane dividers and ensures the parallelism of adjacent elements.

▲Figure 4|Qualitative analysis results
