Deploy large language models locally on 2GB DAYU200

Implementation ideas and steps

Port the lightweight LLM model inference framework InferLLM to the OpenHarmony standard system and compile a binary product that can run on OpenHarmony. (InferLLM is a simple and efficient LLM CPU inference framework that can locally deploy the quantitative model in LLM)

Use OpenHarmony NDK to compile the InferLLM executable file on OpenHarmony (specifically use the OpenHarmony lycium cross-compilation framework, and then write some scripts. Then store it in the tpc_c_cplusplusSIG warehouse.)

Deploy large language model locally on DAYU200

Compile and obtain the InferLLM third-party library compilation product

Download OpenHarmony sdk, download address:

http://ci.openharmony.cn/workbench/cicd/dailybuild/dailyList

Download this repository

git clone https://gitee.com/openharmony-sig/tpc_c_cplusplus.git --depth=1- 1.

# 设置环境变量

export OHOS_SDK=解压目录/ohos-sdk/linux # 请替换为你自己的解压目录

cd lycium

./build.sh InferLLM- 1.

- 2.

- 3.

- 4.

- 5.

Get the InferLLM third-party library header file and generated library

The InferLLM-405d866e4c11b884a8072b4b30659c63555be41d directory will be generated in the tpc_c_cplusplus/thirdparty/InferLLM/ directory. There are compiled 32-bit and 64-bit third-party libraries in this directory. (The relevant compilation results will not be packaged into the usr directory under the lycium directory).

InferLLM-405d866e4c11b884a8072b4b30659c63555be41d/arm64-v8a-build

InferLLM-405d866e4c11b884a8072b4b30659c63555be41d/armeabi-v7a-build- 1.

- 2.

Push the compiled product and model files to the development board for running

- Download model file: https://huggingface.co/kewin4933/InferLLM-Model/tree/main

- Package the llama executable file generated by compiling InferLLM, libc++_shared.so in OpenHarmony sdk, and the downloaded model file chinese-alpaca-7b-q4.bin into the folder llama_file

# 将llama_file文件夹发送到开发板data目录

hdc file send llama_file /data- 1.

- 2.

# hdc shell 进入开发板执行

cd data/llama_file

# 在2GB的dayu200上加swap交换空间

# 新建一个空的ram_ohos文件

touch ram_ohos

# 创建一个用于交换空间的文件(8GB大小的交换文件)

fallocate -l 8G /data/ram_ohos

# 设置文件权限,以确保所有用户可以读写该文件:

chmod 777 /data/ram_ohos

# 将文件设置为交换空间:

mkswap /data/ram_ohos

# 启用交换空间:

swapon /data/ram_ohos

# 设置库搜索路径

export LD_LIBRARY_PATH=/data/llama_file:$LD_LIBRARY_PATH

# 提升rk3568cpu频率

# 查看 CPU 频率

cat /sys/devices/system/cpu/cpu*/cpufreq/cpuinfo_cur_freq

# 查看 CPU 可用频率(不同平台显示的可用频率会有所不同)

cat /sys/devices/system/cpu/cpufreq/policy0/scaling_available_frequencies

# 将 CPU 调频模式切换为用户空间模式,这意味着用户程序可以手动控制 CPU 的工作频率,而不是由系统自动管理。这样可以提供更大的灵活性和定制性,但需要注意合理调整频率以保持系统稳定性和性能。

echo userspace > /sys/devices/system/cpu/cpufreq/policy0/scaling_governor

# 设置rk3568 CPU 频率为1.9GHz

echo 1992000 > /sys/devices/system/cpu/cpufreq/policy0/scaling_setspeed

# 执行大语言模型

chmod 777 llama

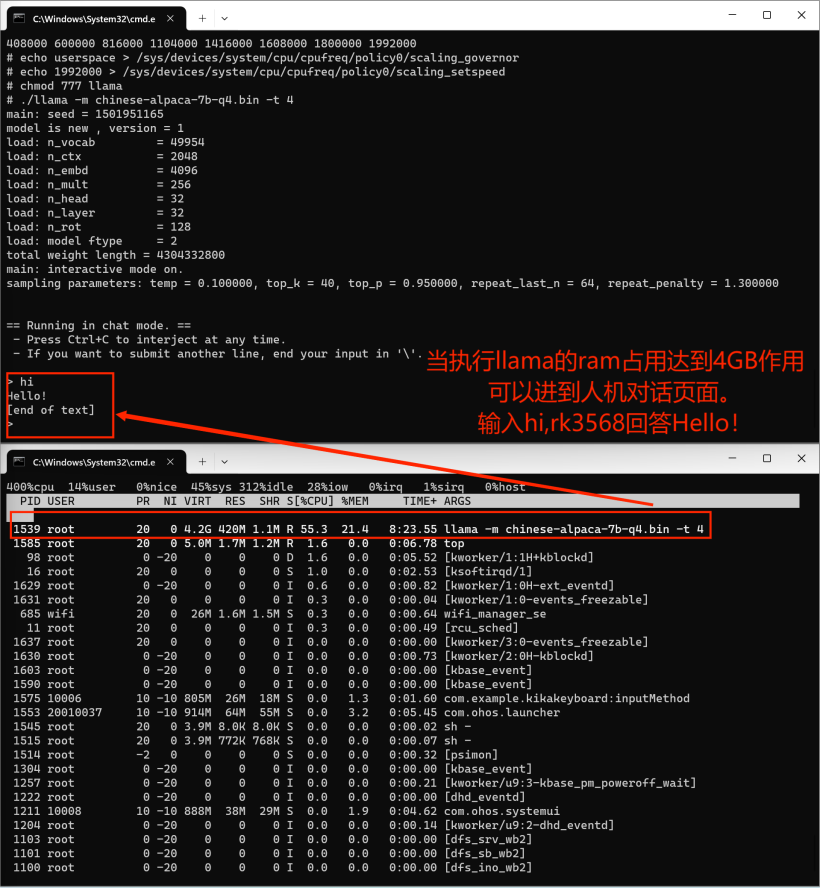

./llama -m chinese-alpaca-7b-q4.bin -t 4- 1.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- 21.

- 22.

- 23.

- 24.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

Transplant the InferLLM third-party library and deploy a large language model on the OpenHarmmony device rk3568 to realize human-computer dialogue. The final running effect is a bit slow, and the pop-up of the human-machine dialog box is also a bit slow. Please wait patiently.