BAT, the first universal bidirectional adapter for multi-modal object tracking, accepted at AAAI 2024

Object tracking is a fundamental task in computer vision. With the rapid development of the field, single-modality (RGB) object tracking has made significant progress in recent years. Because a single imaging sensor has inherent limitations, multi-modal images (RGB, infrared, etc.) are needed to compensate and achieve all-weather object tracking in complex environments.

However, existing multi-modal tracking tasks also face two main problems:

- Due to the high cost of data annotation for multi-modal target tracking, most existing datasets are limited in size and insufficient to support the construction of effective multi-modal trackers;

- Because different imaging methods have different sensitivities to objects in changing environments, the dominant modality in the open world changes dynamically, and the dominant-auxiliary relationship between modalities is not fixed.

Many multi-modal trackers are pre-trained on RGB sequences and then transferred to multi-modal scenes by full fine-tuning, which is time-consuming and inefficient and still yields limited performance.

Beyond full fine-tuning, and inspired by the success of parameter-efficient fine-tuning in natural language processing (NLP), some recent methods have brought parameter-efficient prompt tuning to multi-modal tracking by freezing the backbone network parameters and appending an additional set of learnable parameters.

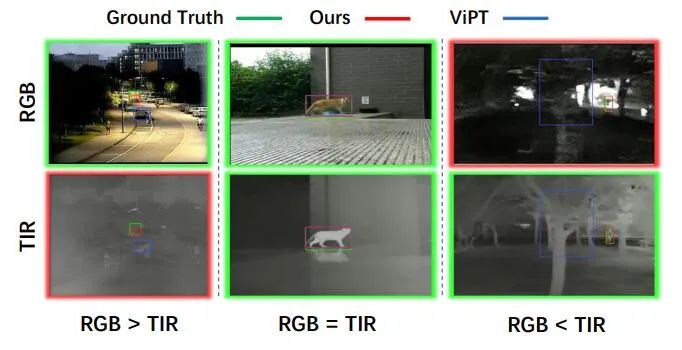

These methods usually treat one modality (typically RGB) as dominant and the other as auxiliary. They therefore ignore the dynamic dominant-auxiliary relationship in multi-modal data and struggle to fully exploit complementary multi-modal information in complex scenes such as those shown in Figure 1, which limits tracking performance.

Figure 1: Different dominant modes in complex scenarios.

To solve these problems, researchers from Tianjin University designed a bidirectional adapter for multi-modal tracking (BAT). Unlike methods that add auxiliary-modality information to the dominant modality (usually RGB) as a prompt to enhance the representation ability of the base model in downstream tasks, BAT does not preset a fixed dominant-auxiliary assignment. Instead, it dynamically extracts effective information as a modality shifts from auxiliary to dominant.

BAT consists of two modality-specific branches, whose base-model encoders share parameters, and a universal bidirectional adapter. During training, BAT does not fully fine-tune the base model: each modality branch is initialized from the base model with its parameters frozen, and only the newly added bidirectional adapter is trained. Each branch learns prompt information from the other modality and combines it with its own features to enhance its representation. The two branches interact through the universal bidirectional adapter and dynamically fuse dominant and auxiliary information with each other, without a fixed dominant-auxiliary assignment.

The universal bidirectional adapter has a lightweight hourglass structure and can be embedded into each layer of the base model's transformer encoder without introducing a large number of learnable parameters. By adding only a small number of trainable parameters (0.32M), BAT achieves better tracking performance at lower training cost than fully fine-tuned and prompt-learning-based methods.
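To give a feel for how small such an hourglass adapter is, the following back-of-the-envelope sketch counts the parameters of three linear projections (down, middle, up). The token width of 768, bottleneck width of 8, depth of 12 layers, and two adapter sites per layer are illustrative assumptions that are not stated in this article; the authors' actual configuration may differ.

```python
def linear_params(d_in: int, d_out: int, bias: bool = True) -> int:
    """Number of weights (plus optional biases) in one linear projection."""
    return d_in * d_out + (d_out if bias else 0)


def adapter_params(d_t: int, d_e: int) -> int:
    """Hourglass adapter: down projection, middle projection, up projection."""
    return (
        linear_params(d_t, d_e)    # d_t -> d_e
        + linear_params(d_e, d_e)  # d_e -> d_e
        + linear_params(d_e, d_t)  # d_e -> d_t
    )


# Assumed sizes for illustration only (not taken from the article).
D_T = 768            # token width of a ViT-Base style encoder
D_E = 8              # adapter bottleneck width
NUM_LAYERS = 12      # encoder depth
SITES_PER_LAYER = 2  # one adapter at the attention stage, one at the MLP stage

per_adapter = adapter_params(D_T, D_E)
total = per_adapter * NUM_LAYERS * SITES_PER_LAYER
print(per_adapter, total, f"{total / 1e6:.2f}M")  # 13136 315264 0.32M
```

Under these assumed sizes, and with one adapter per site shared by both directions, the count lands in the same range as the 0.32M reported above. This is only a consistency check on the order of magnitude, not a reconstruction of the published model.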

Paper "Bi-directional Adapter for Multi-modal Tracking":

Paper link: https://arxiv.org/abs/2312.10611

Code link: https://github.com/SparkTempest/BAT

Main contributions

- We are the first to propose an adapter-based visual prompt framework for multi-modal tracking. Our model perceives the dynamic changes of the dominant modality in open scenes and fuses multi-modal information adaptively.

- To the best of our knowledge, we are the first to propose a universal bidirectional adapter for the base model. It has a simple, efficient structure and effectively realizes multi-modal cross-prompt tracking. With only 0.32M added learnable parameters, our model is robust for multi-modal tracking in open scenarios.

- We conducted an in-depth analysis of the impact of our universal adapter at different layers. We also explored a more efficient adapter architecture in experiments and verified the advantages of our method on multiple RGBT tracking datasets.

Core method

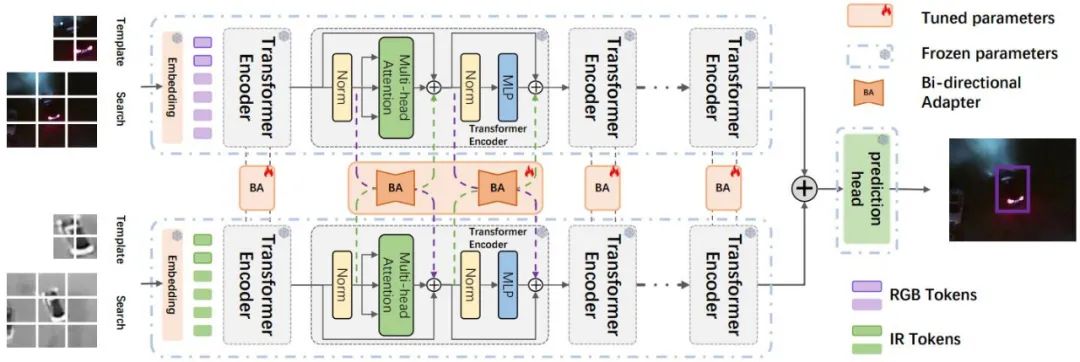

As shown in Figure 2, we propose BAT, a visual prompt framework for multi-modal tracking based on a bidirectional adapter. The framework has a dual-stream encoder structure, with one stream for the RGB modality and one for the thermal infrared (TIR) modality, and both streams use the same base-model parameters. The bidirectional adapter is placed in parallel with the dual-stream encoder layers to cross-prompt multi-modal data between the two modalities.

The method does not fully fine-tune the base model. It learns only a lightweight bidirectional adapter to efficiently transfer the pre-trained RGB tracker to multi-modal scenes, achieving strong multi-modal complementarity and tracking accuracy.

Figure 2: Overall architecture of BAT.

First, the template frame (the initial frame of the target object in the first frame) and the search frame (a subsequent tracking image) of each modality are converted into tokens, which are concatenated and passed to the corresponding stream of the N-layer dual-stream transformer encoder.

The bidirectional adapter is placed in parallel with the dual-stream encoder layers and learns feature prompts from one modality for the other. Finally, the output features of the two branches are added and fed into the prediction head H to obtain the final tracking result box B.

The bidirectional adapter has a modular design and is embedded in both the multi-head self-attention stage and the MLP stage. Its detailed structure, shown on the right side of Figure 2, is designed to transfer feature prompts from one modality to the other. It consists of three linear projection layers. With tn denoting the number of tokens in each modality, the input tokens are first reduced to dimension de by a down projection, passed through a linear projection layer, and then projected back up to the original dimension dt and fed as feature prompts to the transformer encoder layer of the other modality.

Through this simple structure, the bidirectional adapter effectively performs feature prompting between modalities and enables multi-modal tracking.
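The data flow described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes a single adapter shared by both directions, no activation between the three linear projections, and a placeholder `stage` function standing in for a frozen attention or MLP sub-layer. All names (`BiAdapter`, `cross_prompt`, and so on) are hypothetical, and normalization and residual details are omitted.

```python
import random
from typing import Callable, List, Tuple

Matrix = List[List[float]]  # one row per token


def make_linear(d_in: int, d_out: int, rng: random.Random):
    """Return (weight, bias) for a linear projection d_in -> d_out."""
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(d_in)] for _ in range(d_out)]
    return weight, [0.0] * d_out


def linear(tokens: Matrix, layer) -> Matrix:
    """Apply y = W x + b to every token."""
    weight, bias = layer
    return [[sum(w * x for w, x in zip(row, tok)) + b
             for row, b in zip(weight, bias)] for tok in tokens]


class BiAdapter:
    """Hourglass adapter: down projection, middle projection, up projection."""

    def __init__(self, d_t: int, d_e: int, seed: int = 0):
        rng = random.Random(seed)
        self.down = make_linear(d_t, d_e, rng)  # d_t -> d_e
        self.mid = make_linear(d_e, d_e, rng)   # d_e -> d_e
        self.up = make_linear(d_e, d_t, rng)    # d_e -> d_t

    def __call__(self, tokens: Matrix) -> Matrix:
        return linear(linear(linear(tokens, self.down), self.mid), self.up)


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two token matrices of equal shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def cross_prompt(rgb: Matrix, tir: Matrix, adapter: BiAdapter,
                 stage: Callable[[Matrix], Matrix]) -> Tuple[Matrix, Matrix]:
    """One encoder stage where each branch receives a prompt from the other."""
    rgb_out = add(stage(rgb), adapter(tir))  # TIR -> RGB prompt
    tir_out = add(stage(tir), adapter(rgb))  # RGB -> TIR prompt
    return rgb_out, tir_out


identity = lambda tokens: tokens          # placeholder for a frozen sub-layer
adapter = BiAdapter(d_t=6, d_e=2)
rgb = [[1.0] * 6 for _ in range(4)]       # 4 tokens of width 6
tir = [[0.5] * 6 for _ in range(4)]
rgb_out, tir_out = cross_prompt(rgb, tir, adapter, identity)
fused = add(rgb_out, tir_out)             # summed features that would go to the head
print(len(fused), len(fused[0]))          # 4 6
```

Because the prompt for each branch is computed from the other branch's tokens, neither modality is hard-coded as dominant; which one contributes more depends on the input.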

Since the transformer encoder and prediction head are frozen, only the parameters of the newly added adapter need to be optimized. Notably, unlike most traditional adapters, our bidirectional adapter serves as a cross-modal feature prompt for dynamically changing dominant modalities, ensuring good tracking performance in the open world.
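The effect of freezing can be illustrated with a toy update step in which only adapter parameters change. The parameter names and the plain gradient-descent rule below are hypothetical stand-ins chosen for illustration; scalars take the place of full weight tensors, and the optimizer actually used by the authors is not described here.

```python
def sgd_step(params: dict, grads: dict, trainable: set, lr: float = 0.1) -> dict:
    """Return updated parameters, changing only the names listed in `trainable`."""
    return {
        name: (value - lr * grads[name]) if name in trainable else value
        for name, value in params.items()
    }


# Hypothetical parameter names; each scalar stands in for a weight tensor.
params = {"encoder.w": 1.0, "head.w": 2.0, "adapter.down": 0.5, "adapter.up": -0.5}
grads = {"encoder.w": 0.3, "head.w": 0.3, "adapter.down": 0.3, "adapter.up": 0.3}

# Freeze the encoder and the prediction head; train only the adapter.
trainable = {name for name in params if name.startswith("adapter.")}
updated = sgd_step(params, grads, trainable)

for name in params:
    status = "updated" if updated[name] != params[name] else "frozen"
    print(f"{name}: {status}")
```

In a deep learning framework the same idea is usually expressed by disabling gradients for the frozen modules and passing only the adapter parameters to the optimizer.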

Experimental results

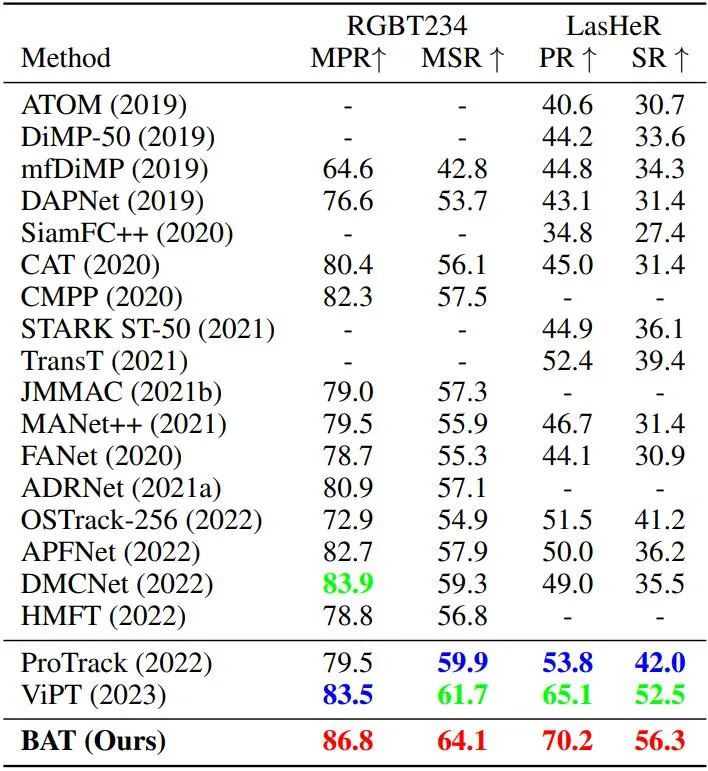

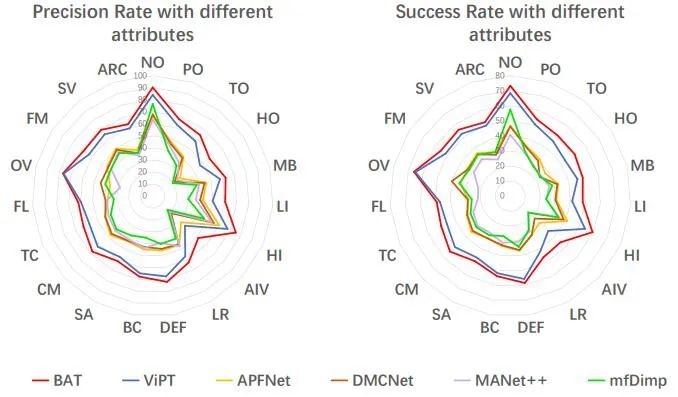

As shown in Table 1, on both the RGBT234 and LasHeR datasets our method outperforms state-of-the-art methods in both precision and success rate. As shown in Figure 3, the comparison with state-of-the-art methods under different scene attributes of the LasHeR dataset also demonstrates the superiority of the proposed method.

These experiments demonstrate that our dual-stream tracking framework and bidirectional adapter successfully track targets in most complex environments and adaptively extract effective information from dynamically changing dominant-auxiliary modalities, achieving state-of-the-art performance.

Table 1: Overall performance on the RGBT234 and LasHeR datasets.

Figure 3: Comparison of BAT and competing methods under different attributes of the LasHeR dataset.
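For readers unfamiliar with the two metrics, tracking benchmarks commonly define a precision-style score from the distance between predicted and ground-truth box centers, and a success-style score from box overlap (IoU). The sketch below shows one simple way to compute both at a single threshold. The 20-pixel and 0.5-overlap thresholds and the `(x, y, width, height)` box format are assumptions for illustration; individual benchmarks may use other thresholds or report the area under the success curve instead.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height), top-left corner


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance between the two box centers, in pixels."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return math.hypot((ax + aw / 2) - (bx + bw / 2), (ay + ah / 2) - (by + bh / 2))


def precision_rate(preds: Sequence[Box], gts: Sequence[Box],
                   pixel_threshold: float = 20.0) -> float:
    """Fraction of frames whose center error is within the pixel threshold."""
    hits = sum(center_error(p, g) <= pixel_threshold for p, g in zip(preds, gts))
    return hits / len(gts)


def success_rate(preds: Sequence[Box], gts: Sequence[Box],
                 overlap_threshold: float = 0.5) -> float:
    """Fraction of frames whose IoU exceeds the overlap threshold."""
    hits = sum(iou(p, g) > overlap_threshold for p, g in zip(preds, gts))
    return hits / len(gts)


# Two frames: a perfect prediction and a complete miss.
gts = [(0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 10.0)]
preds = [(0.0, 0.0, 10.0, 10.0), (50.0, 50.0, 10.0, 10.0)]
print(precision_rate(preds, gts), success_rate(preds, gts))  # 0.5 0.5
```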

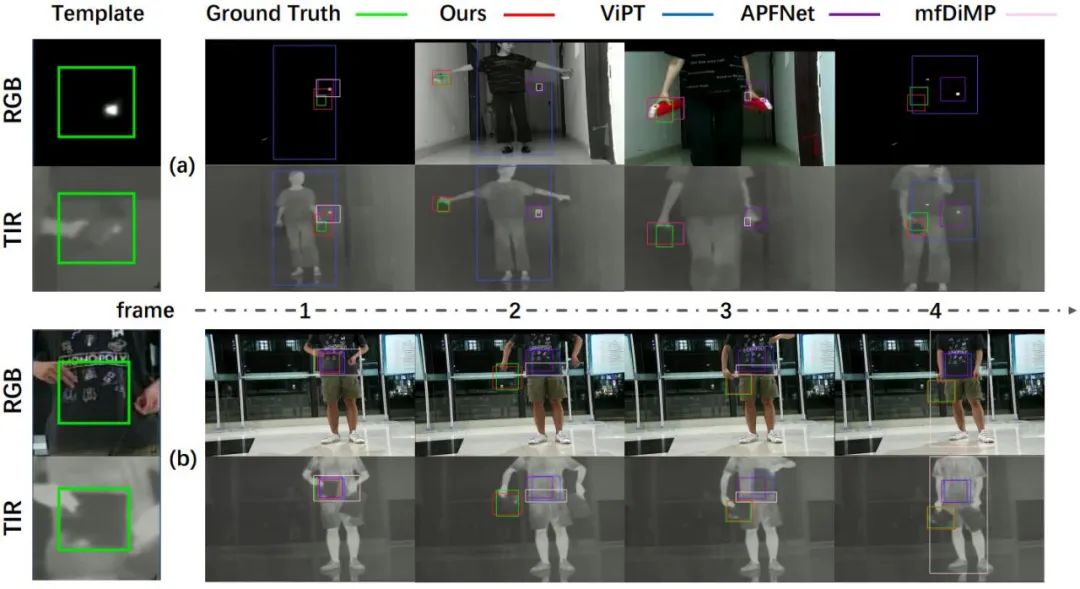

Experiments demonstrate the effectiveness of our method in dynamically prompting effective information from changing dominant-auxiliary modalities in complex scenarios. As shown in Figure 4, compared with related methods that fix the dominant modality, our method tracks the target effectively even when RGB is completely unavailable, and it tracks much better when both RGB and TIR provide effective information in subsequent scenes. Our bidirectional adapter dynamically extracts effective target features from both the RGB and TIR modalities, captures more accurate target response locations, and eliminates interference from the RGB modality.

Figure 4: Visualization of tracking results.

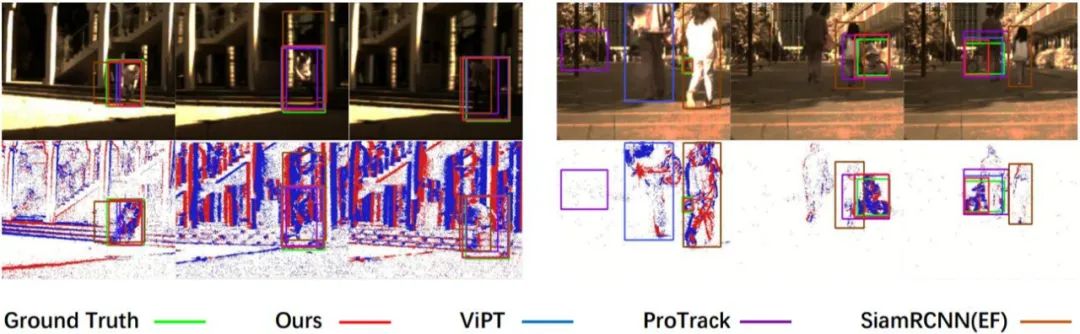

We also evaluate our method on an RGBE (RGB-event) tracking dataset. As shown in Figure 5, compared with other methods on the VisEvent test set, our method produces the most accurate tracking results across different complex scenarios, demonstrating the effectiveness and generalization ability of BAT.

Figure 5: Tracking results on the VisEvent dataset.

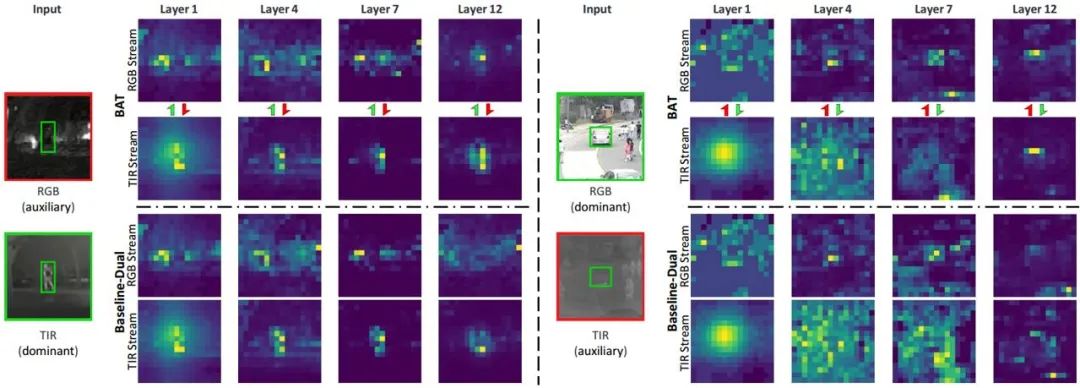

Figure 6: Visualization of attention weights.

We visualize the attention weights of different layers on the tracking target in Figure 6. Compared with the baseline-dual method (a dual-stream framework initialized with the base-model parameters), BAT effectively drives the auxiliary modality to learn more complementary information from the dominant modality while maintaining the effectiveness of the dominant modality as the network depth increases, thereby improving overall tracking performance.

Experiments show that BAT successfully captures multi-modal complementary information and achieves sample-adaptive dynamic tracking.