Which network IO model should be used for RPC design?

What role does network communication play in an RPC call? RPC is a way of solving inter-process communication, and an RPC call is essentially an exchange of network messages between a service consumer and a service provider. The caller sends a request message through network IO. The provider receives and parses it, runs the relevant business logic, and sends a response message back. The caller then receives, parses and processes the response, which logically completes one RPC call. Network communication is therefore the foundation of the entire RPC call process.

1 Common network I/O models

Network communication between two machines comes down to each machine performing network IO.

The common network IO models are synchronous blocking IO (BIO), synchronous non-blocking IO (NIO), IO multiplexing, and asynchronous non-blocking IO (AIO). Only AIO is asynchronous; the others are all synchronous IO.

1.1 Synchronous blocking I/O (BIO)

By default, all sockets in Linux are blocking.

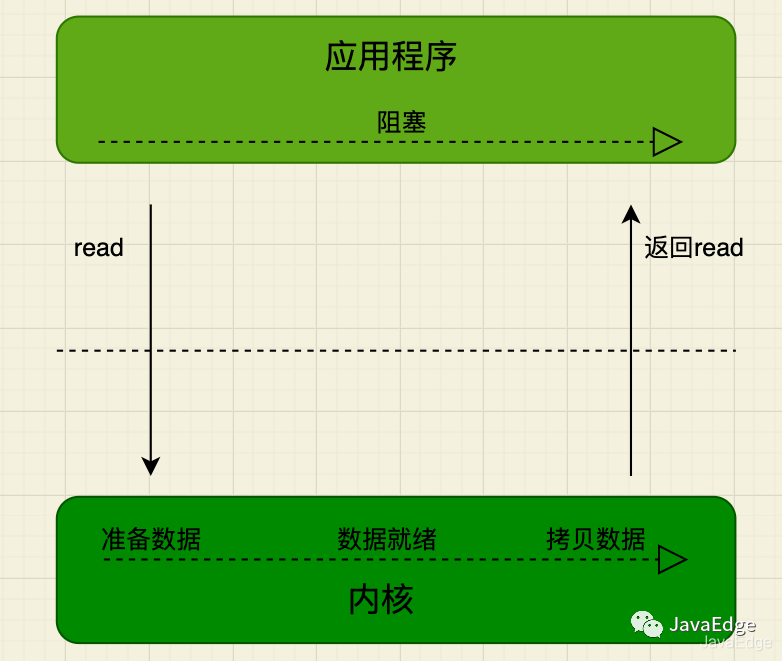

After the application process issues an IO system call, it blocks and control passes to the kernel. The kernel first waits for the data to arrive, then copies it from kernel memory into user memory, and only when the whole IO operation is complete does it return to the process. The process then leaves the blocked state and continues with its business logic.

The system kernel processes IO operations in two stages:

- Waiting for data: the kernel waits for the network card to receive the data and then writes it into a kernel buffer.

- Copying data: once the data is in the kernel, the kernel copies it into the user process's address space.

During both stages, the thread performing the IO operation in the application process stays blocked. In a Java multi-threaded design, each IO operation therefore occupies a thread until it finishes.

In short: after the user thread issues the read call, it blocks and gives up the CPU. The kernel waits for the network card data to arrive, the data is copied from the network card into kernel space and then from kernel space into user space, and finally the user thread is woken up.
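A minimal sketch of the blocking model in plain Java, assuming a simple echo service on an ephemeral loopback port (the class `BlockingEcho` and its methods are hypothetical, not from any framework). `accept()` and `InputStream.read()` block exactly as described above, so the server needs one thread per connection.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

class BlockingEcho {
    // Thread-per-connection echo server bound to an ephemeral loopback port.
    static ServerSocket startServer() throws IOException {
        ServerSocket server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        Thread acceptor = new Thread(() -> {
            while (!server.isClosed()) {
                try {
                    Socket s = server.accept();              // blocks until a client connects
                    Thread worker = new Thread(() -> handle(s));
                    worker.setDaemon(true);
                    worker.start();                          // one blocked thread per connection
                } catch (IOException e) {
                    return;                                  // server socket was closed
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
        return server;
    }

    // read() blocks through both kernel stages: waiting for data, then copying it into buf.
    static void handle(Socket s) {
        try (Socket sock = s;
             InputStream in = sock.getInputStream();
             OutputStream out = sock.getOutputStream()) {
            byte[] buf = new byte[1024];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
        } catch (IOException ignored) {
            // sketch: drop the connection on error
        }
    }

    // Client side: send msg, half-close the connection, then read the echo until EOF.
    static String roundTrip(int port, String msg) throws IOException {
        try (Socket c = new Socket(InetAddress.getLoopbackAddress(), port)) {
            c.getOutputStream().write(msg.getBytes(StandardCharsets.UTF_8));
            c.shutdownOutput();
            return new String(c.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        }
    }
}
```

With 10,000 concurrent connections this design needs 10,000 mostly idle threads, which is the cost the next model avoids.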

1.2 IO multiplexing

IO multiplexing is one of the most widely used IO models in high-concurrency scenarios; Java NIO, Redis and Nginx are all built on it. The name has two parts:

- Multi-channel: multiple channels, that is, the IO of multiple network connections

- Multiplexing: those channels share a single multiplexer

The IO of multiple network connections can be registered with a multiplexer (select). When the user process calls select, the whole process blocks while the kernel "monitors" every socket registered with that select. As soon as data is ready on any of those sockets, select returns, and the user process then calls read to copy the data from the kernel into user space.

So the process blocks on the select call, returns once one of its sockets has data ready, and only then issues a read. This looks more complicated than blocking IO, and even more wasteful, since it takes two system calls (select, then read) instead of one. Its great advantage is that a single thread can handle IO requests on many sockets: the user registers multiple sockets and repeatedly calls select to read whichever ones are active, so one thread serves many connections at once. With synchronous blocking IO, the same effect requires one thread per connection.
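Java NIO exposes this model through `java.nio.channels.Selector`. The sketch below, assuming the same echo service on an ephemeral loopback port (the class `SelectorEcho` is hypothetical), serves every connection from a single thread: `select()` is the blocking call, and `read()` runs only on sockets already reported ready.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;

class SelectorEcho {
    // One thread, one Selector, any number of connections.
    static void serve(ServerSocketChannel server) throws IOException {
        Selector selector = Selector.open();
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);
        while (true) {
            selector.select(); // the thread blocks here until some registered socket is ready
            Iterator<SelectionKey> it = selector.selectedKeys().iterator();
            while (it.hasNext()) {
                SelectionKey key = it.next();
                it.remove();
                if (key.isAcceptable()) {
                    SocketChannel client = server.accept();
                    if (client == null) continue;
                    client.configureBlocking(false);
                    client.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
                } else if (key.isReadable()) {
                    SocketChannel client = (SocketChannel) key.channel();
                    ByteBuffer buf = (ByteBuffer) key.attachment();
                    // Data is already ready, so read() only performs the kernel-to-user copy.
                    if (client.read(buf) == -1) {
                        client.close();
                        continue;
                    }
                    buf.flip();
                    client.write(buf);
                    buf.compact(); // sketch: unwritten bytes stay in buf; a real server would use OP_WRITE
                }
            }
        }
    }

    // Binds to an ephemeral loopback port and runs the event loop on a daemon thread.
    static int startInBackground() throws IOException {
        ServerSocketChannel server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0));
        Thread loop = new Thread(() -> {
            try {
                serve(server);
            } catch (IOException e) {
                // sketch: the event loop simply stops on error
            }
        });
        loop.setDaemon(true);
        loop.start();
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }
}
```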

An analogy: a group of us goes to a restaurant together. One person stays behind to wait in line while the others go shopping, and when that friend tells us a table is ready, we all go in and eat.

In essence, IO multiplexing is still synchronous blocking IO: the thread blocks in select, and it still performs the read, including the copy from kernel to user space, itself.

1.3 Why are blocking IO and IO multiplexing the most commonly used?

Using a network IO model requires support from both the operating system kernel and the programming language.

Most operating system kernels support blocking IO, non-blocking IO and IO multiplexing, but signal-driven IO and asynchronous IO are only supported by newer Linux kernels.

Whether in C++ or Java, high-performance network programming frameworks such as Netty are built on the Reactor pattern, and the Reactor pattern is built on IO multiplexing. In scenarios without high concurrency, synchronous blocking IO is the most common choice.
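To make the link between the Reactor pattern and IO multiplexing concrete, here is a minimal single-threaded Reactor in plain Java NIO. It is a sketch under stated assumptions, not Netty's implementation: the class `MiniReactor` and its `Handler` interface are hypothetical. Each `SelectionKey` carries a handler object, and the event loop only demultiplexes (`select`) and dispatches (`handler.handle`).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

class MiniReactor implements Runnable {
    // Each registered channel carries the handler that knows how to process its events.
    interface Handler { void handle(SelectionKey key) throws IOException; }

    private final Selector selector;
    private final ServerSocketChannel server;

    MiniReactor() throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0));
        server.configureBlocking(false);
        // The acceptor is just another handler, registered for OP_ACCEPT.
        server.register(selector, SelectionKey.OP_ACCEPT, (Handler) this::accept);
    }

    int port() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    @Override
    public void run() {
        try {
            while (true) {
                selector.select();                                // demultiplex: wait for ready events
                for (SelectionKey key : selector.selectedKeys()) {
                    ((Handler) key.attachment()).handle(key);     // dispatch to the bound handler
                }
                selector.selectedKeys().clear();
            }
        } catch (IOException e) {
            // sketch: stop the event loop on error
        }
    }

    private void accept(SelectionKey key) throws IOException {
        SocketChannel ch = server.accept();
        if (ch == null) return;
        ch.configureBlocking(false);
        ByteBuffer buf = ByteBuffer.allocate(1024);
        ch.register(selector, SelectionKey.OP_READ, (Handler) k -> echo(k, buf));
    }

    private static void echo(SelectionKey key, ByteBuffer buf) throws IOException {
        SocketChannel ch = (SocketChannel) key.channel();
        if (ch.read(buf) == -1) {
            ch.close();
            return;
        }
        buf.flip();
        ch.write(buf);
        buf.compact();
    }
}
```

Netty generalizes this idea with event-loop groups and handler pipelines, but the core loop (wait on the multiplexer, then dispatch to the handler bound to each channel) is the same.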

Blocking IO and IO multiplexing therefore have the most complete support from both kernels and programming languages, are the most widely used, and cover the vast majority of network IO scenarios.

1.4 Which network IO model does the RPC framework choose?

IO multiplexing is suitable for high concurrency and uses fewer processes (threads) to handle IO requests for more sockets, but it is more difficult to use.

Blocking IO blocks the process (thread) for every socket IO request it handles, but it is easier to use. When concurrency is low and the business logic only needs to perform IO synchronously, blocking IO is sufficient: there is no select call to make, so its overhead is lower than that of IO multiplexing.

Most RPC calls happen under high concurrency, so, weighing all of these factors, RPC frameworks choose IO multiplexing. The preferred framework is Netty, which is built on the Reactor pattern. On Linux, epoll should also be enabled to further improve performance.

2 Zero-copy

2.1 Network IO reading and writing process

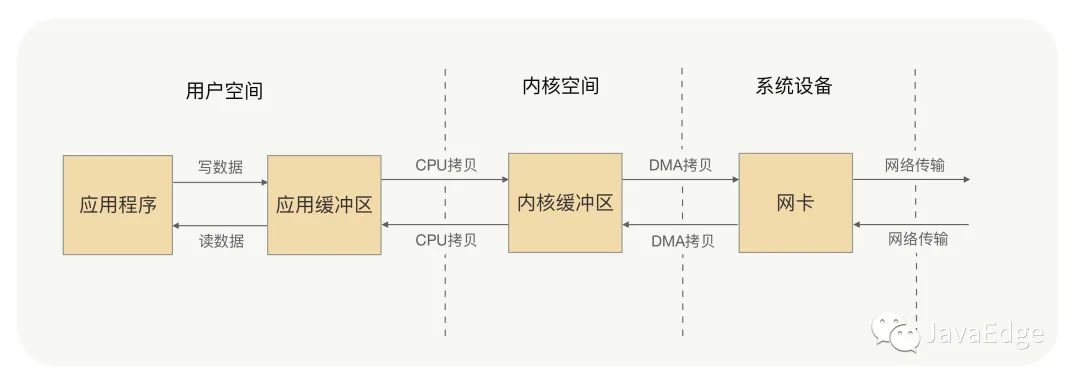

Each write by the application process first puts the data into a user-space buffer. The CPU then copies it into a kernel buffer, and DMA copies it from there to the network card, which sends it out. A write therefore copies the data twice before it leaves through the network card. A read is the reverse: the data is also copied twice before the application can read it.
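For comparison with the zero-copy variants later on, here is the conventional path in plain Java: read a file into a heap `byte[]`, then write that array to an output stream. The class `TraditionalCopy` is hypothetical, and the `OutputStream` parameter stands in for a socket's output stream (in the test it is an in-memory stream). The comments mark the two CPU copies that pass through user space.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;

class TraditionalCopy {
    // Conventional path: every chunk passes through a user-space buffer on the Java heap.
    static long readThenWrite(Path file, OutputStream socketOut) throws IOException {
        byte[] userBuf = new byte[8192];
        long total = 0;
        try (InputStream in = Files.newInputStream(file)) {
            int n;
            while ((n = in.read(userBuf)) != -1) { // CPU copy: kernel buffer -> userBuf
                socketOut.write(userBuf, 0, n);    // CPU copy: userBuf -> kernel (socket) buffer
                total += n;
            }
        }
        return total;
    }
}
```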

A complete read and write therefore moves the data back and forth between user space and kernel space, and each read or write system call also switches the CPU between the user process and the kernel (a context switch). Isn't this a waste of CPU and performance? Is there a way to reduce copying between user space and kernel space and make data transfer more efficient?

This is what zero copy addresses: it eliminates the copy between user space and kernel space. When the application process writes or reads data in user space, it is as if it were writing to or reading from kernel space directly; DMA then moves the data from the kernel to the network card, or from the network card into the kernel.

2.2 Implementation

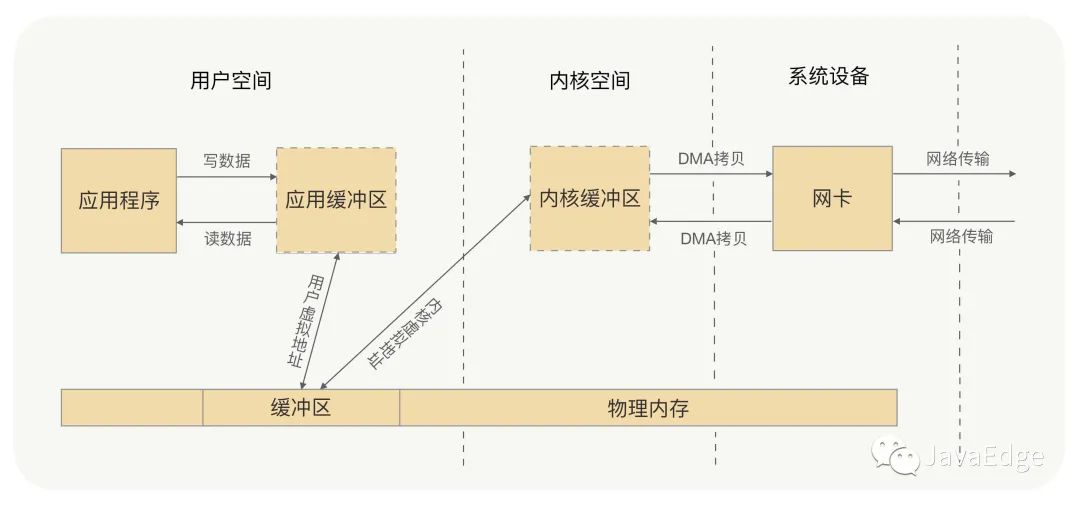

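Could user space and kernel space write data to the same place, so that nothing needs to be copied? This is where virtual memory comes in.

Virtual Memory

With virtual memory, several virtual addresses can map to the same physical memory. If a virtual address in kernel space and one in user space are mapped to the same physical page, data written through one is immediately visible through the other, so no copy between the two spaces is needed.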

There are two implementations of zero copy:

mmap+write

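Solved by virtual memory: mmap maps the kernel buffer holding the data into the user process's address space, so the application and the kernel share the same physical pages instead of copying between them. write then sends the mapped data to the socket.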
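In Java, `FileChannel.map` returns a `MappedByteBuffer`, which on Linux is typically backed by mmap. The sketch below (the class `MmapWrite` is hypothetical) maps a file and writes the mapped region straight to a channel, so no `byte[]` copy is made on the Java side. In real use the target would be a `SocketChannel`; the test assumes an in-memory channel just to check the bytes.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class MmapWrite {
    // mmap + write: map the file into the process's address space, then write the mapping out.
    static long send(Path file, WritableByteChannel target) throws IOException {
        try (FileChannel fc = FileChannel.open(file, StandardOpenOption.READ)) {
            // The mapping shares pages with the kernel's page cache; nothing is copied here.
            MappedByteBuffer mapped = fc.map(FileChannel.MapMode.READ_ONLY, 0, fc.size());
            long written = 0;
            while (mapped.hasRemaining()) {
                written += target.write(mapped); // write() still copies from the mapping into the target
            }
            return written;
        }
    }
}
```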

sendfile

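Nginx, for example, can serve static files with sendfile. sendfile transfers data from one file descriptor to another entirely inside the kernel, so the data never passes through user space.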
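The Java counterpart of sendfile is `FileChannel.transferTo`: when the target is a `SocketChannel`, the JDK on Linux can implement it with sendfile(2). The sketch below (the class `SendfileTransfer` is hypothetical) loops because `transferTo` may move fewer bytes than requested. The test assumes an in-memory target channel, for which the JDK falls back to an ordinary copy, so it checks correctness, not the zero-copy path itself.

```java
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class SendfileTransfer {
    // Sends a whole file with transferTo; assumes a blocking target channel.
    static long sendFile(Path file, WritableByteChannel target) throws IOException {
        try (FileChannel fc = FileChannel.open(file, StandardOpenOption.READ)) {
            long pos = 0;
            long size = fc.size();
            while (pos < size) {
                // May transfer fewer bytes than asked, so keep going from the new position.
                pos += fc.transferTo(pos, size - pos, target);
            }
            return pos;
        }
    }
}
```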

3 Netty zero copy

For network communication, RPC frameworks choose a framework built on the Reactor pattern, and in Java the preferred one is Netty. Does Netty have a zero-copy mechanism? How does zero copy in Netty differ from the zero copy described above?

The zero copy in the previous section is OS-level zero copy: by avoiding data copies between user space and kernel space, it improves CPU utilization.

Netty's zero copy is different. It works entirely in user space, that is, inside the JVM, and focuses on optimizing data operations.

Why does Netty do this?

During transmission, RPC does not necessarily send all the binary data of a request's parameters to the peer in one piece. The data may be split into several packets, or combined with packets from other requests, so each message needs boundaries. When the receiving side gets the data, it must process the packets, splitting and merging them according to those boundaries, until it obtains a complete message.

Is this splitting and merging done in user space or in kernel space?

In user space, of course, because packet processing is the application's job. Can data copies happen here? They can, but they are copies within user-space memory, not between user space and kernel space. Netty's zero copy targets exactly this problem by optimizing data operations in user space.
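The splitting and merging described above can be sketched as a length-prefixed frame decoder. The wire format here is an assumption for illustration (each message is a 4-byte big-endian length followed by the payload), and `LengthFieldFramer` is a hypothetical class; Netty ships `LengthFieldBasedFrameDecoder` for this job. The comments mark the user-space copies that a naive decoder makes, which is what Netty's zero copy tries to avoid.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

class LengthFieldFramer {
    // Bytes received so far that do not yet form a complete message.
    private ByteBuffer pending = ByteBuffer.allocate(0);

    // Feeds one chunk as read from the socket; returns every complete message payload.
    List<byte[]> feed(byte[] chunk) {
        // Merge the leftover tail with the new chunk: a user-space copy.
        ByteBuffer merged = ByteBuffer.allocate(pending.remaining() + chunk.length);
        merged.put(pending).put(chunk).flip();
        List<byte[]> messages = new ArrayList<>();
        while (merged.remaining() >= 4) {
            int len = merged.getInt(merged.position()); // peek at the length without consuming it
            if (merged.remaining() < 4 + len) break;    // half packet: wait for more bytes
            merged.position(merged.position() + 4);
            byte[] payload = new byte[len];
            merged.get(payload);                         // another user-space copy
            messages.add(payload);
        }
        pending = merged; // position now marks the start of the unconsumed tail
        return messages;  // sketch: no validation of negative or oversized lengths
    }
}
```

For example, if two messages arrive as 7 bytes and then 6 bytes, the first call returns only the first message and keeps 1 byte of the second length field for the next call.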

So how does Netty optimize data operations?

- Netty provides the CompositeByteBuf class, which can merge multiple ByteBufs into one logical ByteBuf, avoiding copying between ByteBufs.

- ByteBuf supports the slice operation, so a ByteBuf can be decomposed into multiple ByteBufs sharing the same storage area, avoiding memory copying.

- Through the wrap operation, a byte[] array, ByteBuf, ByteBuffer, etc. can be wrapped into a Netty ByteBuf object, avoiding copy operations.
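Netty's `ByteBuf` is not part of the JDK, so this runnable sketch uses the analogous `java.nio.ByteBuffer` operations instead; it illustrates the idea, not Netty's API. `ByteBuffer.wrap` and `slice` create views over existing storage without copying bytes. The JDK has no `CompositeByteBuf`, so the hypothetical helper `writeAll` simply writes header and body buffers one after another instead of merging them into a new array first. The class `BufferViews` is hypothetical.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;

class BufferViews {
    // wrap: the buffer is a view over the existing array; no bytes are copied.
    static ByteBuffer wrap(byte[] data) {
        return ByteBuffer.wrap(data);
    }

    // slice: a view over [offset, offset + length) of the parent's storage; no bytes are copied.
    static ByteBuffer slice(ByteBuffer parent, int offset, int length) {
        ByteBuffer dup = parent.duplicate(); // independent position/limit, same storage
        dup.position(offset);
        dup.limit(offset + length);
        return dup.slice();
    }

    // Composite-style send: write each part in turn instead of first merging them into one array.
    static void writeAll(WritableByteChannel ch, ByteBuffer... parts) throws IOException {
        for (ByteBuffer p : parts) {
            while (p.hasRemaining()) {
                ch.write(p);
            }
        }
    }
}
```

Because the slice shares storage with the wrapped array, a change to the array is visible through the slice, which is exactly what makes these operations copy-free.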

Many ChannelHandler implementations built into Netty use CompositeByteBuf, slice and wrap to handle packet splitting and sticking (split and sticky packets) in TCP transmission.

Netty solves data copy between user space and kernel space

Netty's ByteBuf can use direct buffers, that is, off-heap direct memory, for socket reads and writes. Because the data does not have to be copied from the JVM heap into native memory before each system call, this removes a copy on the path between the application and the kernel, similar in effect to the virtual-memory technique explained above.

Netty's FileRegion also wraps NIO's FileChannel.transferTo() to implement zero copy, on the same principle as Linux sendfile.
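The same idea is available in the JDK through `ByteBuffer.allocateDirect`. In OpenJDK, when a heap `ByteBuffer` is passed to a channel, the data is first copied into a temporary direct buffer before the system call; a direct buffer skips that step. The sketch below (the class `DirectBufferCopy` is hypothetical) reuses one direct buffer for a channel-to-channel copy and assumes both channels are in blocking mode.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.ReadableByteChannel;
import java.nio.channels.WritableByteChannel;

class DirectBufferCopy {
    // Copies src to dst through one reusable off-heap buffer; assumes blocking channels.
    static long copy(ReadableByteChannel src, WritableByteChannel dst) throws IOException {
        ByteBuffer buf = ByteBuffer.allocateDirect(8192); // allocated outside the Java heap
        long total = 0;
        while (src.read(buf) != -1) {
            buf.flip();
            while (buf.hasRemaining()) {
                total += dst.write(buf);
            }
            buf.clear();
        }
        return total;
    }
}
```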

4 Summary

The benefit of zero copy is that it avoids unnecessary CPU copies, freeing the CPU for other work. It also reduces context switches between user space and kernel space, improving network communication efficiency and the overall performance of the application.

Netty's zero copy differs from OS-level zero copy: it focuses on optimizing data operations in user space. This matters a great deal for handling packet splitting and sticking in TCP transmission, and also for how the application processes request data and response data.