Load balancing Kubernetes network traffic with the OkHttp client

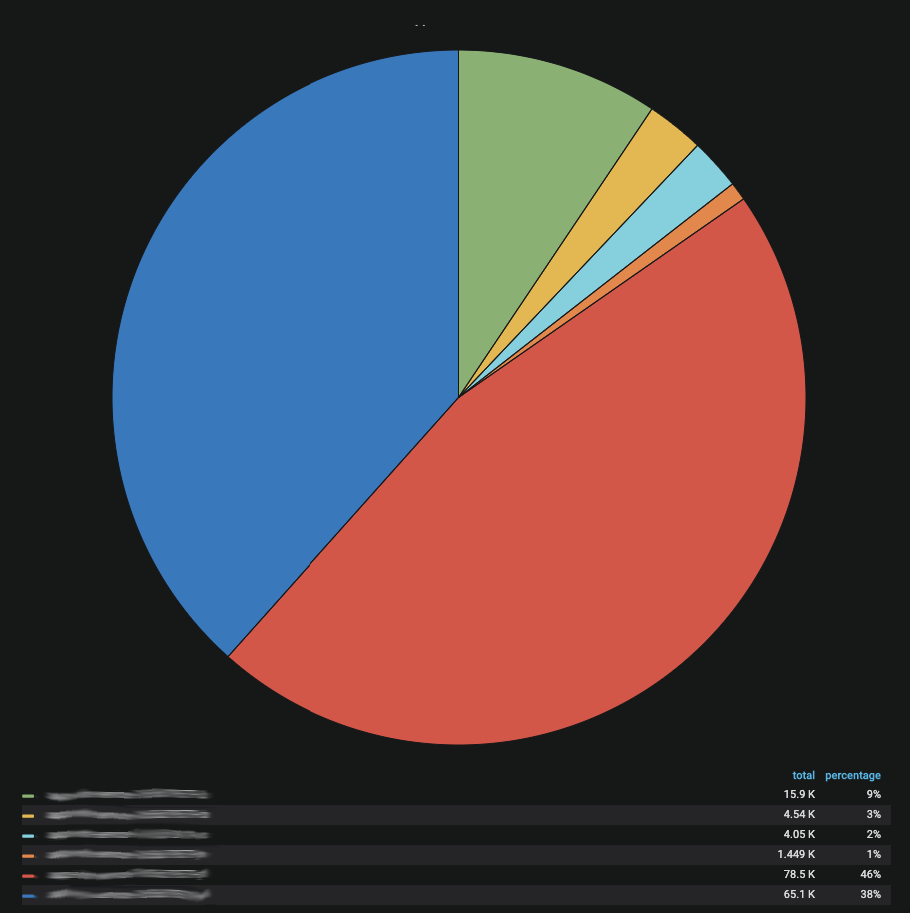

During an internal audit of our Java services, we discovered that some requests were not being load balanced properly across the Kubernetes (K8s) network. What prompted us to dig deeper was a sharp increase in HTTP 5xx error rates caused by very high CPU usage, a high number of garbage collection events, and timeouts, all of which occurred only in a few specific Pods.

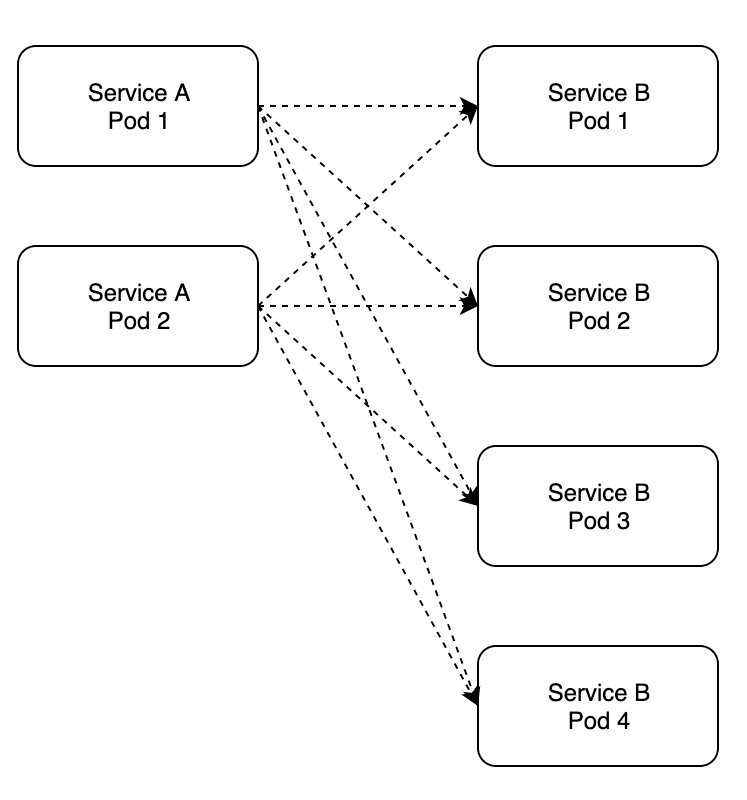

The problem is not always visible, because it mainly affects multi-Pod services where the number of source Pods differs from the number of target Pods. In this blog post, I will discuss the steps we took to balance the load across these services and Pods.

How are requests balanced among Pods in our deployment?

Two source Pods send requests to six target Pods.

You can clearly see that the request distribution is uneven among the target Pods.

But why is this happening?

The K8s load balancer (IPVS proxy mode) uses round robin as its default scheduler. IPVS offers further scheduling options for balancing traffic to Pod backends. While testing these options, we found that for our services, which communicate with each other over internal routing, the behavior was the same regardless of the configuration.

So what was actually happening? IPVS in K8s balances traffic per connection, which works well in most cases. Our services use OkHttp as the HTTP client to communicate with each other, and the problem lies in how this client behaves. With the default configuration, it opens a connection to the server and, unless your code explicitly closes it, keeps that connection alive and reuses it, because opening a new connection is expensive. In other words, the client holds on to one connection to the target and sends its requests over that specific connection. This normally results in a 1:1 connection between a client and a single target Pod, which K8s cannot balance.
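The effect of per-connection balancing can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: a single round-robin cursor stands in for the IPVS scheduler, every client sends requests at the same rate, and the class name `ConnectionBalancingSim` and the parameter `requestsPerConnection` are hypothetical names introduced for illustration. It models two source Pods and six target Pods, first with connections that are never replaced and then with connections that are replaced after 100 requests.

```java
import java.util.Arrays;

public class ConnectionBalancingSim {

    /**
     * Counts how many requests each backend receives when the scheduler
     * picks a backend only at the moment a NEW connection is opened.
     * Simplified model: one shared round-robin cursor, not real IPVS.
     */
    static int[] simulate(int clients, int backends,
                          int requestsPerClient, int requestsPerConnection) {
        int[] hits = new int[backends];
        int nextBackend = 0;               // round-robin cursor of the scheduler
        int[] current = new int[clients];  // backend behind each client's connection
        int[] used = new int[clients];     // requests sent on the current connection
        Arrays.fill(used, requestsPerConnection); // forces a connection on first use

        for (int r = 0; r < requestsPerClient; r++) {
            for (int c = 0; c < clients; c++) {
                if (used[c] >= requestsPerConnection) {
                    // New connection: only now does the scheduler choose a backend.
                    current[c] = nextBackend;
                    nextBackend = (nextBackend + 1) % backends;
                    used[c] = 0;
                }
                hits[current[c]]++;
                used[c]++;
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Connections are never replaced: two backends take all the traffic.
        System.out.println(Arrays.toString(simulate(2, 6, 600, Integer.MAX_VALUE)));
        // prints [600, 600, 0, 0, 0, 0]

        // Connections are replaced every 100 requests: traffic spreads out.
        System.out.println(Arrays.toString(simulate(2, 6, 600, 100)));
        // prints [200, 200, 200, 200, 200, 200]
    }
}
```

In this model the scheduler itself is perfectly fair, yet four of the six backends stay idle until the clients start opening new connections.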

What to do?

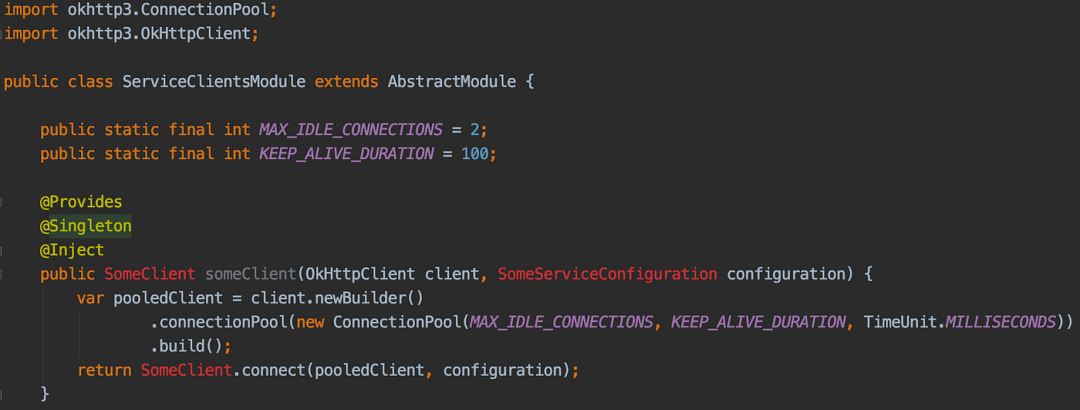

If you need to scale, or simply want your service to be load balanced properly, you have to update the configuration on the client side. OkHttp provides ConnectionPool functionality. With the ConnectionPool option, a connection is kept only for a limited period of time and is then replaced by a new one, so IPVS sees a steady stream of new connections and can route each of them to a destination according to its scheduler. Basically, it works like a machine gun instead of a laser beam.

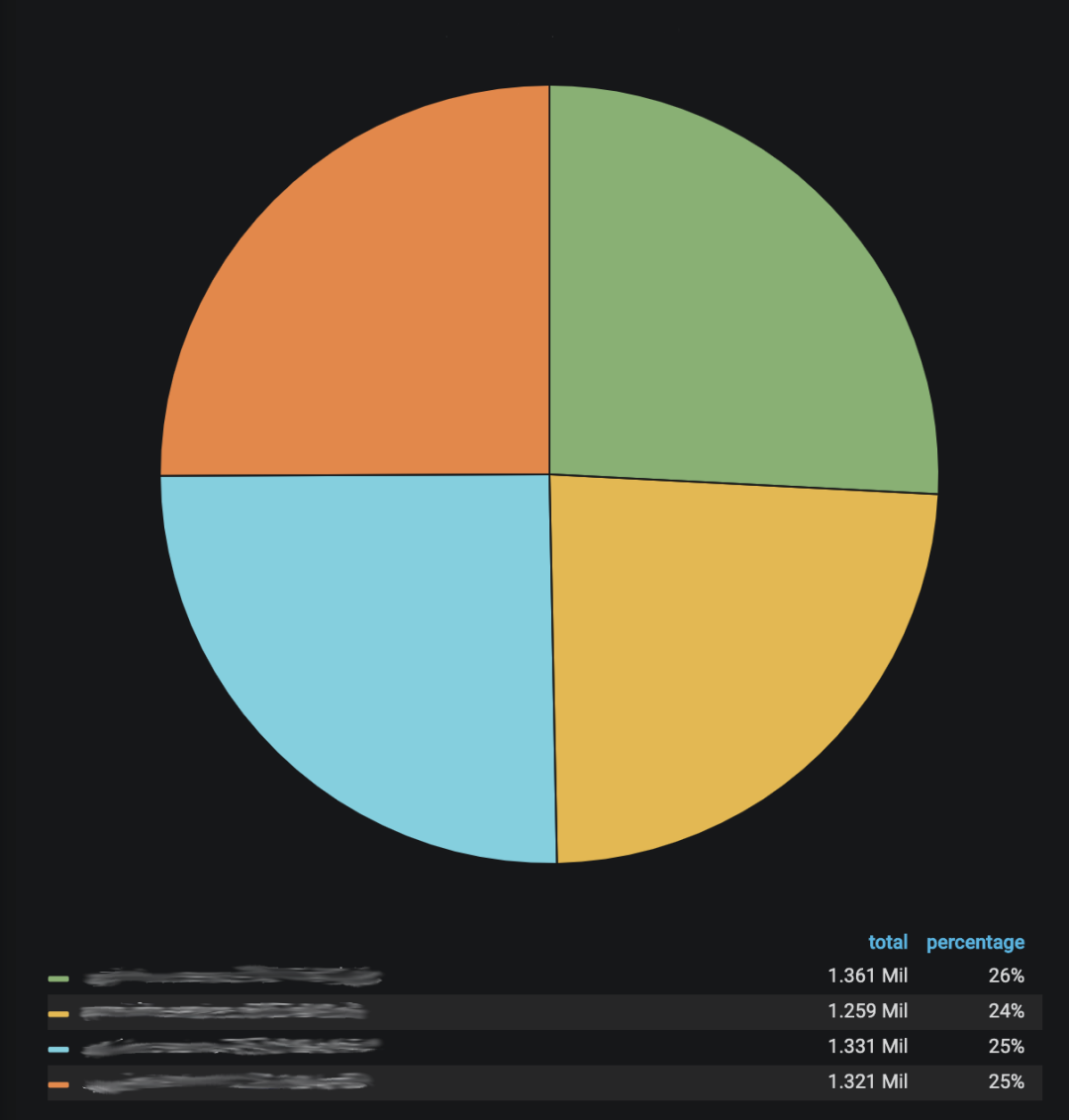

How effective was this update?

We achieved load-balanced connections between multi-Pod services using the updated HTTP client configuration and the default IPVS scheduler.

What changes were made?

We tested extensively with various configurations, measuring response times and performance overhead, to make sure that the load was actually balanced. Below are the main code changes, which showed no significant performance overhead.

Code change example

There is also an option to configure the scheduler so that more requests can be sent in parallel. In our case, this only left behind a set of recently closed connections, after which the client continued to use a single connection. Additionally, we try to avoid opening new connections too frequently, since executing a request is much cheaper than opening a new connection.
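The kind of client-side change described here might look roughly like the following. This is a minimal sketch, not the exact change we shipped. The class name `BalancedHttpClient` and all numeric values are hypothetical and need tuning per service. The sketch also assumes that the "scheduler" mentioned above corresponds to OkHttp's `Dispatcher`, which limits concurrent asynchronous calls. Note that, as far as the OkHttp documentation describes it, the keep-alive duration of a `ConnectionPool` applies to idle connections, so a connection that is never idle may live longer than this value.

```java
import java.util.concurrent.TimeUnit;

import okhttp3.ConnectionPool;
import okhttp3.Dispatcher;
import okhttp3.OkHttpClient;

public final class BalancedHttpClient {

    // Hypothetical values for illustration only; measure before adopting.
    private static final int MAX_IDLE_CONNECTIONS = 5;
    private static final long KEEP_ALIVE_SECONDS = 10;

    private BalancedHttpClient() {
    }

    public static OkHttpClient create() {
        // Short keep-alive: idle connections are evicted quickly, so new
        // connections are opened more often and IPVS can schedule them again.
        ConnectionPool pool = new ConnectionPool(
                MAX_IDLE_CONNECTIONS, KEEP_ALIVE_SECONDS, TimeUnit.SECONDS);

        // Optional: limits for asynchronous calls executed in parallel.
        Dispatcher dispatcher = new Dispatcher();
        dispatcher.setMaxRequests(64);
        dispatcher.setMaxRequestsPerHost(16);

        return new OkHttpClient.Builder()
                .connectionPool(pool)
                .dispatcher(dispatcher)
                .build();
    }
}
```

A single client created this way should be shared across the service. Creating a new `OkHttpClient` per request would also create a new pool each time and defeat the purpose of tuning it.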

What is the result?

Network and resource usage is now more balanced than before: there are no huge or long-lasting spikes, and there are no "noisy neighbor" effects that hit only certain Pods in the deployment. Almost all Pods are now utilized to nearly the same degree, so we were able to reduce the number of Pods in our deployment. We know this isn't perfect, but it's good enough for our use case, as it doesn't impose a noticeable performance overhead on the service or on the IPVS load balancer.

Current request load balancing across Pods

In conclusion

Conducting a thorough service audit on a regular basis is worthwhile: it can reveal optimization opportunities that benefit all services in the future, and it saves time when troubleshooting odd symptoms in functionality that should simply work. Additionally, take the time to read the documentation, test, discuss, and understand the impact of a client library's default connection settings and connection handling, so that you can be sure it behaves as you expect.