How should the system’s facade API gateway be designed?

As our e-commerce website grew more popular, the system began to attract some "uninvited guests". In the early morning, calls to the product search and user interfaces would spike sharply and then return to normal after a while.

These requests shared one trait: they came from a specific set of devices. Once I added a rate limiting policy based on device ID to the search service, the early-morning search peaks were brought under control. Soon afterward, however, the user service began receiving large numbers of crawler requests for user information, and the product service began receiving crawler requests for product information, so I had to add the same rate limiting policy to both. This creates a problem: the same policy is implemented separately in several services, with no code reuse. If other services run into similar issues, the code has to be copied yet again, which is clearly not good practice.

As a Java programmer, my first reaction was to extract the rate limiting logic into a reusable JAR package shared by the three services. The problem is that our e-commerce team uses not only Java but also PHP, Go, and other languages, and services written in those languages cannot use a Java JAR directly. This is why the API gateway was introduced: it provides a language-independent solution, so services written in any language can share the same rate limiting policy.

The role of the API gateway

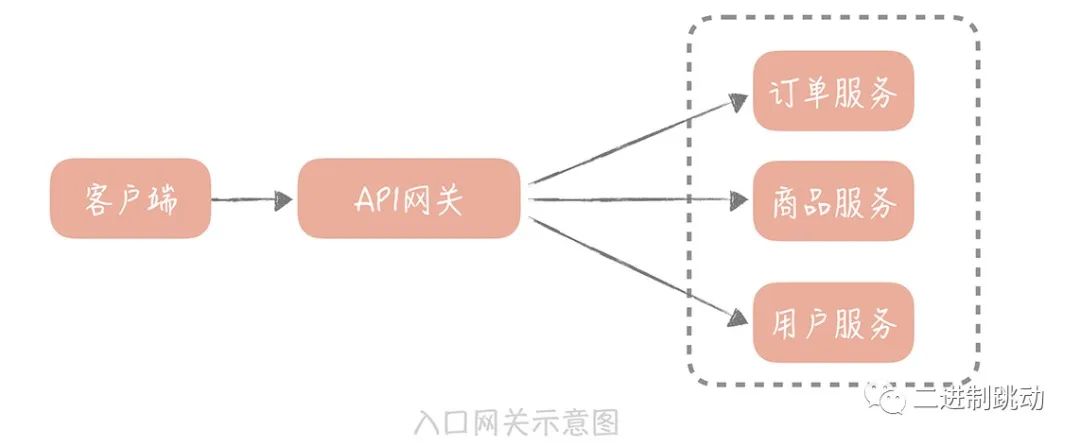

An API gateway is not a single open source component but an architectural pattern. It gathers functions shared by many services and deploys them as an independent layer to address service governance. You can think of it as the front door of the system, responsible for managing system traffic in one place. In my view, API gateways fall into two main types: ingress gateways and egress gateways.

- Ingress gateway: The ingress gateway sits at the entrance of the system and handles external requests entering it. It performs tasks such as routing requests to the correct service, authentication, authorization, rate limiting, and request and response transformation. An ingress gateway controls what the system exposes to the outside world and presents a consistent interface.

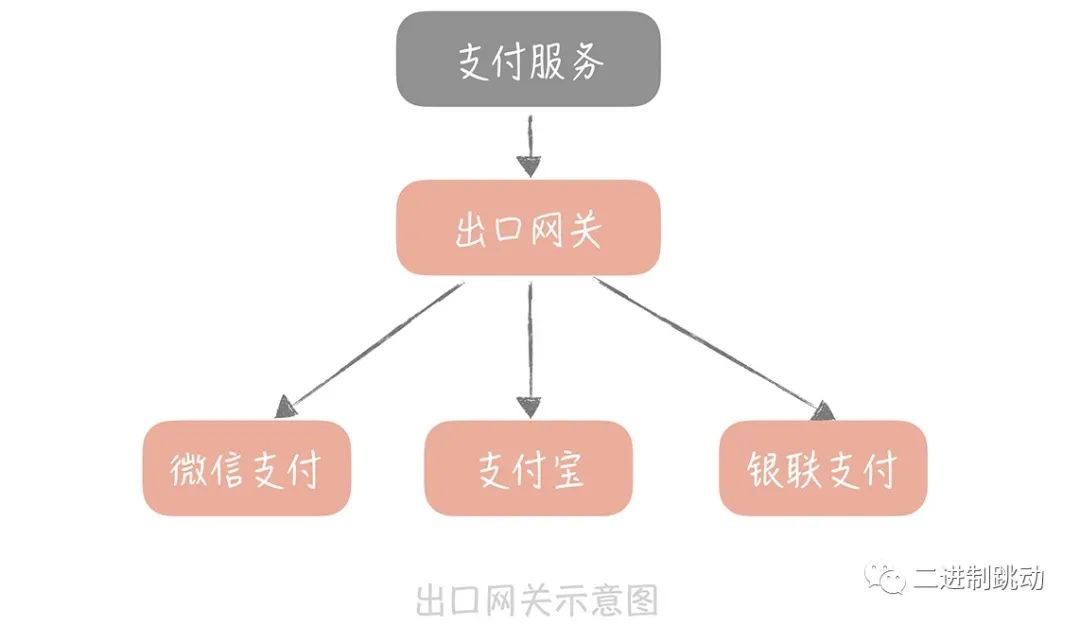

- Egress gateway: The egress gateway sits inside the system and handles calls from internal services to external systems. It performs tasks such as load balancing, caching, retries, and error handling to keep communication between internal services and external systems reliable and fast. Egress gateways are often used to isolate internal services from external systems while presenting a consistent interface.

Together, these two types of gateway manage the boundaries of the system and address traffic control, security, and performance concerns, which helps keep the system stable and scalable.

The ingress gateway is the type we use most often. It is deployed between the load balancer and the application servers and has several main functions.

- It gives clients a single entry address. The gateway dynamically routes each request to the appropriate business service and performs any necessary protocol conversion. In your system, different microservices may expose different protocols: some are HTTP services, some have been converted to RPC services, and some may still be Web services. The gateway hides the deployment addresses and protocol details of these services from the client, which only needs to talk to the gateway instead of to each service directly, so the overall calling process is simpler.

- It can host service governance policies, such as circuit breaking, degradation, rate limiting, and traffic splitting.

- It can handle client authentication and authorization.

- It can enforce blacklists and whitelists on dimensions such as device ID, user IP, and user ID.

- It can handle logging, such as recording access logs for HTTP requests. The requestId that identifies a request in a distributed tracing system can also be generated at the gateway.
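As a rough illustration of the blacklist and rate limiting functions described above, the sketch below shows a per-device guard that a gateway could run before forwarding a request. It is a minimal sketch and not taken from any particular gateway: the class name `DeviceGuard`, the fixed-window algorithm, and the injectable clock are all assumptions made for this example.

```java
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;

/** Hypothetical gateway-side guard: device blacklist plus a fixed-window limit per device ID. */
final class DeviceGuard {
    /** Immutable counter for one device within one time window. */
    private static final class Window {
        final long start;
        final int count;
        Window(long start, int count) { this.start = start; this.count = count; }
    }

    private final Set<String> blacklist = ConcurrentHashMap.newKeySet();
    // Note: entries are never evicted here; a real gateway would need to expire idle devices.
    private final Map<String, Window> windows = new ConcurrentHashMap<>();
    private final int maxPerWindow;
    private final long windowMillis;
    private final LongSupplier clock; // injectable time source (assumption, eases testing)

    DeviceGuard(int maxPerWindow, long windowMillis, LongSupplier clock) {
        this.maxPerWindow = maxPerWindow;
        this.windowMillis = windowMillis;
        this.clock = clock;
    }

    /** Adds a device to the blacklist; all of its later requests are refused. */
    void block(String deviceId) { blacklist.add(deviceId); }

    /** Returns true if the request may be forwarded to the backend service. */
    boolean allow(String deviceId) {
        if (blacklist.contains(deviceId)) return false;
        long now = clock.getAsLong();
        // Atomically start a new window or increment the counter of the current one.
        Window w = windows.compute(deviceId, (id, old) -> {
            if (old == null || now - old.start >= windowMillis) return new Window(now, 1);
            return new Window(old.start, old.count + 1);
        });
        return w.count <= maxPerWindow;
    }
}
```

Because the guard runs in the gateway, the search, user, and product services no longer each need their own copy of the policy, whatever language they are written in.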

The function of the egress gateway is relatively simple, but it also plays an important role in system development. Our applications often rely on external third-party systems, such as third-party account login or payment services. In this case, we can deploy an egress gateway between the application server and the external system to uniformly handle tasks such as authentication, authorization, auditing, and access control for external APIs. This can help us better manage and protect communications with external systems and ensure system security and compliance.

How to implement an API gateway

With the role of the API gateway clear, the next step is to look at the key points to watch when implementing one, and at some common open source API gateways. This will help you make an informed decision whether you plan to build your own gateway or adopt an existing open source implementation.

When developing an API gateway, the first thing to consider is performance, because the ingress gateway handles all traffic from clients. For example, if a business service takes 10 milliseconds to process a request and the gateway adds 1 millisecond, the response time of every interface grows by 10%, which is a significant cost. The key to gateway performance usually lies in the choice of I/O model. The following example illustrates how much the I/O model matters.

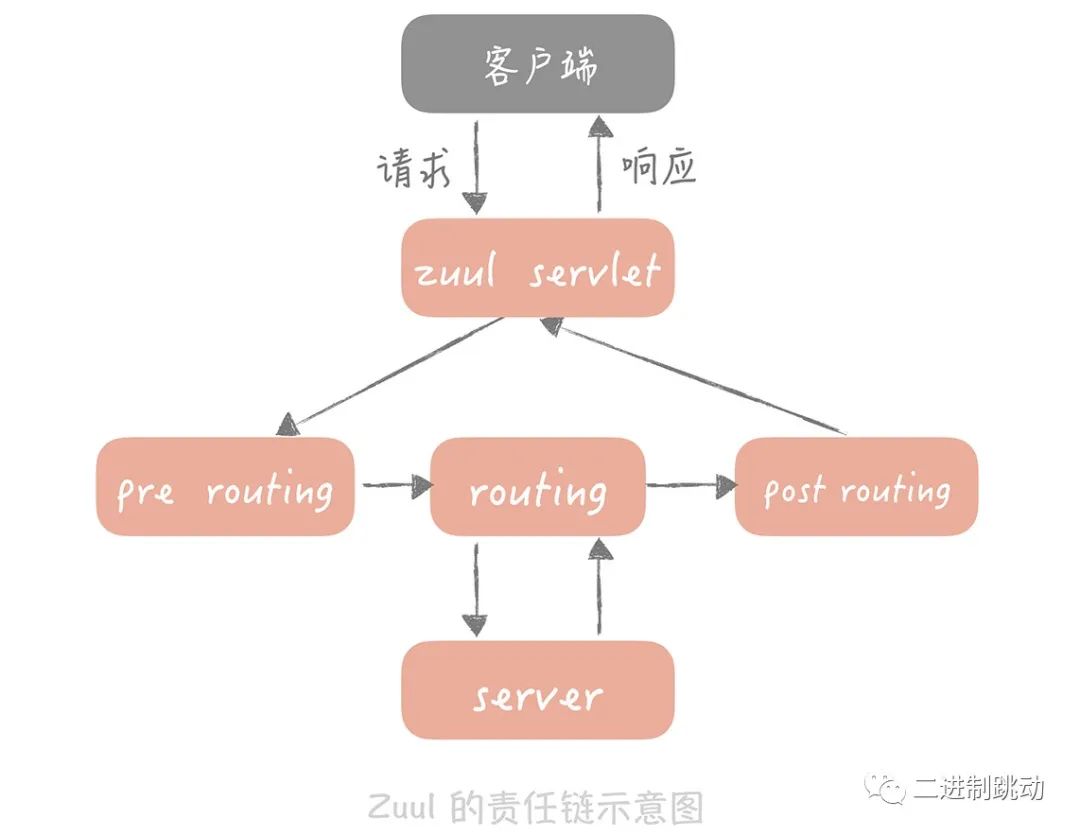

Version 1.0 of Zuul, Netflix's open source API gateway, uses a synchronous blocking I/O model. The whole system can be regarded as a Servlet: it receives the user's request, runs the configured authentication, protocol conversion, and other logic in the gateway, and finally calls the back-end service to obtain the data and return it to the user.

In Zuul 2.0, the Netflix team made significant improvements. They replaced the Servlet with a Netty server that uses an I/O multiplexing model to handle incoming requests. They also replaced the synchronous blocking calls to back-end services with non-blocking calls made through a Netty client. After these changes, the Netflix team's tests showed a performance increase of about 20%.

The functions of an API gateway fall into two groups: those that can be predefined and configured, such as blacklists, whitelists, and dynamic routing of interfaces, and those that must be defined and implemented for a specific business. Extensibility is therefore very important in gateway design. You should be able to add custom logic to the execution path at any time and remove logic that is no longer needed, which is the so-called hot-swap capability. This flexibility lets the gateway's behavior be tailored to different business needs.

Typically, we define each operation as a filter and then use the chain of responsibility pattern to string the filters together. The pattern lets us organize the filters dynamically and keeps them decoupled, so we can add or remove a filter at any time without affecting the others. We can then build filter chains for specific needs without worrying about filters interfering with one another.

Zuul uses the chain of responsibility pattern to handle requests. Zuul 1 divides filters into three types: pre-routing filters (pre routing filter), routing filters (routing filter), and post-routing filters (after routing filter). Each filter declares its execution order, and when a filter is registered it is inserted into the filter chain at the corresponding position. When Zuul receives a request, it runs the filters in the chain in that order. This makes it easy to apply different kinds of operations while a request is being processed.
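The filter chain idea can be sketched in a few lines of Java. This is a simplified illustration and not Zuul's actual API: `GatewayContext`, `GatewayFilter`, `FilterType`, `FilterChain`, and `SimpleFilter` are hypothetical names, and the rule that a rejected request skips the remaining pre and routing filters but still runs post filters is an assumption chosen for the example.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

/** Hypothetical per-request state passed along the chain. */
final class GatewayContext {
    final List<String> trace = new ArrayList<>(); // records which filters ran
    boolean rejected;                             // set by a filter to stop further processing
}

enum FilterType { PRE, ROUTE, POST }

interface GatewayFilter {
    FilterType type();
    int order(); // lower values run first within the same type
    void run(GatewayContext ctx);
}

/** Convenience filter built from a lambda. */
final class SimpleFilter implements GatewayFilter {
    private final FilterType type;
    private final int order;
    private final Consumer<GatewayContext> action;

    SimpleFilter(FilterType type, int order, Consumer<GatewayContext> action) {
        this.type = type;
        this.order = order;
        this.action = action;
    }
    @Override public FilterType type() { return type; }
    @Override public int order() { return order; }
    @Override public void run(GatewayContext ctx) { action.accept(ctx); }
}

final class FilterChain {
    // Copy-on-write list so filters can be added or removed while requests are in flight.
    private final List<GatewayFilter> filters = new CopyOnWriteArrayList<>();

    void register(GatewayFilter f) { filters.add(f); }
    void unregister(GatewayFilter f) { filters.remove(f); }

    /** Runs PRE, then ROUTE, then POST filters, each group sorted by order(). */
    void execute(GatewayContext ctx) {
        for (FilterType type : FilterType.values()) {
            List<GatewayFilter> stage = new ArrayList<>();
            for (GatewayFilter f : filters) {
                if (f.type() == type) stage.add(f);
            }
            stage.sort(Comparator.comparingInt(GatewayFilter::order));
            for (GatewayFilter f : stage) {
                // A rejected request skips remaining PRE/ROUTE filters but still reaches POST.
                if (ctx.rejected && type != FilterType.POST) break;
                f.run(ctx);
            }
        }
    }
}
```

Registering or unregistering a filter changes the chain without touching any other filter, which is the decoupling the pattern is meant to provide.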

One more point needs special attention. To process requests in parallel, a gateway usually uses a thread pool to handle multiple requests at the same time. This brings a problem of its own: if the product service responds slowly, the threads calling it stay blocked and cannot be released, and over time every thread in the pool may end up occupied by the product service, which affects all other services. We therefore need thread isolation or protection strategies for different services. In my view, there are two main approaches:

- If you do not have too many back-end services, you can use a separate thread pool for each service, so that a failure in the product service does not affect the payment service or the user service.

- Within a single thread pool, you can protect threads per service or even per interface. For example, if the pool has a maximum of 1000 threads, you can set a maximum quota for each service.

Normally a service call completes very quickly, usually within milliseconds. Once a thread finishes, it is released back to the pool for subsequent requests, so the number of threads executing at any moment stays low and does not grow significantly. Under these conditions the thread quota of a service or interface does not get in the way of normal execution.

Once a failure occurs and an interface or service slows down, however, the number of threads it occupies can rise sharply. Thanks to the quota, this increase is capped and does not harm other interfaces or services. The approach isolates the fault and keeps it from spreading through the system.

You can also combine these two methods in practical applications, such as using different thread pools for different services and setting quotas for different interfaces within the thread pool.
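The quota approach can be sketched with a shared pool and one `Semaphore` per service. This is a minimal sketch under stated assumptions: `QuotaExecutor` and its methods are hypothetical names, and the quota here counts tasks that are queued or running for a service, which only approximates the number of threads it occupies. Using one pool per service instead would simply mean holding a separate `ExecutorService` for each service name.

```java
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.Semaphore;

/** Hypothetical wrapper that caps how much of a shared pool each service may occupy. */
final class QuotaExecutor {
    private final ExecutorService pool;
    private final Map<String, Semaphore> quotas = new ConcurrentHashMap<>();
    private final int defaultQuota;

    QuotaExecutor(ExecutorService pool, int defaultQuota) {
        this.pool = pool;
        this.defaultQuota = defaultQuota;
    }

    /** Sets the maximum number of in-flight calls for one service. */
    void setQuota(String service, int maxInFlight) {
        quotas.put(service, new Semaphore(maxInFlight));
    }

    /** Submits a backend call, failing fast when the service has used up its quota. */
    <T> Future<T> submit(String service, Callable<T> call) {
        Semaphore permits = quotas.computeIfAbsent(service, s -> new Semaphore(defaultQuota));
        if (!permits.tryAcquire()) {
            // Reject immediately instead of letting a slow service take more threads.
            throw new RejectedExecutionException("quota exhausted for " + service);
        }
        try {
            return pool.submit(() -> {
                try {
                    return call.call();
                } finally {
                    permits.release(); // give the slot back when the call finishes
                }
            });
        } catch (RejectedExecutionException e) {
            permits.release(); // the pool itself refused the task
            throw e;
        }
    }
}
```

With this in place, a slow product service can hold at most its own quota of slots, so calls to the payment and user services still find free threads in the pool.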

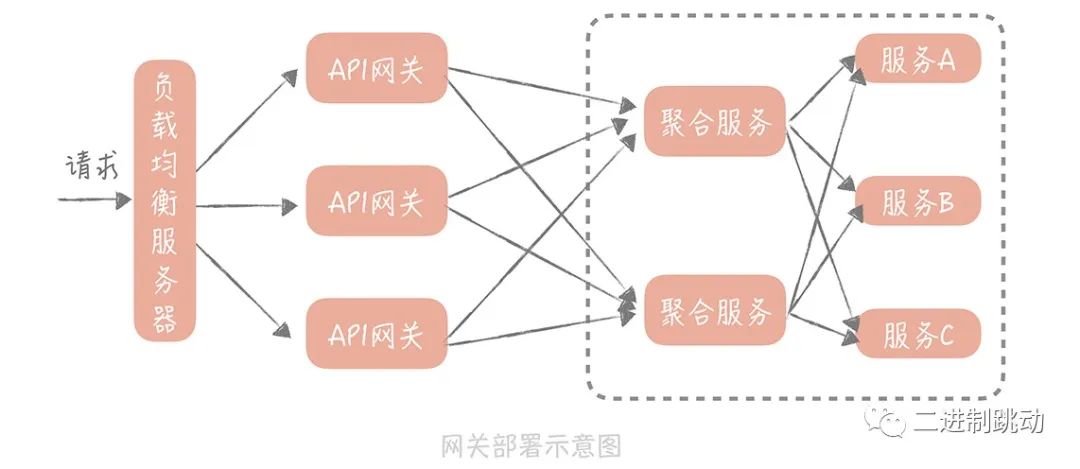

How to introduce an API gateway into your system

Currently, our e-commerce system has undergone a service-oriented transformation, in which a thin Web layer was introduced between the service layer and the client. This Web layer has two main tasks: First, it is responsible for aggregating service layer interface data. For example, the interface of the product details page may aggregate data from multiple service interfaces, including product information, user information, store information, and user reviews. Secondly, the web layer needs to convert HTTP requests into RPC requests and impose some restrictions on the front-end traffic, such as adding a blacklist of device IDs for certain requests.

Therefore, when carrying out the transformation, we can first separate the API gateway from the Web layer and move protocol conversion, rate limiting, blacklists, whitelists, and similar functions into it, forming an independent ingress gateway layer. For the aggregation of service interface data, there are usually two solutions:

- Create a separate set of gateways responsible for aggregation and timeout control. We usually call them traffic gateways.

- Extract the interface aggregation operations into a separate service layer. The service layer is then roughly divided into an atomic service layer and an aggregate service layer.

In my opinion, interface data aggregation belongs to business operations. Rather than implementing it in a general gateway layer, it is better to implement it in a service layer that is closer to the business. Therefore, I prefer the second option. This approach helps to better divide responsibilities and make the system more modular and maintainable.
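To make the aggregate service layer more concrete, here is a minimal sketch of a product detail aggregator that calls three atomic services in parallel. Everything in it is an assumption for illustration: the names `ProductDetail` and `ProductDetailAggregator`, the use of `LongFunction<String>` to stand in for RPC clients, and the choice to treat reviews as optional (falling back to an empty string on timeout) while product and store data are mandatory. It relies on `orTimeout` and `completeOnTimeout`, which require Java 9 or later.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.TimeUnit;
import java.util.function.LongFunction;

/** Hypothetical aggregated view returned to the client. */
final class ProductDetail {
    final String product;
    final String store;
    final String reviews;

    ProductDetail(String product, String store, String reviews) {
        this.product = product;
        this.store = store;
        this.reviews = reviews;
    }
}

/** Hypothetical aggregate service; each LongFunction stands in for an atomic-service client. */
final class ProductDetailAggregator {
    private final LongFunction<String> productService;
    private final LongFunction<String> storeService;
    private final LongFunction<String> reviewService;
    private final Executor executor;
    private final long timeoutMillis;

    ProductDetailAggregator(LongFunction<String> productService,
                            LongFunction<String> storeService,
                            LongFunction<String> reviewService,
                            Executor executor,
                            long timeoutMillis) {
        this.productService = productService;
        this.storeService = storeService;
        this.reviewService = reviewService;
        this.executor = executor;
        this.timeoutMillis = timeoutMillis;
    }

    /** Calls the three services concurrently and merges their results. */
    ProductDetail load(long productId) {
        // Mandatory data: the whole call fails if these do not answer in time.
        CompletableFuture<String> product = CompletableFuture
                .supplyAsync(() -> productService.apply(productId), executor)
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS);
        CompletableFuture<String> store = CompletableFuture
                .supplyAsync(() -> storeService.apply(productId), executor)
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS);
        // Optional data: degrade to an empty value instead of failing the page.
        CompletableFuture<String> reviews = CompletableFuture
                .supplyAsync(() -> reviewService.apply(productId), executor)
                .completeOnTimeout("", timeoutMillis, TimeUnit.MILLISECONDS);

        return product
                .thenCombine(store, (p, s) -> new String[] {p, s})
                .thenCombine(reviews, (ps, r) -> new ProductDetail(ps[0], ps[1], r))
                .join();
    }
}
```

Deciding which fields are mandatory and which may degrade is a business decision, which is one reason this logic fits better in a service layer than in a general-purpose gateway.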

At the same time, we can deploy an egress gateway between our system and the third-party payment and login services. Originally, the payment service itself encrypted and signed the data required by the third-party payment interface and then called that interface to complete the payment request. Now these encryption and signing operations move into the egress gateway, so the payment service only needs to call the egress gateway's unified payment interface.
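The signing step that moves into the egress gateway might look like the sketch below. It is only an illustration: real payment providers each define their own signing and encryption rules, so the class name `PaymentRequestSigner`, the canonical `key=value&key=value` form with keys sorted alphabetically, and the choice of HMAC-SHA256 are all assumptions, and encryption is left out entirely.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical signer living in the egress gateway, so services never see the secret. */
final class PaymentRequestSigner {
    private static final String ALGORITHM = "HmacSHA256";
    private final byte[] secret;

    PaymentRequestSigner(String secret) {
        this.secret = secret.getBytes(StandardCharsets.UTF_8);
    }

    /** Builds a deterministic string: parameters sorted by key, joined as k=v pairs with '&'. */
    String canonicalize(Map<String, String> params) {
        return new TreeMap<String, String>(params).entrySet().stream()
                .map(e -> e.getKey() + "=" + e.getValue())
                .collect(Collectors.joining("&"));
    }

    /** Returns the lowercase hex HMAC-SHA256 of the canonical parameter string. */
    String sign(Map<String, String> params) {
        try {
            Mac mac = Mac.getInstance(ALGORITHM);
            mac.init(new SecretKeySpec(secret, ALGORITHM));
            byte[] digest = mac.doFinal(canonicalize(params).getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("HMAC-SHA256 unavailable or key invalid", e);
        }
    }
}
```

Keeping the secret and the signing rules in one place means that a change on the provider's side touches only the egress gateway, not every service that triggers a payment.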

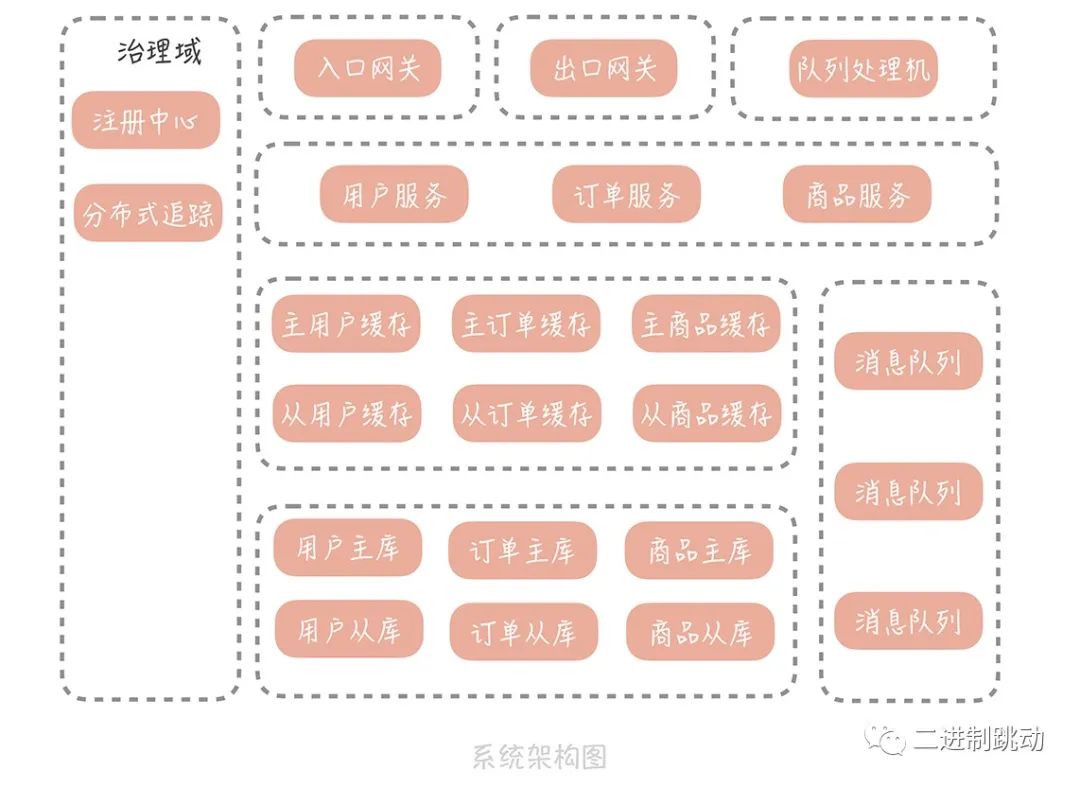

After introducing the API gateway, our system architecture became as follows:

[Figure: system architecture after introducing the API gateway]

Key points to emphasize:

- API gateways can be divided into two categories: ingress gateways and egress gateways. The ingress gateway has multiple functions, including isolating clients from microservices, providing protocol conversion, implementing security policies, performing authentication, rate limiting, and circuit breaking. The main function of the egress gateway is to provide a unified exit for calls to third-party services. It can perform unified authentication, authorization, auditing, and access control to ensure the security and compliance of communications with external systems.

- The keys to implementing an API gateway are performance and extensibility. To improve performance, the gateway can use an I/O multiplexing model and a thread pool to process requests concurrently. To improve extensibility, it can use the chain of responsibility pattern to organize filters so that they can easily be added, removed, or modified.

- The thread pool in the API gateway can isolate and protect different interfaces or services, which improves the availability of the gateway.

- The API gateway can replace the Web layer in the original system: protocol conversion, authentication, rate limiting, and similar functions move from the Web layer into the gateway, and the service aggregation logic sinks down to the service layer.

An API gateway not only gives clients a convenient way to call APIs, it also factors shared service governance functions out of individual services so they can be reused. There is some cost in performance, but with a mature open source API gateway component the loss is usually acceptable. When your microservice system grows more complex, consider using an API gateway as the facade of the entire system to simplify the architecture and improve maintainability.