The interviewer asked: how does TCP guarantee reliability?

We know that TCP is reliable. Our previous article covered the three-way handshake that opens a connection, the four-way handshake ("four waves") that closes it, and the data transfer in between. All of these rest on two mechanisms: sequence numbers and acknowledgments. To make these mechanisms reliable in practice, TCP needs several supporting mechanisms: retransmission, the sliding window, flow control, and congestion control.

1. Retransmission mechanism

TCP's reliability rests on sequence numbers and acknowledgments: when one end sends data, the other end replies with an ACK packet confirming that the data arrived. Two things can go wrong in this exchange:

- The data packet never reaches the receiver because it was lost or delayed;

- The ACK packet never reaches the sender because it was lost or delayed.

In the first case the data never arrived at the receiver. In the second case the data did arrive, but the ACK confirming it was lost on its way back to the sender.

TCP uses retransmission to deal with packet loss and duplicate transmission. Retransmission in TCP comes in four forms: timeout retransmission, fast retransmission, SACK, and D-SACK.

1. Timeout retransmission

As the name suggests, the sender resends the data if no ACK arrives within a certain time, called the retransmission timeout (RTO). Choosing the RTO is difficult because it must be neither too long nor too short: it should be slightly longer than the packet's round-trip time (RTT). But the RTT is not fixed; it fluctuates with network conditions. TCP therefore keeps a weighted average of recent RTT samples together with a measure of how much the RTT varies, and derives the RTO from these two values using fixed coefficients.

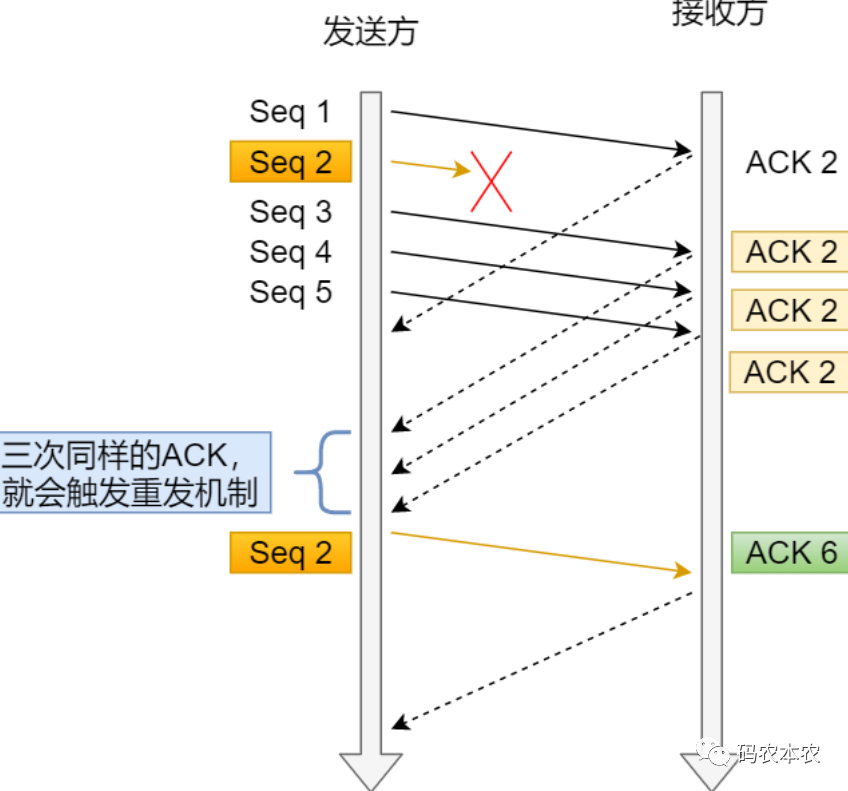
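To make "weighted average plus variation" concrete, here is a minimal sketch of the estimator standardized in RFC 6298: a smoothed RTT (SRTT), an RTT variation (RTTVAR), gains of 1/8 and 1/4, and RTO = SRTT + 4 * RTTVAR. The class and the `min_rto` parameter are illustrative; the RFC's clock-granularity term is omitted, and real stacks differ in details such as the minimum RTO.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RtoEstimator:
    """Sketch of the RFC 6298 RTO calculation; all times are in seconds."""

    alpha: float = 1 / 8   # weight of a new sample in the smoothed RTT (SRTT)
    beta: float = 1 / 4    # weight of a new sample in the RTT variation (RTTVAR)
    k: float = 4.0         # how many variations of headroom the RTO adds
    min_rto: float = 1.0   # RFC 6298 floor; Linux uses a lower floor (200 ms)
    srtt: Optional[float] = None
    rttvar: Optional[float] = None

    def update(self, rtt: float) -> float:
        """Feed one RTT measurement and return the new RTO."""
        if self.srtt is None or self.rttvar is None:
            # First measurement: initialise from the sample itself.
            self.srtt = rtt
            self.rttvar = rtt / 2
        else:
            # RTTVAR is updated first, using the previous SRTT.
            self.rttvar = (1 - self.beta) * self.rttvar + self.beta * abs(self.srtt - rtt)
            self.srtt = (1 - self.alpha) * self.srtt + self.alpha * rtt
        return max(self.min_rto, self.srtt + self.k * self.rttvar)
```

With a steady RTT, RTTVAR decays and the RTO settles just above the RTT; a sudden jump in RTT raises RTTVAR and therefore the RTO, which is exactly the "a little longer than the RTT" behavior described above.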

2. Fast retransmission

Timeout retransmission works, but for many lost packets, waiting until the timer actually expires before resending is too slow. TCP, including the Linux implementation, therefore also has a second mechanism called fast retransmission.

The principle: suppose the sender sends seq2 but gets no ACK for it, and then sends seq3, seq4, seq5, and seq6 in succession. If the ACKs that come back for these later packets are all ack2, the receiver is saying that it has received everything before seq2 but is still waiting for seq2. The 2 here is a sequence number: the ACK number is the next sequence number the receiver expects, i.e. the first piece of data it is missing, so the sender should resend seq2. When the sender receives three duplicate ack2s, TCP concludes that seq2 must be resent and does so immediately instead of waiting for the timeout. This removes the long wait of timeout retransmission, but it raises a new problem: the sender does not know whether to resend only seq2 or also seq3, seq4, seq5, and seq6. Different versions of Linux handle this differently.

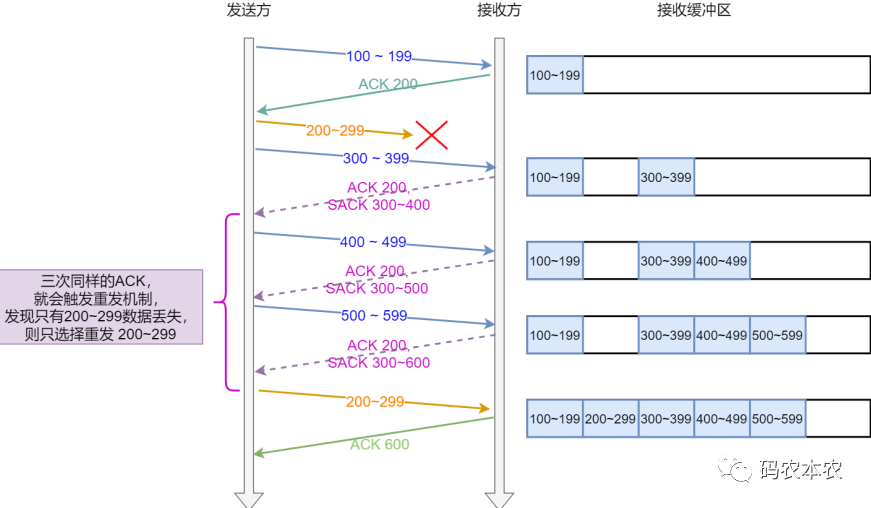
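The duplicate-ACK rule can be sketched as a small counter. As in the example, sequence numbers here count packets rather than bytes; the class name and the `DUP_ACK_THRESHOLD` constant are illustrative, not a real stack's API.

```python
from typing import Optional

DUP_ACK_THRESHOLD = 3  # three duplicate ACKs trigger fast retransmission


class FastRetransmitDetector:
    """Sketch: count duplicate cumulative ACKs and decide when to resend."""

    def __init__(self) -> None:
        self.last_ack: Optional[int] = None
        self.dup_count = 0

    def on_ack(self, ack: int) -> Optional[int]:
        """Return the sequence number to retransmit, or None."""
        if ack == self.last_ack:
            self.dup_count += 1
            if self.dup_count == DUP_ACK_THRESHOLD:
                # The receiver keeps asking for `ack`: treat it as lost.
                return ack
        else:
            # The ACK moved forward: new data was acknowledged, reset the count.
            self.last_ack = ack
            self.dup_count = 0
        return None
```

For the scenario above, the first ack2 (acknowledging seq1) is normal; the ack2s triggered by seq3, seq4, and seq5 are the three duplicates, so the detector asks for seq2 when the third duplicate arrives and stays quiet for the ack2 triggered by seq6.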

3. SACK

How is this problem solved? By introducing SACK (selective acknowledgment), which is carried in the options field of the TCP header. In the example above, each time the receiver gets a packet it replies with an ACK plus a SACK block describing data it has already received beyond the gap. Everything between the two values is what the receiver is currently missing: ack <= x < sack, so the missing sequence numbers run from ack to sack-1. The sender can therefore see exactly which data the receiver lacks and only needs to resend that part.

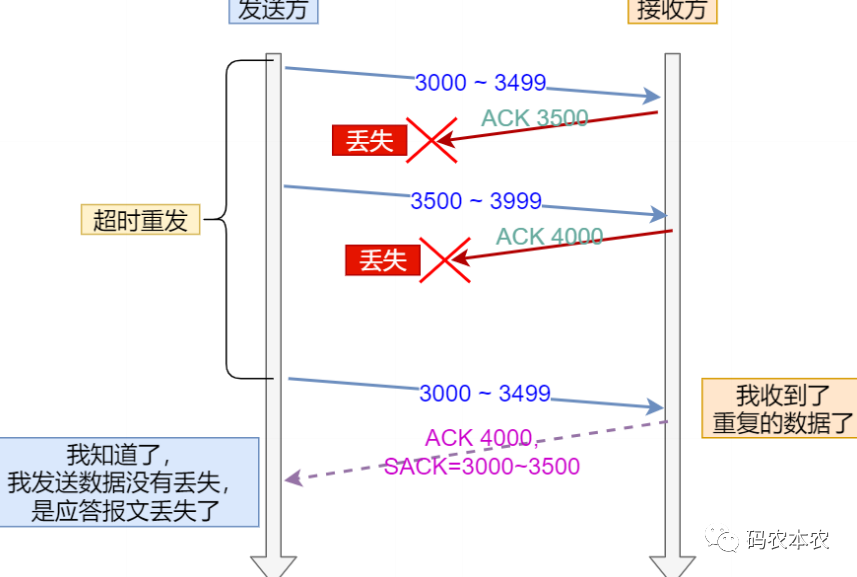
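The rule ack <= x < sack can be sketched for the general case where the receiver reports several SACK blocks. This assumes each block is a half-open range [left, right) as in the real option, but uses plain integers with no 32-bit wraparound; `missing_ranges` is a hypothetical helper name.

```python
from typing import List, Tuple

Block = Tuple[int, int]  # [left, right): right edge is one past the last received byte


def missing_ranges(ack: int, sack_blocks: List[Block]) -> List[Block]:
    """Return the holes the sender should retransmit, as [start, end) ranges."""
    holes: List[Block] = []
    next_expected = ack  # everything below the cumulative ACK has arrived
    for left, right in sorted(sack_blocks):
        if left > next_expected:
            # Nothing between next_expected and this block has arrived.
            holes.append((next_expected, left))
        next_expected = max(next_expected, right)
    return holes
```

For a single block this reduces to the rule in the text: with ack = 200 and a SACK block starting at 300, the hole is 200 through 299.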

4. D-SACK

Another case: the data is not lost but delayed in the network. The sender retransmits it, and then the delayed original also arrives, so the same data is delivered twice. TCP handles this with D-SACK (duplicate SACK), which reuses the SACK option to report duplicate data. The receiver still replies with an ACK plus a SACK, but here the SACK gives the sequence numbers of the duplicate data, while the ACK gives the next sequence number the receiver expects. From this the sender learns that the data had already been delivered.

The two cases are easy to tell apart by comparing ack and sack. If ack is larger than sack, the SACK refers to data the receiver has already acknowledged, so the data was sent twice. If ack is smaller than sack, the gap between them is missing data.
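The comparison can be sketched as follows. Besides "first block lies below the ACK", RFC 2883 also treats a first block that lies inside the second block as a D-SACK, and the sketch includes that check. Blocks are half-open [left, right) ranges with no sequence-number wraparound, and `classify_sack` is a hypothetical helper name.

```python
from typing import List, Tuple

Block = Tuple[int, int]  # [left, right): right edge is one past the last byte


def classify_sack(ack: int, blocks: List[Block]) -> str:
    """Decide whether the first SACK block reports duplicate or missing data."""
    left, right = blocks[0]
    if right <= ack:
        # Block lies below the cumulative ACK: it was already received, a duplicate.
        return "duplicate"
    if len(blocks) > 1 and blocks[1][0] <= left and right <= blocks[1][1]:
        # Block sits inside the second block: also a duplicate report.
        return "duplicate"
    # Ordinary SACK: the bytes in [ack, left) are missing.
    return "missing"
```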

2. Sliding window

TCP uses acknowledgments to ensure reliability. In the simplest scheme, the sender sends one piece of data, the receiver replies with an ACK, and only after that ACK arrives does the sender send the next piece.

This is very inefficient, because the sender sits idle for a full round trip after every packet. To be both reliable and efficient, TCP introduces the sliding window and flow control.

With a sliding window, the sender and the receiver each set aside buffer space for every socket. The sender may only send data that fits into the free space of the receiver's window.

Principle: each time the receiver replies to the sender, it also reports a window size, the maximum number of bytes it can currently accept. Having received this window size, the sender can send several data packets in a row without waiting for ACKs, as long as the data stays within the window.

The sender's sliding window has the following areas:

- Sent but not yet acknowledged

- Not yet sent, but allowed to send

When sent data is acknowledged, the window slides to the right.

The sliding window on the receiving end has the following areas:

- Received but not yet read by the application

- Not yet received

When the application reads the received data, the window slides to the right.
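The receiver side can be sketched as a buffer whose free space is the window it advertises. The class and method names are hypothetical, and the sketch ignores packet boundaries and out-of-order data.

```python
class ReceiveBuffer:
    """Sketch of a receiver's socket buffer; the advertised window is its free space."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.unread = 0  # received but not yet read by the application

    def window(self) -> int:
        """Window size to report to the sender."""
        return self.capacity - self.unread

    def receive(self, nbytes: int) -> int:
        """Accept data up to the current window; return how many bytes fit."""
        accepted = min(nbytes, self.window())
        self.unread += accepted
        return accepted

    def app_read(self, nbytes: int) -> int:
        """The application consumes data, which slides the window right."""
        taken = min(nbytes, self.unread)
        self.unread -= taken
        return taken
```

Filling a 100-byte buffer with 60 bytes leaves a window of 40; once the application reads 30 bytes, the window grows again.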

Within the window, the sender sends several packets back to back. If the ACK for one of them is lost, the data does not necessarily need to be resent: ACKs are cumulative, so a later ACK confirms all data before it, and the sender can tell from the next ACK whether the data behind the lost ACK needs retransmission. In other words, with a sliding window, a lost ACK does not necessarily trigger a retransmission.

The window size of the sender is determined by the receiver.
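The sender side, including the lost-ACK case, can be sketched with two pointers over the byte stream. The names `snd_una` and `snd_nxt` follow the usual TCP specification terms; the class itself is a hypothetical illustration with no retransmission timer.

```python
class SendWindow:
    """Sketch of a sender-side sliding window over a byte stream."""

    def __init__(self, rwnd: int) -> None:
        self.rwnd = rwnd    # window size advertised by the receiver
        self.snd_una = 0    # oldest byte sent but not yet acknowledged
        self.snd_nxt = 0    # next byte to be sent

    def usable(self) -> int:
        """Bytes that can still be sent without overrunning the window."""
        return max(0, self.snd_una + self.rwnd - self.snd_nxt)

    def send(self, nbytes: int) -> int:
        """Send as much of nbytes as the window allows; return bytes sent."""
        sent = min(nbytes, self.usable())
        self.snd_nxt += sent
        return sent

    def on_ack(self, ack: int, rwnd: int) -> None:
        """ACKs are cumulative: `ack` confirms every byte before it."""
        if ack > self.snd_una:
            self.snd_una = ack  # acknowledged bytes leave the window: it slides right
        self.rwnd = rwnd        # the receiver decides the window size
```

With a 300-byte window, the sender can send three 100-byte packets and then must stop. If the ACK for the first packet (ack 100) is lost but ack 200 arrives, `snd_una` jumps straight to 200: the first packet is confirmed without any retransmission.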

3. Flow control

The sliding window lets the sender send multiple packets at once, which fixes the inefficiency of handling one packet at a time. But the receiver's processing capacity is limited, so the sender cannot keep sending data indefinitely: if traffic arrives faster than the receiver can process it, the receiver has no choice but to discard it. TCP therefore needs a mechanism to control the flow of data.

TCP's flow control is also built on the sliding window. The receiver determines the window size and advertises it to the sender, and the sender sends data only within that window, which ensures that everything sent can be received and processed by the receiver.

However, implementing flow control on top of the sliding window raises the following issues:

The sliding window is part of the socket's buffer, and that buffer does not have a fixed size, so a change in the buffer can put the two ends' views of the window out of sync. For example, the receiver tells the sender that the window is 100 bytes, but the operating system then shrinks the socket buffer to 50 bytes. If more than 50 bytes arrive, the excess is dropped, resulting in packet loss.

The other issue is silly window syndrome. Suppose the receiver processes data slowly, its window drops to 0, and the window is closed. After a while, 10 bytes of buffer space free up, and the receiver advertises window = 10 to the sender, which then sends 10 bytes of data. But no matter how little data is sent, every packet carries a TCP header of at least 20 bytes plus an IP header of at least 20 bytes: 40 bytes of overhead to carry only 10 bytes of data. This is very wasteful and also consumes bandwidth.

TCP has to deal with both issues when building flow control on the sliding window. How does it solve them?

TCP does not allow the buffer and the window to shrink at the same time. To shrink the buffer, the receiver must first reduce the advertised window, and only shrink the buffer after some time has passed.

To avoid silly window syndrome, the receiver must avoid advertising small windows to the sender, and the sender must avoid sending small packets.

TCP specifies that when the receiver's available window is smaller than min(MSS, half of the buffer), the receiver closes the window, i.e. advertises a window of 0. This guarantees that the receiver never advertises a small window to the sender.
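The receiver's rule fits in one function. The function name and parameters are hypothetical; `free_space` is the free buffer space in bytes.

```python
def advertised_window(free_space: int, buffer_size: int, mss: int) -> int:
    """Receiver-side silly window avoidance: never advertise a tiny window."""
    if free_space < min(mss, buffer_size // 2):
        return 0  # too small to be worth advertising: keep the window closed
    return free_space
```

With a 4096-byte buffer and a 1460-byte MSS, 10 free bytes are reported as 0, while 2048 free bytes are reported as-is. For a small 1024-byte buffer, the threshold is half the buffer (512 bytes) rather than the MSS.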

The sender also has to avoid sending small data. It delays sending with the Nagle algorithm, which allows a send only when one of the following two conditions is met:

- Both the window size and the amount of data waiting to be sent are at least one MSS

- The ACK for all previously sent data has been received

As long as either condition holds, the sender can send. However, with this algorithm enabled, packets that are small by nature cannot be sent promptly, so whether to enable it should be considered carefully.
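The two Nagle conditions can be sketched as a single decision function. The function name and parameters are hypothetical: `pending` is the amount of data waiting to be sent and `unacked` the amount already sent but not yet acknowledged, both in bytes.

```python
def nagle_can_send(pending: int, window: int, mss: int, unacked: int) -> bool:
    """Nagle's rule: send a full segment, or any data once nothing is in flight."""
    if pending >= mss and window >= mss:
        return True  # a full-sized segment fits: send it now
    if unacked == 0:
        return pending > 0  # all earlier data is acknowledged: small data may go
    return False  # small data while data is in flight: wait and coalesce
```

In Python, an application that needs to send small messages immediately can disable the algorithm on a socket with `sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)`.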

TCP relies on the above mechanisms to implement flow control. Concretely, the receiver reports its window size to the sender in every reply. When the receiver is very busy, the window may shrink to 0, which tells the sender that the window is closed, and the sender stops sending data to the receiver. When the receiver's window opens again, the receiver proactively notifies the sender of the new window size.

However, this notification may be lost, leaving both sides waiting for each other, which is effectively a deadlock. TCP's solution is a timer (the persist timer). If the timer expires and the receiver still has not announced a window, the sender sends a probe message, typically once every 30-60 seconds, up to three times (this varies between implementations and can be configured). If the reply reports an open window, the sender resumes sending; if the window is still 0, the sender re-arms the timer and waits again. If the window never opens, the sender may send an RST packet to the peer to terminate the connection.
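The probing loop can be sketched as a function over the replies to successive probes. This follows the simplified model above (give up after three attempts that do not reopen the window); real implementations use backoff and their own limits, the timer wait itself is not simulated, and `zero_window_probe` and `max_probes` are hypothetical names.

```python
from typing import Iterable, Optional


def zero_window_probe(replies: Iterable[Optional[int]], max_probes: int = 3) -> str:
    """Sketch of the sender's reaction to a closed window.

    `replies` holds the window reported in answer to each probe
    (None means the probe got no reply).
    """
    failures = 0
    for window in replies:
        if window:  # a positive window: the receiver has room again
            return "send"
        failures += 1  # still 0 or no reply: re-arm the timer
        if failures >= max_probes:
            return "reset"  # give up and send RST to the peer
    return "waiting"  # timer still running
```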

4. Congestion Control

Sliding window and flow control ensure that when the receiving end is busy, data will not be discarded because there is nowhere to put it.

But the network is shared, and it can get very busy too. When the network is congested, packets are lost. TCP retransmits whenever an ACK does not arrive, which adds even more traffic to the congested network, causes more loss, and makes the whole network worse.

To deal with data loss caused by network congestion, TCP uses congestion control.

Congestion control is built on two concepts: the congestion window and the congestion control algorithm.

First, what counts as congestion? TCP treats any retransmission, whether triggered by a timeout or by fast retransmission, as a sign of congestion.

When TCP sends data, the amount it can send is limited by both the sliding window (the window advertised by the receiver) and the congestion window: the sender can send at most the smaller of the two.
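As a one-line sketch (hypothetical function name, sizes in bytes), the amount the sender may still put on the wire is the smaller window minus the data already in flight:

```python
def sendable_bytes(cwnd: int, rwnd: int, in_flight: int) -> int:
    """Bytes the sender may still send: limited by the smaller of the two windows."""
    return max(0, min(cwnd, rwnd) - in_flight)
```

A receiver advertising 1000 bytes does not help if the congestion window is only 400: with 100 bytes in flight, only 300 more may be sent.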

Congestion control algorithm

(1) Slow start:

For simplicity, assume that when the connection is established, the sliding window and the congestion window both start at 100 bytes. The explanations below are based on this assumption.

Slow start means that, starting from this initial value, the sender sends 100 bytes of data to the receiver (we ignore how many packets this is and count only total bytes). When all the ACKs have come back, the congestion window grows by 100 to 200. After the next round trip it grows by 200 to 400, then to 800, and so on. The congestion window grows exponentially, which is very fast, so there must be a limit. This limit is called the congestion threshold (the slow start threshold, ssthresh), often given as 65535 bytes by default. When the congestion window reaches this value, TCP enters the congestion avoidance phase.
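The doubling can be sketched per round trip, using the 100-byte starting value assumed above. This is the textbook per-RTT view (real stacks grow the window on each ACK); `slow_start` is a hypothetical name, and capping the window at the threshold is one simple choice.

```python
from typing import List


def slow_start(cwnd: int, ssthresh: int) -> List[int]:
    """Congestion window at the start of each round trip during slow start."""
    history = [cwnd]
    while cwnd < ssthresh:
        # Every acknowledged byte adds one byte to cwnd: it doubles per round trip.
        cwnd = min(cwnd * 2, ssthresh)
        history.append(cwnd)
    return history
```

Starting at 100 bytes with a threshold of 800, the window goes 100, 200, 400, 800 and slow start ends.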

(2) Congestion avoidance:

When the congestion window reaches the congestion threshold, TCP enters the congestion avoidance phase. Suppose the congestion window has reached 65535 bytes. After all 65535 bytes have been sent and all their ACKs have returned, the window grows by only one segment (100 bytes in our example) to 65635, then to 65735, and so on. Growth is no longer exponential but linear; put simply, it slows down. Even so, the congestion window keeps growing. So when does the growth stop? When a retransmission occurs.
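Both phases can be combined into one per-round-trip update, again using the article's assumption of 100-byte segments. `next_rtt_cwnd` and its `segment` parameter are hypothetical names for illustration.

```python
def next_rtt_cwnd(cwnd: int, ssthresh: int, segment: int = 100) -> int:
    """Congestion window after one loss-free round trip (sizes in bytes)."""
    if cwnd < ssthresh:
        return min(cwnd * 2, ssthresh)  # slow start: exponential growth
    return cwnd + segment  # congestion avoidance: one segment per round trip
```

Below the threshold the window doubles; at or above it, 65535 becomes 65635, then 65735.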

(3) Congestion occurs:

A retransmission means that congestion has occurred. There are two kinds of retransmission, timeout retransmission and fast retransmission, and TCP reacts differently to each. A timeout means the network is in really bad shape: TCP sets the congestion threshold to half of the current congestion window and resets the congestion window to 1 (one segment), entering slow start again. A fast retransmission means the network is not that bad: TCP sets the congestion window to half of its current value and sets the congestion threshold to that same value. Left at that, TCP would simply continue in congestion avoidance, but in this case it enters the fast recovery algorithm instead.
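The two reactions can be sketched as follows, with one segment taken as 100 bytes as in the running example. Following RFC 5681, the sketch also keeps the threshold at no less than two segments; the type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CongestionState:
    cwnd: int      # congestion window, bytes
    ssthresh: int  # congestion threshold, bytes


def on_congestion(state: CongestionState, event: str, segment: int = 100) -> CongestionState:
    """Reno-style reaction to a retransmission."""
    half = max(state.cwnd // 2, 2 * segment)  # RFC 5681 floor of two segments
    if event == "timeout":
        # Severe congestion: threshold halves, window restarts at one segment.
        return CongestionState(cwnd=segment, ssthresh=half)
    if event == "fast_retransmit":
        # Milder signal: halve the window; fast recovery takes over from here.
        return CongestionState(cwnd=half, ssthresh=half)
    raise ValueError(f"unknown event: {event}")
```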

(4) Fast recovery:

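Fast recovery sets the congestion window to the congestion threshold plus 3 and resends the lost data. For each further duplicate ACK received, the congestion window grows by 1, and the sender may send new data if the window allows it. When an ACK for new data arrives, the congestion window is set back to the threshold and TCP continues in the congestion avoidance phase.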
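These steps follow the classic Reno algorithm and can be sketched with window sizes counted in segments (the "+3" and "+1" above). The class name is hypothetical, and the actual retransmission and sending are left out.

```python
class RenoFastRecovery:
    """Sketch of Reno fast recovery; windows are counted in segments."""

    def __init__(self, cwnd: int) -> None:
        self.ssthresh = max(cwnd // 2, 2)
        # Three duplicate ACKs mean three segments have left the network.
        self.cwnd = self.ssthresh + 3
        self.in_recovery = True  # the lost segment is resent at this point

    def on_dup_ack(self) -> None:
        # Each further duplicate ACK means one more segment has left the network.
        if self.in_recovery:
            self.cwnd += 1

    def on_new_ack(self) -> None:
        # New data acknowledged: deflate the window, resume congestion avoidance.
        if self.in_recovery:
            self.cwnd = self.ssthresh
            self.in_recovery = False
```

Starting from a window of 16 segments, the threshold becomes 8 and the window 11; one more duplicate ACK raises it to 12, and the first ACK for new data brings it back to 8.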