Application of load balancing technology in computing power network

Part 01, ECMP

ECMP (Equal-Cost Multi-Path) is a hop-by-hop, flow-based load balancing strategy. When a router finds multiple optimal paths to the same destination address, it installs multiple routing table entries for that destination, one per next hop. These paths can then forward data at the same time, which increases the available bandwidth. ECMP is supported by many routing protocols, such as OSPF, IS-IS, EIGRP, and BGP. The data center architecture VL2 also uses ECMP for load balancing [1].
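To make "flow-based" concrete, the following is a minimal sketch of how a router might map a flow to one of several equal-cost next hops. The `FiveTuple` type, the `pick_next_hop` function, the addresses, and the use of SHA-256 are all illustrative assumptions; real routers use vendor-specific hash functions and field selections.

```python
import hashlib
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    """Hypothetical flow key: the classic five-tuple."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int  # 6 = TCP, 17 = UDP


def pick_next_hop(flow: FiveTuple, next_hops: Sequence[str]) -> str:
    """Hash the five-tuple and map it onto one of the equal-cost next hops."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 4 bytes of the digest as an unsigned integer.
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


# Example values (assumed for illustration only).
next_hops = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
flow = FiveTuple("192.168.1.10", "172.16.0.5", 40000, 443, 6)
chosen = pick_next_hop(flow, next_hops)
```

Because the choice depends only on the flow key, every packet of the same flow takes the same path, which avoids reordering within a flow but ignores how loaded each path is.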

Simply put, ECMP is load balancing at the routing layer. Load balancing at the IP layer has several advantages:

(1) Deployment and configuration are simple. Load balancing can rely on features that many routing protocols already provide, without additional configuration.

(2) A variety of traffic scheduling methods are available, including hash-based, weighted, and round-robin (polling) scheduling.
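As an illustration of the weighted and round-robin scheduling mentioned above, here is a minimal sketch of weighted round-robin. The function name `weighted_round_robin` and the hop names are hypothetical, and this simple expansion into a repeating schedule is only one of several possible approaches, not the method any particular router uses.

```python
from itertools import cycle, islice
from typing import Dict, Iterator


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Yield next hops forever, each one repeated according to its weight."""
    schedule = [hop for hop, weight in weights.items() for _ in range(weight)]
    return cycle(schedule)


# "hop-a" should receive twice as many picks as "hop-b".
picks = list(islice(weighted_round_robin({"hop-a": 2, "hop-b": 1}), 6))
```

With equal weights this degenerates into plain round-robin.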

This simplicity also brings several pitfalls:

(1) It may aggravate link congestion. ECMP does not check whether a link is already congested before assigning traffic to it, so a congested link can become even more congested.

(2) In many cases the balancing effect is poor. ECMP cannot tell how busy or idle the networks behind each path are, and it balances poorly when flow sizes differ greatly.

Although this layer-3 method is easy to use and deploy, it cannot meet business-level requirements and cannot maintain sessions. The following parts therefore introduce several load balancing methods that work at layer 4 and above.
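The second pitfall can be illustrated with a small simulation. The sketch below assumes a hypothetical traffic mix of 100 small flows and one very large flow, hashed onto four links with CRC32; the flow names, sizes, and hash choice are assumptions for illustration.

```python
import zlib
from typing import Dict, List


def link_for(flow_id: str, n_links: int) -> int:
    """Pick a link for a flow by hashing its identifier."""
    return zlib.crc32(flow_id.encode()) % n_links


def load_per_link(flows: Dict[str, int], n_links: int) -> List[int]:
    """Sum the traffic volume that each link carries."""
    load = [0] * n_links
    for flow_id, size in flows.items():
        load[link_for(flow_id, n_links)] += size
    return load


# 100 small flows of size 1 plus one large flow of size 10,000.
flows = {f"small-{i}": 1 for i in range(100)}
flows["large"] = 10_000
load = load_per_link(flows, 4)
```

However the small flows are spread, the link that receives the large flow carries at least 10,000 of the 10,100 units, because flow-based hashing never splits a single flow across links.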

Part 02, LVS load

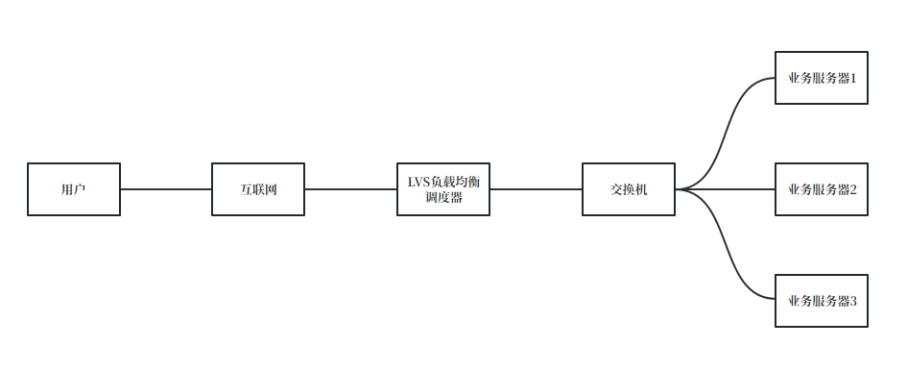

LVS (Linux Virtual Server) is an open source load balancing project led by Dr. Zhang Wensong, and it is now part of the Linux kernel. It implements IP-based load balancing and request scheduling inside the kernel. End users on the Internet access the company's externally facing load balancer, so their web requests reach the LVS scheduler, which uses a scheduling algorithm to decide which back-end web server receives each request. For example, the round-robin (polling) algorithm distributes external requests evenly across all back-end servers. Although requests sent to the LVS scheduler are forwarded to real back-end servers, if those real servers share the same storage and provide the same service, the end user receives the same content no matter which real server handles the request, and the cluster as a whole is transparent to the user. Finally, depending on the LVS working mode, the real server returns the requested data to the end user in different ways. LVS has three working modes: NAT, TUN, and DR [2].
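The scheduling step can be sketched in a few lines. The following is a simplified user-space model, not LVS kernel code: a round-robin scheduler that also remembers which real server each connection was assigned to, so later packets of the same connection reach the same server. The class name `RoundRobinScheduler`, the connection key layout, and the server names are assumptions.

```python
from itertools import cycle
from typing import Dict, Iterator, Sequence, Tuple

# Hypothetical connection key: (src_ip, src_port, dst_ip, dst_port, protocol).
ConnKey = Tuple[str, int, str, int, str]


class RoundRobinScheduler:
    def __init__(self, real_servers: Sequence[str]) -> None:
        self._next_server: Iterator[str] = cycle(real_servers)
        self._connections: Dict[ConnKey, str] = {}

    def schedule(self, conn: ConnKey) -> str:
        """Return the real server for a connection, assigning one if it is new."""
        server = self._connections.get(conn)
        if server is None:
            # New connection: take the next server in round-robin order.
            server = next(self._next_server)
            self._connections[conn] = server
        return server


scheduler = RoundRobinScheduler(["rs1", "rs2"])
conn_a: ConnKey = ("198.51.100.7", 50000, "203.0.113.1", 80, "tcp")
conn_b: ConnKey = ("198.51.100.8", 50001, "203.0.113.1", 80, "tcp")
first = scheduler.schedule(conn_a)
second = scheduler.schedule(conn_b)
again = scheduler.schedule(conn_a)
```

A real scheduler would also expire idle entries from the connection table; that is omitted here for brevity.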

Unlike ECMP, LVS is session-based layer-4 load balancing. It maintains sessions for different flows based on the five-tuples of upstream and downstream traffic. After many years of development, LVS has several advantages:

(1) High load capacity. LVS only distributes traffic at layer 4, so it does not consume much CPU or memory.

(2) Low configuration requirements. A simple configuration is enough for normal use.

(3) Robustness. LVS has a long development history and many deployments in the industry, and it is highly stable.

LVS also has shortcomings:

(1) Limited features. The same simplicity means that LVS lacks functions such as fault migration (failover) and recovery.

(2) NAT mode has limited performance. This problem is shared by many layer-4 load balancers, and the author returns to it later.

Part 03, NGINX load

In addition to being a high-performance HTTP server, NGINX can act as a reverse proxy in front of web servers, which makes it feasible to deploy NGINX as a load balancing server. NGINX is already widely used in the industry for load balancing, service clusters, primary/backup links, and similar roles.

Like LVS, NGINX balances load at layer 4 and above and can maintain sessions. Because NGINX works at layer 7, it depends less on the network than LVS does.

Compared with LVS load balancing, NGINX has the following advantages:

(1) Little dependence on the network. As long as the back-end servers are reachable, NGINX can balance load, whereas some LVS modes require a specific network environment.

(2) Easy to install and quick to configure and deploy.

(3) NGINX can detect failures of back-end servers. For example, if a failure occurs while a file is being uploaded, NGINX automatically switches the upload to another back-end server, which LVS cannot do.
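The failover behavior in point (3) can be modeled as "try the next back end when one fails". The sketch below is a simplified illustration and not NGINX's actual implementation; `proxy_with_failover`, `fake_send`, and the back-end names are hypothetical.

```python
from typing import Callable, Optional, Sequence


def proxy_with_failover(
    request: str,
    backends: Sequence[str],
    send: Callable[[str, str], str],
) -> str:
    """Try each back end in order and return the first successful response."""
    last_error: Optional[Exception] = None
    for backend in backends:
        try:
            return send(backend, request)
        except ConnectionError as exc:
            # This back end failed; remember the error and try the next one.
            last_error = exc
    raise RuntimeError("all back ends failed") from last_error


def fake_send(backend: str, request: str) -> str:
    """Stand-in transport for the example: 'backend-1' is always down."""
    if backend == "backend-1":
        raise ConnectionError(f"{backend} is unreachable")
    return f"{backend} handled {request}"


response = proxy_with_failover("upload.bin", ["backend-1", "backend-2"], fake_send)
```

Retrying an upload on another server is only safe when the request can be replayed in full, which is one reason this kind of failover belongs at layer 7 rather than layer 4.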

NGINX also has some disadvantages:

(1) It lacks a dual-machine hot standby solution, and in most cases a single-machine deployment carries some risk.

(2) Its high degree of configurability indirectly makes it more costly and more difficult to maintain than LVS.

Part 04, Thinking and Exploration

Each of the common load balancing technologies above has its own strengths. In practice, however, the author found that they struggle to provide high-performance cross-network load balancing, that is, load balancing across metropolitan area networks in FULL-NAT mode. Put simply, in experiments with multi-node cloud deployments, these solutions performed poorly.

After reviewing the relevant material, the author found that Cisco's open source VPP project provides a high-performance load balancer. VPP sends and receives packets through DPDK, and with secondary development its high-performance packet processing can deliver high-performance cross-network load balancing. Some results have already been achieved. The next article will introduce and discuss this high-performance layer-4 cross-network load balancing technology.

Going forward, the Smart Home Operation Center will continue its research on implementing high-performance cross-network load balancers, and it welcomes more developers and architects to contribute to feature development and scenario exploration in this area.