Netflix zero-configuration service mesh and on-demand cluster discovery

This article is translated from the Netflix blog Zero Configuration Service Mesh with On-Demand Cluster Discovery[1] written by David Vroom, James Mulcahy, Ling Yuan, and Rob Gulewich.

Most readers will be familiar with Netflix: the Spring Cloud ecosystem ships a full suite of Netflix components. Years ago I migrated a microservice architecture built on Netflix OSS to Kubernetes and kept working on it for several years afterwards.

Spring Cloud Netflix has a huge user base and a wide range of use cases. It offers a complete set of components for microservice governance: Eureka for service discovery, Ribbon for client-side load balancing, Hystrix for circuit breaking, and Zuul as the microservice gateway.

Anyone with similar experience will likely agree that, after years of evolution, such a microservice architecture becomes painful: complexity keeps growing, versions become badly fragmented, and features cannot be kept consistent across multiple languages and frameworks. Netflix had to face the same problems, so they turned their attention to the service mesh and looked for a seamless migration path.

In this article they give their answer: working with the community, Netflix built its own control plane that is compatible with its existing service discovery system. Implementing something like this is probably not easy, and I have seen no sign that they plan to open source it, but it at least offers a useful reference.

By the way, here is a preview of an article I am planning on how to smoothly migrate microservices to the Flomesh service mesh platform. It is seamlessly compatible not only with Eureka but also with HashiCorp Consul, and it will support more service discovery solutions in the future.

The following is a translation of the original text:

In this article, we discuss Netflix's adoption of service mesh: the historical background, our motivation, and how we worked with Kinvolk and the Envoy community on a feature that eases service mesh adoption in complex microservice environments: on-demand cluster discovery.

A brief history of Netflix’s IPC

Netflix was an early adopter of cloud computing, especially for a company of its size: we began the migration in 2008, and by 2010 Netflix's streaming ran entirely on AWS[2]. Today we have a rich set of tools, both open source and commercial, all designed for cloud-native environments. In 2010, however, almost none of them existed: the CNCF[3] was not founded until 2015! Since there were no ready-made solutions, we had to build them ourselves.

For inter-process communication (IPC) between services, we needed the rich feature set that a mid-tier load balancer typically provides. We also needed a solution that addressed the reality of working in the cloud: a highly dynamic environment where nodes constantly come online and go offline, and services need to react quickly to changes and isolate failures. To improve availability, we designed systems whose components could fail separately, avoiding single points of failure. These design principles led us to client-side load balancing, and the Christmas Eve 2012 outage[4] solidified this decision even further. In these early days of the cloud, we built Eureka[5] for service discovery and Ribbon (internally named NIWS) for IPC[6]. Eureka solved the problem of how services discover which instances to talk to, and Ribbon provided the load-balancing client, as well as many other resiliency features. These two technologies, alongside a range of other resiliency and chaos tools, made a huge difference: our reliability improved significantly as a result.

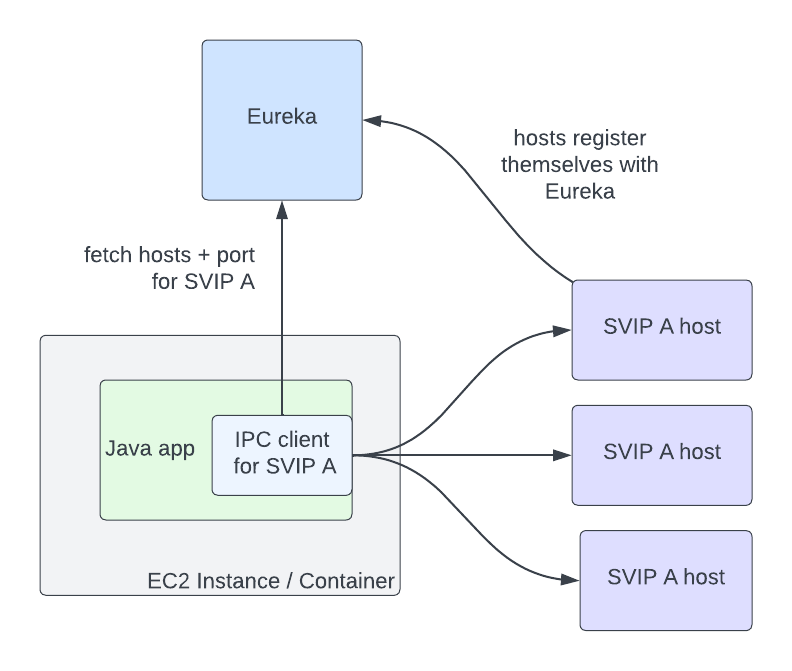

Eureka and Ribbon present a simple but powerful interface, which makes them easy to adopt. For a service to talk to another service, it needs to know two things: the name of the destination service, and whether the traffic should be secure. The abstractions Eureka provides for this are Virtual IPs (VIPs) for insecure communication and Secure VIPs (SVIPs) for secure communication. A service advertises to Eureka a VIP name and port (for example: _myservice_, port _8080_), an SVIP name and port (for example: _myservice-secure_, port _8443_), or both. IPC clients are instantiated against that VIP or SVIP, and the Eureka client code translates the VIP into a set of IP and port pairs by fetching them from the Eureka server. Clients can also opt in to IPC features such as retries or circuit breaking, or stick with a set of reasonable defaults.
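As a rough illustration of the VIP abstraction described above, the sketch below models a registry that maps VIP names to host and port pairs, plus a client that resolves a name once and then rotates through the instances. The `Registry`, `Instance`, and `RoundRobinClient` names, the addresses, and the round-robin policy are all assumptions made for this example; this is not the Eureka or Ribbon API.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass(frozen=True)
class Instance:
    host: str
    port: int


@dataclass
class Registry:
    """Hypothetical stand-in for a discovery server: VIP name -> instances."""
    vips: dict = field(default_factory=dict)

    def register(self, vip: str, instance: Instance) -> None:
        self.vips.setdefault(vip, []).append(instance)

    def resolve(self, vip: str) -> list:
        # Return a copy so callers cannot mutate the registry state.
        return list(self.vips.get(vip, []))


class RoundRobinClient:
    """Illustrative client-side load balancer bound to one VIP or SVIP."""

    def __init__(self, registry: Registry, vip: str) -> None:
        instances = registry.resolve(vip)
        if not instances:
            raise LookupError(f"no instances registered for {vip}")
        self._rotation = cycle(instances)

    def next_instance(self) -> Instance:
        return next(self._rotation)


registry = Registry()
registry.register("myservice", Instance("10.0.0.1", 8080))
registry.register("myservice", Instance("10.0.0.2", 8080))
registry.register("myservice-secure", Instance("10.0.0.1", 8443))

client = RoundRobinClient(registry, "myservice")
picked = [client.next_instance() for _ in range(3)]
```

The caller only ever names the VIP or SVIP; which hosts sit behind it is entirely the registry's concern.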

In this architecture, service-to-service communication no longer goes through a load balancer as a single point of failure. The downside is that Eureka is now a new single point of failure, as the source of truth for which hosts are registered for each VIP. However, if Eureka goes down, services can continue to communicate with each other, although their host information gradually becomes stale as instances behind a VIP come and go. Running in a degraded but available state during an outage is still a marked improvement over stopping traffic completely.
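The degraded-but-available behaviour can be sketched as a resolver that remembers the last host list it fetched successfully and falls back to it when discovery is unreachable. `CachingResolver`, `RegistryUnavailable`, and the `fetch` callable are hypothetical names for this illustration, not part of the Eureka client.

```python
class RegistryUnavailable(Exception):
    """Raised by the hypothetical fetch function when discovery is down."""


class CachingResolver:
    def __init__(self, fetch):
        self._fetch = fetch      # callable: vip -> list of (host, port)
        self._last_known = {}    # vip -> last successfully fetched hosts

    def resolve(self, vip):
        try:
            hosts = self._fetch(vip)
        except RegistryUnavailable:
            # Degraded mode: serve possibly stale data rather than fail.
            if vip in self._last_known:
                return self._last_known[vip]
            raise
        self._last_known[vip] = hosts
        return hosts


state = {"up": True}


def fetch(vip):
    if not state["up"]:
        raise RegistryUnavailable(vip)
    return [("10.0.0.1", 8080), ("10.0.0.2", 8080)]


resolver = CachingResolver(fetch)
fresh = resolver.resolve("myservice")   # registry reachable
state["up"] = False
stale = resolver.resolve("myservice")   # registry down, cached hosts reused
```

A VIP that was never resolved before the outage still fails, which matches the trade-off described above: existing traffic keeps flowing, while the host information ages.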

Why a service mesh?

The architecture above has served us well over the last decade, but changing business needs and evolving industry standards have added complexity to our IPC ecosystem in several ways. First, we have grown the number of different IPC clients. Our internal IPC traffic is now a mix of plain REST, GraphQL[7], and gRPC[8]. Second, we have moved from a Java-only environment to a polyglot one: we now also support node.js[9], Python[10], and a variety of open source and off-the-shelf software. Third, we have continued to add functionality to our IPC clients: features such as adaptive concurrency limiting[11], circuit breaking[12], hedging, and fault injection have become standard tools that our engineers use to make systems more reliable. Compared to a decade ago, we now support more features, in more languages, in more clients. Keeping feature parity between all of these implementations and ensuring that they all behave the same way is challenging: what we want is a single, well-tested implementation of all of this functionality, so we can make changes and fix bugs in one place.

This is where a service mesh comes in: we can centralize IPC features in a single implementation and keep the per-language clients as simple as possible, since they only need to know how to talk to the local proxy. Envoy[13] is a great fit for us as the proxy: it is a battle-tested open source product already used at high scale in the industry, with many key resiliency features[14] and good extension points[15] for when we need to extend its functionality. The ability to configure proxies via a central control plane[16] is a killer feature: it lets us dynamically configure client-side load balancing as if it were a central load balancer, while still avoiding a load balancer as a single point of failure in the service-to-service request path.

Moving to a service mesh

Once we had decided that moving to a service mesh was the right bet, the next question was: how should we go about moving? We settled on a number of constraints for the migration. First, we wanted to keep the existing interface. The abstraction of specifying a VIP name plus whether traffic is secure has served us well, and we did not want to break backwards compatibility. Second, we wanted to automate the migration and make it as seamless as possible. These two constraints meant that we needed to support the Discovery abstractions in Envoy, so that IPC clients could continue to use them under the hood. Fortunately, Envoy already had ready-made abstractions[27] for this. VIPs can be represented as Envoy clusters, and proxies can fetch them from our control plane using the Cluster Discovery Service (CDS). The hosts in those clusters are represented as Envoy endpoints and can be fetched using the Endpoint Discovery Service (EDS).
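To make the mapping concrete, here is a minimal sketch of what a VIP might look like once expressed as Envoy resources. It builds plain dictionaries shaped like a v3 `Cluster` (served over CDS) and a v3 `ClusterLoadAssignment` (served over EDS). The helper names, the addresses, and the choice of ADS as the EDS config source are assumptions for illustration; the article does not show Netflix's actual resources.

```python
def cluster_for_vip(cluster_name: str) -> dict:
    """Build a CDS cluster whose endpoints are resolved through EDS."""
    return {
        "name": cluster_name,
        "type": "EDS",
        "eds_cluster_config": {
            # Assumption: endpoints arrive over the same aggregated stream.
            "eds_config": {"ads": {}, "resource_api_version": "V3"},
        },
    }


def endpoints_for_vip(cluster_name: str, hosts: list) -> dict:
    """Build an EDS ClusterLoadAssignment from (ip, port) pairs."""
    return {
        "cluster_name": cluster_name,
        "endpoints": [{
            "lb_endpoints": [
                {"endpoint": {"address": {"socket_address": {
                    "address": ip, "port_value": port}}}}
                for ip, port in hosts
            ],
        }],
    }


cluster = cluster_for_vip("myservice.vip")
assignment = endpoints_for_vip(
    "myservice.vip", [("10.0.0.1", 8080), ("10.0.0.2", 8080)]
)
```

The cluster resource carries only the name and how to find its hosts, while the host list lives in a separate resource that can change far more often.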

We quickly hit a roadblock to a seamless migration: Envoy requires that clusters be specified as part of the proxy's configuration. If service A needs to talk to clusters B and C, then clusters B and C must be defined in A's proxy configuration. This can be challenging at scale: any given service might communicate with dozens of clusters, and that set of clusters is different for every application. Furthermore, Netflix is always changing: we are constantly launching new projects such as live streaming, advertising[28], and games, and we keep evolving our architecture. This means that the clusters a service communicates with change over time. Given the Envoy primitives available to us, we evaluated several approaches to populating the cluster configuration:

- Have service owners define the clusters their service needs to talk to. This option seems simple, but in practice service owners do not always know, or want to know, which services they talk to. Services often import libraries provided by other teams that talk to several other services under the hood, or that talk to operational services such as telemetry and logging. Service owners would need to understand how these auxiliary services and libraries are implemented and adjust the configuration whenever they change.

- Auto-generate the Envoy configuration based on a service's call graph. This approach is simple for pre-existing services, but it is challenging when bringing up a new service or adding a new upstream cluster to communicate with.

- Push all clusters to every application. This option was appealing in its simplicity, but back-of-the-napkin math quickly showed us that pushing millions of endpoints to each proxy was not feasible.
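The napkin math behind rejecting the last option can be sketched as follows. Every number here is a placeholder chosen to match the orders of magnitude mentioned in the text ("millions of endpoints", "dozens of clusters"); none of them is a real Netflix figure, and the average cluster size in particular is pure assumption.

```python
def endpoints_if_pushing_everything(total_endpoints: int) -> int:
    """Each proxy holds every endpoint in the fleet."""
    return total_endpoints


def endpoints_if_scoped(clusters_used: int, avg_endpoints_per_cluster: int) -> int:
    """Each proxy holds only the endpoints of clusters it talks to."""
    return clusters_used * avg_endpoints_per_cluster


# Illustrative placeholders, not measured values.
TOTAL_ENDPOINTS = 1_000_000
CLUSTERS_USED = 24
AVG_ENDPOINTS_PER_CLUSTER = 50

push_all = endpoints_if_pushing_everything(TOTAL_ENDPOINTS)
scoped = endpoints_if_scoped(CLUSTERS_USED, AVG_ENDPOINTS_PER_CLUSTER)
ratio = push_all / scoped
```

Under these assumed inputs a proxy that receives everything tracks hundreds of times more endpoints than one scoped to its own upstreams, and every endpoint change anywhere in the fleet would have to be delivered to every proxy.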

Given our goal of seamless adoption, each of these options had significant enough drawbacks that we explored another one: what if we could fetch cluster information on demand at runtime, rather than defining it up front? At the time, the service mesh effort was still in its infancy, with only a handful of engineers working on it. We approached Kinvolk[29] to see whether they could work with us and the Envoy community to implement this feature. The result of this collaboration is On-Demand Cluster Discovery[30] (ODCDS). With this feature, a proxy can look up cluster information the first time a connection to that cluster is attempted, instead of having every cluster predefined in its configuration.

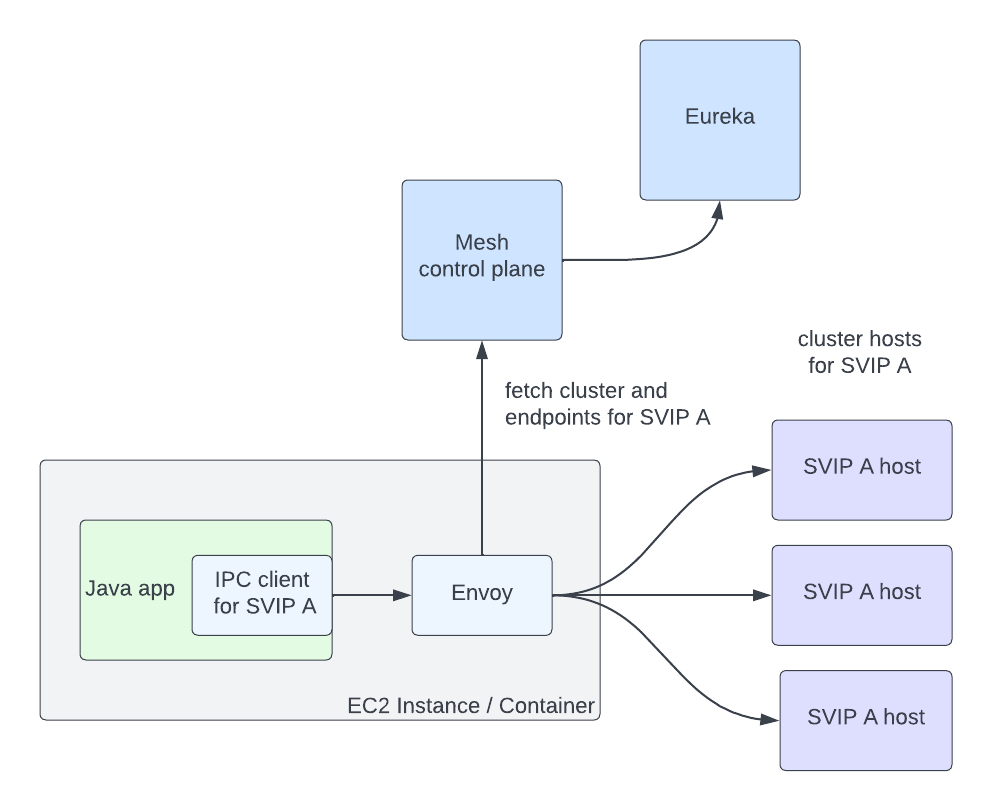

With this capability in place, we needed to give the proxies cluster information to look up. We developed a service mesh control plane that implements the Envoy xDS services. We then needed to fetch service information from Eureka in order to return it to the proxies. We represent Eureka VIPs and SVIPs as separate Envoy Cluster Discovery Service (CDS) clusters (so the service myservice may have a cluster myservice.vip

1. A client request comes into Envoy.

2. Extract the target cluster based on the Host / :authority header (the header used here is configurable, but this is our approach). If that cluster is already known, jump to step 7.

3. The cluster does not exist, so we pause the in-flight request.

4. Make a request to the Cluster Discovery Service (CDS) endpoint on the control plane. The control plane generates a customized CDS response based on the service's configuration and Eureka registration information.

5. Envoy gets the cluster back (CDS), which triggers a pull of its endpoints via the Endpoint Discovery Service (EDS). The endpoints for the cluster are returned based on the Eureka status information for that VIP or SVIP.

6. The client request is unpaused.

7. Envoy handles the request as normal: it picks an endpoint using a load-balancing algorithm and issues the request.
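The flow above can be approximated with a small in-memory simulation. This is only a sketch of the control flow: `ControlPlane` and `Proxy` are hypothetical classes, the lookup is synchronous (a real proxy pauses the request and resumes it asynchronously), CDS and EDS are collapsed into one call, and round robin stands in for whatever load-balancing policy is configured.

```python
class ControlPlane:
    """Hypothetical control plane backed by a static cluster -> hosts table."""

    def __init__(self, registrations):
        self._registrations = registrations
        self.lookups = 0

    def fetch_cluster(self, name):
        # Steps 4-5: answer the on-demand lookup with the cluster's endpoints.
        self.lookups += 1
        endpoints = list(self._registrations.get(name, []))
        if not endpoints:
            raise LookupError(f"unknown or empty cluster: {name}")
        return endpoints


class Proxy:
    def __init__(self, control_plane):
        self._control_plane = control_plane
        self._clusters = {}   # cluster name -> endpoints
        self._cursor = {}     # cluster name -> round-robin position

    def handle(self, headers):
        cluster = headers["host"]                  # step 2: pick the cluster
        if cluster not in self._clusters:          # step 3: request waits here
            self._clusters[cluster] = self._control_plane.fetch_cluster(cluster)
            self._cursor[cluster] = 0
        endpoints = self._clusters[cluster]        # steps 6-7: resume and route
        index = self._cursor[cluster] % len(endpoints)
        self._cursor[cluster] = index + 1
        return endpoints[index]


control_plane = ControlPlane(
    {"myservice.vip": [("10.0.0.1", 8080), ("10.0.0.2", 8080)]}
)
proxy = Proxy(control_plane)
first = proxy.handle({"host": "myservice.vip"})
second = proxy.handle({"host": "myservice.vip"})
```

Only the first request for a cluster reaches the control plane; later requests are served from the proxy's own state.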

This process completes in a few milliseconds, but only on the first request to a cluster. Afterwards, Envoy behaves as if the cluster had been defined in its configuration. Crucially, this system lets us migrate services to the service mesh seamlessly with no configuration required, satisfying one of our main adoption constraints. The abstraction we present continues to be a VIP name plus whether traffic is secure, and we can migrate to the mesh by configuring individual IPC clients to connect to the local proxy instead of connecting to the upstream application directly. We continue to use Eureka as the source of truth for VIPs and instance status, which lets us support a heterogeneous environment during the migration, with some applications on the mesh and others not. There is an added benefit: we keep Envoy's memory usage low by fetching data only for the clusters we actually communicate with.
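From the client's side, the change amounts to addressing the local proxy and naming the destination in the Host header. The sketch below only builds such a request with the Python standard library and does not send it. The helper name, the proxy port `15001`, and the path are assumptions for illustration; the article does not say which port or client library Netflix uses.

```python
from urllib.request import Request


def request_via_local_proxy(cluster_name: str, path: str, proxy_port: int) -> Request:
    """Build (but do not send) a request addressed to the local proxy.

    The destination cluster travels in the Host header, which the proxy
    is assumed to use for picking the upstream cluster.
    """
    url = f"http://127.0.0.1:{proxy_port}{path}"
    return Request(url, headers={"Host": cluster_name})


req = request_via_local_proxy("myservice.vip", "/healthcheck", proxy_port=15001)
```

The application code never handles upstream addresses; it only needs the cluster name it already used under the VIP abstraction.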

Figure: an IPC client in a Java application communicating through Envoy with the hosts registered as SVIP A. Envoy fetches the cluster and endpoint information for SVIP A from the mesh control plane, which in turn fetches host information from Eureka.

The downside of fetching this data on demand is that it adds latency to the first request to a cluster. We have run into use cases where a service needs very low-latency access on its first request, and a few extra milliseconds is too much overhead. For these use cases, services need to either predefine the clusters they communicate with or prime their connections before the first request. We have also considered pre-pushing clusters from the control plane at proxy startup, based on historical request patterns. Overall, we feel that the reduced complexity in the system justifies the downside for a small set of services.

We are still early in our service mesh journey. Now that we are using it in earnest, we hope to work with the community on many more Envoy improvements. Porting our adaptive concurrency limiting[31] implementation to Envoy was a great start, and we look forward to collaborating with the community on more. We are particularly interested in the community's work on incremental EDS. EDS endpoints account for the largest share of update volume, which puts undue pressure on both the control plane and Envoy.

We would like to thank the people at Kinvolk for their contributions to Envoy: Alban Crequy, Andrew Randall, Danielle Tal, and especially Krzesimir Nowak for their excellent work. We would also like to thank the Envoy community for their support and sharp reviews: Adi Peleg, Dmitri Dolguikh, Harvey Tuch, Matt Klein, and Mark Roth. It has been a great experience working with all of you.

This is the first in a series of articles on our journey to a service mesh, so stay tuned.

References

[1] Zero Configuration Service Mesh with On-Demand Cluster Discovery: https://netflixtechblog.com/zero-configuration-service-mesh-with-on-demand-cluster-discovery-ac6483b52a51

[2] Netflix’s streaming runs entirely on AWS: https://netflixtechblog.com/four-reasons-we-choose-amazons-cloud-as-our-computing-platform-4aceb692afec

[3] CNCF: https://www.cncf.io/

[4] Christmas 2012 outage: https://netflixtechblog.com/a-closer-look-at-the-christmas-eve-outage-d7b409a529ee

[5] We built Eureka: https://netflixtechblog.com/netflix-shares-cloud-load-balancing-and-failover-tool-eureka-c10647ef95e5

[6] Ribbon (internally named NIWS) for IPC: https://netflixtechblog.com/announcing-ribbon-tying-the-netflix-mid-tier-services-together-a89346910a62

[7] GraphQL: https://netflixtechblog.com/how-netflix-scales-its-api-with-graphql-federation-part-1-ae3557c187e2

[8] gRPC: https://netflixtechblog.com/practical-api-design-at-netflix-part-1-using-protobuf-fieldmask-35cfdc606518

[9] node.js: https://netflixtechblog.com/debugging-node-js-in-production-75901bb10f2d

[10] Python: https://netflixtechblog.com/python-at-netflix-bba45dae649e

[11] Adaptive concurrency limit: https://netflixtechblog.medium.com/performance-under-load-3e6fa9a60581

[12] Circuit breaker: https://netflixtechblog.com/making-the-netflix-api-more-resilient-a8ec62159c2d

[13] Envoy: https://www.envoyproxy.io/

[14] Many key resiliency features: https://github.com/envoyproxy/envoy/issues/7789

[15] Good extension points: https://www.envoyproxy.io/docs/envoy/latest/configuration/listeners/network_filters/wasm_filter.html

[16] Configure the proxy through a centralized control plane: https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/operations/dynamic_configuration

[17] GraphQL: https://netflixtechblog.com/how-netflix-scales-its-api-with-graphql-federation-part-1-ae3557c187e2

[18] gRPC: https://netflixtechblog.com/practical-api-design-at-netflix-part-1-using-protobuf-fieldmask-35cfdc606518

[19] node.js: https://netflixtechblog.com/debugging-node-js-in-production-75901bb10f2d

[20] Python: https://netflixtechblog.com/python-at-netflix-bba45dae649e

[21] Adaptive concurrency limit: https://netflixtechblog.medium.com/performance-under-load-3e6fa9a60581

[22] Circuit breaking: https://netflixtechblog.com/making-the-netflix-api-more-resilient-a8ec62159c2d

[23] Envoy: https://www.envoyproxy.io/

[24] Many key resiliency features: https://github.com/envoyproxy/envoy/issues/7789

[25] Good extension points: https://www.envoyproxy.io/docs/envoy/latest/configuration/listeners/network_filters/wasm_filter.html

[26] The ability of the central control plane to configure the proxy: https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/operations/dynamic_configuration

[27] Ready-made abstractions: https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/intro/terminology

[28] Advertising: https://netflixtechblog.com/ensuring-the-successful-launch-of-ads-on-netflix-f99490fdf1ba

[29] Kinvolk: https://kinvolk.io/

[30] On-demand cluster discovery: https://github.com/envoyproxy/envoy/pull/18723

[31] Adaptive concurrency limit: https://github.com/envoyproxy/envoy/issues/7789