Performance improvements of HTTP/2 compared to HTTP/1.1

- What has changed since HTTP/1.1 was invented?

- Performance flaws of the HTTP/1.1 protocol

- New features of HTTP/2

- Problems with HTTP/2

What has changed since HTTP/1.1 was invented?

If you look at the resources downloaded by the homepages of the most popular websites in recent years, a clear trend emerges:

- Messages get bigger: from a few KB to several MB;

- More page resources: from fewer than 10 resources per page to more than 100;

- More varied content: from simple text to pictures, video, audio, and other media;

- Higher real-time requirements: more and more applications need pages that update in real time.

HTTP/1.1 was released in 1997 and has been in use ever since, but the explosive growth of content in recent years has made it less and less adequate for the needs of the modern web.

Performance flaws of the HTTP/1.1 protocol

- High latency: pages load more slowly;

- Stateless: large headers are sent repeatedly with every request;

- Head-of-line blocking: a connection can only start the next HTTP transaction (request and response) after the current one has completed;

- Plaintext transmission: insecure;

- No server push: the server cannot push messages, so a client that needs notifications has to poll on a timer, which wastes bandwidth and server resources.

New features of HTTP/2

Compatible with HTTP/1.1

The purpose of HTTP/2 is to improve the performance of HTTP. An important part of any protocol upgrade is compatibility with the old version; without it, the new protocol is hard to roll out. How does HTTP/2 achieve this?

HTTP/2 does not introduce a new scheme name in the URI. It still uses "http://" for the plaintext protocol and "https://" for the encrypted protocol, so the browser and the server can upgrade the protocol automatically behind the scenes. Users are not aware of the change, and the upgrade is smooth.

HTTP/2 still runs over TCP. To stay functionally compatible, its semantics are the same as in HTTP/1.1: request methods, status codes, header fields, and the related rules remain unchanged.

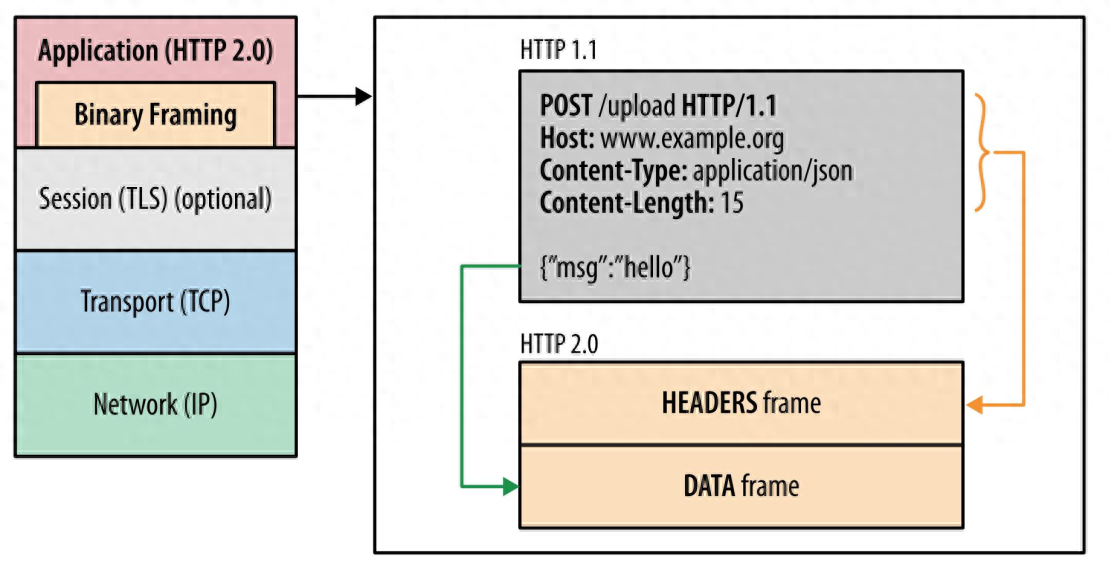

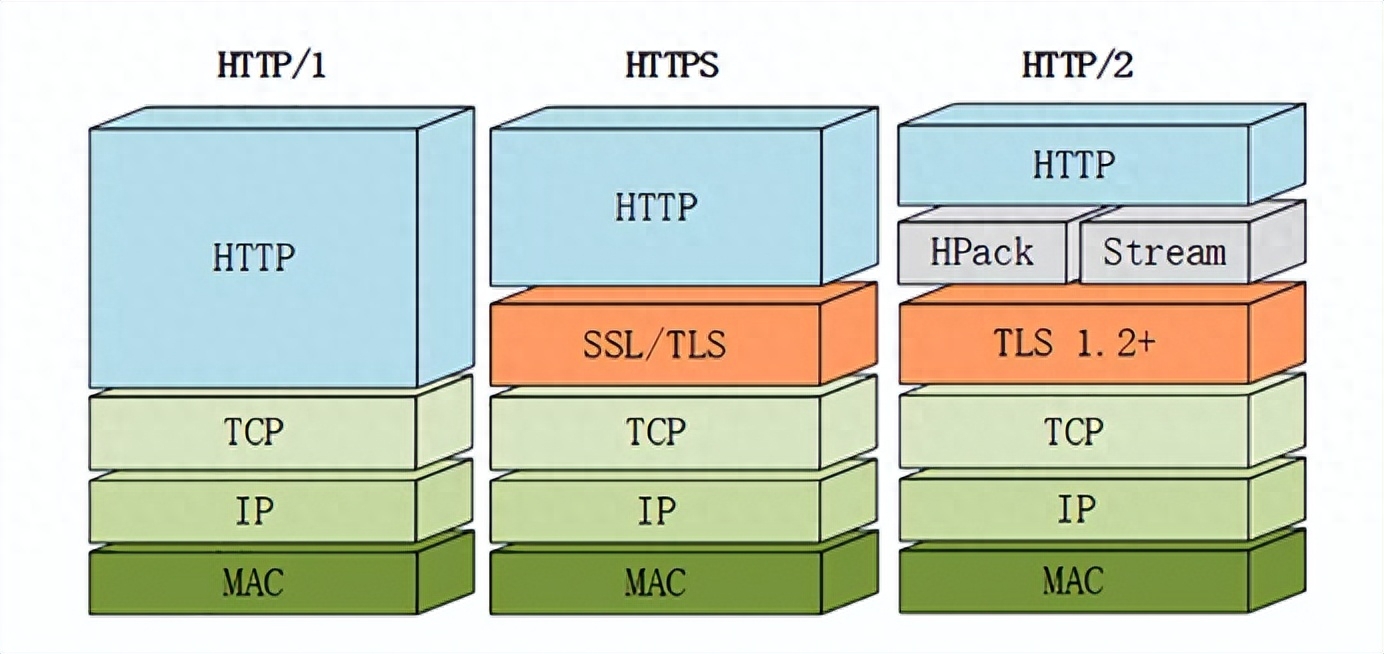
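For "https://" URLs, the behind-the-scenes upgrade is commonly negotiated during the TLS handshake with ALPN (a detail the text above does not name). The sketch below uses Python's standard `ssl` module; the helper names `make_h2_context` and `negotiated_protocol` are hypothetical, and the second one needs network access to a real server.

```python
import socket
import ssl
from typing import Optional


def make_h2_context() -> ssl.SSLContext:
    """Build a TLS client context that offers HTTP/2 first, then HTTP/1.1."""
    ctx = ssl.create_default_context()
    # The client lists the protocols it can speak; the server picks one.
    # The URL scheme stays "https://" whichever protocol is chosen.
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host: str, port: int = 443) -> Optional[str]:
    """Connect to host and return the protocol the server selected, if any."""
    with socket.create_connection((host, port), timeout=5) as raw:
        with make_h2_context().wrap_socket(raw, server_hostname=host) as tls:
            # "h2" means the server agreed to HTTP/2.
            return tls.selected_alpn_protocol()
```

A server that does not support HTTP/2 would select "http/1.1" (or nothing), and the client would fall back without the user noticing.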

Binary transfer

HTTP/2 no longer uses plain-text messages as HTTP/1.1 does; it adopts a binary format throughout. Both the header information and the body are binary, and both are carried in units called frames: HEADERS frames and DATA frames.

This is friendly to machines: the receiver does not have to scan text for delimiters but can read fixed-size binary fields directly, which makes parsing more efficient.

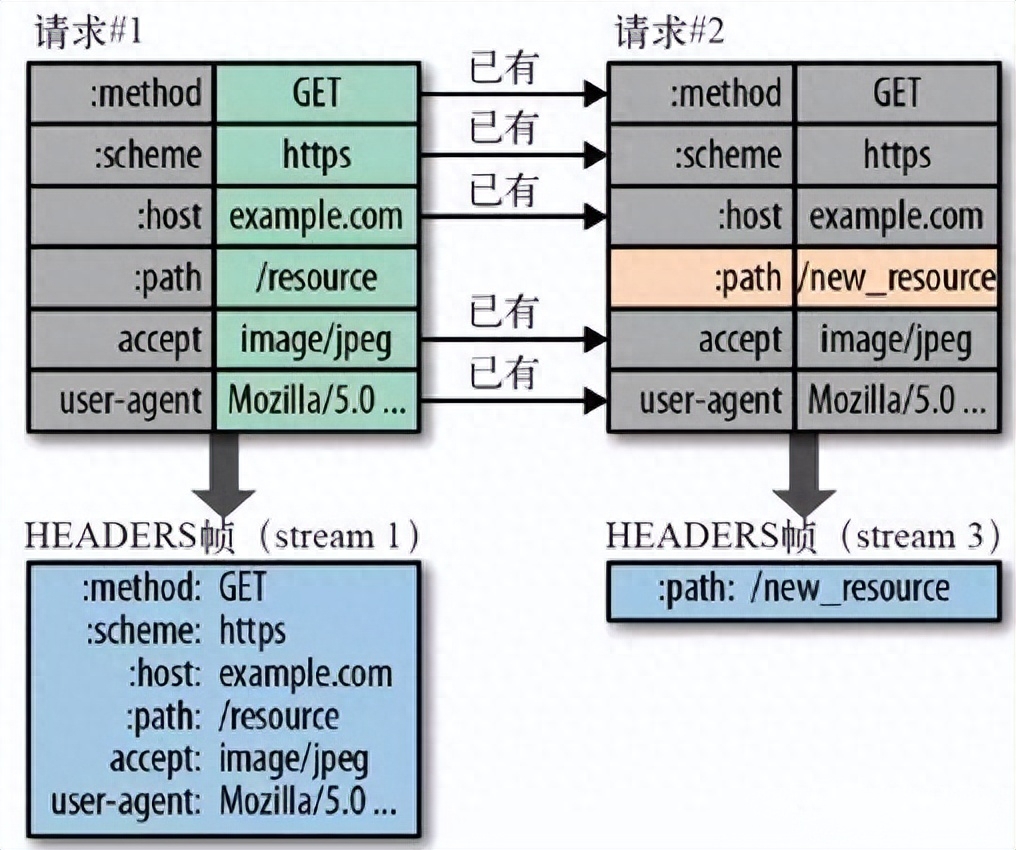
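To make the binary layout concrete, here is a minimal sketch that decodes the fixed 9-byte header that precedes every HTTP/2 frame (24-bit length, 8-bit type, 8-bit flags, one reserved bit, 31-bit stream ID). The names `FrameHeader` and `parse_frame_header` are illustrative, not part of any library.

```python
import struct
from typing import NamedTuple

# Frame type codes defined by the HTTP/2 specification.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


class FrameHeader(NamedTuple):
    length: int      # payload length in bytes
    type_name: str   # human-readable frame type
    flags: int       # type-specific flag bits
    stream_id: int   # stream this frame belongs to


def parse_frame_header(data: bytes) -> FrameHeader:
    if len(data) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    # The 24-bit length is read as one high byte plus a 16-bit low part.
    hi, lo, ftype, flags, sid = struct.unpack(">BHBBI", data[:9])
    length = (hi << 16) | lo
    # The top bit of the last field is reserved and must be ignored.
    stream_id = sid & 0x7FFFFFFF
    return FrameHeader(length, FRAME_TYPES.get(ftype, "UNKNOWN"), flags, stream_id)


# A DATA frame header: 5-byte payload, flags 0x1, stream 3.
header = parse_frame_header(bytes([0, 0, 5, 0x0, 0x1, 0, 0, 0, 3]))
```

No text scanning is involved: every field sits at a known offset, which is the efficiency gain the section describes.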

Header compression (HPACK)

HTTP is stateless, so every request must carry all of its information. Many header fields are therefore repeated: fields such as Cookie and User-Agent carry exactly the same content on every request, which wastes bandwidth and slows things down.

HTTP/2 optimizes this with header compression, using an algorithm called HPACK. HPACK does not run the headers through a general-purpose compressor such as gzip. Instead, it combines two techniques: header strings that must be sent literally can be Huffman-encoded, and fields that both sides already know are replaced by an index number.

HPACK algorithm: the client and the server each keep a table of header fields, made up of a static table of common fields defined by the specification and a dynamic table that is built up during the connection. When a field is sent for the first time it is added to the dynamic table and receives an index number. From then on, the same field is not sent again; only its index number is sent, which saves bandwidth and improves speed.

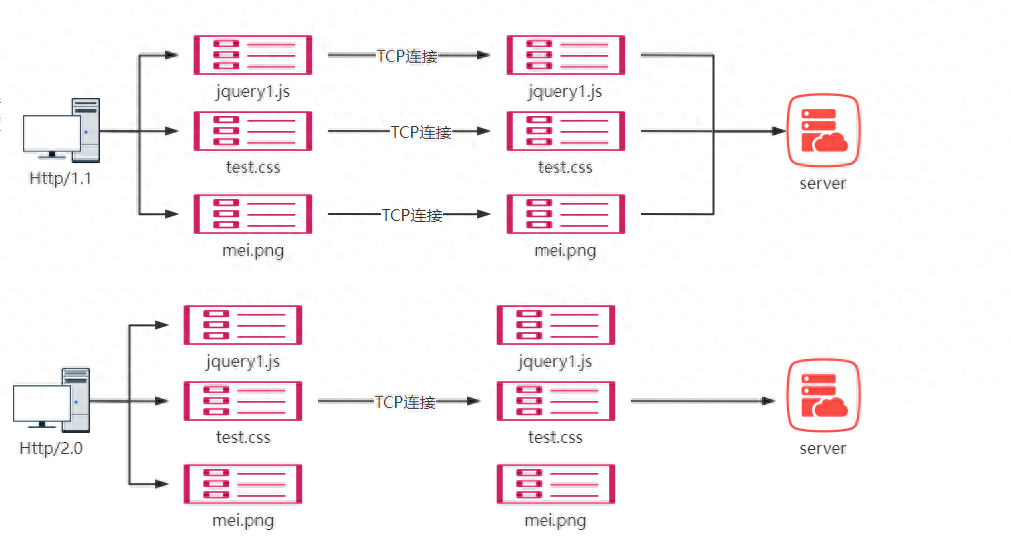
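The indexing idea can be sketched with a toy table. This is not the real HPACK wire format: it has no static table, no Huffman coding, and no size limit or eviction, and it appends new entries at the end whereas real HPACK inserts them at the front. The class name `ToyHeaderTable` is hypothetical.

```python
class ToyHeaderTable:
    """Simplified shared header table: repeated fields become small integers."""

    def __init__(self):
        # List of (name, value) pairs; a field's index is its position + 1.
        self.entries = []

    def encode(self, headers):
        out = []
        for field in headers:
            if field in self.entries:
                # Already known to both sides: send only the index.
                out.append(self.entries.index(field) + 1)
            else:
                # First occurrence: send the literal and remember it.
                self.entries.append(field)
                out.append(field)
        return out

    def decode(self, encoded):
        headers = []
        for item in encoded:
            if isinstance(item, int):
                # Index: look the field up in the local copy of the table.
                headers.append(self.entries[item - 1])
            else:
                # Literal: mirror the encoder by adding it to the table.
                self.entries.append(item)
                headers.append(item)
        return headers


sender, receiver = ToyHeaderTable(), ToyHeaderTable()
request = [("user-agent", "demo/1.0"), ("cookie", "id=42")]
first = sender.encode(request)    # literals on the first request
second = sender.encode(request)   # indexes only on the second request
```

Because both sides update their tables in the same order, the receiver can turn the index numbers of the second request back into the full fields.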

Multiplexing

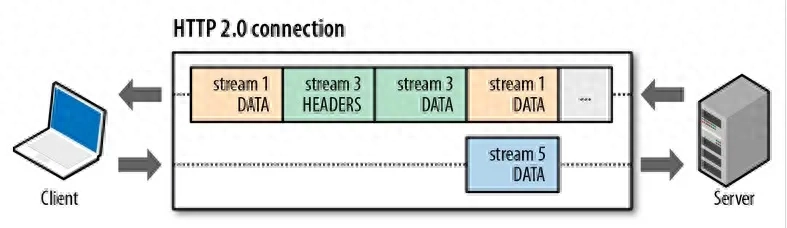

Multiplexing means that multiple streams can coexist on one TCP connection. In other words, multiple requests can be in flight at once, and the peer uses the stream identifier in each frame to tell which request the frame belongs to.

This feature greatly improves HTTP transmission performance, mainly in the following three respects:

Reuse TCP connections

HTTP/2 reuses TCP connections. On one connection, both the client and the server can send multiple requests or responses at the same time, without having to match them one-to-one in order, which avoids head-of-line blocking at the HTTP level.

Data streams

HTTP/2 sends multiple requests and responses in parallel and interleaved, and they do not affect each other.

HTTP/2 refers to all the frames of a single request or response as a stream. Each stream has a unique ID, and every frame is marked with the ID of the stream it belongs to. In addition, streams initiated by the client have odd IDs, and streams initiated by the server have even IDs.

While a stream is in progress, either the client or the server can send an RST_STREAM frame to cancel it. In HTTP/1.1, the only way to cancel a request in progress is to close the TCP connection. HTTP/2 can therefore cancel a single request while keeping the TCP connection open for other requests.
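The interleaving and reassembly described above can be sketched as follows. Frames are modelled as plain `(stream_id, chunk)` tuples rather than real HTTP/2 frames, and the function names are illustrative.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, size):
    """Cut one message into frames tagged with its stream ID."""
    return [(stream_id, payload[i:i + size]) for i in range(0, len(payload), size)]


def interleave(*frame_lists):
    """Put frames from several streams onto one connection, round-robin."""
    wire = []
    for group in zip_longest(*frame_lists):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(frames):
    """Rebuild each message on the receiving side using the stream IDs."""
    streams = defaultdict(bytes)
    for stream_id, chunk in frames:
        streams[stream_id] += chunk
    return dict(streams)


# Two client-initiated streams, so both IDs are odd.
html = split_into_frames(1, b"<html>hello</html>", 4)
css = split_into_frames(3, b"body{}", 4)
wire = interleave(html, css)
messages = reassemble(wire)
```

The frames of the two messages are mixed on the wire, yet the stream ID on each frame is enough for the receiver to recover both messages intact.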

Priority

HTTP/2 can also assign a different priority to each stream. The priority information is carried in a HEADERS frame (when its PRIORITY flag is set) or in a dedicated PRIORITY frame. For example, when a client requests HTML, CSS, and image resources, it wants the server to deliver the HTML and CSS first and the images afterwards. Setting stream priorities makes this possible and improves the user experience.
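A rough sketch of how a server might use such priorities is shown below. It is a deliberate simplification: it orders responses strictly by weight, whereas real servers treat weights as hints for sharing bandwidth and also consider stream dependencies. The function name, weights, and file names are made up for illustration.

```python
import heapq


def send_order(pending):
    """Return resource names ordered from highest to lowest weight.

    pending: list of (stream_id, weight, resource) tuples.
    """
    # heapq is a min-heap, so negate the weight to pop the largest first;
    # the stream ID breaks ties in favour of the older stream.
    heap = [(-weight, stream_id, resource) for stream_id, weight, resource in pending]
    heapq.heapify(heap)
    order = []
    while heap:
        _, _, resource = heapq.heappop(heap)
        order.append(resource)
    return order


pending = [
    (5, 32, "photo.jpg"),
    (1, 256, "index.html"),
    (3, 220, "style.css"),
]
order = send_order(pending)
```

With these assumed weights the HTML and CSS go out before the image, which matches the example in the paragraph above.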

Server Push

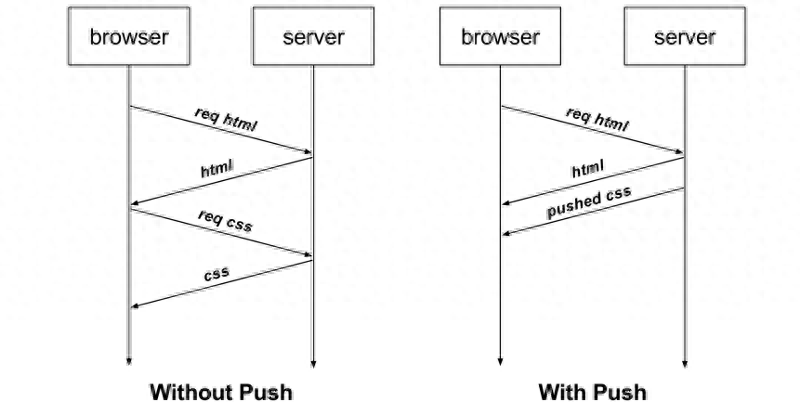

HTTP/1.1 does not let the server push resources on its own initiative. The client receives a resource only in response to a request it has sent.

For example, a client fetches an HTML file over HTTP/1.1, and the HTML depends on a CSS file to render the page. The client then has to send a second request for the CSS file, which costs two round trips, as shown in the left part of the figure above.

As shown in the right part of the figure, in HTTP/2 the server can proactively push the CSS file when the client requests the HTML, which reduces the number of round trips.

Improved security

For compatibility, HTTP/2 keeps the plaintext option of HTTP/1.x: it can still transmit data unencrypted and does not mandate encryption. However, HTTPS is now the norm, and the major browsers only support HTTP/2 over an encrypted connection, so in practice HTTP/2 is almost always encrypted.

Problems with HTTP/2

HTTP/2 still has head-of-line blocking

HTTP/2 uses the concurrency of streams to solve the head-of-line blocking of HTTP/1.1. That looks perfect, but HTTP/2 still suffers from head-of-line blocking. The problem is no longer at the HTTP level; it is at the TCP layer.

TCP delivers bytes to the application strictly in order, so when one packet is lost, everything behind it has to wait until it is retransmitted, whichever stream it belongs to. The effect can be worse than in HTTP/1.1, because HTTP/2 uses a single TCP connection and all streams wait together. In HTTP/1.1, a browser typically opens several TCP connections, so when one is blocked the others can still make progress.
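The effect of in-order delivery can be illustrated with a small model. It treats TCP segments as numbered units and ignores retransmission timing; the function name `deliverable` and the segment layout are assumptions made for the example.

```python
def deliverable(received_seqs, next_expected=0):
    """Return the segments TCP can hand to the application, in order.

    Delivery stops at the first gap, even if later segments have arrived.
    """
    received = set(received_seqs)
    delivered = []
    seq = next_expected
    while seq in received:
        delivered.append(seq)
        seq += 1
    return delivered


# Hypothetical layout: segment number -> HTTP/2 stream carried in it.
segments = {0: 1, 1: 3, 2: 1, 3: 5, 4: 3}

# Segment 1 (stream 3) is lost; segments 0, 2, 3 and 4 arrive.
arrived = [0, 2, 3, 4]
ready = deliverable(arrived)
stuck_streams = sorted({segments[s] for s in arrived if s not in ready})
```

Only one segment of stream 3 was lost, but data for streams 1 and 5 that has already arrived is held back as well, which is the TCP-level blocking the section describes.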