How AI and software are driving 5G data center transformation

Today, we are witnessing a phase of enormous innovation in computing, with rapid global deployments of 5G intertwined with breakthroughs in data-intensive workloads and generative artificial intelligence. Flexibility across the computing ecosystem has never been more important. It lets the industry explore new types of acceleration and novel system architectures, and find new ways to meet growing demand for data. Add rising semiconductor design costs and tightening global energy budgets, and it is clear that we are in the midst of a global transformation of computing infrastructure.

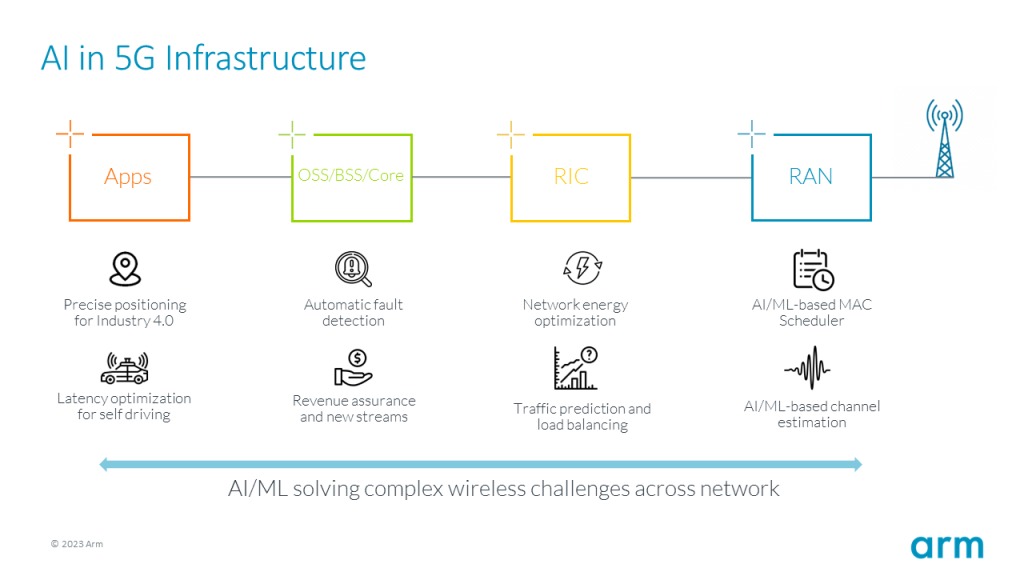

The combination of 5G and artificial intelligence holds great opportunities. 5G technology can provide the high-throughput, low-latency, and reliable connectivity that AI applications need to collect, transmit, and process large amounts of data. In return, AI can help 5G networks dynamically allocate resources, manage traffic and detect anomalies. Artificial intelligence can also use 5G to provide personalized and contextualized intelligent services to users and devices. Together, these two technologies will truly change the way we communicate, work and live.

But every story has two sides. 5G and artificial intelligence also pose significant challenges for data center operators and their customers. Both generate large amounts of data that must be stored, processed and analyzed in a flexible, timely, cost-effective and energy-efficient way. To take full advantage of this opportunity, data center architects need a transformative approach to the way computing is designed, built and deployed.

5G Virtual RAN: A Key Enabler for Edge Data Centers

One of the key innovations supporting 5G is the virtualized radio access network (vRAN), a relatively new deployment model that is gaining popularity. vRAN decouples cellular baseband processing from dedicated hardware and runs it as software on general-purpose servers.

This can provide greater flexibility, scalability and efficiency when deploying and managing 5G networks. Virtualized 5G infrastructure can achieve economies of scale in several ways. First, the same computing infrastructure can serve both 5G connectivity and edge applications, bringing connectivity and data processing closer to the source of the data. Second, software-driven, cloud-native infrastructure enables dynamic resource allocation, load balancing, faster provisioning and automated network management, all of which greatly improve operational efficiency. Third, with fiber-optic connections to the cell sites, vRAN can reduce the cost and complexity of 5G infrastructure by consolidating the baseband processing of multiple base stations into a centralized server pool.
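A small calculation illustrates the pooling argument in the third point. Cell sites rarely peak at the same time. A shared pool sized for the peak of the combined load therefore needs less capacity than separate hardware sized for each site's own peak. The sketch below uses made-up hourly load figures for three hypothetical sites. The site names, the "server unit" measure and all numbers are assumptions for illustration, not measurements.

```python
# Hypothetical hourly baseband load (in "server units") for three cell sites.
# The figures are illustrative only; real traffic profiles vary by site.
SITE_LOADS = {
    "residential": [2, 2, 3, 5, 8, 6],
    "business":    [7, 8, 6, 4, 2, 2],
    "transit_hub": [3, 6, 4, 6, 3, 2],
}


def dedicated_capacity(loads):
    """Each site gets its own hardware, provisioned for that site's peak."""
    return sum(max(profile) for profile in loads.values())


def pooled_capacity(loads):
    """A shared server pool only has to cover the peak of the combined load."""
    combined = [sum(hour) for hour in zip(*loads.values())]
    return max(combined)


if __name__ == "__main__":
    d = dedicated_capacity(SITE_LOADS)
    p = pooled_capacity(SITE_LOADS)
    print(f"dedicated: {d}, pooled: {p}, saved: {d - p}")
```

With these figures, dedicated hardware needs 22 units and the pool needs 16. The real saving depends on how correlated the sites' traffic is. Sites that all peak together gain little from pooling.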

By bringing computing power closer to end users and their devices, vRAN can facilitate edge computing while reducing latency and improving performance.

But to realize its full potential, vRAN requires computing platforms capable of supporting high-throughput, low-latency, and deterministic radio signal processing while maintaining very high computational efficiency. It also requires high-speed network interfaces to handle the high volumes of data traffic between servers, radios and end devices, further underscoring the need for a transformative approach.
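To see why high-speed interfaces matter, consider a rough upper bound on fronthaul traffic between a radio and a vRAN server when raw time-domain IQ samples travel uncompressed. The bitrate is antennas × sample rate × 2 (one I and one Q component per sample) × bits per component. The sketch assumes a 100 MHz 5G NR carrier sampled at 122.88 Msps, four antennas and 16-bit I and Q components. These parameters are illustrative assumptions, and the function name is hypothetical.

```python
def iq_fronthaul_bps(antennas, sample_rate_hz, bits_per_component):
    """Raw bitrate of uncompressed time-domain IQ samples.

    Each complex sample carries an I and a Q component, hence the factor 2.
    Line coding, control words and packet headers are ignored.
    """
    return antennas * sample_rate_hz * 2 * bits_per_component


if __name__ == "__main__":
    # Assumed parameters: 100 MHz NR carrier sampled at 122.88 Msps,
    # 4 antennas, 16-bit I and Q components.
    bps = iq_fronthaul_bps(antennas=4, sample_rate_hz=122.88e6, bits_per_component=16)
    print(f"{bps / 1e9:.2f} Gbit/s")
```

Under these assumptions the link would carry about 15.73 Gbit/s. Two techniques reduce this figure considerably: functional splits that move part of the physical layer into the radio, such as the O-RAN 7.2x split, and IQ compression. Even so, the order of magnitude shows why fronthaul links need multi-gigabit capacity.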

Today's 5G Data Centers: CPUs and DPUs

Traditional general-purpose CPUs struggle to handle the complex and diverse workloads of the 5G vRAN stack on their own. Virtualizing every network function in software, including the operating system, network layer, applications and protocols, has dramatically increased traffic inside the data center. Processing that traffic consumes CPU cycles that would otherwise go to applications, which reduces CPU efficiency. To meet this challenge, the CPU needs a complementary, dedicated acceleration device that offloads its networking tasks to improve performance and efficiency. One of the most popular acceleration devices in today's 5G data centers is the data processing unit (DPU).

AI in 5G infrastructure (Source: Arm)

A DPU is a network card with an embedded processor or programmable logic that can perform network functions such as packet processing, encryption/decryption, compression/decompression, load balancing, firewalling, routing/switching, and quality of service (QoS). By taking over these network-related tasks, a DPU can reduce the server's CPU utilization and power consumption. Because it is programmable, network functions can also be configured and optimized for different scenarios.
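Simple arithmetic gives an estimate of the CPU savings from offloading: cores consumed = packets per second × cycles per packet ÷ clock rate. The figures below are hypothetical and chosen only to show the shape of the calculation: 100 Gbit/s of traffic, 1,500-byte packets, 1,800 CPU cycles of work per packet and a 3 GHz core. The function name is likewise an illustrative assumption.

```python
def cores_for_packet_processing(throughput_bps, packet_bytes, cycles_per_packet, clock_hz):
    """Estimate how many CPU cores a software packet-processing task keeps busy."""
    packets_per_second = throughput_bps / (packet_bytes * 8)
    cycles_per_second = packets_per_second * cycles_per_packet
    return cycles_per_second / clock_hz


if __name__ == "__main__":
    # Hypothetical workload: 100 Gbit/s of 1,500-byte packets,
    # 1,800 cycles of CPU work per packet, 3 GHz cores.
    cores = cores_for_packet_processing(
        throughput_bps=100e9, packet_bytes=1_500, cycles_per_packet=1_800, clock_hz=3e9
    )
    print(f"cores busy with packet processing: {cores:.1f}")
```

Under these assumptions, software packet processing would occupy about five cores that a DPU could hand back to applications. Smaller packets or heavier per-packet work such as encryption would raise the figure.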

Heterogeneous computing adds more flexibility for future workloads

While today's 5G data centers already use CPUs and DPUs to support vRAN and other 5G applications, next-generation 5G data centers will need to offer greater flexibility in hardware architecture, software platforms and service models. For example, a data center might use GPUs to process complex math, graphics, video or other large datasets; GPUs or TPUs for machine learning workloads; and FPGAs for programmable logic that handles more customized workloads.

This type of heterogeneous computing helps improve the performance, efficiency, and scalability of converged, intelligent edge data centers that can deliver RAN services, cloud computing capabilities, and AI application services closer to end users. Ultimately, moving more specialized computing closer to devices and end users can reduce latency and enable many of the next-generation use cases promised by 5G.
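Part of the latency benefit of edge placement comes down to physics. Light in optical fiber travels at roughly 2 × 10^8 m/s, about 5 µs per kilometer, so distance alone sets a floor on round-trip time. The sketch compares a hypothetical edge site 10 km away with a regional data center 500 km away. It assumes a straight fiber path and ignores queuing, switching and processing delays. The distances and the function name are illustrative assumptions.

```python
# Approximate speed of light in optical fiber (refractive index of about 1.5).
FIBER_SPEED_M_PER_S = 2.0e8


def fiber_rtt_ms(distance_km):
    """Round-trip propagation delay over fiber, ignoring queuing and processing."""
    one_way_s = distance_km * 1_000 / FIBER_SPEED_M_PER_S
    return 2 * one_way_s * 1_000


if __name__ == "__main__":
    for label, km in [("edge site", 10), ("regional data center", 500)]:
        print(f"{label:>22}: {fiber_rtt_ms(km):.2f} ms")
```

The edge site's propagation delay is about 0.1 ms round trip, compared with about 5 ms for the regional site. Actual latency is higher in both cases, but faster hardware alone cannot recover the distance penalty.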

SoftBank, Nvidia, and Arm recently announced a collaboration aimed at creating the world's most advanced data centers for artificial intelligence and high-performance computing (HPC). With its emphasis on dedicated processing, it is one of the best current examples of this type of data center transformation. The collaboration is expected to provide a pioneering platform for generative AI and 5G/6G applications based on the Nvidia GH200 Grace Hopper Superchip, which SoftBank plans to roll out at new distributed AI data centers across Japan. These data centers will be more evenly distributed than those used in the past and will be able to handle both artificial intelligence and 5G workloads. This should let them operate closer to peak capacity with low latency while drastically reducing overall energy costs.

SoftBank will work with Nvidia to build a data center that paves the way for the rapid deployment of generative AI applications and services around the world. […] cost and provide more services.

Such innovations represent an important inflection point for 5G and artificial intelligence. The entire data center is built around targeted performance delivered by specialized processing, giving operators the operational efficiency they need to realize the potential of vRAN. Specialized processing also lets operators co-host compute-intensive services, such as artificial intelligence or 3D video conferencing, in the same physical location, closer to the data and closer to the user. This matters because flexibility, low latency and performance efficiency make the best use of computing resources relative to the capital and energy costs they require. The result is a lower total cost of ownership (TCO) for the entire data center.
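A simplified model makes the TCO argument concrete. It takes a server's yearly cost (amortized purchase price plus energy) and divides it by the server's utilization, giving the cost of the capacity that actually does useful work. All figures are hypothetical assumptions, including the 30% and 70% utilization levels for a dedicated vRAN server versus one co-hosting vRAN and AI workloads. The model also treats power draw as constant, and the function names are illustrative.

```python
HOURS_PER_YEAR = 8_760


def annual_cost(capex, lifetime_years, power_kw, price_per_kwh):
    """Straight-line amortized hardware cost plus energy, per server per year.

    Simplification: power draw is treated as constant regardless of load.
    """
    amortized_capex = capex / lifetime_years
    energy_cost = power_kw * HOURS_PER_YEAR * price_per_kwh
    return amortized_capex + energy_cost


def cost_per_utilized_year(capex, lifetime_years, power_kw, price_per_kwh, utilization):
    """Yearly cost divided by the fraction of capacity doing useful work."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return annual_cost(capex, lifetime_years, power_kw, price_per_kwh) / utilization


if __name__ == "__main__":
    # Hypothetical server: 20,000 purchase price over 5 years, 1 kW draw,
    # electricity at 0.15 per kWh.
    params = dict(capex=20_000, lifetime_years=5, power_kw=1.0, price_per_kwh=0.15)
    for label, util in [("dedicated vRAN", 0.30), ("co-hosted vRAN + AI", 0.70)]:
        cost = cost_per_utilized_year(**params, utilization=util)
        print(f"{label:>20}: {cost:,.0f} per utilized server-year")
```

Most of the yearly cost is incurred whether or not the server is busy. Raising utilization through co-hosting therefore spreads that cost over more useful work. A fuller model would also account for load-dependent power draw and any extra hardware the co-hosted workloads need.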

As we look ahead to the next generation of 5G data centers, the need for flexible and efficient computing platforms is paramount. To meet the growing demand for data center services driven by 5G and artificial intelligence, next-generation data centers will be defined by their ability to adapt to new technologies and workloads and to deliver the performance and efficiency that businesses and consumers require. We will therefore continue to see efficient, specialized chips as the foundation for innovation and transformation in this field.