Physical server network performance optimization

#01. Basic knowledge

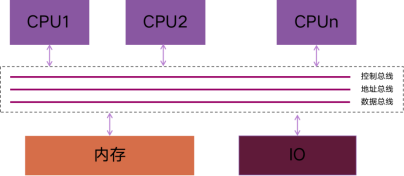

An interrupt is essentially an electrical signal. The hardware generates it and sends it to the interrupt controller, which in turn signals the CPU. There are two common interrupt controllers: the programmable interrupt controller (8259A) and the advanced programmable interrupt controller (APIC). The 8259A is suitable only for single-CPU systems, whereas the APIC can take full advantage of multi-CPU, multi-core SMP (Symmetric Multi-Processor) systems by delivering interrupts to each CPU, which improves parallelism and performance. In addition, Linux kernels after version 2.4 support assigning different hardware interrupt requests (IRQs) to specific CPUs through SMP IRQ affinity.

SMP IRQ affinity lets the system restrict or redistribute the interrupt workload of the server so that it runs more efficiently. Take network card interrupts as an example. When SMP IRQ affinity is not set, all network card interrupts are associated with CPU0. CPU0 then becomes overloaded, cannot process network packets quickly enough, and is prone to becoming a performance bottleneck. With SMP IRQ affinity, the interrupts of the network card are allocated to multiple CPUs, which spreads the CPU load and speeds up data processing.

Figure 1 SMP symmetric multiprocessor structure

In addition, Linux provides irqbalance to optimize interrupt allocation. It automatically collects system data to analyze usage patterns and, depending on the system load, puts itself into Performance Mode or Power-Save Mode. In Performance Mode, irqbalance distributes interrupts across the CPUs as evenly as possible to make full use of multiple cores and improve performance; in Power-Save Mode, it concentrates interrupts on fewer CPUs so that the other CPUs get more sleep time, which reduces energy consumption.

Normally, when a network card has only one queue for receiving network packets, those packets can be processed by only a single core at any given time, so processing is prone to blocking. To address this, the network card multi-queue mechanism was introduced. A single network card supports multiple queues for sending and receiving packets, so the packets in different queues can be distributed to different CPUs and processed at the same time. RSS (Receive Side Scaling) is a hardware feature of the network card that implements multiple queues. Each queue corresponds to an interrupt number, and interrupt binding distributes the network card interrupts across multiple CPUs and cores.

However, under heavy network load, the I/O of a multi-queue network card generates a large number of interrupts. At that point, the number of queues has a great impact on how efficiently the network card's packets are processed. According to practical experience, interrupt processing for network card I/O is most efficient when the total number of queues of the network card in a single PCIe slot equals the number of physical cores of the CPU connected to that slot. For example, if a single CPU has 32 physical cores and each network card has 2 ports, the number of queues per port should be set to 32 / 2 = 16.
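The sizing rule above is plain integer arithmetic. A minimal sketch follows; the helper name `queues_per_port` is hypothetical, and it assumes that all ports of the card share the cores of one CPU.

```shell
#!/bin/sh
# queues_per_port CORES PORTS
# Hypothetical helper: divide the physical cores of the CPU attached to the
# PCIe slot evenly across the ports of the network card in that slot.
queues_per_port() {
    cores=$1
    ports=$2
    if [ "$ports" -le 0 ]; then
        echo "ports must be positive" >&2
        return 1
    fi
    # Integer division; any remainder cores are left without a queue.
    echo $((cores / ports))
}

# Example from the text: 32 physical cores, 2 ports per card. Prints 16.
queues_per_port 32 2
```

The result is the value that would be passed as the queue count when configuring each port.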

By default, the operating system relies on the irqbalance service to decide which CPU core processes the packets in each network card queue. When the core that irqbalance picks to handle a network card interrupt is not on the CPU die or CPU connected to the PCIe slot of the network card, handling the interrupt requires cross-die or cross-CPU access, and interrupt processing is relatively inefficient. Therefore, after setting the number of network card queues, we also need to stop the irqbalance service and bind the interrupt number of each queue to a CPU core on the die or CPU local to the network card. This avoids the extra overhead of cross-die or cross-CPU access and further improves network processing performance.
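To see which CPUs are local to a network card, one option is to read the `numa_node` and `local_cpulist` attributes that Linux exposes in sysfs for PCI devices. The sketch below is an illustration, not the author's method; the function name `nic_local_cpus` and the optional root argument are assumptions made for this example.

```shell
#!/bin/sh
# nic_local_cpus IFACE [SYSFS_ROOT]
# Print the NUMA node and the CPUs that sysfs reports as local to the PCI
# device behind IFACE. SYSFS_ROOT defaults to /sys; it is a parameter only
# so the function can be tried against a copy of the tree.
nic_local_cpus() {
    iface=$1
    root=${2:-/sys}
    dev="$root/class/net/$iface/device"
    if [ ! -r "$dev/local_cpulist" ]; then
        echo "no local_cpulist for $iface (virtual or missing device?)" >&2
        return 1
    fi
    node=$(cat "$dev/numa_node" 2>/dev/null || echo unknown)
    echo "numa_node=$node cpus=$(cat "$dev/local_cpulist")"
}

# eth0 is the interface name used throughout this article.
nic_local_cpus eth0 || echo "eth0 is not available on this machine"
```

The CPU list printed here is a reasonable candidate set for the interrupt binding described in the practice section.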

#02. Hands-on practice

1. Determine whether the current system environment supports multi-queue network cards.

# lspci -vvv

If the Ethernet entry contains a line such as MSI-X: Enable+ Count=9 Masked-, the current system environment supports multi-queue network cards; otherwise it does not.
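The check in step 1 can be scripted by searching the `lspci` text for an enabled MSI-X capability. This is a minimal sketch; the function name `has_msix_enabled` is hypothetical, and the sample line is the statement quoted above rather than captured output.

```shell
#!/bin/sh
# has_msix_enabled: read `lspci -vvv` text on stdin and succeed if any
# line reports MSI-X as enabled.
has_msix_enabled() {
    grep -q 'MSI-X: Enable+'
}

# Sample line taken from the statement quoted in step 1.
sample='MSI-X: Enable+ Count=9 Masked-'
if printf '%s\n' "$sample" | has_msix_enabled; then
    echo "MSI-X enabled: multi-queue is supported"
else
    echo "MSI-X not enabled"
fi
```

On a real host the function would be fed from `lspci -vvv`. Note that it matches any device in the input, so restricting `lspci` to the network card with `-s` and the card's bus address gives a more precise answer.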

2. Check whether the network card supports multiple queues, how many queues are supported at most, and how many are currently enabled.

# ethtool -l eth0    ## Show the multi-queue configuration of eth0
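The two numbers of interest in the `ethtool -l` report are the maximum and the current `Combined` channel counts. The sketch below extracts them with awk; the function name `combined_channels` is hypothetical, and the sample input follows the usual layout of the report with made-up values.

```shell
#!/bin/sh
# combined_channels: read `ethtool -l` text on stdin and print
# "max=<N> current=<M>" for the Combined channel count.
combined_channels() {
    awk '
        /^Pre-set maximums/          { section = "max" }
        /^Current hardware settings/ { section = "current" }
        /^Combined:/                 { value[section] = $2 }
        END { printf "max=%s current=%s\n", value["max"], value["current"] }
    '
}

# Illustrative input (values are made up, not captured from a real card).
combined_channels <<'EOF'
Channel parameters for eth0:
Pre-set maximums:
RX:             0
TX:             0
Other:          1
Combined:       16
Current hardware settings:
RX:             0
TX:             0
Other:          1
Combined:       8
EOF
```

On a real host the same function can be used as `ethtool -l eth0 | combined_channels`.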

3. Set the number of queues that the network card uses.

# ethtool -L eth0 combined <N>    ## Set the number of queues for eth0; N is the number of queues to use

4. Confirm that the multi-queue setting has taken effect by listing the queue directory.

# ls /sys/class/net/eth0/queues/    ## Confirm that the number of rx queues equals the configured value

rx-0 rx-2 rx-4 rx-6 tx-0 tx-2 tx-4 tx-6
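Counting the `rx-*` entries by hand is error-prone when there are many queues. A minimal sketch of an automated count follows; the function name `count_rx_queues` and the optional base-directory argument are assumptions made for this example.

```shell
#!/bin/sh
# count_rx_queues IFACE [NET_ROOT]
# Count the rx-* entries under <NET_ROOT>/<IFACE>/queues.
# NET_ROOT defaults to /sys/class/net.
count_rx_queues() {
    dir="${2:-/sys/class/net}/$1/queues"
    n=0
    for q in "$dir"/rx-*; do
        # When nothing matches, the pattern stays literal and does not exist.
        if [ -e "$q" ]; then
            n=$((n + 1))
        fi
    done
    echo "$n"
}

# Prints 0 when eth0 does not exist on this machine.
count_rx_queues eth0
```

The printed number can then be compared with the value N passed to `ethtool -L` in step 3.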

5. Check the interrupt numbers that the system has assigned to the network card port.

# cat /proc/interrupts | grep -i eth0
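For the binding step later on, only the IRQ numbers in the first column are needed. The sketch below pulls them out of text in `/proc/interrupts` format; the function name `irqs_for` is hypothetical, and the sample rows (IRQ numbers, counters, device names) are invented for illustration.

```shell
#!/bin/sh
# irqs_for NAME: read /proc/interrupts-style text on stdin and print the IRQ
# number of every line that mentions NAME (case-insensitive, like grep -i).
irqs_for() {
    awk -v name="$1" '
        index(tolower($0), tolower(name)) {
            sub(/:$/, "", $1)   # first field looks like "98:"
            print $1
        }
    '
}

# Illustrative input with invented numbers.
irqs_for eth0 <<'EOF'
           CPU0       CPU1
 98:       1200          0   IR-PCI-MSI 1048576-edge      eth0-TxRx-0
 99:          0       1180   IR-PCI-MSI 1048577-edge      eth0-TxRx-1
100:         12          0   IR-PCI-MSI 2097152-edge      nvme0q0
EOF
```

On a real host the function would read the live table: `irqs_for eth0 < /proc/interrupts`.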

6. Stop the irqbalance service manually.

# service irqbalance stop

7. Update the affinity file to set the interrupt binding.

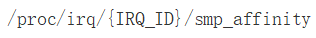

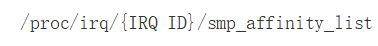

Interrupt binding can be set by modifying the smp_affinity or smp_affinity_list file of the interrupt (on Linux these files are located under /proc/irq/{IRQ_ID}/), where {IRQ_ID} is the corresponding interrupt number. The content of smp_affinity is a hexadecimal CPU bitmask, and the content of smp_affinity_list is a decimal list of CPU numbers. The two files are linked: after one is modified, the other changes accordingly.
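The relationship between the two file formats, and one possible way to spread IRQs over a set of CPUs, can be sketched as follows. The helper names `cpu_to_mask` and `plan_affinity` are hypothetical, the round-robin policy is an assumption rather than the author's method, and the IRQ and CPU numbers are invented. The sketch only prints a plan and writes nothing.

```shell
#!/bin/sh
# cpu_to_mask CPU: hex bitmask with only bit CPU set (the smp_affinity form).
# Intended for small CPU numbers; for large ones smp_affinity_list is simpler.
cpu_to_mask() {
    printf '%x\n' $((1 << $1))
}

# plan_affinity "IRQS" "CPUS": pair each IRQ with a CPU, round-robin.
# Prints one "irq cpu" line per IRQ.
plan_affinity() {
    awk -v irqs="$1" -v cpus="$2" 'BEGIN {
        n = split(irqs, irq, " ")
        m = split(cpus, cpu, " ")
        if (m == 0) exit 1
        for (i = 1; i <= n; i++)
            print irq[i], cpu[(i - 1) % m + 1]
    }'
}

cpu_to_mask 4                        # prints 10 (binary 10000)
plan_affinity "98 99 100 101" "0 1"  # prints 98 0, 99 1, 100 0, 101 1

# To apply a plan on a real host (as root, with irqbalance stopped):
#   plan_affinity "$irqs" "$cpus" | while read -r irq cpu; do
#       echo "$cpu" > "/proc/irq/$irq/smp_affinity_list"
#   done
```

Writing the decimal CPU number to smp_affinity_list avoids computing the mask by hand; `cpu_to_mask` is shown only to illustrate what the corresponding smp_affinity value looks like.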

#03. Lessons learned

1. You can either change the value in the smp_affinity file manually to bind an IRQ to a specific CPU core, or enable the irqbalance service to bind IRQs to CPU cores automatically. On multi-CPU, multi-core SMP systems, irqbalance is generally enabled by default to simplify configuration while maintaining performance.

2. For applications such as file servers and high-traffic Web servers, binding the different network card IRQs to different CPUs in a balanced manner reduces the burden on any single CPU and improves the overall ability of the CPUs to handle interrupts.

3. For applications such as database servers, binding the disk controller to one CPU and the network card to another CPU can improve the response time of the database.

To sum up, performance optimization is a process rather than a result, and it requires a great deal of testing, observation, verification, and improvement. IRQs should therefore be balanced according to the configuration of the production environment and the characteristics of the application, so as to keep improving the overall throughput and performance of the system.