An article explaining the principles of Docker networking

Overview

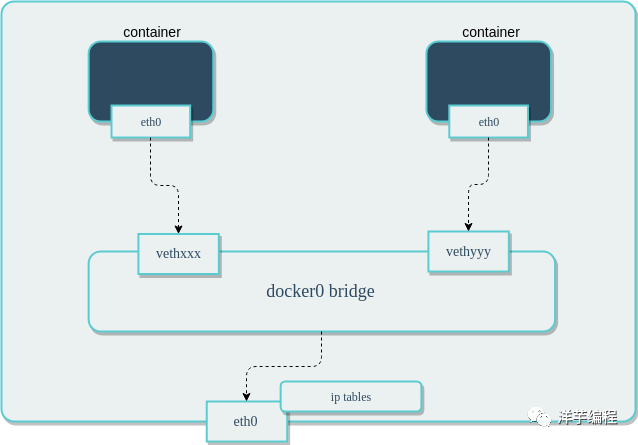

Docker's native networking is built on Linux network namespaces (netns) and virtual network devices (veth pairs). When the Docker daemon starts, it creates a virtual bridge named docker0 on the host. By default, containers started on that host are attached to this bridge.

$ ifconfig

# Output

docker0: ... mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

...

A virtual bridge works much like a physical switch: all containers attached to it on the host form a single Layer 2 network.

For each new container, Docker creates a veth pair (a pair of virtual network interfaces) on the host. One end is moved into the container's network namespace and renamed eth0, where it serves as the container's network card. The other end stays on the host under a name like vethxxx and is attached to the docker0 bridge. Docker then gives the container an IP address from the docker0 subnet and sets docker0's IP address as the container's default gateway.
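The docker0 address shown earlier (172.17.0.1 with netmask 255.255.0.0) defines the 172.17.0.0/16 subnet that container addresses come from. The Python sketch below shows the idea using the standard ipaddress module. It hands out container addresses in order and skips the gateway. This is a simplified model, not Docker's IPAM implementation, and the function name `allocate_addresses` is hypothetical.

```python
from __future__ import annotations

import ipaddress


def allocate_addresses(subnet: str, gateway: str, count: int) -> list[str]:
    """Hand out `count` container addresses from `subnet`, skipping the gateway.

    Simplified model: addresses are taken in ascending order from the usable
    host range. It is not Docker's IPAM code.
    """
    if count <= 0:
        return []
    network = ipaddress.ip_network(subnet)
    gw = ipaddress.ip_address(gateway)
    if gw not in network:
        raise ValueError(f"gateway {gw} is not inside {network}")
    allocated: list[str] = []
    for host in network.hosts():  # excludes the network and broadcast addresses
        if host == gw:
            continue  # docker0 already owns the gateway address
        allocated.append(str(host))
        if len(allocated) == count:
            return allocated
    raise RuntimeError(f"{network} has fewer than {count} free addresses")


if __name__ == "__main__":
    net = ipaddress.ip_network("172.17.0.0/16")
    # Netmask, broadcast address and number of usable host addresses.
    print(net.netmask, net.broadcast_address, net.num_addresses - 2)
    print(allocate_addresses("172.17.0.0/16", "172.17.0.1", 3))
```

A /16 leaves 65,534 usable host addresses. One of them is taken by the docker0 gateway, and the rest are available to containers.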

Docker also configures iptables rules, including NAT, for the docker0 bridge on the host. Once these steps are complete, the container can use its eth0 interface to talk to other containers and to reach the external network.

By default, all of Docker's network interfaces are virtual. Linux moves data between virtual interfaces by copying it inside the kernel. A packet sent on one interface is copied directly into the receive buffer of the other, without passing through a physical network device. This keeps forwarding efficient both between containers and between containers and the host.

# List the veth devices on the host

$ ifconfig | grep veth

veth06f40aa:

...

vethfdfd27a:

Image source: https://www.suse.com/c/rancher_blog/introduction-to-container-networking/

iptables rules connect docker0 to the host's network interface. Incoming requests that match these rules are forwarded by iptables to docker0, which then delivers them to the corresponding containers.

# View Docker's iptables configuration

$ iptables -t nat -L

# Output

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DOCKER all -- anywhere anywhere ADDRTYPE match dst-type LOCAL

Chain DOCKER (2 references)

target prot opt source destination

RETURN all -- anywhere anywhere

Network drivers

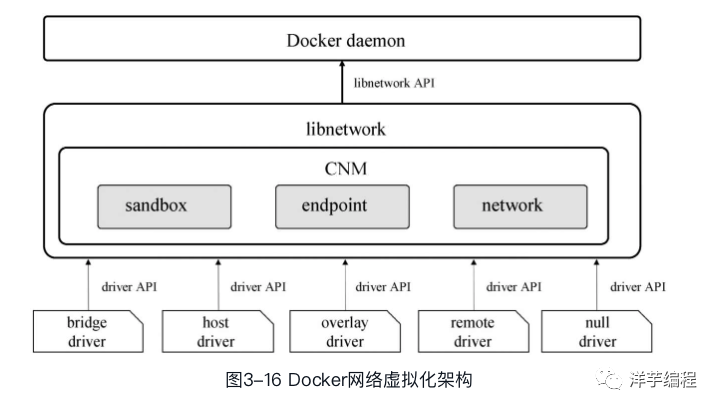

Docker's networking subsystem is pluggable and built on drivers. Several drivers are available by default, and together they provide the core networking functionality:

| Driver | Description |
| --- | --- |
| bridge | The default network driver; typically used when applications running in standalone containers need to communicate |
| host | Removes network isolation between the container and the host; the container uses the host's network directly |
| overlay | Connects multiple Docker daemons and lets swarm (cluster) services communicate with each other |
| ipvlan | Gives users full control over both IPv4 and IPv6 addressing |
| macvlan | Assigns a MAC address to a container, making it appear as a physical device on the network |
| none | Disables all networking for the container |
| Network plugins | Install and use third-party network plugins with Docker |

Image source: Docker - Containers and Container Clouds

The Docker daemon creates and manages networks by calling the API provided by libnetwork. libnetwork implements the Container Network Model (CNM), which has three main components: sandbox, endpoint and network.

• Sandbox: A sandbox holds the configuration of a container's network stack, including its interfaces, routing table and DNS settings. A sandbox can contain multiple endpoints from multiple networks. It can be implemented as a Linux network namespace, a FreeBSD jail or a similar mechanism.

• Endpoint: An endpoint joins a sandbox to a network. An endpoint belongs to exactly one network and one sandbox. It can be implemented as a veth pair, an Open vSwitch internal port or a similar device.

• Network: A network is a group of endpoints that can communicate with each other directly. A network can contain many endpoints and can be implemented as a Linux bridge, a VLAN, etc.
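To make these relationships concrete, here is a toy Python model of the three CNM objects. It is an illustration only: libnetwork itself is written in Go, its real types and API differ, and every class and function name below is hypothetical. The model encodes the rules listed above:

- an endpoint belongs to exactly one sandbox and one network;
- a sandbox can hold several endpoints;
- two sandboxes can talk directly only when they have endpoints on a common network.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# eq=False keeps identity semantics, so objects that reference each other
# can be compared and hashed without recursing through the object graph.
@dataclass(eq=False)
class Network:
    """A group of endpoints that can talk directly (e.g. a Linux bridge)."""
    name: str
    endpoints: list[Endpoint] = field(default_factory=list, repr=False)


@dataclass(eq=False)
class Sandbox:
    """One container's network stack (e.g. a network namespace)."""
    container: str
    endpoints: list[Endpoint] = field(default_factory=list, repr=False)


@dataclass(eq=False)
class Endpoint:
    """Joins exactly one sandbox to exactly one network (e.g. a veth pair)."""
    name: str
    network: Network
    sandbox: Sandbox


def connect(sandbox: Sandbox, network: Network, name: str) -> Endpoint:
    """Create an endpoint attaching `sandbox` to `network`."""
    endpoint = Endpoint(name=name, network=network, sandbox=sandbox)
    network.endpoints.append(endpoint)
    sandbox.endpoints.append(endpoint)
    return endpoint


def can_talk_directly(a: Sandbox, b: Sandbox) -> bool:
    """True if the two sandboxes have endpoints on at least one common network."""
    networks_a = {ep.network for ep in a.endpoints}
    return any(ep.network in networks_a for ep in b.endpoints)


if __name__ == "__main__":
    bridge, backend = Network("bridge"), Network("backend")
    web, db, cache = Sandbox("web"), Sandbox("db"), Sandbox("cache")
    connect(web, bridge, "veth-web")
    connect(db, bridge, "veth-db")
    connect(cache, backend, "veth-cache")
    print(can_talk_directly(web, db), can_talk_directly(web, cache))
```

Attaching a sandbox to a second network simply means creating another endpoint. This mirrors how one container can be connected to several Docker networks at once.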

Bridge mode

bridge is the default network mode. It creates a separate network namespace for each container, so each container gets its own network interface and a complete network stack. All containers in this mode are attached to the docker0 bridge, which acts as a virtual switch and lets them communicate with each other. The containers' veth addresses sit on a private subnet that differs from the host's network segment. To let them communicate beyond the host, Docker uses port binding: iptables NAT rules forward traffic arriving on a host port to the container.

Bridge mode covers the most basic needs of Docker containers. However, all traffic to and from the outside world goes through NAT, which adds complexity and can be limiting in complex scenarios.

$ docker network inspect bridge

# Output (excerpt)

[

{

"Name": "bridge",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Containers": {

# List of containers using the bridge network

},

}

]

The output shows that the gateway of the bridge network, 172.17.0.1, is the IP address of the docker0 virtual bridge.

Pick a container from the Containers list in the output and inspect its network type and configuration:

$ docker inspect <container-ID>

# Output (excerpt)

[

...

"NetworkSettings": {

"Bridge": "",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.4",

"Networks": {

"bridge": {

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.4",

}

}

...

]

The output shows two things. The container's gateway, 172.17.0.1, is the IP address of the docker0 virtual bridge, and the container itself was assigned 172.17.0.4 from the bridge subnet.
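The two inspect outputs can also be cross-checked programmatically. The Python sketch below loads reduced, valid-JSON copies of the excerpts above; real output has many more fields. It then checks that the container's address lies in the bridge subnet and that its gateway matches the network's gateway. In practice you would feed it the stdout of `docker network inspect bridge` and `docker inspect <container-ID>`. The function name is hypothetical.

```python
import ipaddress
import json

# Trimmed-down, valid-JSON versions of the two `docker inspect` excerpts above.
NETWORK_JSON = """
[{"Name": "bridge", "Driver": "bridge",
  "IPAM": {"Config": [{"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}]}}]
"""
CONTAINER_JSON = """
[{"NetworkSettings": {"Networks": {"bridge": {"Gateway": "172.17.0.1",
                                              "IPAddress": "172.17.0.4"}}}}]
"""


def check_container_on_network(network_json: str, container_json: str) -> dict:
    """Cross-check a container's address against the network's IPAM config."""
    net = json.loads(network_json)[0]
    ctr = json.loads(container_json)[0]
    ipam = net["IPAM"]["Config"][0]
    subnet = ipaddress.ip_network(ipam["Subnet"])
    # Settings of this container on the network being checked ("bridge").
    settings = ctr["NetworkSettings"]["Networks"][net["Name"]]
    ip = ipaddress.ip_address(settings["IPAddress"])
    return {
        "ip_in_subnet": ip in subnet,
        "gateway_matches": settings["Gateway"] == ipam["Gateway"],
    }


if __name__ == "__main__":
    print(check_container_on_network(NETWORK_JSON, CONTAINER_JSON))
```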

Implementation Mechanism

Port forwarding is implemented with a DNAT rule in iptables:

# View the iptables NAT configuration

$ iptables -t nat -vnL

# Output

Chain PREROUTING (policy ACCEPT 37M packets, 2210M bytes)

...

0 0 DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:8080 to:172.17.0.4:80

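When a container publishes a port to the host, Docker automatically adds an iptables DNAT rule. The rule forwards traffic arriving at that host port to the container's IP address and port. For example, publishing container port 80 as host port 8080 (`-p 8080:80`) yields a rule like the one above.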
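The effect of the rule above can be modeled in a few lines of Python. This is a simplified sketch, not real netfilter: it ignores the PREROUTING-to-DOCKER jump, address-type matching and connection tracking. The class and function names are hypothetical, and 192.0.2.10 merely stands in for the host's external address. It shows that a TCP packet to port 8080 is rewritten to 172.17.0.4:80, unless the packet came in on docker0 itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DnatRule:
    """Simplified form of: DNAT tcp -- !docker0 * ... tcp dpt:8080 to:172.17.0.4:80"""
    not_in_iface: str  # "!docker0": skip packets that arrive from the bridge itself
    proto: str
    dport: int
    to_addr: str
    to_port: int


@dataclass(frozen=True)
class Packet:
    in_iface: str
    proto: str
    dst_addr: str
    dport: int


def apply_dnat(pkt: Packet, rules: list[DnatRule]) -> Packet:
    """Rewrite the destination using the first matching rule (toy nat table)."""
    for rule in rules:
        if (pkt.in_iface != rule.not_in_iface
                and pkt.proto == rule.proto
                and pkt.dport == rule.dport):
            return Packet(pkt.in_iface, pkt.proto, rule.to_addr, rule.to_port)
    return pkt  # no rule matched: destination left unchanged


if __name__ == "__main__":
    rules = [DnatRule("docker0", "tcp", 8080, "172.17.0.4", 80)]
    # 192.0.2.10 stands in for the host's external address (hypothetical).
    incoming = Packet("eth0", "tcp", "192.0.2.10", 8080)
    print(apply_dnat(incoming, rules))
```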

Host mode

In host mode, the container does not get its own network namespace; it shares the host's. The container does not create a virtual network interface or configure its own IP address. Instead, it uses the host's network stack directly. Other aspects of the container, such as the file system and process list, remain isolated from the host. Network-wise, though, the container is fully exposed: anyone who can reach the host can also reach the container.

Host mode removes network-level isolation, both between containers and between containers and the host. It has a performance advantage, because traffic does not pass through NAT or the bridge. However, containers then compete for network resources such as ports, which can cause conflicts, so host mode suits small container deployments.
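The port contention mentioned above is easy to reproduce without Docker. By default, only one socket in a network namespace can listen on a given address and port. The Python sketch below is an analogy, and its helper name is hypothetical. It opens a listening socket on the loopback address, then tries to bind a second socket to the same port. On Linux the second bind fails with EADDRINUSE, which is what would happen if two host-mode containers both tried to serve the same port.

```python
import errno
import socket
from typing import Optional, Tuple


def try_bind_twice(host: str = "127.0.0.1") -> Tuple[int, Optional[int]]:
    """Listen on a free port, then try to bind a second socket to that same port.

    Returns (port, errno of the failed second bind), or (port, None) if the
    second bind unexpectedly succeeded.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))  # port 0: let the kernel choose a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind((host, port))  # same namespace, same address and port
        except OSError as exc:
            return port, exc.errno
        return port, None
    finally:
        second.close()
        first.close()


if __name__ == "__main__":
    port, err = try_bind_twice()
    if err is None:
        print(f"second bind on port {port} succeeded")
    else:
        print(f"second bind on port {port} failed: {errno.errorcode.get(err, err)}")
```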

Start an Nginx container with network type host:

$ docker run -d --net host nginx

Unable to find image 'nginx:latest' locally

latest: Pulling from library/nginx

...

f202870092fc40bc08a607dddbb2770df9bb4534475b066f45ea35252d6e76e2

View a list of containers with network type host:

$ docker network inspect host

# Output (excerpt)

[

{

"Name": "host",

"Scope": "local",

"Driver": "host",

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"Containers": {

# List of containers using the host network

"f202870092fc40bc08a607dddbb2770df9bb4534475b066f45ea35252d6e76e2": {

"Name": "frosty_napier",

"EndpointID": "7306a8e4103faf4edd081182f015fa9aa985baf3560f4a49b9045c00dc603190",

"MacAddress": "",

"IPv4Address": "",

"IPv6Address": ""

}

},

}

]

View the Nginx container's network type and configuration:

$ docker inspect f202870092fc4

# Output (excerpt)

[

...

"NetworkSettings": {

"Bridge": "",

"Gateway": "",

"IPAddress": "",

"Networks": {

"host": {

"Gateway": "",

"IPAddress": "",

}

}

...

]

The output shows that the Nginx container uses the host network and has no IP address of its own.

Check the IP addresses visible from inside the Nginx container:

# Open a shell inside the container

$ docker exec -it f202870092fc4 /bin/bash

# Install the ip command (iproute2 package)

$ apt update && apt install -y iproute2

# Show IP addresses

$ ip a

# Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

...

2: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

...

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

...

# Exit the container and show the host's IP addresses

$ exit

$ ip a

# Output

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

...

2: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

...

10: eth0@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

...

The two listings are identical. The Nginx container has no IP address of its own and uses the host's interfaces and addresses directly.

Check which ports are listening on the host:

$ sudo netstat -ntpl

# Output

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1378/nginx: master

tcp6 0 0 :::80 :::* LISTEN 1378/nginx: master

The output shows that port 80 is held directly by the nginx process, not by docker-proxy, the userland proxy Docker normally runs for ports published in bridge mode.
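When checking many hosts, this comparison can be scripted. The hypothetical Python helper below parses `netstat -ntpl` text (here, the lines shown above) and reports which process owns a listening port. That makes it easy to tell a host-mode container's own process apart from docker-proxy. The helper assumes the column layout of the net-tools netstat output shown. Other tools, such as ss, format their output differently.

```python
from __future__ import annotations

# The lines shown in the netstat output above.
NETSTAT_OUTPUT = """\
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1378/nginx: master
tcp6 0 0 :::80 :::* LISTEN 1378/nginx: master
"""


def listeners_on_port(netstat_text: str, port: int) -> list[tuple[str, str]]:
    """Return (pid, program) pairs for sockets in LISTEN state on `port`."""
    found = []
    for line in netstat_text.splitlines():
        # Split into at most 7 fields: the program name may contain spaces.
        fields = line.split(None, 6)
        if len(fields) < 7 or fields[5] != "LISTEN":
            continue  # header lines and non-listening sockets
        local_port = fields[3].rsplit(":", 1)[-1]  # handles 0.0.0.0:80 and :::80
        if local_port == str(port):
            pid, _, program = fields[6].partition("/")
            found.append((pid, program))
    return found


if __name__ == "__main__":
    owners = listeners_on_port(NETSTAT_OUTPUT, 80)
    print(owners)
    print("docker-proxy involved:", any(p.startswith("docker-proxy") for _, p in owners))
```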

None mode

In none mode, the container has its own network namespace, but Docker configures no networking in it. The container has no network interface other than loopback, no IP address and no routes. If it needs connectivity, you must add a network interface and configure an IP address for it yourself. A container in none mode is completely isolated. Only the loopback interface lo is available, for communication between processes inside the container.

None mode does the least network setup of all the modes. Because the container starts with no network configuration, it offers the most flexibility for building a fully custom network setup.

Start an Nginx container with network type none:

$ docker run -d --net none nginx

Unable to find image 'nginx:latest' locally

latest: Pulling from library/nginx

...

d2d0606b7d2429c224e61e06c348019b74cd47f0b8c85347a7cdb8f1e30dcf86

View a list of containers with a network type of none:

$ docker network inspect none

# Output (excerpt)

[

{

"Name": "none",

"Scope": "local",

"Driver": "null",

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"Containers": {

# List of containers using the none network

"d2d0606b7d2429c224e61e06c348019b74cd47f0b8c85347a7cdb8f1e30dcf86": {

"Name": "hardcore_chebyshev",

"EndpointID": "b8ff645671518e608f403818a31b1db34d7fce66af60373346ea3ab673a4c6b2",

"MacAddress": "",

"IPv4Address": "",

"IPv6Address": ""

}

},

}

]

View the Nginx container's network type and configuration:

$ docker inspect d2d0606b7d242

# Output (excerpt)

[

...

"NetworkSettings": {

"Bridge": "",

"Gateway": "",

"IPAddress": "",

"Networks": {

"none": {

"Gateway": "",

"IPAddress": "",

}

}

...

]

The output shows that the Nginx container uses the none network and has no IP address.

Check network access and the IP address from inside the Nginx container:

# Open a shell inside the container

$ docker exec -it d2d0606b7d242 /bin/bash

# Try to access a public URL

$ curl -I "https://www.docker.com"

curl: (6) Could not resolve host: www.docker.com

# Why does this fail? The container has no network interface apart from loopback, no IP address and no routes. It is a completely isolated environment, so it cannot reach the public Internet.

# Show IP addresses

$ hostname -I

# No output: the container has no IP address


Check whether any port is published to, or listening on, the host:

$ docker port d2d0606b7d242

or

$ sudo netstat -ntpl | grep :80

# No output: the Nginx process runs inside the container and its port is not mapped to the host


Container mode

Container mode is similar to host mode, except that the new container shares the network namespace of a specified existing container rather than the host's. There is no network isolation between the two containers, but both remain isolated from the host and from other containers. Containers in this mode can reach one another through localhost, which is efficient and saves network resources. This is useful in special scenarios such as Kubernetes Pods, whose containers share a single network namespace.
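Container mode is selected with `docker run --network container:<name|id>`. The small Python sketch below only builds that command line. The helper name, the container name `web` and the `busybox` command are hypothetical. Passing the resulting list to `subprocess.run` would start a container that shares `web`'s network namespace, so the two could reach each other on localhost.

```python
from __future__ import annotations

import shlex


def container_mode_run_args(target: str, image: str, *command: str) -> list[str]:
    """Build a `docker run` argv whose container joins `target`'s network namespace."""
    return ["docker", "run", "-d", "--network", f"container:{target}", image, *command]


if __name__ == "__main__":
    argv = container_mode_run_args("web", "busybox", "sleep", "3600")
    # shlex.join (Python 3.8+) renders the argv as a copy-pasteable shell command.
    print(shlex.join(argv))
```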

Other modes

For reasons of space, the other network modes are not covered here. Interested readers can consult the references at the end of the article.

Network Driver Overview

• Use bridge when multiple containers need to communicate on the same host.

• Use host when the network stack should not be isolated from the host, but you still want the other aspects of the container isolated.

• Use overlay when containers running on different hosts need to communicate.

• Use macvlan when migrating from a virtual-machine setup, or when containers need to look like physical hosts on the network. Each container gets its own MAC address.

• Use a third-party network plugin when you need to integrate Docker with a specialized network stack.

Docker and iptables

If you run Docker on a host that is reachable from the public Internet, you will want iptables rules that restrict access to the containers and other services running on that host.

Add iptables rules before Docker rules

Docker installs two custom iptables chains, DOCKER-USER and DOCKER, and ensures that incoming packets are always checked by these two chains first.

# You can list them with:

$ iptables -L -n -v | grep -i docker


All of Docker's iptables rules are added to the DOCKER chain. Do not modify this chain manually, as that may cause problems. If you need rules that take effect before Docker's, add them to the DOCKER-USER chain. Rules there are applied before any rules Docker creates automatically.

Rules added to the FORWARD chain, whether by hand or by another iptables-based firewall, are evaluated after these chains. As a result, a port published through Docker is exposed regardless of the rules your firewall has configured. If you want those rules to apply to ports published through Docker as well, you must add them to the DOCKER-USER chain.
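The effect of this ordering can be shown with a toy rule walker in Python. It is a simplified model, not real iptables. It ignores conntrack, the DOCKER-ISOLATION chains and interface matching, and the chain contents and function names are illustrative. In the model, a DROP rule appended to FORWARD never sees traffic to a published port, because the DOCKER chain accepts that traffic first. The same rule placed in DOCKER-USER does take effect.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A rule is (match function, target). The target is ACCEPT, DROP, RETURN,
# or the name of another chain to jump to.
Rule = Tuple[Callable[[dict], bool], str]


def match_any(pkt: dict) -> bool:
    return True


def to_port_80(pkt: dict) -> bool:
    return pkt["dport"] == 80


def evaluate(chains: Dict[str, List[Rule]], chain: str, pkt: dict) -> Optional[str]:
    """Walk `chain` top to bottom; return ACCEPT/DROP, or None to fall through."""
    for match, target in chains[chain]:
        if not match(pkt):
            continue
        if target in ("ACCEPT", "DROP"):
            return target
        if target == "RETURN":
            return None
        verdict = evaluate(chains, target, pkt)  # jump into a user-defined chain
        if verdict is not None:
            return verdict
    return None


def example_chains(drop_in_docker_user: bool) -> Dict[str, List[Rule]]:
    """Illustrative layout: Docker's jumps come first in FORWARD, your rule after."""
    your_rule: Rule = (to_port_80, "DROP")
    chains: Dict[str, List[Rule]] = {
        "FORWARD": [(match_any, "DOCKER-USER"), (match_any, "DOCKER")],
        "DOCKER-USER": [(match_any, "RETURN")],
        "DOCKER": [(to_port_80, "ACCEPT")],  # published container port
    }
    if drop_in_docker_user:
        chains["DOCKER-USER"].insert(0, your_rule)
    else:
        chains["FORWARD"].append(your_rule)  # e.g. appended by a host firewall
    return chains


if __name__ == "__main__":
    pkt = {"src": "203.0.113.7", "dport": 80}
    for placement in (False, True):
        verdict = evaluate(example_chains(placement), "FORWARD", pkt) or "policy"
        print("DROP in DOCKER-USER" if placement else "DROP in FORWARD", "->", verdict)
```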

Limit connections to the Docker host

By default, all external source IPs are allowed to connect to the Docker host. To let only a specific IP or network reach the containers, insert a negated rule at the top of the DOCKER-USER filter chain.

For example, to allow only 192.168.1.1 access:

# Assuming the external interface is eth0

$ iptables -I DOCKER-USER -i eth0 ! -s 192.168.1.1 -j DROP

You can also allow connections from a source subnet. For example, to allow access only from users on the 192.168.1.0/24 subnet:

# Assuming the external interface is eth0

$ iptables -I DOCKER-USER -i eth0 ! -s 192.168.1.0/24 -j DROP
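The negation in these rules is easy to misread. `! -s 192.168.1.0/24 -j DROP` drops every packet whose source is *outside* the given address or subnet. The Python sketch below mimics that single rule with the standard ipaddress module, treating a bare address such as 192.168.1.1 as a /32. It is an illustration only, and the helper name is hypothetical. "Falls through" means the packet continues to the next rule, which is not necessarily an accept.

```python
import ipaddress


def docker_user_allows(src: str, allowed: str, in_iface: str = "eth0",
                       rule_iface: str = "eth0") -> bool:
    """Mimic `iptables -I DOCKER-USER -i eth0 ! -s <allowed> -j DROP`.

    Returns False when the rule would DROP the packet, True when it falls through.
    """
    if in_iface != rule_iface:
        return True  # the rule only applies to packets entering on rule_iface
    src_ip = ipaddress.ip_address(src)
    allowed_net = ipaddress.ip_network(allowed)  # "192.168.1.1" becomes a /32
    return src_ip in allowed_net  # "! -s": drop everything NOT in allowed_net


if __name__ == "__main__":
    for src in ("192.168.1.1", "192.168.1.77", "10.0.0.5"):
        print(src, docker_user_allows(src, "192.168.1.0/24"))
```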

Prevent Docker from operating iptables

To stop Docker from manipulating iptables, set the iptables key to false in the Docker Engine configuration file, /etc/docker/daemon.json. This is best avoided, however, because it is very likely to break container networking for the Docker Engine.
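For reference, the setting is a single boolean key in daemon.json. The Python sketch below uses a hypothetical helper and should be pointed at a scratch file, not the live /etc/docker/daemon.json. It merges the key into an existing configuration without discarding other settings. Changes to the real file generally take effect only after the Docker daemon is restarted.

```python
from __future__ import annotations

import json
import tempfile
from pathlib import Path
from typing import Any


def set_daemon_option(path: Path, key: str, value: Any) -> dict:
    """Merge one key into a daemon.json-style file, keeping the other settings."""
    text = path.read_text() if path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    config[key] = value
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config


if __name__ == "__main__":
    # Use a scratch file; the real one is /etc/docker/daemon.json, and the
    # Docker daemon has to be restarted before a change there is picked up.
    with tempfile.TemporaryDirectory() as tmp:
        cfg = Path(tmp) / "daemon.json"
        cfg.write_text('{"log-driver": "json-file"}\n')
        set_daemon_option(cfg, "iptables", False)
        print(cfg.read_text())
```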

Set the default bind address for the container

By default, the Docker daemon publishes container ports on 0.0.0.0, that is, on every address of the host. To publish ports only on an internal IP address instead, use the daemon's --ip option to set a different default bind address.
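The daemon-wide --ip option changes the default for every published port. A single port can also be restricted when the container starts, with `docker run -p IP:HOST_PORT:CONTAINER_PORT`. The hypothetical Python helper below builds that flag for IPv4 addresses and validates the address first. For example, binding 127.0.0.1:8080 to container port 80 makes the service reachable only from the host itself.

```python
import ipaddress
from typing import List


def publish_flag(host_ip: str, host_port: int, container_port: int,
                 proto: str = "tcp") -> List[str]:
    """Build the `-p IP:HOST_PORT:CONTAINER_PORT[/proto]` arguments for `docker run`.

    IPv4 only in this sketch; IPv4Address raises ValueError for anything else.
    """
    ip = ipaddress.IPv4Address(host_ip)
    spec = f"{ip}:{host_port}:{container_port}"
    if proto != "tcp":  # tcp is the default, so only other protocols need a suffix
        spec += f"/{proto}"
    return ["-p", spec]


if __name__ == "__main__":
    # Reachable only from the host itself: 127.0.0.1:8080 -> container port 80.
    print(["docker", "run", "-d", *publish_flag("127.0.0.1", 8080, 80), "nginx"])
```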

Integration with firewalld

If you are running Docker 20.10.0 or later on a system with firewalld and iptables enabled, Docker automatically creates a firewalld zone named docker. It adds all the network interfaces it creates (such as docker0) to that zone to allow seamless networking.

To remove the Docker interface from a firewalld zone, consider running the following commands (substitute the appropriate zone and interface):

firewall-cmd --zone=trusted --remove-interface=docker0 --permanent

firewall-cmd --reload

References

• Networking overview[1]

• Networking tutorials[2]

• Docker and iptables[3]

• Detailed explanation of container Docker[4]

• Introduction to Container Networking[5]

• docker container network solution: calico network model[6]

• Docker: Container and Container Cloud[7]

Reference links

[1] Networking overview: https://docs.docker.com/network/

[2] Networking tutorials: https://docs.docker.com/network/network-tutorial-standalone/

[3] Docker and iptables: https://docs.docker.com/network/iptables/

[4] Detailed explanation of container Docker: https://juejin.cn/post/6844903766601236487

[5] Introduction to Container Networking: https://www.suse.com/c/rancher_blog/introduction-to-container-networking/

[6] docker container network solution: calico network model: https://cizixs.com/2017/10/19/docker-calico-network/

[7] Docker: Container and Container Cloud: https://book.douban.com/subject/26894736/