Use eBPF technology to achieve faster network packet transmission

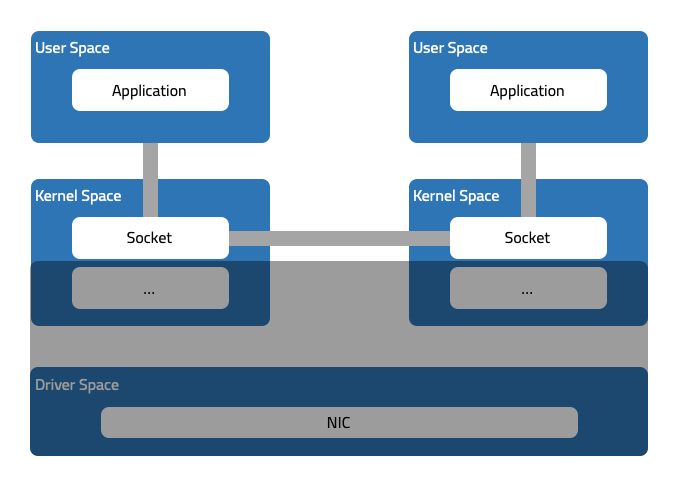

The previous article was devoted entirely to tracing the path of network packets through a Kubernetes network. It ended with a hypothesis: can two sockets in the same kernel space transfer data directly, avoiding the latency added by the kernel network protocol stack?

Whether the communication is between two containers in the same pod or between two pods on the same node, it takes place within a single kernel space, and the two peer sockets reside in the same memory. The previous article also concluded that the path a packet takes is essentially the process of addressing a socket. The question can therefore be restated: if, for communication between two sockets on the same node, we can quickly locate the peer socket (that is, find its address in memory), we can avoid the latency of protocol stack processing.

The two peer sockets are the client socket and the server socket of an established connection, and they can be associated through their IP addresses and ports. The local address and port of the client socket are the remote address and port of the server socket; the remote address and port of the client socket are the local address and port of the server socket.

Once the connection between client and server is established, if a socket can be looked up by the combination of its local address + port and remote address + port, then swapping the local and remote address + port is enough to locate the peer socket. The data can then be written directly to the peer socket (strictly speaking, to the socket's receive queue, RXQ, which is not covered here), bypassing the kernel network stack (including netfilter/iptables) and the NIC.
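
The swap described above can be sketched in a few lines of plain user-space C. This is only an illustration of the idea, not the article's eBPF code: the `conn_key` struct, its field names, and the `peer_key` and `key_equal` helpers are hypothetical names chosen for this sketch.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical 4-tuple key; the field names are illustrative only. */
struct conn_key {
    uint32_t local_ip;
    uint32_t remote_ip;
    uint16_t local_port;
    uint16_t remote_port;
};

/* Build the key that identifies the peer socket: the peer's local
 * endpoint is our remote endpoint, and vice versa. */
static inline struct conn_key peer_key(struct conn_key k)
{
    struct conn_key p = {
        .local_ip = k.remote_ip,
        .remote_ip = k.local_ip,
        .local_port = k.remote_port,
        .remote_port = k.local_port,
    };
    return p;
}

/* Field-by-field comparison of two keys. */
static inline bool key_equal(struct conn_key a, struct conn_key b)
{
    return a.local_ip == b.local_ip && a.remote_ip == b.remote_ip &&
           a.local_port == b.local_port && a.remote_port == b.remote_port;
}
```

With a client key of local port 34224 and remote port 10000 on the same address, `peer_key` yields exactly the key the server socket would have, and applying it twice returns the original key.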

How can this be achieved? As the title suggests, with eBPF.

What is eBPF?

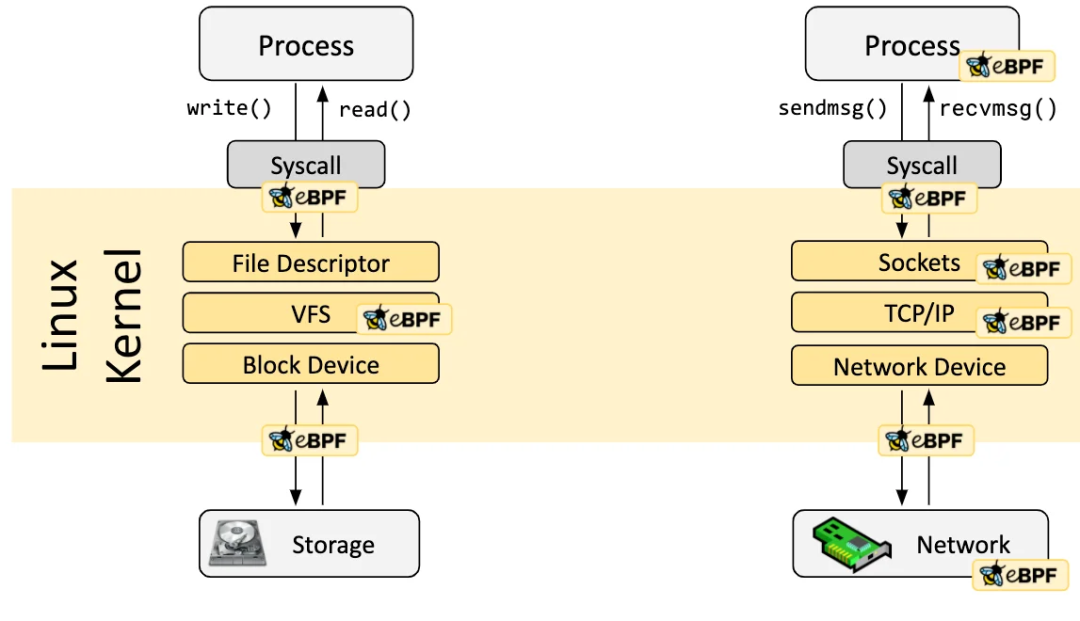

The Linux kernel has always been an ideal place to implement monitoring/observability, networking, and security features. In many cases this is not easy, however, because these tasks require modifying the kernel source code or loading kernel modules, and the result ends up layering new abstractions on top of existing ones. eBPF is a revolutionary technology that can run sandboxed programs in the kernel without modifying the kernel source code or loading kernel modules.

Making the Linux kernel programmable allows smarter, more feature-rich infrastructure software to be built on existing abstraction layers (rather than adding new ones) without increasing system complexity or sacrificing execution efficiency and security.

Application scenarios

The following is excerpted from the introduction on the eBPF.io[1] website.

On the networking side, using eBPF can speed up packet processing without leaving kernel space. Add additional protocol parsers and easily write any forwarding logic to meet changing needs.

In terms of observability, eBPF makes it possible to collect custom metrics and aggregate them in the kernel, and to generate visibility events and data structures from numerous sources without exporting samples.

In terms of tracing and profiling, attaching eBPF programs to tracepoints and to kernel and user application probe points provides powerful inspection capabilities and unique insights for troubleshooting system performance issues.

On the security side, combining visibility into all system calls with a packet-level and socket-level view of all networking makes it possible to build security systems that operate with more context and a finer level of control.

Event-driven

eBPF programs are event-driven and run when the kernel or application passes a certain hook point. Predefined hook types include system calls, function entry/exit, kernel tracepoints, network events, and more.

The Linux kernel provides a set of BPF hooks on system calls and network stacks, through which the execution of BPF programs can be triggered. The following introduces several common hooks.

- XDP: This hook in the network driver can trigger a BPF program when a network packet is received, and it is the earliest point at which this is possible. Because the packet has not yet entered the kernel network stack and expensive operations such as allocating an `sk_buff`[2] for it have not yet been performed, XDP is well suited to running filters that drop malicious or unexpected traffic, as well as other common DDoS protection mechanisms.

- Traffic Control Ingress/Egress: A BPF program attached to the traffic control (tc) ingress hook of a network interface. This hook runs before L3 of the network stack and has access to most of a packet's metadata. It can handle operations on the same node, such as applying L3/L4 endpoint policies and forwarding traffic to endpoints. A CNI typically uses a virtual Ethernet interface pair (veth) to connect a container to the host's network namespace. All traffic leaving the container can be monitored with a tc ingress hook attached to the host-side veth (it can, of course, also be attached to the container's eth0 interface). The hook can also handle operations across nodes. By attaching another BPF program to the tc egress hook as well, Cilium can monitor all traffic to and from the node and enforce policies.

The above two hooks belong to the network event type, and the following introduces the socket system calls, which are also network-related.

- Socket operations: Socket operations hooks are attached to a specific cgroup and run on socket operations. For example, a BPF socket ops program attached to cgroup/sock_ops can monitor socket state changes (using information from `bpf_sock_ops`[3]), in particular the transition to the ESTABLISHED state. When a socket becomes ESTABLISHED and the peer of the TCP socket is also on the current node (or is a local proxy), the program stores the socket's information. Alternatively, a program attached to cgroup/connect4 runs when a connection to an IPv4 address is initiated and can modify the address and port.

- Socket send: This hook runs on every send operation performed by the socket. At this point the hook can inspect the message and discard it, send the message to the kernel networking stack, or redirect the message to another socket. Here, we can use it to complete the fast addressing of the socket.

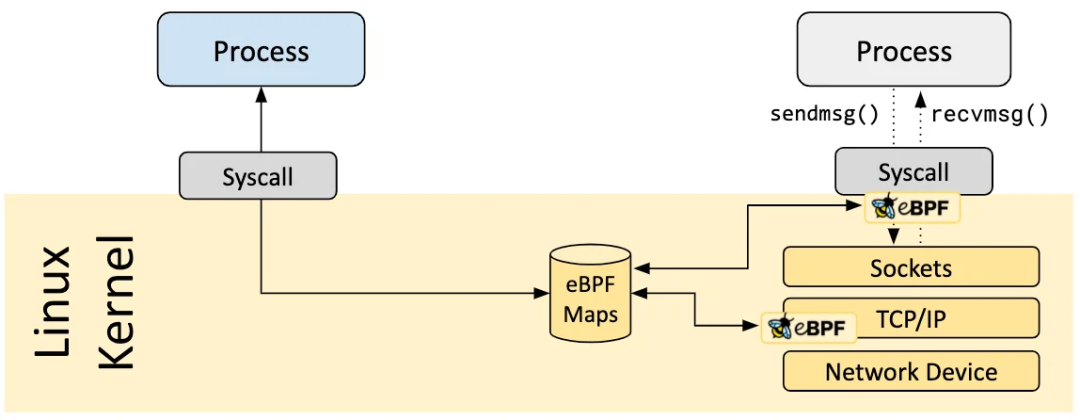

Maps

An important aspect of eBPF programs is the ability to share collected information and stored state. For this purpose, eBPF programs can utilize the concept of eBPF Map to store and retrieve data. An eBPF Map can be accessed from an eBPF program, or from an application in user space through a system call.

There are various types of maps: hash tables, arrays, LRU (least recently used) hash tables, ring buffers, stack traces, and more.

For example, the program that runs every time a socket sends a message is actually attached to a socket hash map, in which the sockets are the values of the key-value pairs.

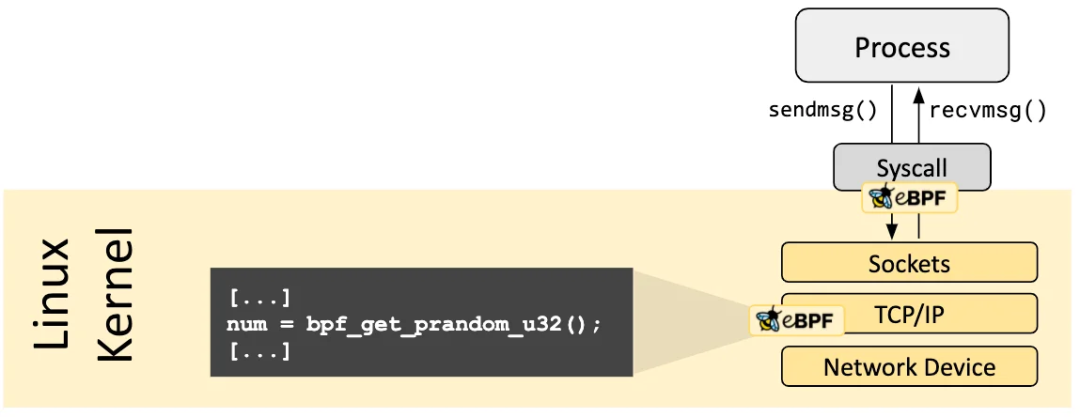

Helper functions

eBPF programs cannot call arbitrary kernel functions. Doing so would tie eBPF programs to a specific kernel version and complicate program compatibility. Instead, eBPF programs can make function calls to helper functions, which are well-known and stable APIs provided by the kernel.

These helper functions [4] provide different functionality:

- Generate random numbers

- Get the current time and date

- Access eBPF Maps

- Get process/cgroup context

- Manipulate network packets and forwarding logic

Implementation

With this background on eBPF, the general shape of the implementation should be clear. Two eBPF programs are needed: one maintains the socket map, and the other passes messages directly to the peer socket. Thanks to Idan Zach for the sample code ebpf-sockops[5]; I made a simple modification to the code[6] to make it more readable.

The original code used 16777343 to represent the address 127.0.0.1 and 4135 to represent port 10000; both are the integers obtained after conversion to network byte order.
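
To see where these two numbers come from, here is a small user-space sketch of the arithmetic. It assumes a little-endian machine such as x86-64, where a value stored in network (big-endian) byte order reads back with its bytes reversed. The function names `net16_as_le` and `ipv4_as_le` are hypothetical and exist only for this illustration.

```c
#include <stdint.h>

/* Value a little-endian CPU reads when a 16-bit number has been
 * stored in network (big-endian) byte order: the two bytes swap. */
static inline uint16_t net16_as_le(uint16_t host_value)
{
    return (uint16_t)((host_value >> 8) | (host_value << 8));
}

/* Same idea for an IPv4 address a.b.c.d: octet a is the first byte
 * in memory, so it becomes the lowest byte of the integer. */
static inline uint32_t ipv4_as_le(uint8_t a, uint8_t b, uint8_t c, uint8_t d)
{
    return (uint32_t)a | ((uint32_t)b << 8) |
           ((uint32_t)c << 16) | ((uint32_t)d << 24);
}
```

Here `net16_as_le(10000)` gives 4135 (0x2710 becomes 0x1027), and `ipv4_as_le(127, 0, 0, 1)` gives 127 + (1 << 24) = 16777343.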

Socket map maintenance: sockops

Program attached to sock_ops: it monitors the socket state. When the operation is BPF_SOCK_OPS_PASSIVE_ESTABLISHED_CB or BPF_SOCK_OPS_ACTIVE_ESTABLISHED_CB, it uses the helper function bpf_sock_hash_update[^1] to save the socket as a value in the socket map, with a key built from the socket's local address + port and remote address + port.

    __section("sockops")
    int bpf_sockmap(struct bpf_sock_ops *skops)
    {
        __u32 family, op;

        family = skops->family;
        op = skops->op;

        //printk("<<< op %d, port = %d --> %d\n", op, skops->local_port, skops->remote_port);

        switch (op) {
        // Only react when a connection has just been established,
        // on either the server (passive) or the client (active) side.
        case BPF_SOCK_OPS_PASSIVE_ESTABLISHED_CB:
        case BPF_SOCK_OPS_ACTIVE_ESTABLISHED_CB:
            if (family == AF_INET6)
                bpf_sock_ops_ipv6(skops);
            else if (family == AF_INET)
                bpf_sock_ops_ipv4(skops);
            break;
        default:
            break;
        }
        return 0;
    }

    // 127.0.0.1 in network byte order
    static const __u32 loopback_ip = 127 + (1 << 24);

    // Helper called by bpf_sockmap; in a single source file it must be
    // declared before its first use.
    static inline void bpf_sock_ops_ipv4(struct bpf_sock_ops *skops)
    {
        struct sock_key key = {};

        // Fill the key with the socket's addresses and ports.
        sk_extract4_key(skops, &key);

        // Only handle loopback connections to or from SERVER_PORT.
        if (key.dip4 == loopback_ip || key.sip4 == loopback_ip) {
            if (key.dport == bpf_htons(SERVER_PORT) || key.sport == bpf_htons(SERVER_PORT)) {
                // BPF_NOEXIST: add the entry only if the key is not already present.
                int ret = sock_hash_update(skops, &sock_ops_map, &key, BPF_NOEXIST);

                printk("<<< ipv4 op = %d, port %d --> %d\n", skops->op, key.sport, key.dport);
                if (ret != 0)
                    printk("*** FAILED %d ***\n", ret);
            }
        }
    }

Direct message delivery: sk_msg

Program attached to the socket map: it is triggered every time a message is sent. It uses the current socket's remote address + port and local address + port as the key to look up the peer socket in the map. If the lookup succeeds, the client and the server are on the same node, and the helper function bpf_msg_redirect_hash[^2] writes the data directly to the peer socket.

bpf_msg_redirect_hash is not called directly here but through a custom msg_redirect_hash, because calling the former directly would fail verification.

    __section("sk_msg")
    int bpf_redir(struct sk_msg_md *msg)
    {
        __u64 flags = BPF_F_INGRESS;
        struct sock_key key = {};

        sk_msg_extract4_key(msg, &key);

        // See whether the source or destination IP is local host
        if (key.dip4 == loopback_ip || key.sip4 == loopback_ip) {
            // See whether the source or destination port is 10000
            if (key.dport == bpf_htons(SERVER_PORT) || key.sport == bpf_htons(SERVER_PORT)) {
                //int len1 = (__u64)msg->data_end - (__u64)msg->data;
                //printk("<<< redir_proxy port %d --> %d (%d)\n", key.sport, key.dport, len1);
                msg_redirect_hash(msg, &sock_ops_map, &key, flags);
            }
        }
        return SK_PASS;
    }
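
How the two programs cooperate can be modelled in ordinary user-space C. The following is a toy sketch, not kernel code: a small linear array stands in for the kernel's socket hash map, an `int` stands in for a socket reference, and every name in it (`sock_key_model`, `sock_map_model`, `model_on_established`, `model_on_send`) is hypothetical. It assumes, as described above, that a socket is stored under its own address/port tuple and that the sender looks up the tuple with source and destination swapped.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MODEL_MAP_SIZE 8

struct sock_key_model { uint32_t sip4, dip4; uint16_t sport, dport; };

struct sock_map_model {
    struct sock_key_model keys[MODEL_MAP_SIZE];
    int socks[MODEL_MAP_SIZE];   /* stand-in for a socket reference */
    size_t used;
};

static inline bool model_key_eq(const struct sock_key_model *a,
                                const struct sock_key_model *b)
{
    return a->sip4 == b->sip4 && a->dip4 == b->dip4 &&
           a->sport == b->sport && a->dport == b->dport;
}

/* "sockops" step: remember an established socket under its own key.
 * Like an update with BPF_NOEXIST, it refuses to overwrite an entry.
 * Returns 0 on success, -1 if the key exists or the table is full. */
static inline int model_on_established(struct sock_map_model *m,
                                       struct sock_key_model key, int sock)
{
    for (size_t i = 0; i < m->used; i++)
        if (model_key_eq(&m->keys[i], &key))
            return -1;
    if (m->used == MODEL_MAP_SIZE)
        return -1;
    m->keys[m->used] = key;
    m->socks[m->used] = sock;
    m->used++;
    return 0;
}

/* "sk_msg" step: swap source and destination to form the peer's key.
 * Returns the peer socket, or -1 when there is no local peer and the
 * message would take the normal network stack path. */
static inline int model_on_send(const struct sock_map_model *m,
                                struct sock_key_model own)
{
    struct sock_key_model peer = {
        .sip4 = own.dip4, .dip4 = own.sip4,
        .sport = own.dport, .dport = own.sport,
    };
    for (size_t i = 0; i < m->used; i++)
        if (model_key_eq(&m->keys[i], &peer))
            return m->socks[i];
    return -1;
}
```

After both ends of a loopback connection have been registered, a send from the client resolves to the server's entry and a send from the server resolves to the client's entry; before registration the lookup fails and nothing is redirected.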

Test

Environment

- Ubuntu 20.04

- Kernel 5.15.0-1034

Install dependencies.

    sudo apt update && sudo apt install make clang llvm gcc-multilib linux-tools-$(uname -r) linux-cloud-tools-$(uname -r) linux-tools-generic

Clone the code.

    git clone https://github.com/addozhang/ebpf-sockops
    cd ebpf-sockops

Compile and load a BPF program.

    sudo ./load.sh

Install iperf3.

    sudo apt install iperf3

Start the iperf3 server.

    iperf3 -s -p 10000

Run the iperf3 client.

    iperf3 -c 127.0.0.1 -t 10 -l 64k -p 10000

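Run the trace.sh script to view the printed logs. There are four log lines because two connections are created, and each connection is logged once from the client side (op 4, BPF_SOCK_OPS_ACTIVE_ESTABLISHED_CB) and once from the server side (op 5, BPF_SOCK_OPS_PASSIVE_ESTABLISHED_CB). The ports are printed in network byte order, so 4135 is port 10000.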

    ./trace.sh
    iperf3-7744 [001] d...1 838.985683: bpf_trace_printk: <<< ipv4 op = 4, port 45189 --> 4135
    iperf3-7744 [001] d.s11 838.985733: bpf_trace_printk: <<< ipv4 op = 5, port 4135 --> 45189
    iperf3-7744 [001] d...1 838.986033: bpf_trace_printk: <<< ipv4 op = 4, port 45701 --> 4135
    iperf3-7744 [001] d.s11 838.986078: bpf_trace_printk: <<< ipv4 op = 5, port 4135 --> 45701
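
The port numbers in these log lines can be turned back into the familiar host-order values with a short user-space helper. This is a sketch under two assumptions: the trace was recorded on a little-endian machine, and the program printed the ports as raw network-byte-order integers (which matches 4135 standing for port 10000). The names `swap16` and `decode_trace_line` are hypothetical.

```c
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Swap the two bytes of a 16-bit value. */
static inline uint16_t swap16(uint16_t v)
{
    return (uint16_t)((v >> 8) | (v << 8));
}

/* Parse a line such as
 *   "... <<< ipv4 op = 4, port 45189 --> 4135"
 * and return the op code and both ports in host byte order.
 * Returns 0 on success, -1 if the line does not match. */
static inline int decode_trace_line(const char *line, int *op,
                                    unsigned *sport, unsigned *dport)
{
    const char *p = strstr(line, "<<< ipv4 op = ");
    unsigned raw_s, raw_d;

    if (p == NULL)
        return -1;
    if (sscanf(p, "<<< ipv4 op = %d, port %u --> %u", op, &raw_s, &raw_d) != 3)
        return -1;
    *sport = swap16((uint16_t)raw_s);
    *dport = swap16((uint16_t)raw_d);
    return 0;
}
```

Applied to the first log line, this yields op 4 with source port 34224 and destination port 10000, which is the client port that shows up in the tcpdump capture.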

To confirm that the kernel network stack is skipped, capture packets with tcpdump. The capture contains only the connection handshake and teardown traffic; all messages sent in between bypass the kernel network stack entirely.

    sudo tcpdump -i lo port 10000 -vvv
    tcpdump: listening on lo, link-type EN10MB (Ethernet), capture size 262144 bytes
    13:23:31.761317 IP (tos 0x0, ttl 64, id 50214, offset 0, flags [DF], proto TCP (6), length 60)
        localhost.34224 > localhost.webmin: Flags [S], cksum 0xfe30 (incorrect -> 0x5ca1), seq 2753408235, win 65495, options [mss 65495,sackOK,TS val 166914980 ecr 0,nop,wscale 7], length 0
    13:23:31.761333 IP (tos 0x0, ttl 64, id 0, offset 0, flags [DF], proto TCP (6), length 60)
        localhost.webmin > localhost.34224: Flags [S.], cksum 0xfe30 (incorrect -> 0x169a), seq 3960628312, ack 2753408236, win 65483, options [mss 65495,sackOK,TS val 166914980 ecr 166914980,nop,wscale 7], length 0
    13:23:31.761385 IP (tos 0x0, ttl 64, id 50215, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.34224 > localhost.webmin: Flags [.], cksum 0xfe28 (incorrect -> 0x3d56), seq 1, ack 1, win 512, options [nop,nop,TS val 166914980 ecr 166914980], length 0
    13:23:31.761678 IP (tos 0x0, ttl 64, id 59057, offset 0, flags [DF], proto TCP (6), length 60)
        localhost.34226 > localhost.webmin: Flags [S], cksum 0xfe30 (incorrect -> 0x4eb8), seq 3068504073, win 65495, options [mss 65495,sackOK,TS val 166914981 ecr 0,nop,wscale 7], length 0
    13:23:31.761689 IP (tos 0x0, ttl 64, id 0, offset 0, flags [DF], proto TCP (6), length 60)
        localhost.webmin > localhost.34226: Flags [S.], cksum 0xfe30 (incorrect -> 0x195d), seq 874449823, ack 3068504074, win 65483, options [mss 65495,sackOK,TS val 166914981 ecr 166914981,nop,wscale 7], length 0
    13:23:31.761734 IP (tos 0x0, ttl 64, id 59058, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.34226 > localhost.webmin: Flags [.], cksum 0xfe28 (incorrect -> 0x4019), seq 1, ack 1, win 512, options [nop,nop,TS val 166914981 ecr 166914981], length 0
    13:23:41.762819 IP (tos 0x0, ttl 64, id 43056, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.webmin > localhost.34226: Flags [F.], cksum 0xfe28 (incorrect -> 0x1907), seq 1, ack 1, win 512, options [nop,nop,TS val 166924982 ecr 166914981], length 0
    13:23:41.763334 IP (tos 0x0, ttl 64, id 59059, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.34226 > localhost.webmin: Flags [F.], cksum 0xfe28 (incorrect -> 0xf1f4), seq 1, ack 2, win 512, options [nop,nop,TS val 166924982 ecr 166924982], length 0
    13:23:41.763348 IP (tos 0x0, ttl 64, id 43057, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.webmin > localhost.34226: Flags [.], cksum 0xfe28 (incorrect -> 0xf1f4), seq 2, ack 2, win 512, options [nop,nop,TS val 166924982 ecr 166924982], length 0
    13:23:41.763588 IP (tos 0x0, ttl 64, id 50216, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.34224 > localhost.webmin: Flags [F.], cksum 0xfe28 (incorrect -> 0x1643), seq 1, ack 1, win 512, options [nop,nop,TS val 166924982 ecr 166914980], length 0
    13:23:41.763940 IP (tos 0x0, ttl 64, id 14090, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.webmin > localhost.34224: Flags [F.], cksum 0xfe28 (incorrect -> 0xef2e), seq 1, ack 2, win 512, options [nop,nop,TS val 166924983 ecr 166924982], length 0
    13:23:41.763952 IP (tos 0x0, ttl 64, id 50217, offset 0, flags [DF], proto TCP (6), length 52)
        localhost.34224 > localhost.webmin: Flags [.], cksum 0xfe28 (incorrect -> 0xef2d), seq 2, ack 2, win 512, options [nop,nop,TS val 166924983 ecr 166924983], length 0

Summary

By introducing eBPF, we shortened the datapath for communication on the same node: packets skip the kernel network stack, and the two peer sockets are connected directly.

This design suits communication between two applications in the same pod and between two pods on the same node.

[^1]: This helper function adds the referenced socket to a sockhash map or updates an existing entry; the bpf_sock_ops context passed to the program is used as the value of the key-value pair. For details, refer to bpf_sock_hash_update in https://man7.org/linux/man-pages/man7/bpf-helpers.7.html.

[^2]: This helper function redirects msg to the socket that the key refers to in the socket map.

References

[1] eBPF.io: https://ebpf.io

[2] sk_buff: https://atbug.com/tracing-network-packets-in-kubernetes/#sk_buff

[3] bpf_sock_ops: https://elixir.bootlin.com/linux/latest/source/include/uapi/linux/bpf.h#L6377

[4] Helper functions: https://man7.org/linux/man-pages/man7/bpf-helpers.7.html

[5] Idan Zach's sample code ebpf-sockops: https://github.com/zachidan/ebpf-sockops

[6] Simple modification: https://github.com/zachidan/ebpf-sockops/pull/3/commits/be09ac4fffa64f4a74afa630ba608fd09c10fe2a