I finally understand service rate limiting.

Preface

As microservices and distributed systems have developed, the calls between services have become increasingly complex. To stay stable and highly available, a service must apply some form of rate limiting when incoming requests exceed its processing capacity. The idea is the same as at scenic spots during the May Day and National Day holidays: when a site is full, the flow of visitors is restricted. Our services need the same protection in high-concurrency, high-traffic scenarios such as flash sales, big promotions (618, Double Eleven), possible malicious attacks, and crawlers.

Service rate limiting means intercepting requests that exceed a service's processing capacity and restricting the traffic that reaches it. The rest of this article looks at how that is done.

Two rate limiting methods

Common rate limiting methods fall into two categories: request-based rate limiting and resource-based rate limiting.

- Request-based rate limiting

Request-based rate limiting approaches the problem from the perspective of external requests. There are two common methods.

The first is to limit the total amount, that is, to cap the cumulative value of some indicator. A common case is capping the total number of users the system serves. For example, a live broadcast room caps its audience at 1 million users, and users beyond that cannot enter; or a snap-up event with only 100 products caps participation at 10,000 users, and every user after the first 10,000 is rejected outright.

The second is to limit the amount per unit of time, that is, to cap an indicator within a period of time. For example, only 10,000 users may access the system within 1 minute, or the peak request rate is capped at 100,000 per second.

Advantages:

- Easy to implement

Disadvantages:

- The main difficulty in practice is finding a suitable threshold. For example, the limit is set to 10,000 users per minute, but the system is already overwhelmed at 6,000 users; or the system is under little pressure at 10,000 users per minute, yet it has already started discarding requests. Hardware must also be considered: a 32-core machine and a 64-core machine have very different processing capacity, so they need different thresholds.

Applicable scenarios:

- Systems with relatively simple business functions, such as load balancing systems, gateway systems, and snap-up systems.

- Resource-based rate limiting

Request-based rate limiting looks at the system from the outside, while resource-based rate limiting looks at it from the inside: find the key internal resources that affect performance and limit their use. Common internal resources include connections, file handles, threads, and request queues. A limit can also be tied to resource utilization, for example rejecting new requests when CPU usage exceeds 80%.

Advantages:

- Reflects the actual pressure on the system, so the limit is better targeted

Disadvantages:

- It is difficult to identify the key resources.

- It is difficult to determine the threshold for a key resource; it has to be tuned gradually in production under continuous observation until an appropriate value is found.

Applicable scenarios:

- A specific service, such as an order system or a commodity system.
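
As a minimal sketch of resource-based limiting, the snippet below caps the number of requests processed at the same time, treating in-flight request slots as the key resource. The names `ConcurrencyLimiter` and `handle` and the limit of 2 are illustrative assumptions, not part of any particular framework.

```python
import threading


class ConcurrencyLimiter:
    """Cap the number of requests being processed at the same time."""

    def __init__(self, max_in_flight: int) -> None:
        # Each permit represents one unit of the protected resource
        # (a worker thread, a connection, a queue slot, ...).
        self._slots = threading.BoundedSemaphore(max_in_flight)

    def try_acquire(self) -> bool:
        # Non-blocking: return False immediately when no slot is free.
        return self._slots.acquire(blocking=False)

    def release(self) -> None:
        self._slots.release()


def handle(limiter: ConcurrencyLimiter, work):
    """Run `work` only if a slot is free; otherwise reject the request."""
    if not limiter.try_acquire():
        return "rejected"
    try:
        return work()
    finally:
        limiter.release()


conc = ConcurrencyLimiter(max_in_flight=2)
first = conc.try_acquire()
second = conc.try_acquire()
third = conc.try_acquire()   # no slot left
conc.release()
fourth = conc.try_acquire()  # a slot was freed
print(first, second, third, fourth)  # True True False True
```

The same pattern applies to other internal resources; only the meaning of a "slot" changes. Choosing `max_in_flight` is exactly the threshold problem described above.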

Four rate limiting algorithms

There are four common rate limiting algorithms. They differ in implementation principle and in their advantages and disadvantages, so the choice should follow the business scenario.

- Fixed time window

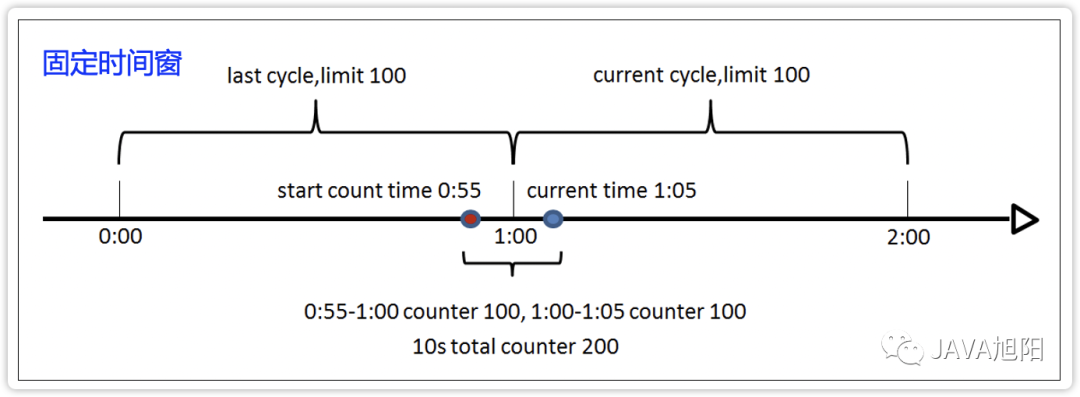

The fixed time window algorithm counts the number of requests, or the amount of resource consumed, within a fixed time period, and triggers rate limiting once the count exceeds the limit, as shown in the figure above.

Advantages:

- Easy to implement

Disadvantages:

- There is a critical point problem at window boundaries. For example, the red and blue dots in the figure above are only 10 seconds apart, yet 200 requests arrive in that interval, which exceeds the limit the algorithm is meant to enforce (100 requests per minute). Because these requests fall into two different statistical windows, neither window exceeds its limit, so rate limiting is not triggered and the system may fail under the excess pressure.
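
The fixed window and its boundary problem can be reproduced in a few lines. This is only a sketch: the class name `FixedWindowLimiter` is hypothetical, and the caller passes the current time in seconds explicitly so that the example is deterministic.

```python
class FixedWindowLimiter:
    """Allow at most `limit` requests in each fixed window of `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.window_index = None  # which window the counter belongs to
        self.count = 0

    def allow(self, now: float) -> bool:
        index = int(now // self.window_seconds)
        if index != self.window_index:
            # A new window has started: reset the counter.
            self.window_index = index
            self.count = 0
        if self.count >= self.limit:
            return False
        self.count += 1
        return True


fixed = FixedWindowLimiter(limit=100, window_seconds=60)
# 100 requests at t=55s (end of the first window),
# then 100 more at t=65s (start of the second window).
late_burst = sum(fixed.allow(55.0) for _ in range(100))
early_burst = sum(fixed.allow(65.0) for _ in range(100))
print(late_burst + early_burst)  # 200 requests accepted within 10 seconds
```

Each window stays within its limit of 100, yet 200 requests pass in a 10-second span, which is the situation described above.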

- Sliding time window

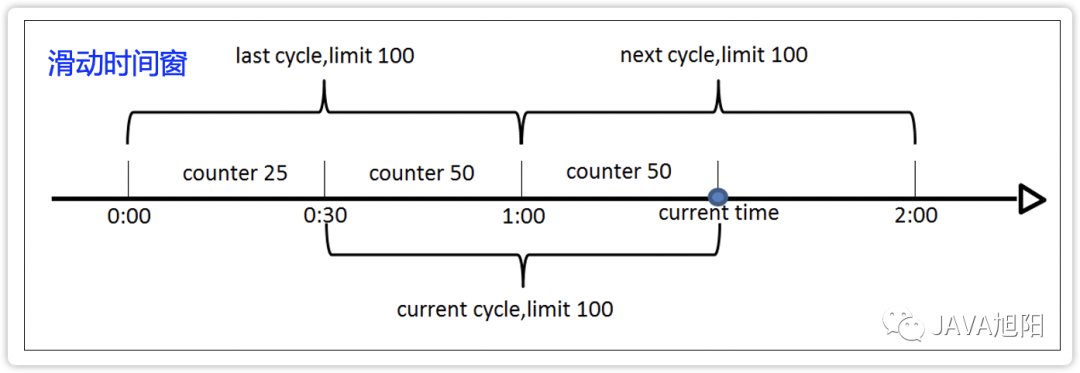

The sliding time window algorithm was introduced to solve the critical point problem of the fixed window. Its statistical periods partially overlap, so two moments that are close together can no longer fall into entirely separate windows, as shown in the figure above.

Advantages:

- No critical point problem

Disadvantages:

- More complex to implement than a fixed window
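
One way to realize a sliding window is to keep a log of the timestamps of accepted requests and count only those inside the last `window_seconds`. This is a sketch of that "sliding log" variant, not the only possible implementation; the class name `SlidingWindowLimiter` is hypothetical, and time is again passed in explicitly.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests in any span of `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.timestamps = deque()  # times of accepted requests, oldest first

    def allow(self, now: float) -> bool:
        # Drop timestamps that have slid out of the window (now - window, now].
        while self.timestamps and self.timestamps[0] <= now - self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True


sliding = SlidingWindowLimiter(limit=100, window_seconds=60)
late_burst = sum(sliding.allow(55.0) for _ in range(100))
early_burst = sum(sliding.allow(65.0) for _ in range(100))
print(late_burst, early_burst)  # 100 0
```

With the same traffic as the critical point example (100 requests at 55 s, 100 more at 65 s), the second burst is rejected because the first 100 requests are still inside the window. The cost is the extra memory and bookkeeping for the timestamp log.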

- Leaky bucket algorithm

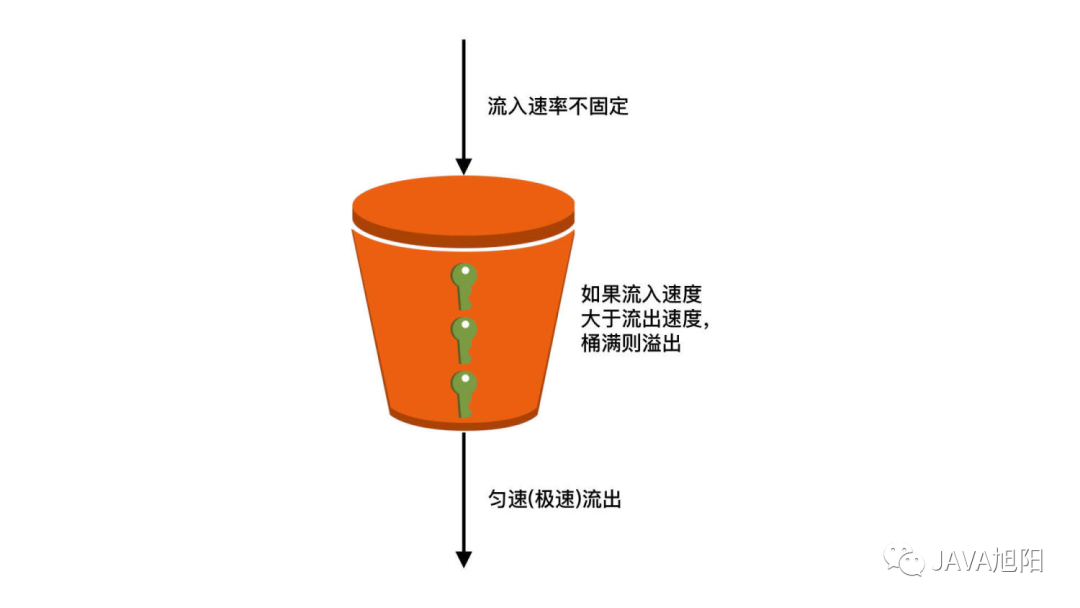

The leaky bucket algorithm puts requests into a "bucket" (a message queue, for example). The business processing unit (a thread, process, application, and so on) takes requests out of the bucket to process them, and new requests are discarded when the bucket is full, as shown in the figure above.

Advantages:

- Fewer requests are discarded during a large traffic burst, because the bucket itself buffers requests.

Disadvantages:

- It smooths traffic, but it does not solve the traffic surge itself: once the bucket is full, further requests are still discarded.

- The bucket size is hard to adjust dynamically; finding the size that best fits the business takes repeated trial and error.

- The outflow rate, that is, the business processing speed, cannot be controlled precisely.

The leaky bucket algorithm is mainly suited to scenarios with instantaneous high-concurrency traffic, such as midnight check-ins and on-the-hour flash sales. When a large number of requests flood in within a few minutes, it is better for the business and for user experience to process requests more slowly than to discard them.
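
The queue-based leaky bucket described above can be sketched as a bounded FIFO queue. The names `LeakyBucket`, `offer`, and `drain` are hypothetical; in a real system the bucket would typically be a message queue and `drain` would be the consumer.

```python
from collections import deque


class LeakyBucket:
    """Bounded FIFO buffer: requests wait in the bucket until they are processed."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue = deque()

    def offer(self, request) -> bool:
        """Try to put a request into the bucket; discard it if the bucket is full."""
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(request)
        return True

    def drain(self, max_items: int) -> list:
        """Called by the processing unit: take up to `max_items` requests in order."""
        batch = []
        while self.queue and len(batch) < max_items:
            batch.append(self.queue.popleft())
        return batch


bucket = LeakyBucket(capacity=5)
accepted = [bucket.offer(f"req-{i}") for i in range(8)]
print(accepted.count(True), accepted.count(False))  # 5 3
first_batch = bucket.drain(max_items=2)
print(first_batch)  # ['req-0', 'req-1']
```

A burst of 8 requests loses only the 3 that do not fit; the other 5 are processed later at whatever pace the consumer calls `drain`. That pace is the outflow rate, and the bucket itself does not control it.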

- Token bucket algorithm

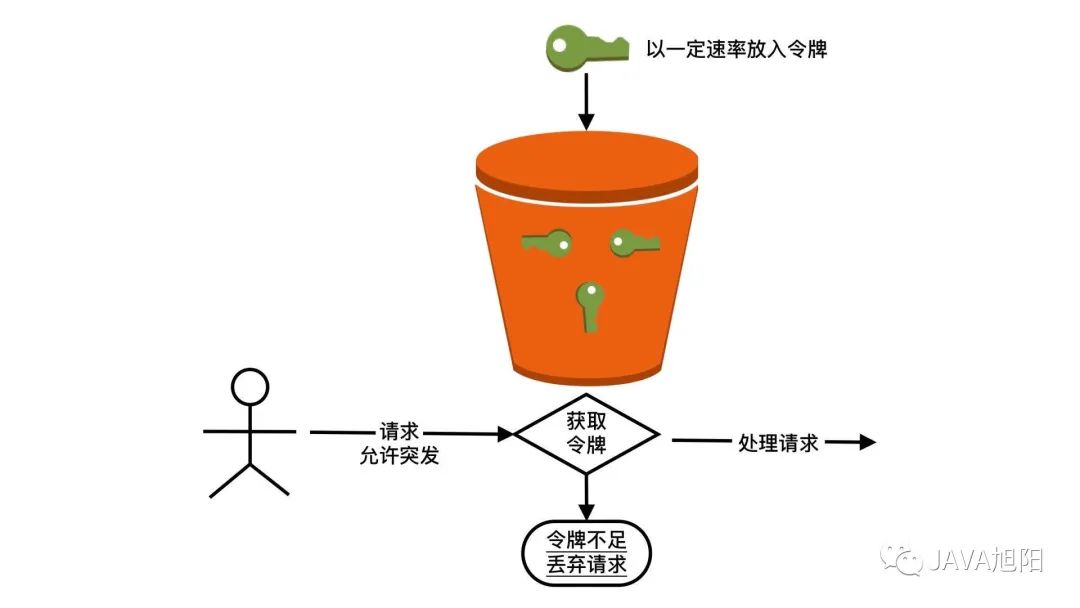

The token bucket algorithm differs from the leaky bucket algorithm in that the bucket holds "tokens" rather than requests. A token is the "license" a request must obtain before business processing. When the system receives a request, it first tries to take a token from the bucket; if it gets one, processing continues, and if not, the request is discarded.

Its implementation principle is shown in the figure above.

Advantages:

- The processing rate can be adjusted dynamically by controlling the rate at which tokens are added, which gives more flexibility.

- It limits traffic smoothly while still tolerating bursts, because a certain number of tokens can accumulate in the bucket. When a burst arrives, the accumulated tokens allow the business processing rate to temporarily exceed the rate at which tokens are added.

Disadvantages:

- Many requests may be dropped during a large traffic burst, because the bucket can only accumulate a limited number of tokens.

- The implementation is relatively complex.

The token bucket algorithm is mainly applicable to two typical scenarios. One is controlling the rate at which third-party services are called, so that the downstream is not overwhelmed; for example, Alipay needs to control the rate at which it calls bank interfaces. The other is controlling the service's own processing rate to prevent overload; for example, if stress tests show that the system's maximum TPS is 100, a token bucket can be used to cap the processing rate at that value.
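
A minimal token bucket sketch follows. It refills lazily: instead of a background thread adding tokens, each call computes how many tokens accumulated since the previous call. The class name `TokenBucket`, the starting-full bucket, and the capacity of 50 are assumptions for illustration; the rate of 100 per second follows the TPS example above.

```python
class TokenBucket:
    """Tokens are added at `rate` per second, up to `capacity`; a request costs one."""

    def __init__(self, rate: float, capacity: float, now: float = 0.0) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # assumption: the bucket starts full
        self.last_refill = now

    def allow(self, now: float) -> bool:
        # Lazily add the tokens that accumulated since the last call.
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


tokens = TokenBucket(rate=100, capacity=50)
burst = sum(tokens.allow(0.0) for _ in range(80))    # burst served from saved tokens
later = sum(tokens.allow(0.25) for _ in range(80))   # 0.25 s later: 25 new tokens
print(burst, later)  # 50 25
```

The first burst is served from the 50 accumulated tokens and the remaining 30 requests are dropped, which shows both the burst tolerance and its limit. Changing `rate` at runtime changes the sustained processing rate without touching the rest of the logic.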

Five rate limiting strategies

- Request rejection

When request traffic reaches the rate limiting threshold, excess requests are rejected outright.

Rejection can also be targeted at particular sources, such as specified domain names, IPs, clients, applications, or users.

- Delayed processing

Excess requests are added to a cache queue or delay queue to absorb a short-term traffic surge, and the backlog is processed gradually after the peak has passed.

- Request classification (priority)

Assign priorities to requests from different sources and process higher-priority requests first, for example VIP customers or important business applications (a transaction service has higher priority than a log service).
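
Request classification can be sketched with a priority queue. The class name `PriorityRequestQueue`, the priority numbers, and the request labels below are hypothetical; a lower number means higher priority.

```python
import heapq
import itertools


class PriorityRequestQueue:
    """Serve pending requests by priority; ties are served in arrival order."""

    def __init__(self) -> None:
        self._heap = []
        self._seq = itertools.count()  # tie-breaker that preserves arrival order

    def push(self, priority: int, request: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), request))

    def pop(self) -> str:
        _, _, request = heapq.heappop(self._heap)
        return request

    def __len__(self) -> int:
        return len(self._heap)


pending = PriorityRequestQueue()
pending.push(2, "log-write")
pending.push(0, "vip-order")
pending.push(1, "order")
pending.push(0, "vip-payment")
served = [pending.pop() for _ in range(len(pending))]
print(served)  # ['vip-order', 'vip-payment', 'order', 'log-write']
```

When capacity is short, the processing unit serves from the front of this queue, so low-priority work such as logging waits or is dropped first.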

- Dynamic rate limiting

Monitor relevant system metrics to evaluate system pressure, and adjust the rate limiting threshold dynamically through the registration center and configuration center.
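
The adjustment step might look like the sketch below. The function `adjust_limit`, the 80% and 50% CPU thresholds, and the step sizes are all assumptions chosen for illustration; how the metric is collected and how the new threshold is pushed out through a configuration center is outside the sketch.

```python
def adjust_limit(
    current_limit: int,
    cpu_usage: float,
    high: float = 0.8,
    low: float = 0.5,
    min_limit: int = 10,
    max_limit: int = 1000,
) -> int:
    """Return the next rate limiting threshold given the observed CPU usage (0.0 to 1.0)."""
    if cpu_usage > high:
        # Under pressure: tighten the limit quickly (cut by 20%).
        new_limit = current_limit * 4 // 5
    elif cpu_usage < low:
        # Plenty of headroom: relax the limit slowly.
        new_limit = current_limit + 10
    else:
        new_limit = current_limit
    # Never leave the configured bounds.
    return max(min_limit, min(max_limit, new_limit))


print(adjust_limit(100, 0.9))  # 80
print(adjust_limit(100, 0.3))  # 110
print(adjust_limit(100, 0.6))  # 100
```

Tightening faster than relaxing is a deliberate choice in this sketch: it favors protecting the system over recovering throughput quickly.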

- Monitoring, early warning, and dynamic scaling

With a good service monitoring system and an automated deployment and release system, the monitoring system can track how the system is running and send early warnings by email or text message when service pressure rises suddenly or a large amount of traffic arrives in a short time.

When specific conditions are met, the related services can be deployed and released automatically to scale out dynamically.

Three rate limiting positions

- Access layer rate limiting

Domain names or IPs can be restricted through Nginx, an API routing gateway, and so on, and illegitimate requests can be intercepted at the same point.

- Application layer rate limiting

Each service can apply its own single-node or cluster-wide rate limiting, or use a third-party rate limiting component such as Alibaba's Sentinel.

- Basic service rate limiting

Rate limiting can also be applied at the basic service layer.

- Database: limit the number of connections and the read/write rate

- Message queue: limit the consumption rate (consumption volume, consumer threads)

Summary

From a macro perspective, this article has summarized two rate limiting methods, three positions where rate limiting can be applied, four common rate limiting algorithms, and five rate limiting strategies. One final point: a reasonable rate limiting configuration requires an understanding of the system's throughput, so rate limiting generally needs to be combined with capacity planning and stress testing. When external requests approach or reach the system's maximum threshold, rate limiting is triggered, and other measures such as service degradation are taken to protect the system from being overwhelmed.