Vivo Version Release Platform: Practice of Intelligent Bandwidth Control and Optimization-Platform Product Series 03


1. Background

With the rise of the Internet, many companies went through a period of unchecked, explosive growth. As the Internet market has matured and stabilized, however, business growth at major enterprises has slowed, and they have begun to "dig into internal costs", controlling costs in a more refined way to stay competitive.

Especially with the arrival of the "Internet winter", the chill has spread to every industry, and "cost reduction and efficiency improvement" has come back into focus. Some companies cut spending by scrutinizing every cost and detail; some carry out large-scale layoffs across the board, shutting down businesses and reducing headcount; and some form cross-functional teams that take a top-down view and focus on core costs, cutting costs without disrupting operations.

In this article, drawing on the author's work optimizing CDN bandwidth utilization, we share our ideas and practical methods for reducing costs. Cost reduction and efficiency improvement is an ongoing direction for innovation that is not limited to any one department; any link in the enterprise value chain can become a breakthrough point. As technology developers, we should "start from the business we are responsible for": find where it touches the company's costs, then dig in from a technical angle, make technical breakthroughs, reduce costs, improve efficiency, and bring real value to the company.

Through this article, you can:

1) Broaden your thinking: see possible directions for "cost reduction and efficiency improvement" and find lightweight but high-value goals.

2) Quickly understand the core method behind our CDN bandwidth utilization optimization.

Glossary:

[CDN] Content Delivery Network.

In this article, CDN refers specifically to static CDN. Dynamic CDN mainly accelerates routing between nodes, and its bandwidth cost is relatively small. In plain terms: CDN vendors deploy many nodes in many locations to cache your resources. When a user downloads a resource, the CDN directs the user to a nearby node, which greatly improves download speed.

[CDN Bandwidth Utilization]

Bandwidth utilization = actual bandwidth / billed bandwidth, which is similar in nature to an input-output ratio. Why improve it? An analogy: you would never willingly pay 100 million for something worth 10 million, yet a utilization rate of only 10% amounts to exactly that.

[Yugong Platform] A platform that greatly improves a company's overall CDN bandwidth utilization through bandwidth prediction combined with peak shaving and valley filling.

2. Searching high and low for cost reductions

As a heavy CDN user facing exponential growth in CDN bandwidth cost, the author had the privilege of witnessing and taking part in every stage of our CDN cost reduction, from early optimization of each business's own CDN bandwidth utilization to the later idea of regulating controllable bandwidth. In this chapter, we walk through the key stages of our CDN cost reduction and explain why I chose CDN bandwidth utilization as my research direction.

2.1 CDN bandwidth billing mode

First, consider our billing model, average daily peak billing: take the highest bandwidth value of each day, then average those daily peaks over the month to obtain the billed bandwidth.

Monthly billed bandwidth = Avg(daily peak)

Under this model, the lower the daily peaks, the lower the cost, so bandwidth utilization directly determines cost. That is why we have never stopped pursuing higher utilization. Let's look at our experience optimizing it.
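To make the billing model concrete, here is a minimal Java sketch that computes the billed bandwidth as the average of daily peaks and the utilization as actual bandwidth divided by billed bandwidth. It assumes that "actual bandwidth" means the mean of all samples in the month and that samples are grouped per day; the class and method names are hypothetical, and the numbers in `main` are illustrative only.

```java
import java.util.Arrays;

/** Hypothetical helper illustrating average-daily-peak billing; not a vendor API. */
final class PeakAverageBilling {

    /** Billed bandwidth = average over all days of each day's peak sample. */
    static double monthlyBilling(double[][] dailySamples) {
        return Arrays.stream(dailySamples)
                .mapToDouble(day -> Arrays.stream(day).max().orElse(0.0))
                .average()
                .orElse(0.0);
    }

    /** Assumption: "actual bandwidth" is the mean of every sample in the month. */
    static double utilization(double[][] dailySamples) {
        double actual = Arrays.stream(dailySamples)
                .flatMapToDouble(Arrays::stream)
                .average()
                .orElse(0.0);
        double billed = monthlyBilling(dailySamples);
        return billed == 0.0 ? 0.0 : actual / billed;
    }

    public static void main(String[] args) {
        // Two days of illustrative samples in Gbps.
        double[][] samples = { {2, 4, 10, 4}, {3, 5, 6, 2} };
        System.out.println(monthlyBilling(samples)); // (10 + 6) / 2 = 8.0
        System.out.println(utilization(samples));    // 4.5 / 8.0 = 0.5625
    }
}
```

The single 10 Gbps spike on day one is what drags utilization down: shaving it toward the day's other values lowers the billed figure while the average usage stays the same.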

2.2 CDN Cost Reduction Trilogy

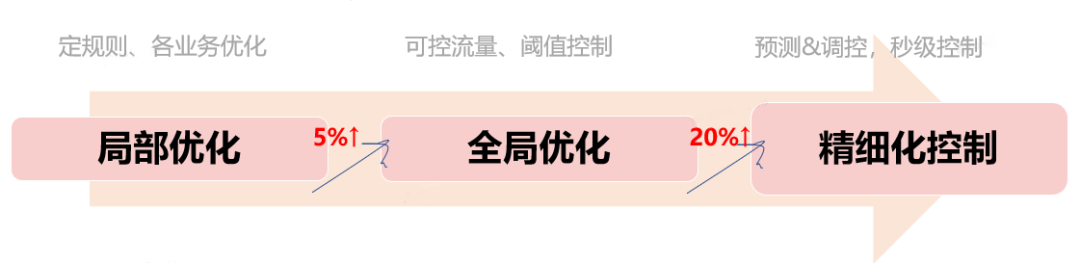

Our CDN cost reduction work falls into three stages. Each stage worked well in its own way, delivered good results under the circumstances of the time, and was a reasonable optimization direction given the situation back then.

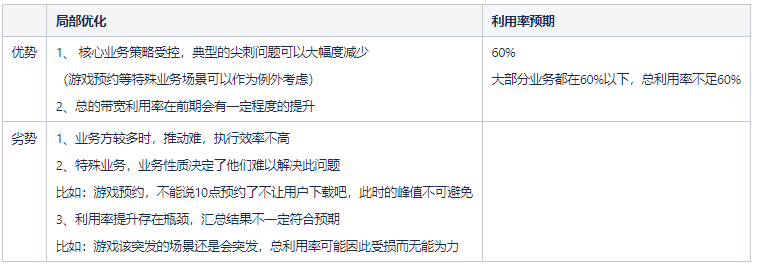

2.2.1 Local optimization

Local optimization follows from how our company mainly uses CDN. As a mobile phone manufacturer, our core static CDN scenario is application distribution, such as app distribution in the app store, app distribution in the game center, and version self-upgrade distribution. In total, six core businesses account for more than 90% of the company's bandwidth, so raising the bandwidth utilization of these six businesses significantly raises overall utilization.

In this situation, the CDN operations team identifies the core businesses and sets a bandwidth utilization target for each, so that each business raises its own utilization. In short, the core idea is: find the core CDN users, set utilization targets for them, file requirement tasks, and clearly explain the value of the optimization. After this stage, the bandwidth burst scenarios of our core businesses were closed off, and overall bandwidth utilization improved significantly.

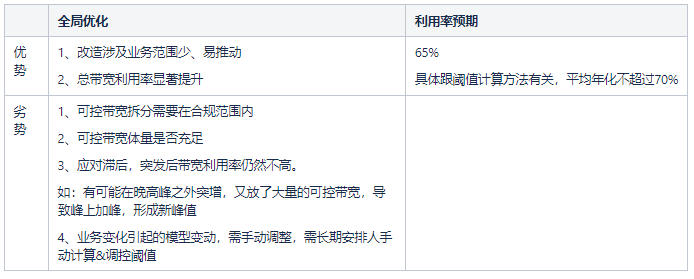

2.2.2 Global optimization

Because local optimization targets individual services, once most of the spikes are fixed, each service's utilization optimization hits a bottleneck. Even for the best-performing services, the utilization of a single service rarely exceeds 60%, unless it has a special business form with fully controlled bandwidth. At this point, we set aside part of our businesses' bandwidth and use it to regulate the company's overall bandwidth, further improving overall utilization. We call this the global optimization stage.

Under this global approach, as long as no business produces a large spike, overall bandwidth utilization improves greatly. First, we partition bandwidth: the portion the server can control is split out as controllable bandwidth, mainly WLAN downloads permitted under Ministry of Industry and Information Technology (MIIT) rules. Next, we observe the bandwidth trend and, based on its history, break bandwidth down by time period, releasing different amounts of traffic in different periods to achieve "peak shaving and valley filling". This strategy controls the release rate through thresholds: a threshold is set for each hour, and the token bucket generates tokens every second at a rate derived from that hour's threshold, which controls how much traffic is released.
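The hourly-threshold token bucket described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' SDK: thresholds are taken to be MB/s of controllable bandwidth for each hour of the day, the bucket holds at most one second of tokens, and the caller passes the clock so the logic is easy to test. `HourlyTokenBucket` and its methods are hypothetical names.

```java
/**
 * Minimal token bucket for hour-based peak shaving (hypothetical sketch).
 * Assumptions: 24 thresholds in MB/s, capacity of one second of traffic,
 * caller-supplied clock in epoch milliseconds (hour computed in UTC).
 */
final class HourlyTokenBucket {
    private final double[] hourlyThresholdMBps; // index 0..23
    private double tokens;                      // MB currently available
    private long lastRefillMillis;

    HourlyTokenBucket(double[] hourlyThresholdMBps, long nowMillis) {
        if (hourlyThresholdMBps.length != 24) {
            throw new IllegalArgumentException("need 24 hourly thresholds");
        }
        this.hourlyThresholdMBps = hourlyThresholdMBps.clone();
        this.lastRefillMillis = nowMillis;
    }

    /** Token rate for the hour containing `millis` (UTC; shift for local time). */
    private double rateAt(long millis) {
        int hour = (int) Math.floorMod(millis / 3_600_000L, 24L);
        return hourlyThresholdMBps[hour];
    }

    /** Refills the bucket, then takes `mb` tokens if enough are available. */
    synchronized boolean tryAcquire(double mb, long nowMillis) {
        double rate = rateAt(nowMillis);
        double elapsedSec = Math.max(0L, nowMillis - lastRefillMillis) / 1000.0;
        // Capacity is one second of traffic, so idle time cannot build up a large burst.
        tokens = Math.min(rate, tokens + elapsedSec * rate);
        lastRefillMillis = nowMillis;
        if (tokens >= mb) {
            tokens -= mb;
            return true;
        }
        return false;
    }
}
```

Note that tokens are spent only when a download is allowed to start, which is why the long-tail effects discussed later in section 4.3 appear.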

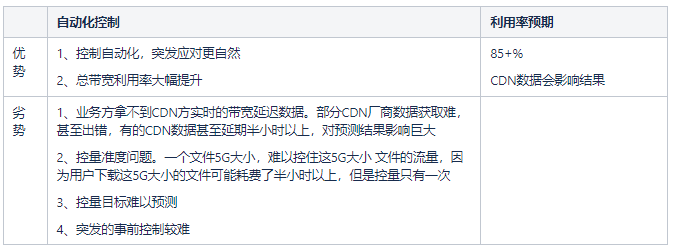

2.2.3 Automatic control

After global optimization, the natural next step for a technology developer is to replace manual work with programs and control traffic release at a finer granularity.

With this in mind, the author began exploring prediction techniques from machine learning, reasoning that anything with regular patterns and identifiable features can be predicted to some degree. Unlike stock prices, CDN bandwidth is affected by many factors, but the most influential ones are obvious. So we analyzed these factors: attribution first, then feature extraction and decomposition, then prediction of the threshold and of the bandwidth value at each point in time. We eventually solved this prediction problem and used prediction to regulate the peaks and valleys of CDN bandwidth.

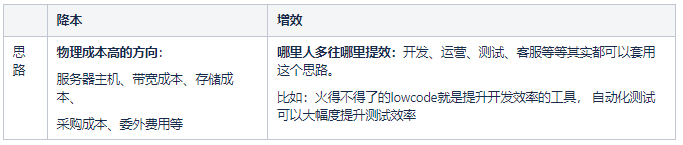

2.3 Origin of the cost reduction idea

When it comes to reducing costs and improving efficiency, the first thing a technology developer thinks of is replacing labor with technology and using programs to make everyone more efficient: automated testing, automated spot checks, automated alarm monitoring and classification, and so on, which are common in almost every team. Even automatic code generators that raise coding efficiency have already spawned a large number of platforms.

Next, let's look at our main ideas for reducing costs and improving efficiency.

The technical area the author is mainly responsible for is application distribution, whose costs are CDN bandwidth, storage, and hosts. Compared with the huge CDN cost, the storage and host costs of our relatively small business volume are insignificant. The most suitable direction for a breakthrough is therefore CDN cost.

CDN cost can be attacked in two ways: reducing bandwidth volume and improving bandwidth utilization.

Summary:

Reducing bandwidth volume is complex. Given our current situation, if bandwidth can be predicted and controlled automatically, utilization can be raised substantially, with a clear cost reduction effect. Moreover, the higher a company's CDN spend, the greater the savings.

3. Make predictions in a down-to-earth manner

Once the goal was set, the topic first went into the author's personal OKRs and was advanced gradually. In this chapter, the author describes our main explorations in the direction of prediction.

3.1 Exploration of Bandwidth Prediction

Our exploration had two phases. The early phase was mainly observation → analysis → modeling, similar to a feature analysis stage. The later phase was mainly algorithm simulation → algorithm verification: we selected the algorithm with the most satisfactory results, kept analyzing and exploring it in depth to extract more value, and researched the possibility of a final algorithmic breakthrough.

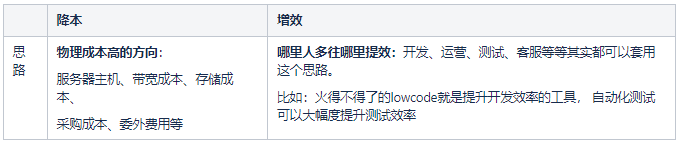

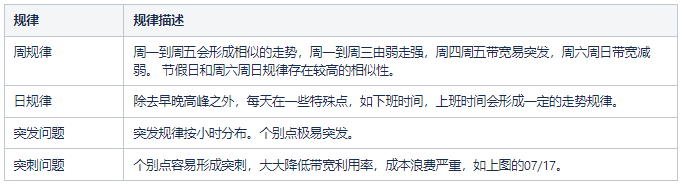

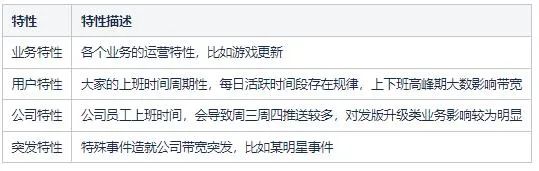

3.1.1 Observation rules

The figure shows the bandwidth trends of our company's three major projects from July 1 to July 31 this year (data desensitized).

Several obvious regularities can be observed:

3.1.2 Cause Analysis

Our bandwidth follows this trend because of the factors that influence it. Some "obvious" influencing factors are listed below.

3.1.3 Model Splitting

Once we understood what drives bandwidth, we researched machine learning, residual analysis, time series fitting, and other directions. However, the prediction models that ultimately suited us were a time series model for threshold prediction and a real-time bandwidth prediction model. From the first explorations to the final form, our modeling changed direction many times before gradually maturing.

3.2 Key points of the algorithm

[Recent data] Although CDN vendors cannot provide real-time bandwidth data, they can provide data with a delay, such as bandwidth from 15 minutes ago, which we simply call recent data.

Using recent bandwidth data, we incorporated the relationship between the delayed data and historical data into the model and developed our own algorithm (with a week as the main cycle unit) for real-time forecasting.
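The authors do not publish their algorithm. As a rough illustration only of how delayed recent data can be combined with a weekly history, here is a deliberately simple stand-in, not the authors' method: take the value at the same minute one week earlier and scale it by the ratio between the newest available actual point (for example, 15 minutes ago) and the value at that same point one week earlier. `WeekOverWeekForecast` is a hypothetical name, and the per-minute array layout is an assumption.

```java
/**
 * Hypothetical week-over-week scaling forecast; a simple stand-in, not the authors' model.
 * history[i] is bandwidth at minute i; actual values are known up to index latestKnown.
 */
final class WeekOverWeekForecast {
    static final int MINUTES_PER_WEEK = 7 * 24 * 60;

    /**
     * @param latestKnown index of the newest actual point (vendor data lags, e.g. 15 min)
     * @param target      minute to forecast; must satisfy latestKnown <= target < latestKnown + 1 week
     */
    static double forecast(double[] history, int latestKnown, int target) {
        if (latestKnown >= history.length
                || latestKnown < MINUTES_PER_WEEK
                || target < latestKnown
                || target - latestKnown >= MINUTES_PER_WEEK) {
            throw new IllegalArgumentException("need one week of history and a horizon under one week");
        }
        double lastWeekAtKnown = history[latestKnown - MINUTES_PER_WEEK];
        double lastWeekAtTarget = history[target - MINUTES_PER_WEEK];
        if (lastWeekAtKnown <= 0) {
            return lastWeekAtTarget; // no usable ratio; fall back to last week's value
        }
        // How this week compares with last week at the newest known point.
        double scale = history[latestKnown] / lastWeekAtKnown;
        return lastWeekAtTarget * scale;
    }
}
```

A naive ratio like this overshoots or undershoots exactly at turning points, which matches the article's observation that turning points are where a real model needs the most care.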

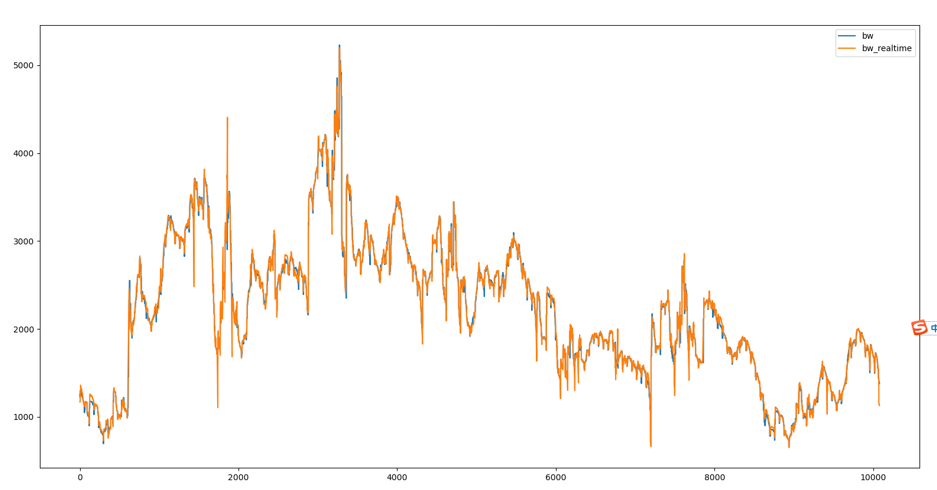

The graph below (data desensitized) shows the one-week forecast fit after incorporating recent data. Unlike residual learning, which is sensitive to abrupt change points, our model's main difficulty lies at bandwidth turning points: as long as the turning points are modeled properly, the algorithm performs well.

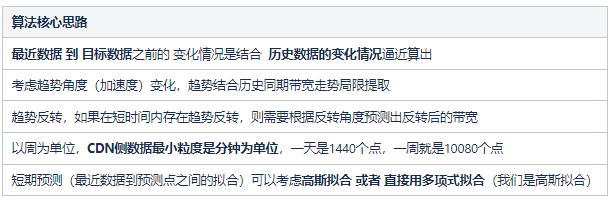

The core idea of our self-developed algorithm:

Algorithm notes:

4. Conscientiously being the "Foolish Old Man" (Yugong)

Suppose you can already predict bandwidth fairly accurately (our standard: apart from special abrupt change points, the error is within 5%). There are still many details to handle when actually putting this into production, and you may run into problems in model optimization or in technical implementation.

Next, we describe the set of solutions the "Yugong Platform" uses, from prediction to traffic control, and explain some practical implementation problems so that you can avoid detours.

4.1 Our implementation plan

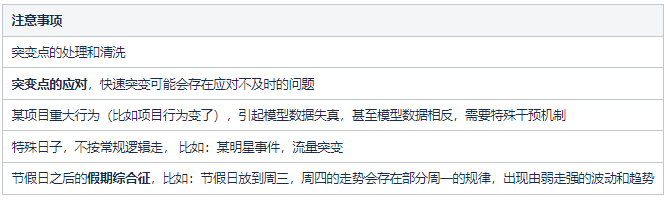

Overall, the ultimate goal of our solution is to reduce costs, and the cost reduction idea rests on the billing model. I summarize our plan in one sentence, as shown in the figure: predict the blue line, control the shaded area, and ultimately lower the blue line, which is our billed bandwidth, achieving the cost reduction effect shown.

4.2 The practical approach of the Yugong Platform

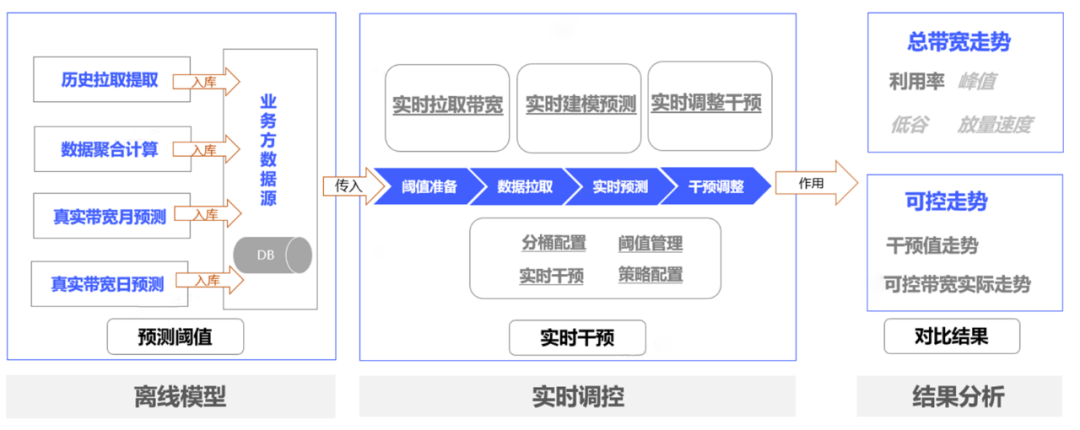

As shown in the figure below, the core idea of the Yugong Platform can be summarized as follows:

- An offline model predicts tomorrow's threshold

- A self-developed model predicts near-future bandwidth in real time

- A control value is calculated by combining the threshold with the predicted value

- The control value is written into the token bucket, which generates tokens every second for clients to consume
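The article does not give the formula that combines the threshold with the prediction. One plausible reading, stated here purely as an assumption, is that the predicted value is the bandwidth that will flow anyway, so the controllable pool receives whatever headroom is left below the threshold, clamped at zero and converted into tokens per second. `ControlValue` and its methods are hypothetical, and the conversion uses decimal units (1 Gbps = 1000 Mb/s = 125 MB/s).

```java
/**
 * Hypothetical control-value calculation for one interval.
 * Assumption: threshold caps total bandwidth; the prediction is traffic that flows anyway.
 */
final class ControlValue {

    /** Headroom in Gbps that the token bucket may release; never negative. */
    static double controlGbps(double thresholdGbps, double predictedGbps) {
        return Math.max(0.0, thresholdGbps - predictedGbps);
    }

    /** Gbps to MB of tokens per second, decimal units: 1 Gbps = 125 MB/s. */
    static double tokensMBPerSecond(double controlGbps) {
        return controlGbps * 1000.0 / 8.0;
    }
}
```

Clamping at zero matters: when the prediction already exceeds the threshold, the pool should simply stop releasing rather than produce a negative control value.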

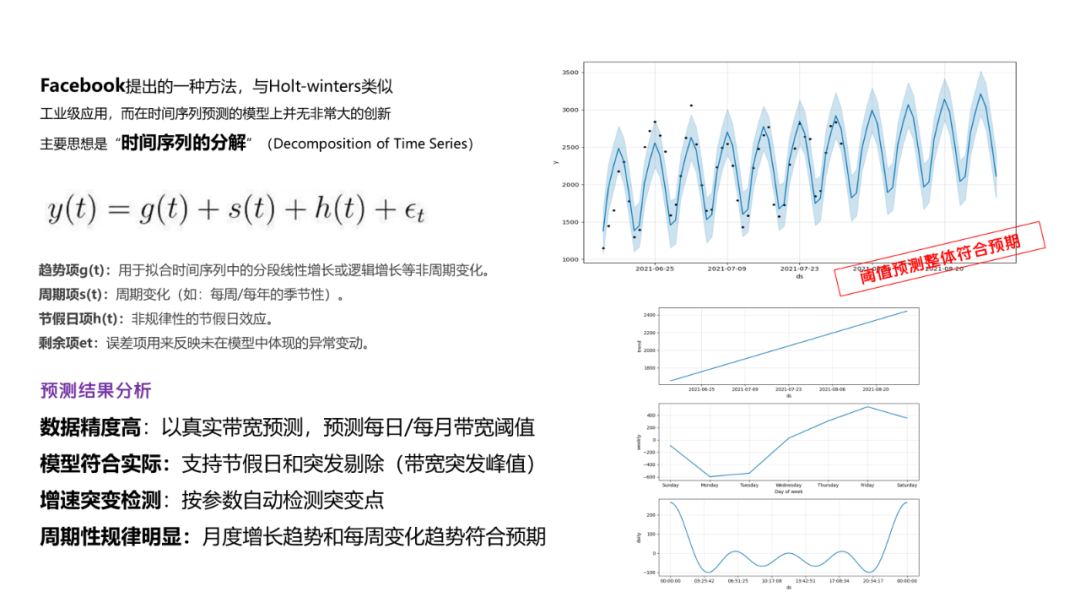

4.2.1 Using Prophet to predict the threshold

First, threshold prediction. Having already studied Prophet during our bandwidth prediction research, we selected it as the core algorithm for threshold prediction. Its time series modeling and its detection of change points suit our scenario very well, and the final results are also very good.

As shown in the figure below (data desensitized), apart from a few special burst points, most actual threshold points fall within the predicted range.

Threshold prediction is itself a time series prediction problem, and many comparable approaches are available. We use Prophet to predict the threshold and also designed an adaptive threshold model that raises the bandwidth threshold automatically, which reduces the risk of threshold deviation to some extent. The threshold we use is therefore generally a release threshold; combined with its adaptive behavior and some manual intervention, it largely guarantees our control effect.

4.2.2 Using self-developed algorithm to predict bandwidth

This was covered in detail in the prediction section, so I won't repeat it here. In short, we use recent bandwidth combined with historical trends to fit the bandwidth trend over an upcoming period, predicting short-term bandwidth.

4.2.3 Submodels to solve regulation problems

This mainly covers converting predicted data into control data and refining the result. Issues such as emergencies, boundaries, conversions, and interventions must be analyzed and handled according to actual business conditions.

4.2.4 The flow control SDK

We found that many services have controllable bandwidth, so we built a unified flow control SDK to bring more bandwidth under control; the more bandwidth under control, the better the effect. At its core, the SDK is still a token bucket. With it, all controllable bandwidth can be managed centrally, greatly reducing the effort each business spends on bandwidth problems.

4.3 Fine Control Capability

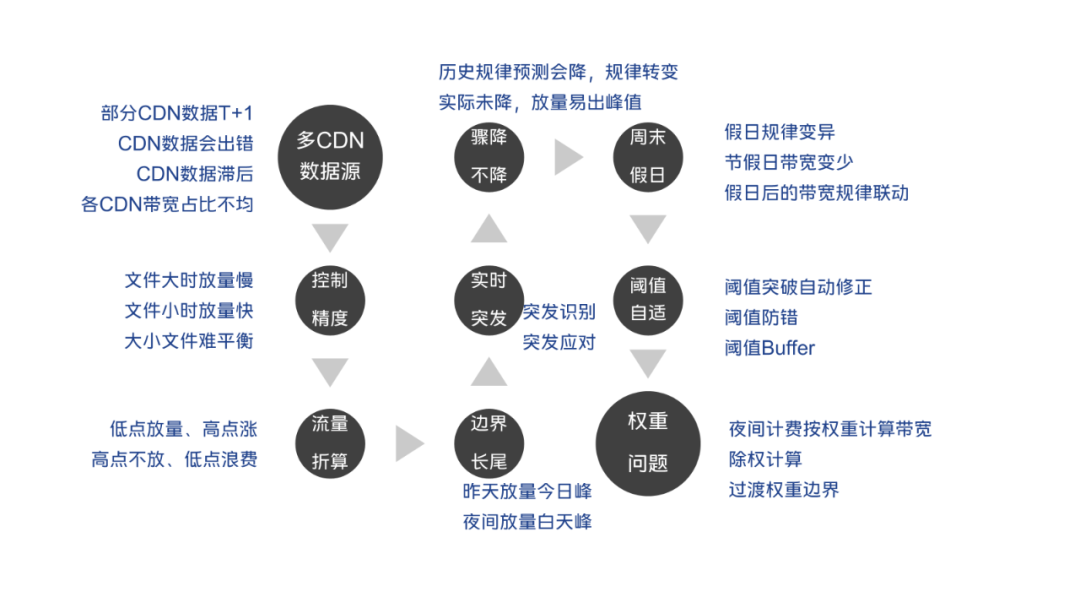

There is a substantial conversion step between the predicted threshold and the final control amount, and further thinking and optimization are needed to push bandwidth utilization higher. This section describes the challenges we encountered in flow control, with examples and a breakdown of each.

4.3.1 Multiple CDN data source issues

Companies generally use multiple CDNs, each assigned a share of the traffic. Some CDNs cannot provide the latest data, or impose such strict rate limits that pulling data is difficult. Our solution: pick a vendor with a reasonably stable share that cooperates well on data pulls, and use its data and its share to infer the company's total bandwidth, reducing dependence on the other CDN vendors.
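The inference described above is a single division, sketched here with hypothetical names: the reference vendor's near-real-time bandwidth divided by the share of traffic that vendor is configured to carry. The sketch assumes the configured share is the actual share; any drift in the real share shows up directly as estimation error.

```java
/**
 * Hypothetical estimator: company-wide bandwidth inferred from one vendor's figure
 * and the share of total traffic that vendor is configured to carry.
 */
final class TotalBandwidthEstimator {
    static double estimateTotal(double vendorBandwidth, double vendorShare) {
        if (vendorShare <= 0 || vendorShare > 1) {
            throw new IllegalArgumentException("vendorShare must be in (0, 1]");
        }
        // If the real share drifts from vendorShare, the error scales the whole estimate.
        return vendorBandwidth / vendorShare;
    }
}
```

For instance, 300 Gbps observed on a vendor configured for 25% of traffic implies about 1,200 Gbps in total; if that vendor's real share had slipped to 20%, the true total would be 1,500 Gbps, which is the distortion the note below warns about.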

Note: because the company has many businesses and some small ones do not support multi-CDN ratio control, this share will fluctuate. If it fluctuates too much, control becomes distorted and utilization suffers.

4.3.2 Large and small files make bandwidth control inaccurate

When throttling, you can generally only control when a download starts; once it starts, you don't know when it will finish. Small files (common small files, small resource distribution, and so on) download quickly, so control is accurate. Large files, such as system upgrade packages or 3 GB packages, may take more than an hour to download, so controlling only the start causes problems: the long tail of download time makes accurate control extremely hard.

Our solution ideas:

- Idea 1: Put very large files whose traffic is not time-sensitive into a coarse control model (think of it as the global-control approach described earlier), and use small files for precise control. Precise control uses the prediction model to top up the overall bandwidth.

- Idea 2: Split a large file's download into pieces, say 100 MB each, and request permission from the flow control server before downloading each piece. This is complex and requires client changes, so we treat it only as a possible direction.
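Idea 2 can be sketched on the client side as follows. The `permit` predicate stands in for a hypothetical call to the flow control server (true means tokens were granted for that many MB); chunk size, names, and the pause-and-resume behavior are assumptions for illustration, and no real HTTP range requests are made.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.LongPredicate;

/** Hypothetical planner for chunked downloads that ask for permission per chunk. */
final class ChunkedDownloadPlanner {

    /** Sizes in MB of each chunk; the last one may be smaller. */
    static List<Long> chunkSizes(long fileMB, long chunkMB) {
        if (fileMB < 0 || chunkMB <= 0) {
            throw new IllegalArgumentException("fileMB must be >= 0 and chunkMB > 0");
        }
        List<Long> sizes = new ArrayList<>();
        for (long offset = 0; offset < fileMB; offset += chunkMB) {
            sizes.add(Math.min(chunkMB, fileMB - offset));
        }
        return sizes;
    }

    /** Proceeds chunk by chunk while permits are granted; returns the MB allowed so far. */
    static long downloadWhilePermitted(long fileMB, long chunkMB, LongPredicate permit) {
        long done = 0;
        for (long size : chunkSizes(fileMB, chunkMB)) {
            if (!permit.test(size)) {
                break; // pause here; the client would retry later from offset `done`
            }
            // A real client would fetch the range [done, done + size) MB here.
            done += size;
        }
        return done;
    }
}
```

Because each permit covers at most one chunk, a 3 GB package no longer commits an hour of traffic at the moment it starts, which is what shortens the long tail.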

4.3.3 Flow conversion problem

This is also caused by the long tail of traffic. Suppose you allow 100 files per second to start downloading and each file is 100 MB: the nominal bandwidth is 10,000 MB/s. In reality, a download is continuous and will not finish within that second, so far less traffic actually flows out.

Our solution: introduce a traffic conversion factor. For example, you release 3 Gbps, but at full capacity only 2 Gbps actually flows; you need to set a conversion factor manually to control traffic more precisely.
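A minimal sketch of the conversion factor, assuming it is defined as observed bandwidth divided by nominally released bandwidth (2 Gbps observed for 3 Gbps released gives roughly 0.67), and that the release amount is then scaled so the observed bandwidth lands on the target. The class and method names are hypothetical.

```java
/**
 * Hypothetical conversion-factor helper.
 * Assumption: factor = observed bandwidth / nominally released bandwidth at full capacity.
 */
final class ConversionFactor {

    static double factor(double observedGbps, double releasedGbps) {
        if (releasedGbps <= 0) {
            throw new IllegalArgumentException("releasedGbps must be > 0");
        }
        return observedGbps / releasedGbps;
    }

    /** Nominal amount to release so the observed bandwidth reaches targetGbps. */
    static double releaseFor(double targetGbps, double factor) {
        if (factor <= 0) {
            throw new IllegalArgumentException("factor must be > 0");
        }
        return targetGbps / factor;
    }
}
```

Since the factor depends on how long downloads run, a shift in typical package size changes it, which is the utilization risk noted next.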

Note: fluctuations in package size make this conversion factor drift, which hurts utilization.

4.3.4 Boundary long tail problem

Normally the billing boundary is midnight, since peaks are billed per day. Bandwidth late in the evening (for example, at 23:59) is often underestimated. If you release a lot of bandwidth then (say, 10 Tbps because of a burst that day) and the traffic actually materializes, the long tail means it may not finish until 00:01 the next day. Because the traffic is so large, it creates a huge peak in the early morning of the new billing day.

Our solution: apply special control to the points just before the boundary. For example, from 23:50 to 23:59, control volume using tomorrow's peak value.
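The boundary rule can be expressed as a simple time check. This sketch assumes one threshold per calendar day and a fixed pre-boundary window starting at 23:50, as in the example above; `BoundaryControl` is a hypothetical name.

```java
import java.time.LocalTime;

/** Hypothetical rule: in the last minutes before midnight, control with tomorrow's threshold. */
final class BoundaryControl {
    static final LocalTime WINDOW_START = LocalTime.of(23, 50);

    static double thresholdFor(LocalTime now, double todayThreshold, double tomorrowThreshold) {
        // 23:50:00 through 23:59:59 counts as the pre-boundary window, because traffic
        // released then will largely be billed on the next day.
        return now.isBefore(WINDOW_START) ? todayThreshold : tomorrowThreshold;
    }
}
```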

4.3.5 Emergency response issues

This mainly refers to bursts the model identifies. For example, the data shows that bandwidth rose sharply 20 minutes ago. From this burst, the model can recognize that current bandwidth is high, and because of the trend it predicts an even higher value. Our solution: judge bursts by the total growth (or growth as a proportion), the growth rate (or its proportion), or the maximum growth step (the largest per-minute increase), and apply an emergency response plan.
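The three burst signals named above can be checked over a short per-minute window, as in the sketch below. The limits are hypothetical parameters that would be tuned per business, and the window is assumed to be ordered oldest first; this is one straightforward way to combine the signals, not the platform's actual rules.

```java
/** Hypothetical burst detector using total growth, growth ratio, and largest step. */
final class BurstDetector {
    private final double maxTotalGrowth; // absolute rise across the window, e.g. in Gbps
    private final double maxGrowthRatio; // rise relative to the first point, e.g. 0.5 = +50%
    private final double maxStep;        // largest allowed rise between consecutive minutes

    BurstDetector(double maxTotalGrowth, double maxGrowthRatio, double maxStep) {
        this.maxTotalGrowth = maxTotalGrowth;
        this.maxGrowthRatio = maxGrowthRatio;
        this.maxStep = maxStep;
    }

    /** window holds per-minute bandwidth, oldest first. */
    boolean isBurst(double[] window) {
        if (window.length < 2) {
            return false;
        }
        double first = window[0];
        double totalGrowth = window[window.length - 1] - first;
        // With a non-positive starting point the ratio is meaningless; rely on the other signals.
        double growthRatio = first > 0 ? totalGrowth / first : 0.0;
        double largestStep = 0.0;
        for (int i = 1; i < window.length; i++) {
            largestStep = Math.max(largestStep, window[i] - window[i - 1]);
        }
        return totalGrowth > maxTotalGrowth
                || growthRatio > maxGrowthRatio
                || largestStep > maxStep;
    }
}
```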

As some readers have noticed, some emergencies cannot be predicted. How do we limit the losses?

① Reserve threshold headroom for small bursts

For example, if the predicted target threshold is 2T, we may set 1.8T as the control threshold. Then even if a short burst pushes bandwidth up to 2T, it stays within our target. The reserved 0.2T is a safety margin we leave ourselves; our goal is never 100% bandwidth utilization, and this reservation is utilization we deliberately sacrifice. When bandwidth peaks are higher, the reserve should be sized accordingly.
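The reserve is a fixed fraction taken off the predicted target. A minimal sketch, assuming the reserve is expressed as a ratio (2T with a 10% reserve gives 1.8T); `ReservedHeadroom` is a hypothetical name.

```java
/** Hypothetical helper: control threshold = target * (1 - reserveRatio). */
final class ReservedHeadroom {
    static double controlThreshold(double target, double reserveRatio) {
        if (reserveRatio < 0 || reserveRatio >= 1) {
            throw new IllegalArgumentException("reserveRatio must be in [0, 1)");
        }
        return target * (1 - reserveRatio);
    }
}
```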

② Connect large-burst sources to the control system

What counts as a large burst? Some businesses, such as game releases and WeChat releases, involve large packages and a wide user base, and every release inevitably causes a large burst. A sudden bandwidth jump that only large services can cause is a large burst. If the burst does not ramp up too fast, you can throttle it to suppress the trend (we had already done much of this in the first optimization stage); once it becomes a slow burst, our model can recognize the trend and handle it well, so such bursts are not a problem. Conversely, a large burst that cannot be suppressed, such as a sudden jump of more than 300G that comes too fast to react to, leaves the model no time to adjust. We recommend connecting such businesses directly to the control system so that it can free up capacity in advance.

③ In periods prone to bursts, release less bandwidth and sacrifice some of it for defense

Some periods, such as the evening peak, are prone to bursts: if not one thing, then another, and unexpected bursts always occur. We recommend lowering the threshold directly in these periods, for example from 2T to 1.5T for a while. The 0.5T is protection for the system. Since only one period is cut, and the blocked traffic is released in other periods, the overall impact is acceptable.
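A defensive window can be implemented as a time-range check that swaps in the lower threshold. The window bounds and thresholds are hypothetical inputs, and the sketch assumes the window does not cross midnight.

```java
import java.time.LocalTime;

/** Hypothetical helper: use a lower threshold inside a burst-prone window [start, end). */
final class DefensiveWindow {
    static double thresholdAt(LocalTime now, LocalTime start, LocalTime end,
                              double normal, double defensive) {
        // Assumes start < end, i.e. the window does not wrap past midnight.
        boolean inside = !now.isBefore(start) && now.isBefore(end);
        return inside ? Math.min(normal, defensive) : normal;
    }
}
```

For example, with a hypothetical 19:00 to 23:00 evening window, a normal threshold of 2T, and a defensive threshold of 1.5T, 20:30 returns 1.5T and 18:00 returns 2T.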

4.3.6 Predicted drops that do not happen

When the model predicts a sudden drop (based on historical patterns), you expect bandwidth at that point to fall by a fairly regular amount. However, the actual drop may be smaller, and regulating as if it were larger can create new peaks.

To address this, we define dip scenarios and dip recognition rules, specially mark dip points, apply a dedicated handling strategy for bandwidth at dips, and avoid introducing new peaks.

4.3.7 Manual intervention processing

Some special scenarios, such as releases of very popular software whose traffic can be expected to be huge, or updates of heavily pre-registered games (such as King of Glory), produce bursts that can be known in advance.

Our solution: provide a burst-reporting SDK and a back-office input tool. Businesses prone to bursts use the SDK to report data in advance, so the model can prepare for the burst.

For example, using the bandwidth volume and the patterns of past burst scenarios, we simulate the burst peak; during the burst the threshold is lowered, and before the burst the threshold is raised.

4.3.8 Special Mode: Weekend & Holiday Mode

Holidays differ from the usual bandwidth trend in several obvious ways:

- People are not at work, so controllable bandwidth is noticeably smaller

- The burst point shifts toward the morning peak

Our solution: model holidays separately and analyze holiday bandwidth trends.

4.3.9 Threshold adaptive adjustment problem

If the threshold stays fixed, then after a burst has already set a higher peak for the day, continuing to use the old threshold wastes a lot of bandwidth.

Our solution: a threshold growth model. If the peak of the bandwidth outside the pool (uncontrolled bandwidth) exceeds the threshold, or total bandwidth exceeds the threshold by a certain percentage, the threshold is raised.
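A minimal sketch of the growth rule, under two assumptions not stated in the article: the tolerance is a fraction (0.05 means 5%), and when either condition fires the threshold is raised to the day's observed total peak, since that peak is already billed. `AdaptiveThreshold` is a hypothetical name.

```java
/** Hypothetical threshold growth rule for one billing day. */
final class AdaptiveThreshold {
    /**
     * @param uncontrolledPeak peak of bandwidth outside the controlled pool
     * @param totalPeak        peak of total bandwidth so far today
     * @param tolerance        allowed overshoot as a fraction, e.g. 0.05
     */
    static double next(double threshold, double uncontrolledPeak, double totalPeak, double tolerance) {
        boolean grow = uncontrolledPeak > threshold || totalPeak > threshold * (1 + tolerance);
        // Once today's billed peak is higher, holding the old threshold only wastes headroom.
        return grow ? Math.max(threshold, totalPeak) : threshold;
    }
}
```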

4.3.10 Bandwidth weight problem

With intense CDN competition, and given user habits (more bandwidth during the day, less at night), some CDN vendors support segmented billing, meaning we can use more bandwidth in some periods and less in others.

Our solution: because billing is segmented, bandwidth weights must be set for different periods so that the bandwidth model runs smoothly. Of course, segmentation introduces new boundary problems that must be handled as well.
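Segmented billing schemes differ by vendor. Assuming, for illustration only, that the vendor bills weight × bandwidth in each segment, the raw bandwidth allowed at a given time is the weighted threshold divided by that segment's weight; for instance, a night segment weighted 0.5 allows twice the bandwidth. The segment layout and names below are hypothetical.

```java
import java.time.LocalTime;
import java.util.List;

/** Hypothetical per-segment weights for segmented billing. */
final class SegmentWeights {

    /** A billing segment [start, end) with its weight; assumes no midnight wrap. */
    static final class Segment {
        final LocalTime start;
        final LocalTime end;
        final double weight;

        Segment(LocalTime start, LocalTime end, double weight) {
            if (!start.isBefore(end) || weight <= 0) {
                throw new IllegalArgumentException("need start < end and weight > 0");
            }
            this.start = start;
            this.end = end;
            this.weight = weight;
        }
    }

    /** Weight of the segment containing t; time not covered by any segment weighs 1.0. */
    static double weightAt(LocalTime t, List<Segment> segments) {
        for (Segment s : segments) {
            if (!t.isBefore(s.start) && t.isBefore(s.end)) {
                return s.weight;
            }
        }
        return 1.0;
    }

    /** Raw bandwidth allowed at t so that weight * bandwidth stays within the weighted threshold. */
    static double thresholdAt(LocalTime t, double weightedThreshold, List<Segment> segments) {
        return weightedThreshold / weightAt(t, segments);
    }
}
```

The jump in allowed bandwidth at each segment edge is exactly the new boundary problem mentioned above, so the boundary handling from 4.3.4 would need to apply at every edge.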

4.4 Technical issues

This section covers the technical issues you are most likely to encounter with the technologies we use.

4.4.1 Hot key problem

Because there are many integrated callers and the traffic passing through the download limiter can reach millions of requests per second, hot keys are inevitable. We determine a set of key prefixes from the mapping between slots and nodes (available from your DBA), and each request is randomly assigned one prefix, and therefore one node. This spreads traffic evenly across the Redis nodes and reduces the pressure on each node.
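Here is a sketch of random shard selection. It assumes Redis Cluster and uses hash tags (`{...}`), so that only the chosen prefix determines the slot; it also keeps the deduction key and the control-value key under the same tag, because Redis Cluster requires all keys passed to one script to hash to the same slot. Under this layout the platform would have to write its control value under every tag. `ShardedFlowKey` and the prefix list are hypothetical; the real prefixes would come from the DBA's slot-to-node mapping.

```java
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical shard picker spreading one logical flow pool across Redis nodes. */
final class ShardedFlowKey {
    private final List<String> prefixes;

    ShardedFlowKey(List<String> prefixes) {
        if (prefixes.isEmpty()) {
            throw new IllegalArgumentException("need at least one prefix");
        }
        this.prefixes = List.copyOf(prefixes);
    }

    /** The node count passed to the script as ARGV[1]; each shard holds 1/N of the pool. */
    int shardCount() {
        return prefixes.size();
    }

    /**
     * Picks a random shard and returns {deductionKey, controlKey}. Both share the same
     * {hash tag}, so Redis Cluster maps them to one slot, as a multi-key script requires.
     */
    String[] pickKeys(String deductionBase, String controlBase) {
        String tag = "{" + prefixes.get(ThreadLocalRandom.current().nextInt(prefixes.size())) + "}";
        return new String[] {tag + deductionBase, tag + controlBase};
    }
}
```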

Below, for reference, is the Lua script used by our flow control SDK:

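```java
// Lua script that initializes and deducts CDN traffic from one shard of the pool.
// KEYS[1]  deduction key (this shard's remaining traffic)
// KEYS[2]  key holding the control value computed by the flow control platform
// ARGV[1]  number of nodes (shards), e.g. 30, integer
// ARGV[2]  fallback traffic in MB per shard, already divided by the node count, integer
//          (used if the platform's calculation is late or fails)
// ARGV[3]  traffic requested by this call, in MB (megabytes, not megabits), integer
// ARGV[4]  expiry of the shard key in seconds, integer
// Returns the shard's traffic before deduction, or '-1' if the shard is empty.
public static final String InitAndDecrCdnFlowLUA =
        "local flow = redis.call('get', KEYS[1]) " +
        // Prefer the platform's control value; use the fallback value if it is missing
        "if not flow then " +
        "  local ctrl = redis.call('get', KEYS[2]) " +
        "  if not ctrl then " +
        "    flow = ARGV[2] " +
        "  else " +
        // Control value * 1024 (presumably GB to MB), split evenly across the nodes
        "    local ctrlInt = tonumber(ctrl) * 1024 " +
        "    local nodes = tonumber(ARGV[1]) " +
        "    flow = tostring(math.floor(ctrlInt / nodes)) " +
        "  end " +
        "end " +
        // Traffic currently in this shard of the pool
        "local current = tonumber(flow) " +
        // Amount to deduct
        "local decr = tonumber(ARGV[3]) " +
        // No traffic left in the pool: the deduction fails
        "if current <= 0 then " +
        "  return '-1' " +
        "end " +
        // Remaining traffic; a request larger than what is left drains the shard to 0
        "local remaining = 0 " +
        "if current > decr then " +
        "  remaining = current - decr " +
        "end " +
        // Write back; note that SETEX resets the key's expiry on every successful call
        "redis.call('setex', KEYS[1], ARGV[4], remaining) " +
        "return flow";
```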

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

- 12.

- 13.

- 14.

- 15.

- 16.

- 17.

- 18.

- 19.

- 20.

- twenty one.

- twenty two.

- twenty three.

- twenty four.

- 25.

- 26.

- 27.

- 28.

- 29.

- 30.

- 31.

- 32.

- 33.

- 34.

- 35.

- 36.

- 37.

- 38.

4.4.2 Local cache

Although the hot key problem is solved, when flow control is set to 0 (for example, for an entire hour), a large number of requests still arrive, especially when client retry mechanisms make requests more frequent. Sending all of them through the Lua script is wasteful.

So we need a cache that says the flow control platform has no traffic for the current second, to reduce concurrency.

Our solution: an in-memory cache. When all nodes are out of traffic, set a local cache key for that second; requests filtered out by this local key never reach Redis, which greatly reduces Redis access.
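A per-process version of that cache can be as small as one atomic value holding the epoch second that was found empty. This sketch assumes the caller decides when the pool counts as empty (the article's condition is that all nodes are out of traffic) and passes the current time explicitly; `EmptyPoolCache` is a hypothetical name.

```java
import java.util.concurrent.atomic.AtomicLong;

/**
 * Hypothetical per-JVM "no traffic this second" flag. Once the pool is known to be empty,
 * later requests in the same second are rejected locally without calling Redis.
 */
final class EmptyPoolCache {
    private final AtomicLong emptySecond = new AtomicLong(Long.MIN_VALUE);

    /** Records that the pool was empty during the second containing nowMillis. */
    void markEmpty(long nowMillis) {
        emptySecond.set(nowMillis / 1000);
    }

    /** True if the pool was already found empty in this same second. */
    boolean knownEmpty(long nowMillis) {
        return emptySecond.get() == nowMillis / 1000;
    }
}
```

Because the flag covers only the current second, it expires on its own; the next second's first request goes to Redis again and picks up any newly released tokens.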

There is also the problem of traffic balance. Within one minute, client traffic may look like this: a surge in the first 10 seconds triggers the limit, and then no traffic flows for the remaining 50 seconds.

We do not yet have a good server-side solution for this. It may need an end-to-end solution involving the clients, but there are many different clients, so it is hard to push forward.

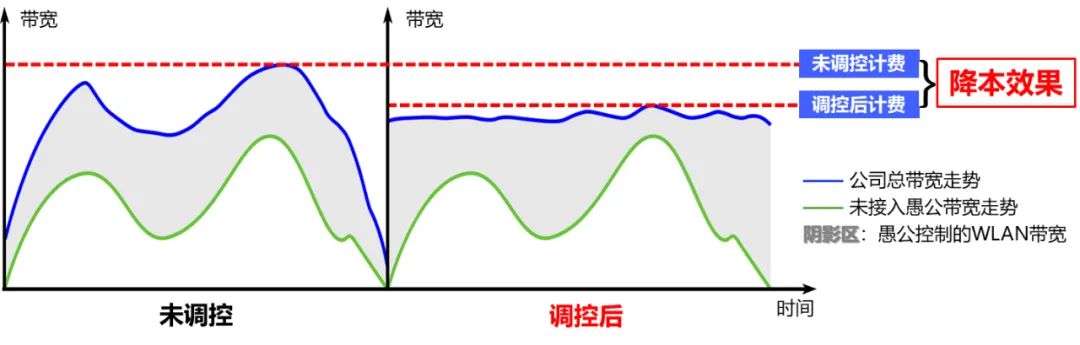

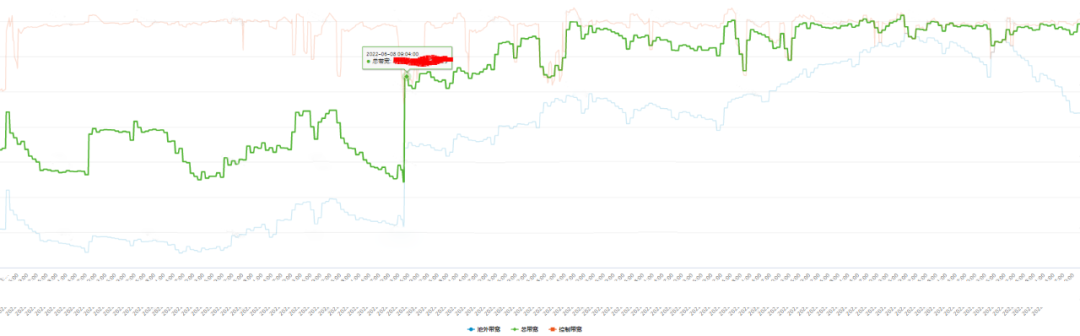

4.5 Final effect

The figure shows the bandwidth trend (data desensitized). As it shows, regulating bandwidth with the "Yugong Platform" significantly improves CDN bandwidth utilization.

5. Final thoughts

This article described the "Yugong Platform" from research to implementation:

- Starting from the business, we chose CDN cost reduction as our technical research direction.

- By observing patterns, we selected Prophet as the offline threshold prediction algorithm.

- Through an algorithmic breakthrough, we developed our own fitting algorithm for real-time prediction.

- Through continuous thinking, we addressed problems in the model and kept optimizing.

- Ultimately, a technical approach created substantial cost savings for the company.