The HTTP protocol "Shiquan Dabu Wan" that must be mastered in front-end and back-end development

HTTP stands for HyperText Transfer Protocol. The name breaks down into three parts:

- Hypertext: Refers to a mixture of text, pictures, audio, video, files, etc., such as the most common HTML.

- Transfer: Refers to moving data from one party to another, which may be thousands of miles apart.

- Protocol: Refers to some agreements made by the two parties in communication, such as how to start communication, the format and order of information, and how to end communication.

What is the HTTP protocol for? It is used for communication between a client and a server. HTTP is the protocol we encounter most often when browsing the web, and the browser is the most common HTTP client.

For example, when we use a browser to access Taobao, the browser will send a request message following the HTTP protocol to the Taobao server, telling the Taobao server that it wants to obtain Taobao homepage information.

After the Taobao server receives this message, it will send a response message that also follows the HTTP protocol to the browser, and the response message contains the content of the Taobao homepage.

After the browser receives the response message, it parses the content and displays it on the interface.
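The round trip described above can be sketched with Python's standard library. This is a minimal illustration, not how a real site works: a throwaway local server stands in for the Taobao server, and the names `HomePageHandler` and `fetch_home_page` are made up for this example.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HomePageHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the web server: answers every GET with a tiny page."""

    def do_GET(self):
        body = b"<html><body>home page</body></html>"
        self.send_response(200)  # writes the status line
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()  # writes the blank line that ends the headers
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_home_page():
    """Start the local server, send one GET request, and return the response parts."""
    server = HTTPServer(("127.0.0.1", 0), HomePageHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
        conn.request("GET", "/")  # the client sends the request message
        response = conn.getresponse()  # the client reads the response message
        result = (response.status, response.reason, response.read())
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()
```

Calling `fetch_home_page()` returns the status code, the reason phrase, and the page bytes, which is the same information a browser parses before rendering.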

1. HTTP request

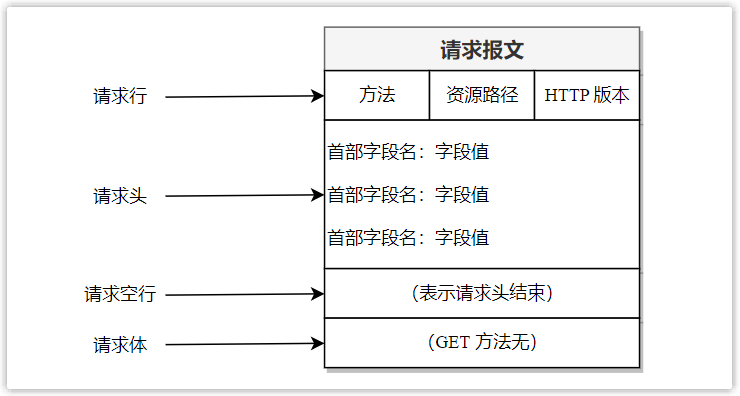

The information sent by the client to the server is called a request message, and the general structure is as follows:

(1) Request line

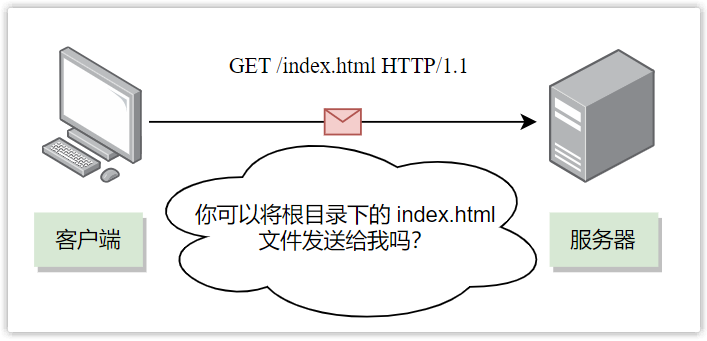

The request line is used to explain what to do, and consists of three parts, separated by spaces.

- Method: Specifies what kind of operation to perform on the requested resource (such as query or modification). Common methods are GET, POST, PUT, DELETE, and HEAD. When the front end and back end are developed separately, the RESTful design style is often followed, which uses POST, DELETE, PUT, and GET to represent adding, deleting, modifying, and querying data, respectively.

- Resource path: Specifies the location of the requested resource on the server. For example, /index.html means to access the HTML file named index in the root directory of the server.

- HTTP version: Specifies the HTTP version used. Currently the most used version is HTTP/1.1.

For example:

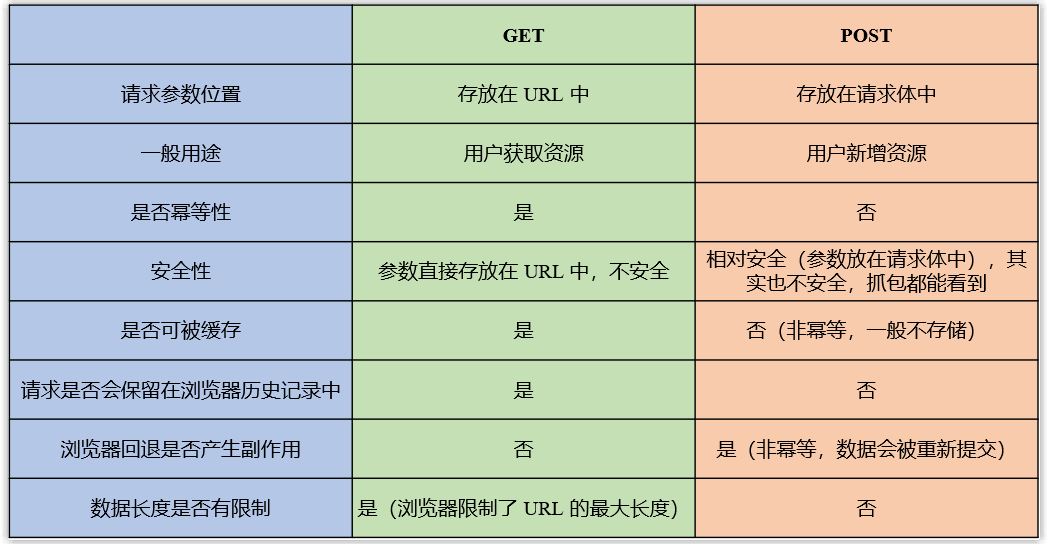
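The three-part structure of the request line can also be shown in code. The sketch below is illustrative only; `RequestLine` and `parse_request_line` are names invented for this example, and a real server would validate much more.

```python
from typing import NamedTuple


class RequestLine(NamedTuple):
    method: str
    path: str
    version: str


def parse_request_line(line: str) -> RequestLine:
    """Split a request line into method, resource path, and HTTP version."""
    parts = line.strip().split(" ")
    if len(parts) != 3:
        raise ValueError(f"expected 3 space-separated parts, got {len(parts)}")
    method, path, version = parts
    if not version.startswith("HTTP/"):
        raise ValueError(f"unexpected version field: {version!r}")
    return RequestLine(method, path, version)
```

For instance, `parse_request_line("GET /index.html HTTP/1.1")` yields the method `GET`, the path `/index.html`, and the version `HTTP/1.1`.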

A common interview question: what is the difference between GET and POST? Here is the answer.

- First, GET request parameters are generally placed in the URL, while POST request parameters are placed in the request body. GET is therefore less private than POST, because the parameters are directly visible in the URL. That does not make POST secure: without encryption, anyone who captures the packets can read the request body in plaintext. In addition, browsers limit the length of the URL. For example, Internet Explorer limits a URL to about 2 KB, which caps how much data a GET request can carry, while the HTTP protocol itself places no limit on the size of a POST body.

- Second, GET is generally used to retrieve data and POST to add data. This is where idempotence comes in. An operation is idempotent if, under the same conditions, sending the request once or repeating it many times has the same effect on the resource, so repeated requests cause no extra side effects. GET only retrieves resources and does not change them, so it is idempotent. POST adds a new resource, so repeating it creates multiple resources, and it is not idempotent. For this reason, GET requests can be cached, can be kept in the browser history, and can be repeated safely when the user navigates back, while POST requests generally cannot.

- Finally, at the transport level there is no essential difference between GET and POST. HTTP runs on top of TCP, so both GET and POST are carried over a TCP connection. Adding a request body to a GET, adding URL parameters to a POST, using GET to create data, or using POST to query data are all technically feasible; the differences above are conventions, not hard limits.
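The first difference is easy to see by building the two messages by hand. This is a sketch under assumptions: `build_get` and `build_post` are hypothetical helpers, `example.com` and `/search` are placeholder values, and form encoding is only one of several possible body formats.

```python
from urllib.parse import urlencode


def build_get(host: str, path: str, params: dict) -> str:
    """GET: the parameters travel in the URL, and there is no body."""
    query = urlencode(params)
    return f"GET {path}?{query} HTTP/1.1\r\nHost: {host}\r\n\r\n"


def build_post(host: str, path: str, params: dict) -> str:
    """POST: the same parameters travel in the request body."""
    body = urlencode(params)  # urlencode output is ASCII, so len() equals the byte count
    return (
        f"POST {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Content-Type: application/x-www-form-urlencoded\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
        f"{body}"
    )


print(build_get("example.com", "/search", {"q": "http", "page": 1}))
print(build_post("example.com", "/search", {"q": "http", "page": 1}))
```

Both messages are plain text on the wire unless the connection is encrypted, which is why moving parameters into the body does not by itself make POST secure.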

(2) Request header

The request header is used to pass some additional important information to the server, such as the language that can be received.

The request header consists of field names and field values, separated by colons. Some common request headers are:

| Request header | Meaning |
| --- | --- |
| Host | The domain name that receives the request |
| User-Agent | Name, version number, and other information about the client software |
| Connection | Communication option that sets whether the TCP connection is kept open after the response is sent |
| Cache-Control | Directives that control caching |
| Referer | The source of the request (when a hyperlink is clicked to go to the next page, the URI of the previous page is recorded) |
| Accept | The data types supported by the client, expressed as MIME types |
| Accept-Encoding | Encoding formats supported by the client |
| Accept-Language | Languages supported by the client |
| If-Modified-Since | Used to determine whether the cached resource is still valid (the client tells the server the last modification time of its local copy) |
| If-None-Match | Used to determine whether the cached resource is still valid |
| Range | Used for resuming a transfer; specifies the positions of the first and last bytes requested |
| Cookie | Identifies the requester and carries saved state information |

(3) Blank line

The blank line marks the end of the request headers.

(4) Request body

The request body carries the data that the client wants to send to the server, such as request parameters. It usually appears in POST requests; GET requests normally have no request body, and their parameters appear directly in the URL.

The request line and the request headers are formatted text, but the request body is different: it can contain arbitrary data, whether text or binary content such as pictures and videos.

2. HTTP response

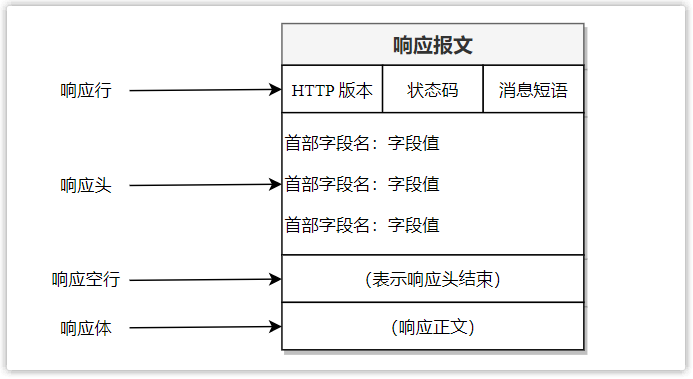

The information sent by the server to the client is called a response message, and the structure of the response message is generally as follows:

(1) Response line

The response line is used to describe the processing of the request and consists of three parts separated by spaces.

- HTTP version: Specifies the HTTP version used. For example, HTTP/1.1 means that the HTTP version used is 1.1.

- Status code: Describes the server's processing result for the request as three digits. For example, 200 means success.

- Reason phrase: Describes the server's processing result for the request in text. For example, OK means success.

For example:

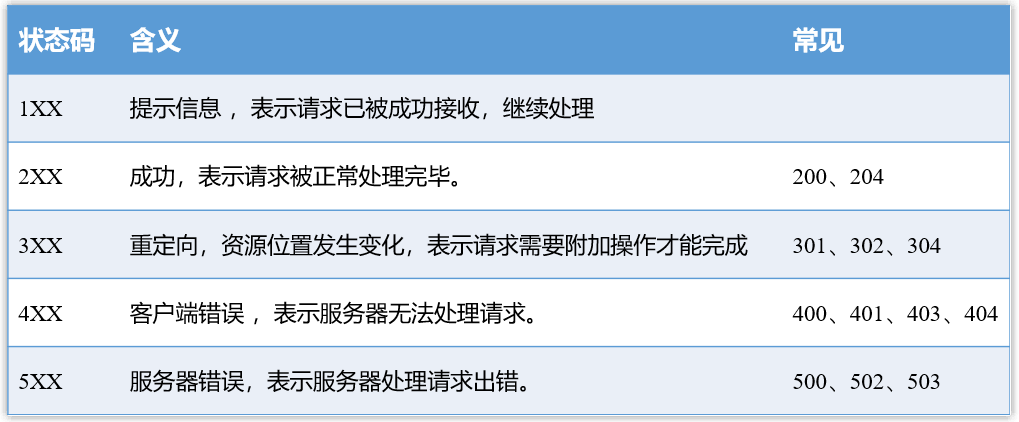

A common interview question: what are the common HTTP status codes? Here is the answer.

- 200 OK: Indicates that the request was processed normally, which is the most common status code.

- 204 No Content: Indicates that the request is processed normally, but the response body content is not included in the returned response message.

- 301 Moved Permanently: Permanently redirected, indicating that the requested resource has been permanently moved, the new URL is defined in the Location field of the response message, and the browser will automatically obtain the new URL and issue a new request. Scenario: For example, after building a website, change the url of the website, and apply for a new domain name, but hope that the previous url can still be accessed, so you can make a redirection to the new url. For example, the earliest website of JD.com http://www.360buy.com redirects to http://www.jd.com.

- 302 Found: Temporary redirection (that is, it may change in the future). The requested resource has been temporarily assigned a new URL, which is returned in the Location field of the response message, and the browser automatically issues a new request to that URL. For example, when a user who is not logged in visits the personal center page, the site can temporarily redirect to the login URL. Another example is a protocol change, such as JD.com redirecting http://www.jd.com to https://www.jd.com. As a third example, if the website's backend needs system maintenance tonight and the service is temporarily unavailable, a 302 redirect can switch the traffic to a static notification page. When the browser sees the 302, it knows the situation is only temporary, does not cache the redirect, and accesses the original address again the next day.

- 304 Not Modified: Indicates that the previously cached copy of the resource is still valid, so the client can keep using its cache.

- 400 Bad Request: A general error status code, indicating that there is a syntax error in the request message, and an error occurred on the client side.

- 401 Unauthorized: The user has not been authenticated.

- 403 Forbidden: Indicates that although the server has received the request, it refuses to provide the service. The common reason is that there is no access right (that is, the user is not authorized).

- 404 Not Found: Indicates that the requested resource does not exist.

- 500 Internal Server Error: It means that the server has an error, and there may be some bugs or failures.

- 502 Bad Gateway: It is usually an error code returned by the server as a gateway or proxy, indicating that the server itself is working normally, but an error occurred when accessing the backend server (the backend server may be down).

- 503 Service Unavailable: Indicates that the server is temporarily overloaded or is being shut down for maintenance, and cannot process requests temporarily, and you can try again later. If the web server limits the traffic, it can directly respond to the overloaded traffic with a 503 status code.
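The first digit of a status code gives its general class (2xx success, 3xx redirection, 4xx client error, 5xx server error). As a small sketch, Python's standard `http.HTTPStatus` enum can look up the reason phrase; the helper name `describe_status` is invented for this example.

```python
from http import HTTPStatus

# Class of a status code, keyed by its first digit.
STATUS_CLASSES = {
    1: "informational",
    2: "success",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def describe_status(code: int) -> str:
    """Return the code, its standard reason phrase, and its class."""
    phrase = HTTPStatus(code).phrase  # raises ValueError for unknown codes
    return f"{code} {phrase} ({STATUS_CLASSES[code // 100]})"


for code in (200, 204, 301, 302, 304, 400, 401, 403, 404, 500, 502, 503):
    print(describe_status(code))
```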

(2) Response header

The response header is used to pass some additional important information to the client, such as the length of the response content, etc.

The response header consists of field names and field values, separated by a colon. Some common response headers are:

| Response header | Meaning |
| --- | --- |
| Date | The date and time at which the server generated and sent the response message |
| Server | Information about the HTTP server application, similar to User-Agent in the request message |
| Location | Used with redirection to provide the new URI |
| Connection | Communication option that sets whether the TCP connection is kept open after the response is sent |
| Cache-Control | Directives that control caching |
| Content-Type | The type of the response content returned by the server |
| Content-Length | The length of the response body returned by the server |
| Content-Encoding | The encoding of the response body returned by the server |
| Content-Language | The language of the response body returned by the server |
| Last-Modified | When the response content was last modified |
| Expires | The time at which the resource expires; until then the browser uses its local cache |
| ETag | Used for negotiated caching; carries a digest value that identifies the resource version |
| Accept-Ranges | Used for resumable transfers; indicates whether the server supports range requests and in which unit |
| Set-Cookie | Sets state information on the client |

(3) Blank line

The blank line marks the end of the response headers.

(4) Response body

The response body carries the content that the server sends to the browser.

Like the request body of the request message, the response body can contain arbitrary binary data. After the browser receives the response message, it loads the text into memory, parses and renders it, and finally displays the page content.
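Putting the four parts together, a response message can be taken apart as follows. This is a simplified sketch: `parse_response` is a hypothetical helper that assumes the whole message is already in memory and that the body length is given by Content-Length; real clients also handle chunked bodies and repeated headers.

```python
def parse_response(raw: bytes):
    """Split a raw response into status line fields, headers, and body."""
    head, _, rest = raw.partition(b"\r\n\r\n")  # the blank line ends the headers
    lines = head.decode("iso-8859-1").split("\r\n")
    version, status, reason = lines[0].split(" ", 2)  # the reason phrase may contain spaces
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # field names are case-insensitive
    length = int(headers.get("content-length", len(rest)))
    return version, int(status), reason, headers, rest[:length]


raw = (
    b"HTTP/1.1 200 OK\r\n"
    b"Content-Type: text/html\r\n"
    b"Content-Length: 5\r\n"
    b"\r\n"
    b"hello"
)
print(parse_response(raw))
```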

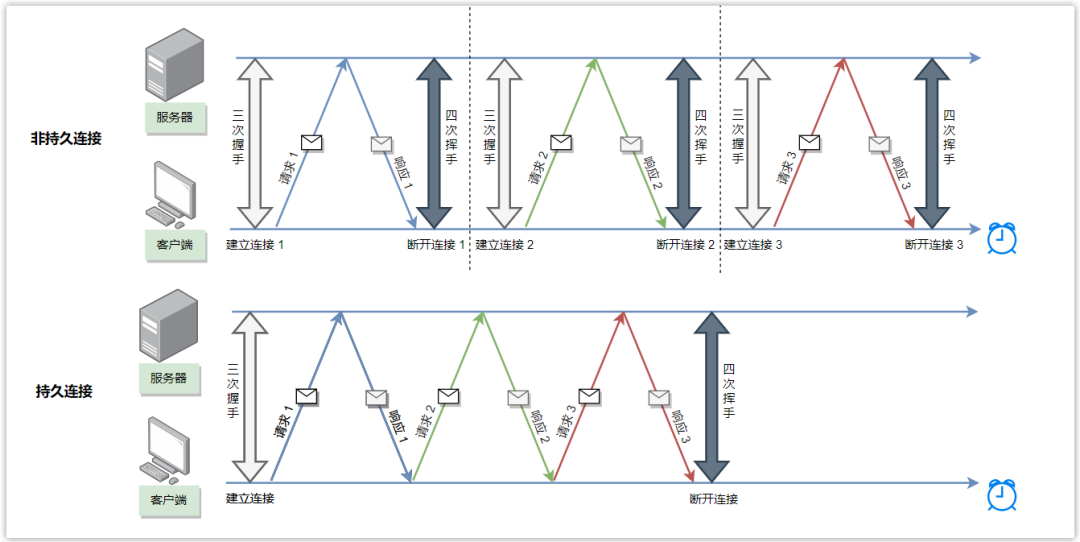

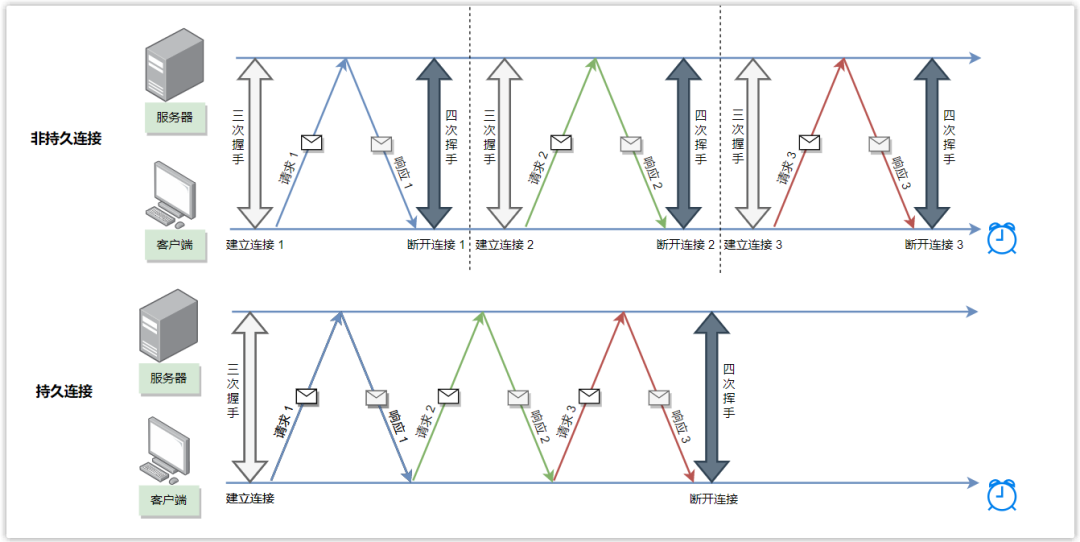

3. HTTP persistent connection

Suppose the client sends a series of requests to the server. If each request/response pair travels over its own TCP connection, the connection is called non-persistent, also known as a short connection. If they all travel over the same TCP connection, it is called a persistent connection, also known as a long connection.

For example, suppose a web page consists of one base HTML file and 2 pictures. If the client and the server transfer these 3 objects over the same TCP connection, it is a persistent connection. If each object is transferred over its own TCP connection, it is a non-persistent connection.

Disadvantages of non-persistent connections:

- Each new connection requires a three-way handshake, which lengthens the overall request-response time. This is not absolute: if multiple connections can send requests in parallel, the total response time may be shorter. For example, to speed up page loading, the Chrome browser can open 6 parallel connections at the same time, but parallel connections increase the burden on the web server.

- A new connection must be established and maintained for each requested object, and each connection requires the client and server to allocate TCP buffers and maintain TCP variables. This puts a serious burden on the web server, which may be serving requests from hundreds of different clients.

HTTP (1.1 and later) adopts the persistent connection mode by default, but it can also be configured as a non-persistent connection mode. Use the Connection field in the message to indicate whether to use a persistent connection.

- If the value of the Connection field is keep-alive, the connection is persistent. This is the default in HTTP/1.1 and later, so the field can be omitted.

- If the value of the Connection field is close, it indicates that the connection is to be closed.

Note: A persistent connection is not a permanent connection. Generally, after a configurable timeout interval, if the connection is still not in use, the HTTP server will close the connection.
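The decision rule can be written down in a few lines. The sketch below is an assumption-laden illustration: `is_persistent` is a made-up helper, and it also encodes the common convention that HTTP/1.0 connections close by default unless the client explicitly sends keep-alive.

```python
def is_persistent(version: str, headers: dict) -> bool:
    """Decide whether the connection stays open after the response."""
    normalized = {name.lower(): value for name, value in headers.items()}
    tokens = {
        token.strip().lower()
        for token in normalized.get("connection", "").split(",")
        if token.strip()
    }
    if "close" in tokens:
        return False  # an explicit close always wins
    if version == "HTTP/1.1":
        return True  # persistent by default
    return "keep-alive" in tokens  # HTTP/1.0 must opt in


print(is_persistent("HTTP/1.1", {}))
print(is_persistent("HTTP/1.1", {"Connection": "close"}))
```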

4. HTTP caching

For some resources that will not change in a short period of time, the client (browser) can cache the resources responded by the server locally after a request, and then directly read the local data without resending the request.

We often come across the concept of a cache. For example, because of the large speed gap between memory and the CPU, L1 and L2 caches were designed to further improve computer performance: the CPU fetches data from the cache first and goes to memory only if the data is not there.

Another example comes from back-end development. The database is generally stored on the hard disk and is slow to read, so an in-memory database such as Redis may be used as a cache: data is first fetched from Redis, and only if it is missing is it fetched from the database.

A third example comes from operating systems. Address translation through the page table is slow, so there is a TLB. When a logical address must be converted to a physical address, the faster TLB is queried first, and the page table is queried only on a miss. Here the TLB is a kind of cache.

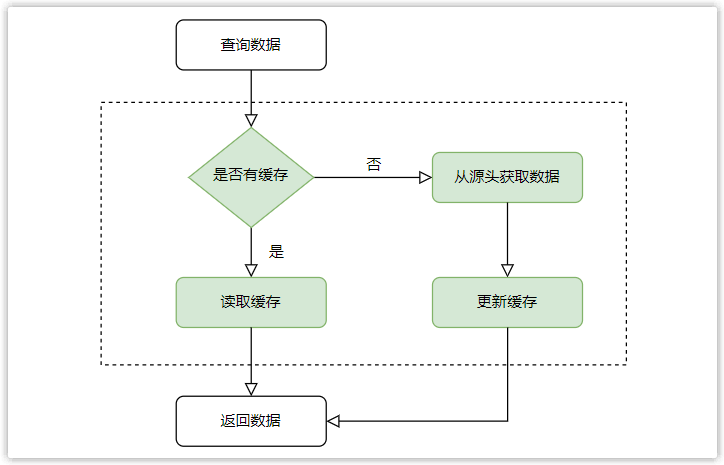

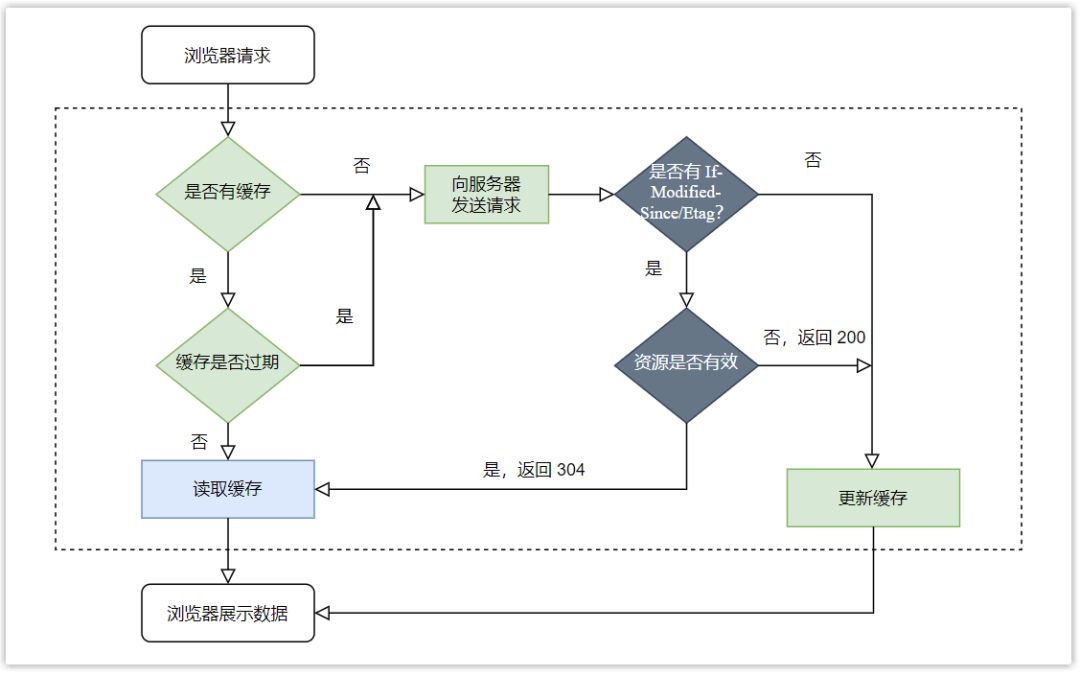

The main purpose of caching is to improve query speed, and the general logic is shown in the figure.

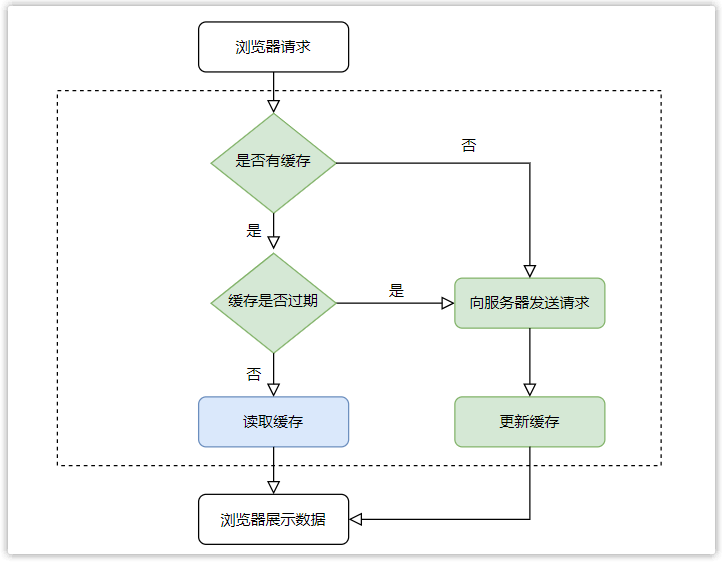

Similarly, there is also the concept of cache in HTTP design, mainly to speed up the response speed. The implementation of HTTP cache depends on some fields in the request message and response message, which are divided into strong cache and negotiation cache.

(1) Strong cache

Strong caching means that if the cached data is not invalid, the cached data of the browser will be used directly and no request will be sent to the server. The logic is similar to the L1 cache, Redis, and TLB fast table mentioned above.

The specific implementation is mainly through the Cache-Control field and the Expires field.

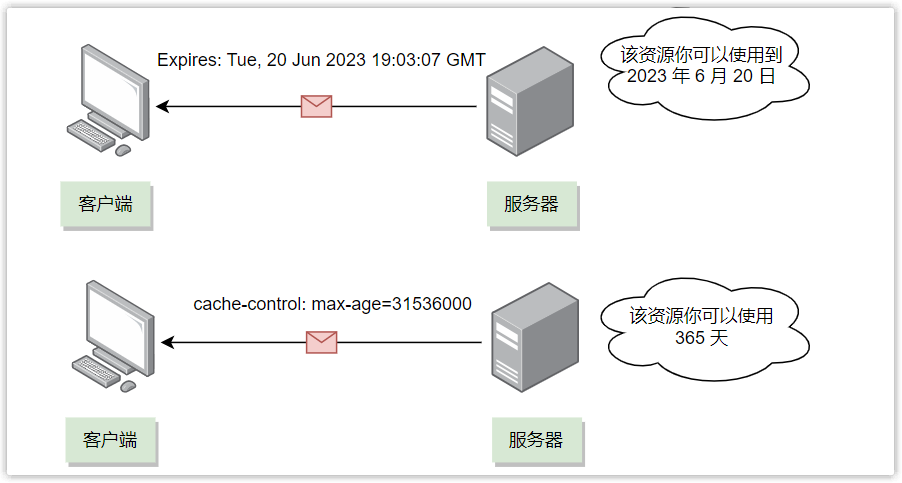

Cache-Control specifies a relative time (how long until expiry; introduced in HTTP/1.1), while Expires specifies an absolute time (expiry at a fixed point in time; from HTTP/1.0). If both fields are present, Cache-Control takes priority.

Because the server-side time and the client-side time may not be synchronized, there may be deviations, which may lead to time errors when using Expires, so it is generally recommended to use Cache-Control to implement strong caching.

Taking Cache-Control as an example, the specific implementation process of strong caching is as follows:

- When the browser requests to access server resources for the first time, the server will add Cache-Control to the response header. The following can be set in Cache-Control.

max-age=seconds: the cache expires after the specified number of seconds. For example, cache-control: max-age=31536000 means that the cache expires after 365 days.

no-store, means caching is not allowed (including strong caching and negotiation caching).

no-cache: do not use the strong cache, but use the negotiated cache. The cached copy must be validated with the server before each use; if it is still valid it is used, and otherwise the server returns the latest data. This is roughly equivalent to max-age=0, must-revalidate.

must-revalidate: caching is allowed. If the cache has not expired it is used directly, and once it has expired the client must ask the server whether it is still valid. Many readers may wonder: even without must-revalidate, once max-age has passed the cache is expired and the client goes to the server for validation, so what difference does must-revalidate make? The HTTP specification allows a client to use an expired cache directly in some special cases, such as when the validation request fails (for example, the server cannot be reached). With must-revalidate, a failed validation returns a 504 error code instead of the expired cache.

- When the browser requests to access the resource in the server again, it calculates whether the resource is expired according to the time of requesting the resource and the expiration time set in Cache-Control.

If there is no expiration (and Cache-Control does not set the no-cache attribute and no-store attribute), use the cache and end;

Otherwise, re-request the server;
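The freshness check above can be sketched as follows. It is a simplified illustration only: `parse_cache_control` and `can_use_without_revalidation` are names invented here, `age_seconds` is assumed to be the time since the response was stored, and directives beyond the three discussed are ignored.

```python
def parse_cache_control(value: str) -> dict:
    """Turn 'max-age=60, no-cache' into {'max-age': '60', 'no-cache': True}."""
    directives = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, arg = part.partition("=")
        directives[name.lower()] = arg.strip('"') if arg else True
    return directives


def can_use_without_revalidation(cache_control: str, age_seconds: float) -> bool:
    """True if the strong cache applies, so no request needs to be sent."""
    directives = parse_cache_control(cache_control)
    if "no-store" in directives or "no-cache" in directives:
        return False
    max_age = directives.get("max-age")
    if max_age is None or max_age is True:
        return False  # no usable lifetime was given
    return age_seconds < int(max_age)  # max-age is expressed in seconds


print(can_use_without_revalidation("max-age=31536000", age_seconds=3600))
print(can_use_without_revalidation("max-age=60", age_seconds=120))
```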

(2) Negotiation cache

Negotiated caching applies when, after the first request, the Cache-Control field of the server's response is set to no-cache, or the cached copy has expired. On the next request the browser negotiates with the server to determine whether the cached resource is still valid, that is, whether the resource has been modified since it was cached.

- If the resource is not updated, the server returns a 304 status code, indicating that the cache is still available, and there is no need to send the resource again, which reduces the data transmission pressure on the server and updates the cache time.

- If the resource has been updated, the server returns a 200 status code, and the new resource is carried in the response body.

Negotiation caching can be implemented in the following two ways:

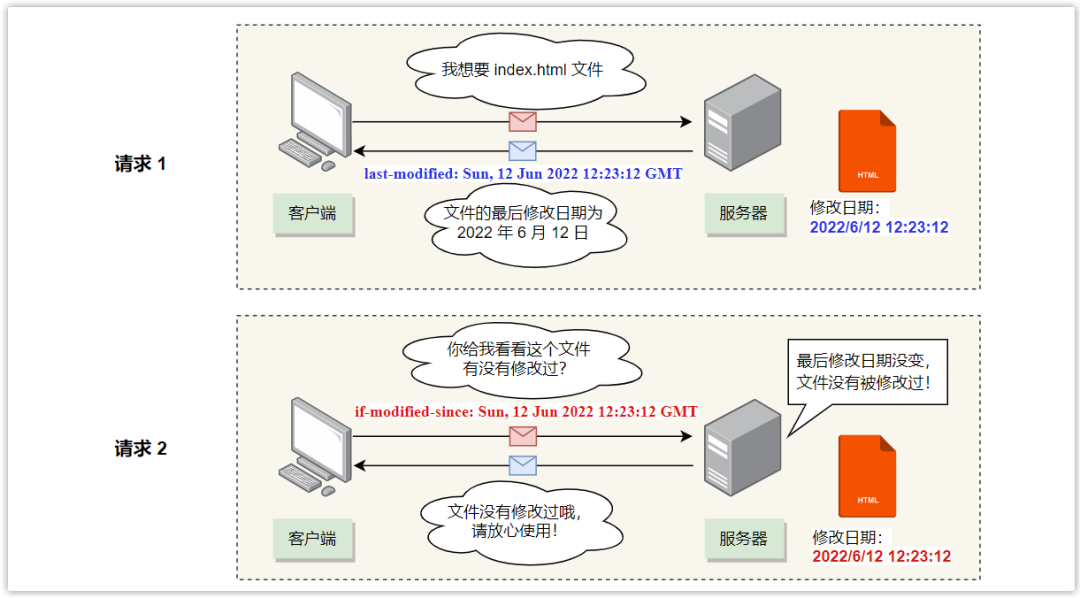

The first type (HTTP/1.0 specification): The If-Modified-Since field in the request header and the Last-Modified field in the response header:

- Last-Modified: Indicates the last modification time of this response resource. After the resource is requested for the first time, the server will include this information in the response header.

- If-Modified-Since: When the cached resource has expired and the browser requests it again, the browser sends the stored Last-Modified time in the If-Modified-Since request header. The server compares this time with the resource's last modification time:

If the resource's last modification time is later, the resource has been modified, so the server returns the latest resource and a 200 status code;

Otherwise, the resource has not been modified, and the server returns a 304 status code.

- This method has the following problems:

Because it is based on time, it can be unreliable when clocks are wrong, and it is only accurate to the second: a change made within the same second is invisible to Last-Modified.

If a file is rewritten without its content changing (for example, only the modification time changes), Last-Modified still changes, so the cache cannot be used even though it is still valid.

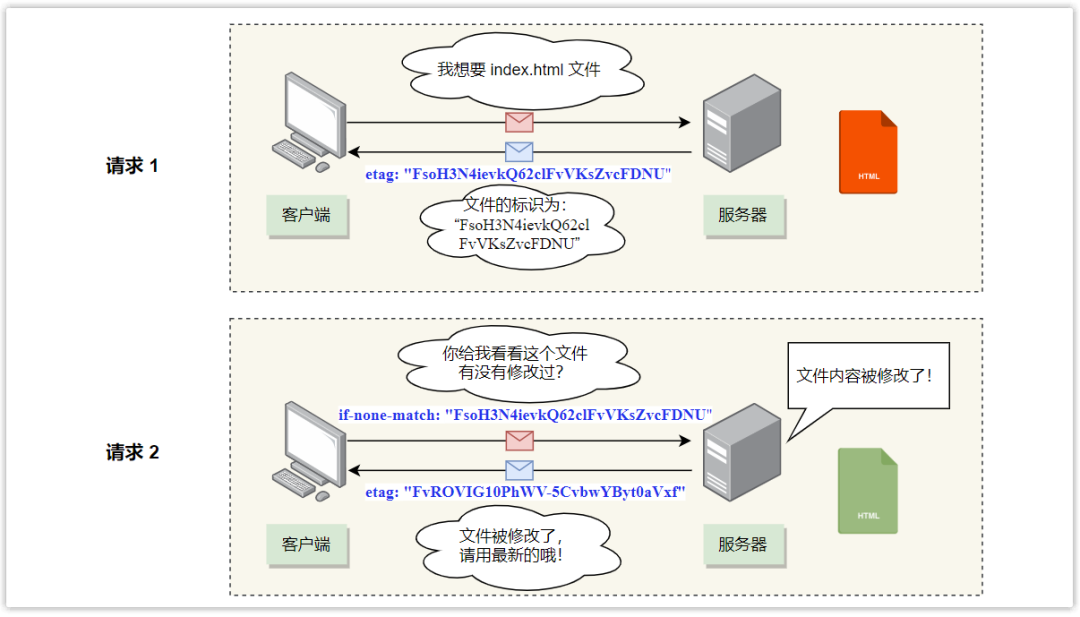

The second type (HTTP/1.1 specification): The If-None-Match field in the request header and the ETag field in the response header:

- Etag: uniquely identifies the response resource, which is a hash value; after the resource is requested for the first time, the server will carry this information in the response header.

- If-None-Match: When the resource expires and the browser initiates a request to the server again, it will set the If-None-Match value of the request header to the value in Etag. The server compares this value with the hash value of the resource,

If the two are equal, the resource has not changed, and a 304 status code is returned.

If the resource has changed, return the new resource and a 200 status code.

- The problem with this method is that calculating Etag will consume system performance, but it can solve the problems of the first method and is recommended.
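On the server side, the ETag comparison can be sketched like this. The helper names `make_etag` and `handle_conditional_get` are hypothetical, the truncated SHA-256 digest is just one possible way to derive a tag, and real servers also handle lists of tags, weak validators, and the `*` value.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive a quoted tag from the content, so identical content gives an identical tag."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def handle_conditional_get(body: bytes, request_headers: dict):
    """Return (status, headers, body) for a GET that may carry If-None-Match."""
    etag = make_etag(body)
    response_headers = {"ETag": etag}
    if request_headers.get("If-None-Match") == etag:
        return 304, response_headers, b""  # unchanged: no body is sent
    return 200, response_headers, body  # first visit or changed resource


status, headers, _ = handle_conditional_get(b"<html>v1</html>", {})
print(status, headers["ETag"])
print(handle_conditional_get(b"<html>v1</html>", {"If-None-Match": headers["ETag"]})[0])
```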

Notice:

- If the HTTP response header has both Etag and Last-Modified fields, Etag has a higher priority, that is, it will first determine whether Etag has changed, and if Etag has not changed, then look at Last-Modified.

- Ctrl + F5 forces a refresh, which bypasses the cache and fetches the data directly from the server.

- Pressing F5 or clicking the browser's refresh button adds Cache-Control: max-age=0 to the request by default, so the negotiated cache is used.

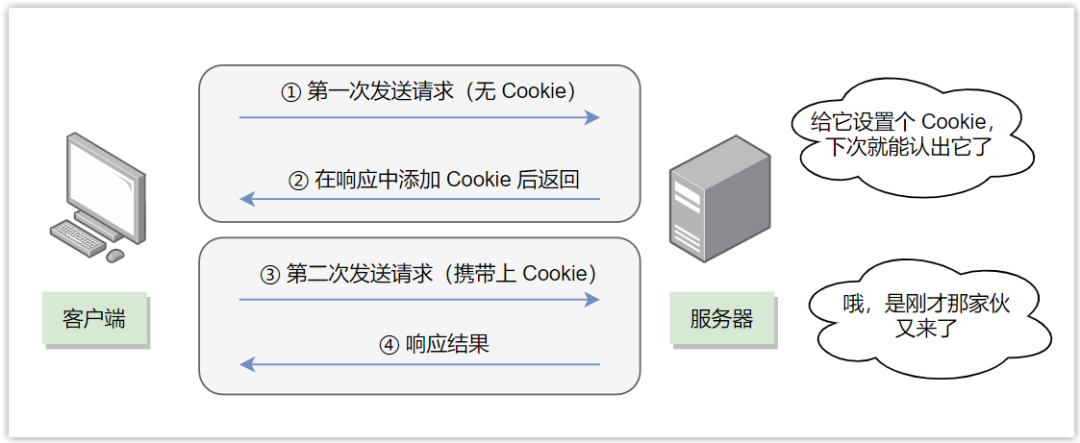

5. Cookies

HTTP is a stateless protocol, that is, it does not remember the communication state between the request and the response, so the web server cannot determine which user the request came from, and the HTTP protocol does not save any information about the user. The advantage of this design is that no additional resources are required to save user status information, which reduces the consumption of CPU and memory resources of the server.

But with the development of the Web, many businesses need to save user status.

- For example, an e-commerce website needs to save the user's login status when the user jumps to other product pages. Otherwise, users have to log in again every time they visit the website, which is too cumbersome and the experience effect will be poor.

- For example, a short video website hopes to record videos that users have watched before, so that they can accurately recommend videos of interest to them later.

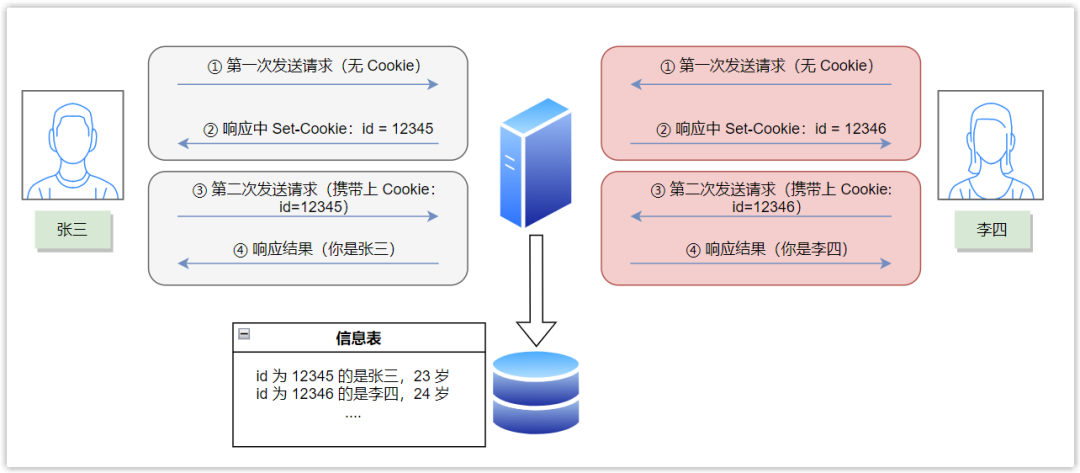

Cookies were introduced to maintain state. A cookie (a credential issued by the server) is like the membership card we get at a shopping mall (a credential issued by the merchant), which records our identity. As long as we show the membership card, the mall staff can confirm who we are. Similarly, as long as a cookie is sent along with a request, the server knows who we are.

A cookie can contain arbitrary information, most commonly a unique identifier generated by the server for tracking purposes.

For example:

After Zhang San makes his first request, the server records his state information, such as name, age, address, and shopping history, and uses the Set-Cookie response header to give him a unique identifier, id=12345, as a cookie. When he sends another request, the browser automatically includes id=12345 in the Cookie field of the request message, and the server uses it to look up Zhang San's information, which achieves state maintenance.

Cookie properties:

- max-age: How long until the cookie expires (relative time, in seconds).

Negative number: In APIs such as the Java Servlet Cookie class, a negative value (the default there is -1) means the cookie becomes invalid when the browser is closed. In the raw Set-Cookie header, that behavior is obtained by omitting both Max-Age and Expires.

Positive number: expiration time = creation time + max-age.

0: The cookie is deleted immediately, that is, it expires at once.

- expires: The expiration time as an absolute date and time.

- secure: The cookie is sent only over HTTPS.

- HttpOnly: After setting, it cannot be accessed by using JavaScript scripts, which can ensure security and prevent attackers from stealing user cookies.

- domain: Indicates which domain the cookie is valid for. (By default, cookies cannot be accessed across domains, but subdomains of the same domain can share cookies.)

The disadvantage of cookies is that if more status information is transmitted, the packet will be too large, which will reduce the efficiency of network transmission.

Browsers generally limit the size of a cookie to about 4 KB.
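The Set-Cookie and Cookie exchange can be sketched with Python's standard `http.cookies` module. The helper names `make_set_cookie` and `read_cookie` are invented for this example, and the attribute values (a one-hour lifetime, HttpOnly, Secure) are arbitrary choices for illustration.

```python
from http.cookies import SimpleCookie


def make_set_cookie(user_id: str) -> str:
    """Server side: build the value of a Set-Cookie response header."""
    cookie = SimpleCookie()
    cookie["id"] = user_id
    cookie["id"]["max-age"] = 3600  # relative lifetime in seconds
    cookie["id"]["httponly"] = True  # hide the cookie from JavaScript
    cookie["id"]["secure"] = True  # send it only over HTTPS
    return cookie["id"].OutputString()


def read_cookie(header_value: str, name: str):
    """Server side: read one cookie from the Cookie request header, or None."""
    cookie = SimpleCookie()
    cookie.load(header_value)
    morsel = cookie.get(name)
    return morsel.value if morsel else None


print(make_set_cookie("12345"))
print(read_cookie("id=12345; theme=dark", "id"))
```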

6. HTTP version

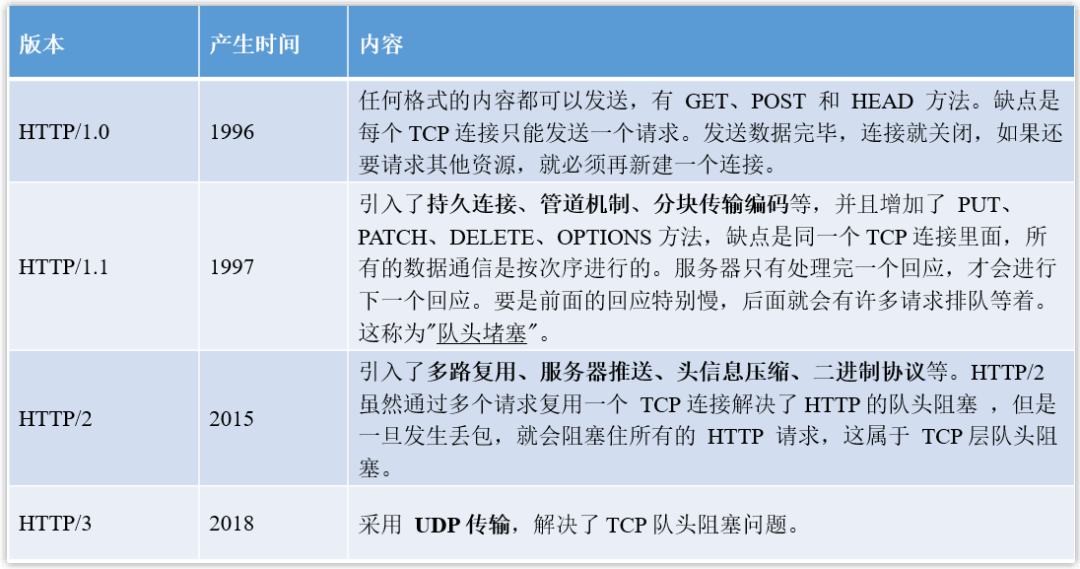

As the Internet has developed, HTTP has kept evolving. The following introduces the improvements that HTTP/1.1, HTTP/2, and HTTP/3 each make over the previous version.

(1) HTTP/1.1 performance improvement compared to HTTP/1.0

HTTP/1.1 is currently the most common version of HTTP, and it has the following improvements compared to HTTP/1.0.

① Persistent connection

As mentioned earlier, a TCP connection in HTTP/1.0 can carry only one request and response. HTTP/1.1 improves on this: the same TCP connection can carry multiple HTTP requests, which reduces the performance overhead of establishing and closing connections.

Web server software generally provides a parameter, such as keepalive_timeout in nginx, to specify the timeout for HTTP persistent connections. For example, if the timeout is set to 60 seconds, the server starts a timer after each HTTP request completes. If no new request arrives within 60 seconds, a callback is triggered to release the connection.

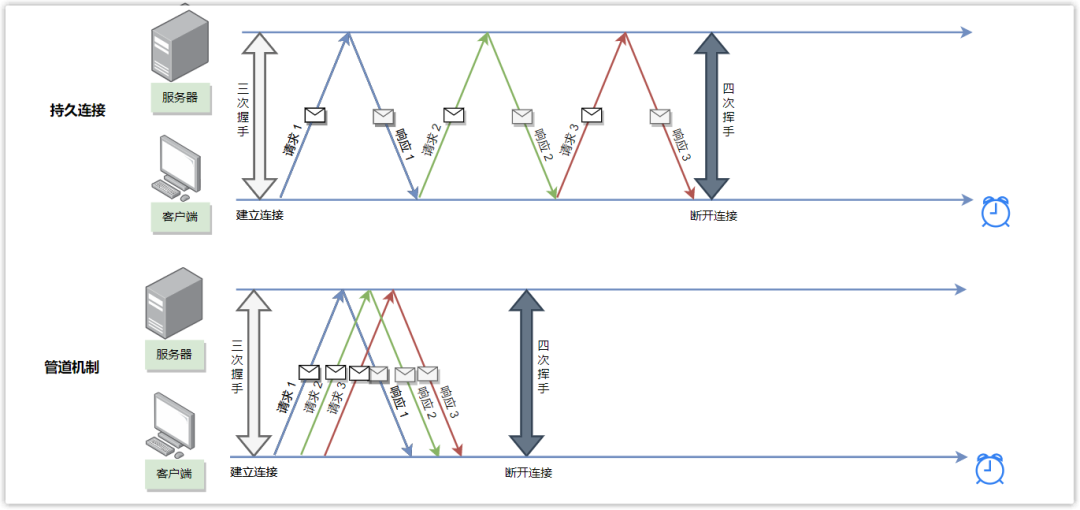

② Pipeline mechanism

Although a persistent connection lets multiple requests reuse the same connection, each request must wait until the response to the previous one has completed before it can be sent.

In the pipeline mechanism, as long as the first request is sent out, the second request can be sent out without waiting for it to come back, which is equivalent to sending out multiple requests at the same time, thus reducing the overall response time.

Although the client can send multiple HTTP requests at the same time without waiting for a response one by one, the server must send responses to these pipelined requests in the order in which the requests are received to ensure that the client can distinguish the response content of each request. This has the following problems:

If the server takes a long time to process a request, the processing of subsequent requests will be blocked, which will cause the client to not receive data for a long time, which is called "head-of-line blocking".

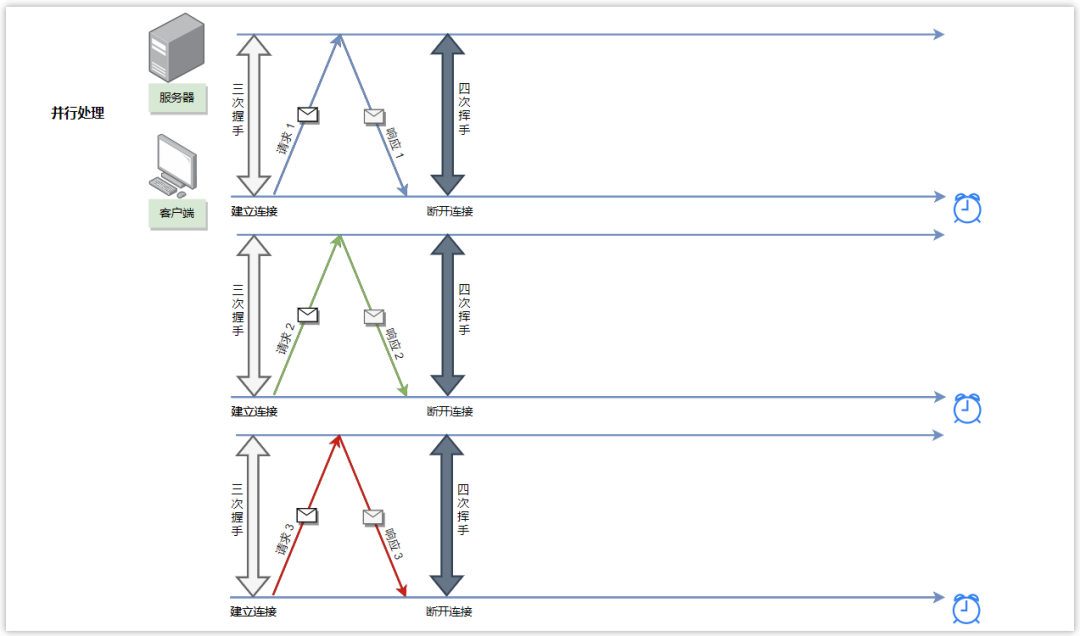

In fact, while pipelining is a good idea, it is so difficult to implement that many browsers do not support it at all. In practice, multiple parallel TCP connections are generally used to send requests at the same time and improve performance.
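A tiny simulation makes head-of-line blocking concrete. It rests on a deliberately simple model that is an assumption of this sketch: the server starts working on all requests at time 0, each takes the given processing time, and with pipelining a response cannot be sent before all earlier responses. The function names are made up for the example.

```python
from itertools import accumulate


def pipelined_finish_times(processing_times):
    """In-order responses: each one waits for the slowest request ahead of it."""
    return list(accumulate(processing_times, max))


def parallel_finish_times(processing_times):
    """Separate connections: each response is sent as soon as it is ready."""
    return list(processing_times)


times = [5, 1, 1]  # the first request is slow
print(pipelined_finish_times(times))
print(parallel_finish_times(times))
```

With a slow first request, the two fast requests behind it finish no earlier than the slow one under pipelining, while on separate connections they finish on their own schedule.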

③ cache control

As mentioned above, HTTP/1.1 adds several request and response headers on top of HTTP/1.0 for finer cache control. For example:

- Cache-Control is added and takes priority over the original Expires;

- If-None-Match and ETag are added to complement the original If-Modified-Since and Last-Modified.

④ Breakpoint resume

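HTTP/1.1 adds the Range request header, so a client can request only part of a resource and resume an interrupted transfer from where it stopped; the server answers such a request with a 206 Partial Content status. HTTP/1.1 also adds chunked transfer encoding, which lets the entity body be sent in chunks.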
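Resuming a transfer from a breakpoint builds on the Range request header listed in the request header table. The sketch below shows one way a server could answer a single byte range; `serve_range` is a hypothetical helper, and multi-range and suffix forms are deliberately left unsupported and fall back to the full body.

```python
def serve_range(data: bytes, range_header: str):
    """Return (status, headers, body) for a 'bytes=start-end' or 'bytes=start-' range."""
    unit, _, spec = range_header.partition("=")
    if unit.strip() != "bytes" or "," in spec:
        return 200, {}, data  # unsupported form: send the whole resource
    start_text, _, end_text = spec.strip().partition("-")
    if not start_text:
        return 200, {}, data  # suffix ranges such as 'bytes=-500' are not handled here
    start = int(start_text)
    end = int(end_text) if end_text else len(data) - 1
    end = min(end, len(data) - 1)  # the last byte position is inclusive
    if start > end:
        return 416, {"Content-Range": f"bytes */{len(data)}"}, b""
    headers = {"Content-Range": f"bytes {start}-{end}/{len(data)}"}
    return 206, headers, data[start:end + 1]


data = b"0123456789"
print(serve_range(data, "bytes=0-3"))
print(serve_range(data, "bytes=4-"))  # resume from byte 4 to the end
```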

(2) HTTP/2 performance improvement compared to HTTP/1.1

In practice HTTP/2 is almost always used over HTTPS, because mainstream browsers only support it over an encrypted connection, so it is more secure. Compared with HTTP/1.1, it has the following improvements.

① Header compression

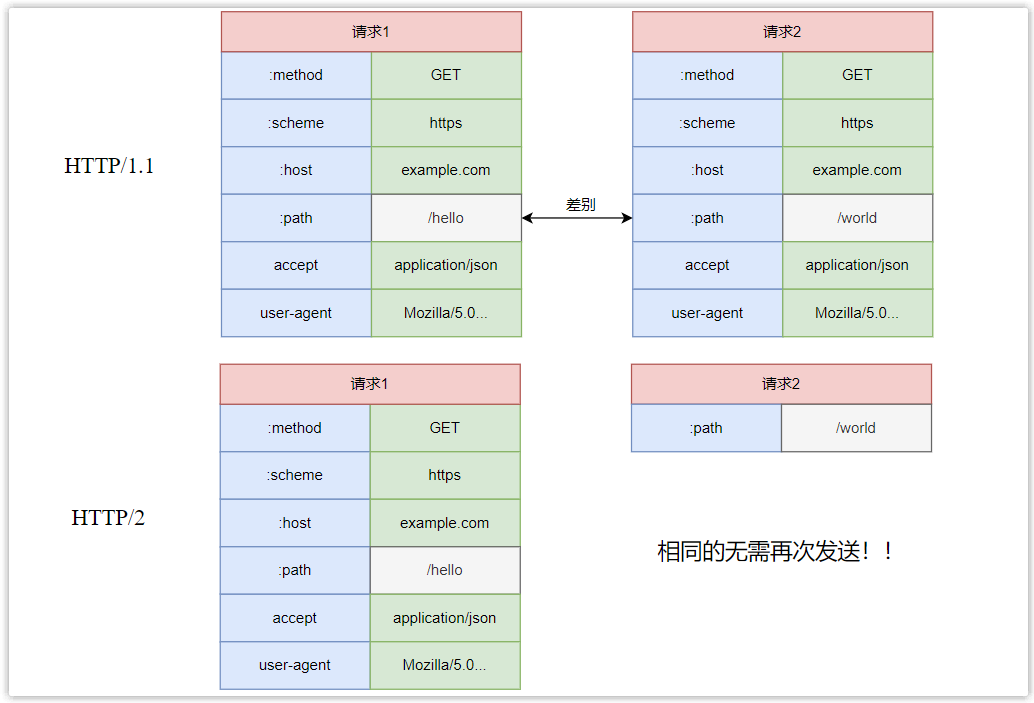

The request header in HTTP/1.1 carries a lot of information, and it has to be sent repeatedly every time. Even if it is the same content, it needs to be attached to each request, which will cause performance loss. HTTP/2 has been optimized and introduced a header compression mechanism.

The client and the server maintain a table of header information at the same time, and fields that appear frequently will be stored in this table to generate an index number. Directly use the index number to replace the field when sending the message. In addition, fields that do not exist in the index table are compressed using Huffman encoding.

At the same time, in multiple requests, if the request headers are the same, only the different parts need to be sent in subsequent requests, and the repeated parts do not need to be sent again.

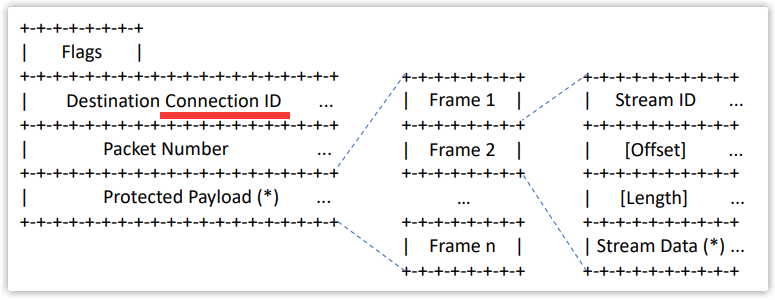
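The index-table idea can be illustrated with a toy encoder. This is not the real HTTP/2 compression format: it only shows how two sides that keep identical tables can replace a repeated field with a small index number. All names here (`HeaderTable`, `encode`, `decode`) are invented for the sketch, and Huffman coding is left out.

```python
class HeaderTable:
    """A table of (name, value) fields that both sides build in the same order."""

    def __init__(self):
        self.entries = []  # index -> field
        self.index_of = {}  # field -> index

    def add(self, field):
        self.index_of[field] = len(self.entries)
        self.entries.append(field)


def encode(headers, table):
    """Send an index for known fields and the literal field for new ones."""
    encoded = []
    for field in headers:
        if field in table.index_of:
            encoded.append(table.index_of[field])
        else:
            encoded.append(field)
            table.add(field)  # remember it for later requests
    return encoded


def decode(encoded, table):
    """Rebuild the headers, growing the table exactly as the sender did."""
    headers = []
    for item in encoded:
        if isinstance(item, int):
            headers.append(table.entries[item])
        else:
            headers.append(item)
            table.add(item)
    return headers


sender, receiver = HeaderTable(), HeaderTable()
headers = [("user-agent", "demo/1.0"), ("accept", "text/html")]
first = encode(headers, sender)  # literals on the first request
second = encode(headers, sender)  # only index numbers on the second
print(first, second)
print(decode(first, receiver) == decode(second, receiver) == headers)
```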

② Binary frame

The HTTP/1.1 message is in plain text format, while the HTTP/2 message is fully in binary format, and the original message is split into a header frame (Headers Frame) and a data frame (Data Frame). The use of binary format is beneficial to improve the efficiency of data transmission.

③ Multiplexing

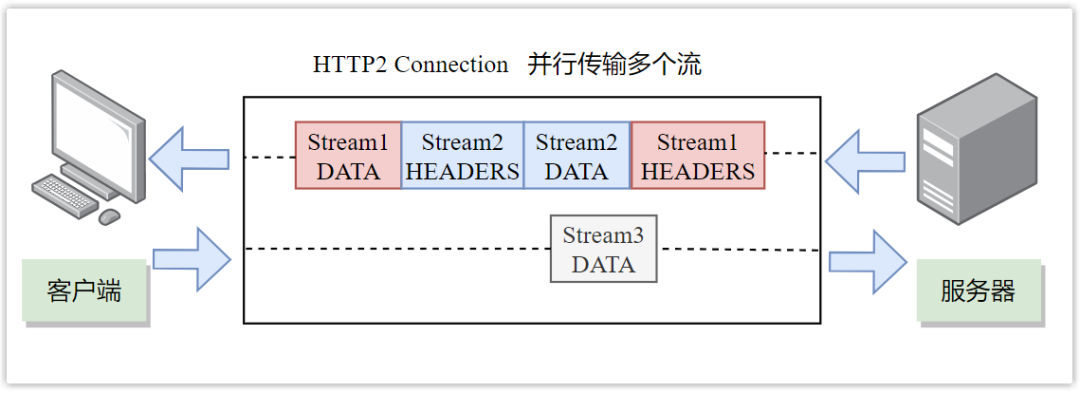

HTTP/2 defines the concept of a stream, which is a bidirectional sequence of binary frames. One stream corresponds to one complete request-response exchange, and all frames sent back and forth within that exchange carry the same unique stream number (Stream ID).

With streams, HTTP/2 can carry multiple requests and responses concurrently over one TCP connection without matching them one-to-one in order (this is multiplexing). Because they belong to different streams and every frame header carries a Stream ID, the frames of different request-response exchanges can be told apart.

Therefore, HTTP/2 solves the "head-of-line blocking" problem of HTTP/1.1 at the HTTP layer. There is no ordering relationship between multiple requests and responses, and no need to wait in line, which reduces delay and greatly improves connection utilization.

For example:

On one TCP connection, the server receives request A and request B at the same time and starts responding to A first. Processing A turns out to be very time-consuming, so the server sends the part of A's response that is ready, then responds to B, and finally sends the rest of A's response.

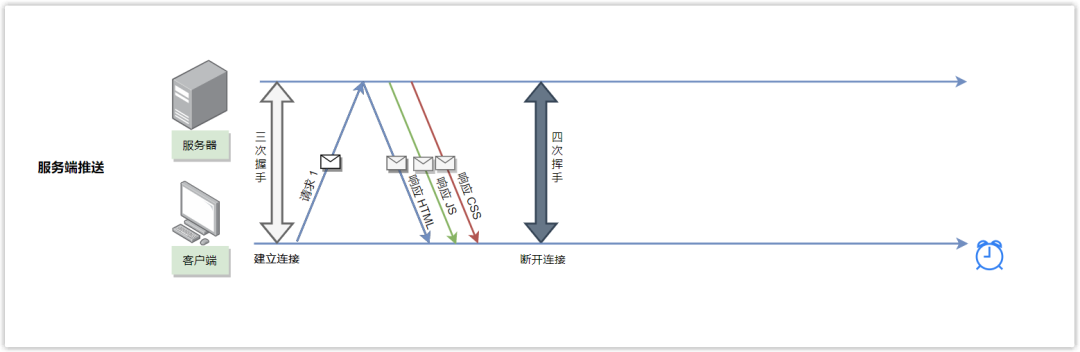
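The role of the Stream ID can be shown with a small model of framing. It is only a sketch: frames are modeled as `(stream_id, chunk)` tuples rather than real binary frames, and `to_frames`, `interleave`, and `reassemble` are hypothetical helper names.

```python
from itertools import zip_longest


def to_frames(stream_id: int, payload: bytes, frame_size: int):
    """Cut one message into frames that all carry the same stream ID."""
    return [
        (stream_id, payload[i:i + frame_size])
        for i in range(0, len(payload), frame_size)
    ]


def interleave(*streams):
    """Mix the frames of several streams onto one connection, round-robin."""
    wire = []
    for group in zip_longest(*streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire):
    """Receiver side: group frames by stream ID to recover each message."""
    bodies = {}
    for stream_id, chunk in wire:
        bodies[stream_id] = bodies.get(stream_id, b"") + chunk
    return bodies


response_a = to_frames(1, b"AAAAAA", 2)  # a long response on stream 1
response_b = to_frames(3, b"BB", 2)  # a short response on stream 3
wire = interleave(response_a, response_b)
print(wire)
print(reassemble(wire))
```

The short response on stream 3 arrives in the middle of the long one on stream 1, yet the receiver can still rebuild both because every frame names its stream.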

④ Server push

In HTTP/1.1, only the client can initiate a request, and the server can only respond to it.

In HTTP/2, the server can actively push necessary resources to the client to reduce request latency.

For example, when the client requests an HTML file, the server not only returns the HTML file but also proactively pushes the JS and CSS files that the HTML depends on, so that when the client parses the HTML it does not need extra requests to fetch them.

(3) Performance improvement of HTTP/3 compared to HTTP/2

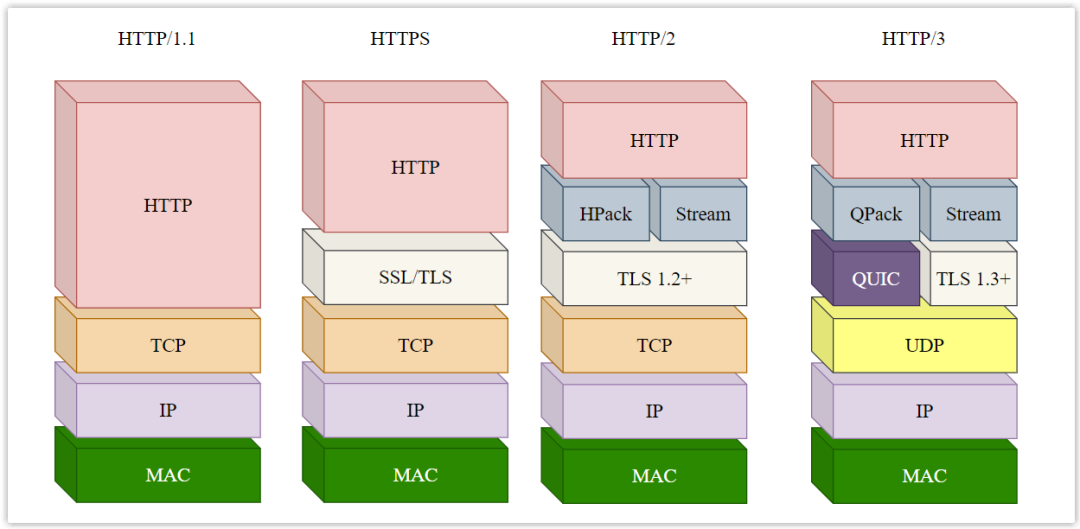

In order to solve some problems existing in HTTP/2, Google proposed the QUIC protocol, and HTTP-over-QUIC is HTTP/3, which has the following improvements compared to HTTP/2.

① No head-of-line blocking

As mentioned earlier, HTTP/2 solves the "head-of-line blocking" problem of HTTP/1.1 through multiplexing, but only at the HTTP level. Underneath, it still uses a TCP connection, and HTTP/2 does not solve TCP's own "head-of-line blocking" problem.

TCP is a reliable, byte-stream-oriented protocol that delivers data strictly in order. Although multiple HTTP/2 requests can share one TCP connection, if a packet is lost, TCP has to retransmit it, and all streams on that connection may be blocked until the lost packet has been resent and received successfully. This is TCP's "head-of-line blocking" problem.

To solve this problem, HTTP/3 no longer runs on top of TCP but on top of UDP! UDP is connectionless and imposes no ordering of its own, and QUIC keeps the streams independent of one another. Therefore, even if packets belonging to one stream are lost, only that stream is affected and the other streams are not blocked!
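
The difference can be made concrete with a small simulation. The sketch below is a simplified model, not real TCP or QUIC: the packet tuples, the stream names, and both function names are made up for illustration. It compares what a receiver can hand to the application when one packet is lost, first with a single ordered byte stream and then with independently ordered streams.

```python
# Each packet: (order on the wire, stream name, chunk index within its stream).
packets = [
    (0, "A", 0),
    (1, "B", 0),
    (2, "A", 1),  # this packet is lost
    (3, "B", 1),
    (4, "C", 0),
]
lost = {2}


def deliverable_single_ordered_stream(packets, lost):
    """TCP-like: one ordered byte stream, so delivery stops at the first gap."""
    delivered = []
    for order, stream, chunk in packets:
        if order in lost:
            break  # everything behind the gap waits for the retransmission
        delivered.append((stream, chunk))
    return delivered


def deliverable_independent_streams(packets, lost):
    """QUIC-like: order is tracked per stream, so a gap stalls only its own stream."""
    delivered = []
    blocked = set()
    for order, stream, chunk in packets:
        if order in lost:
            blocked.add(stream)
        elif stream not in blocked:
            delivered.append((stream, chunk))
    return delivered


print(deliverable_single_ordered_stream(packets, lost))
# [('A', 0), ('B', 0)]
print(deliverable_independent_streams(packets, lost))
# [('A', 0), ('B', 0), ('B', 1), ('C', 0)]
```

In the first case streams B and C are held back by a loss that belongs to stream A; in the second case only stream A waits.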

At this point, some readers may ask: if HTTP/3 does not use TCP underneath, how is reliable transmission guaranteed? The answer is that QUIC, the protocol HTTP/3 runs on, reimplements the reliability mechanisms itself on top of UDP. In other words, HTTP/3 moves some of the functions originally provided by TCP into QUIC and improves on them.

② Optimize the retransmission mechanism

TCP uses sequence numbers, acknowledgment numbers, and timeout-based retransmission to ensure reliable delivery: if a message has not been acknowledged within a certain period of time, it is sent again.

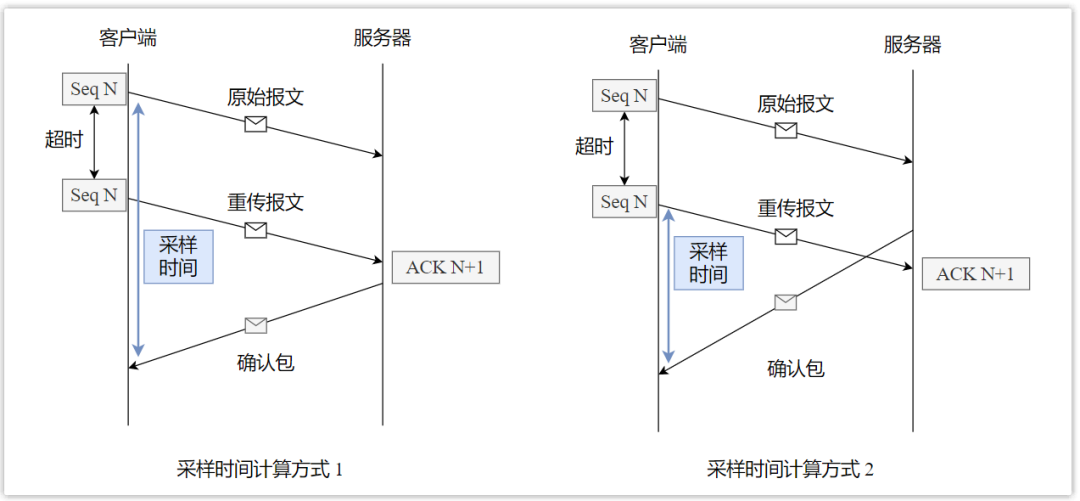

Because network congestion changes constantly, the timeout is not fixed; it is adjusted continuously by sampling the round-trip time of messages. However, TCP's round-trip time sampling can be inaccurate.

For example:

The client sends a packet with sequence number N. The packet times out (it may have been lost, or the network may be congested), so the client resends a packet with sequence number N. After receiving it, the server returns an acknowledgment packet whose ACK number is N+1. At this point, however, the client cannot tell whether the acknowledgment is for the original packet or for the retransmitted one, so how should the round-trip time be calculated?

- If the acknowledgment is treated as a response to the original packet, the measured time may be too long;

- If the acknowledgment is treated as a response to the retransmitted packet, the measured time may be too short.

Therefore, TCP's calculation of the retransmission timeout is inaccurate. If the timeout is too large, lost packets are retransmitted only after a long wait and efficiency suffers. If it is too small, a packet may be retransmitted even though its acknowledgment is already on the way!

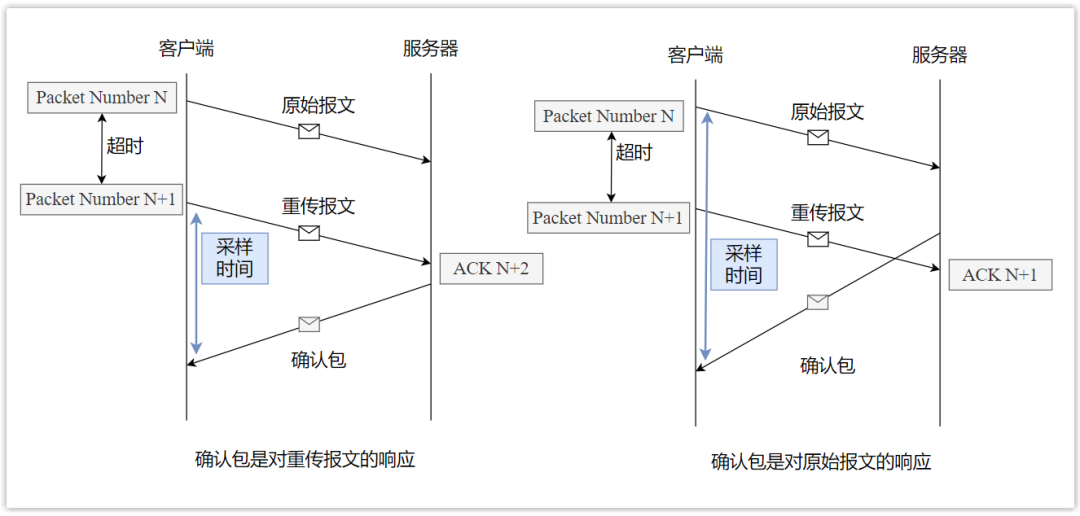
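
A small numeric sketch shows how much the two interpretations can differ. The timeline values below are made up for illustration. The smoothing function follows the standard TCP estimator (RFC 6298) with its usual constants of 1/8 and 1/4, but ignores clock granularity and the minimum timeout, so it should be read as a simplified model and not as what any particular TCP stack does.

```python
ALPHA = 1 / 8  # weight of a new sample in the smoothed RTT
BETA = 1 / 4   # weight of a new sample in the RTT variance


def update_timeout(srtt, rttvar, sample):
    """Fold one RTT sample into the estimator; return (srtt, rttvar, timeout)."""
    rttvar = (1 - BETA) * rttvar + BETA * abs(srtt - sample)
    srtt = (1 - ALPHA) * srtt + ALPHA * sample
    return srtt, rttvar, srtt + 4 * rttvar


# Hypothetical timeline in milliseconds.
original_sent_at = 0
retransmit_sent_at = 300
ack_received_at = 320

# Interpretation 1: the ACK answers the original packet.
rtt_if_original = ack_received_at - original_sent_at
# Interpretation 2: the ACK answers the retransmitted packet.
rtt_if_retransmit = ack_received_at - retransmit_sent_at

print(rtt_if_original, rtt_if_retransmit)  # 320 20

# Starting from the same estimator state, the two guesses lead to
# different retransmission timeouts.
_, _, timeout_a = update_timeout(srtt=100, rttvar=10, sample=rtt_if_original)
_, _, timeout_b = update_timeout(srtt=100, rttvar=10, sample=rtt_if_retransmit)
print(timeout_a, timeout_b)
```

Whichever interpretation the sender picks, a wrong guess feeds a wrong sample into the estimator, and the resulting timeout drifts away from what the network actually needs.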

How does QUIC solve this problem? It defines a strictly increasing sequence number (called the Packet Number rather than Seq). Each packet number is used only once: even when the same data is retransmitted, it is sent with a new packet number.

For example:

The client sends a packet with packet number N. The packet times out, so the client resends the same data, but the packet number is now N+1 instead of N. If the returned acknowledgment is ACK N+1, it is the response to the original packet; if it is ACK N+2, it is the response to the retransmitted packet. The round-trip time sample is therefore much more accurate!

Then how do we know that packet N and packet N+1 carry the same data? QUIC defines the concept of an Offset. Every piece of data sent has an offset that marks its position in the stream, so two packets with the same offset carry the same data, and if the data at a certain offset has not been acknowledged, it is sent again.
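
The idea of separating "which packet was this" (Packet Number) from "which data was this" (Offset) can be sketched as follows. The class `QuicLikeSender` and its methods are hypothetical and heavily simplified. As an assumption of this sketch, the acknowledgment simply names the packet number it confirms, instead of following the N+1 / N+2 convention used in the example above.

```python
class QuicLikeSender:
    """Toy sender: packet numbers never repeat, offsets identify the data."""

    def __init__(self):
        self.next_packet_number = 0
        self.sent = {}  # packet number -> (send time, stream offset, data)

    def send(self, now, offset, data):
        """Send data under a fresh packet number, even for a retransmission."""
        number = self.next_packet_number
        self.next_packet_number += 1
        self.sent[number] = (now, offset, data)
        return number

    def on_ack(self, now, packet_number):
        """Return (RTT sample, offset of the acknowledged data)."""
        sent_at, offset, _ = self.sent.pop(packet_number)
        return now - sent_at, offset


sender = QuicLikeSender()
first = sender.send(now=0, offset=0, data=b"hello")    # original transmission
retry = sender.send(now=300, offset=0, data=b"hello")  # same data, new number

# The acknowledgment identifies the retransmitted packet unambiguously.
rtt, offset = sender.on_ack(now=320, packet_number=retry)
print(first, retry, rtt, offset)  # 0 1 20 0
```

Because the two transmissions have different packet numbers, the sender knows exactly which send time to subtract, while the shared offset still tells it that both packets carried the same data.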

③ Connection Migration

As we all know, a TCP connection is identified by a four-tuple: source IP, source port, destination IP, and destination port. If any one of these elements changes, the connection must be torn down and re-established.

When the mobile signal is unstable, or when the device switches between Wi-Fi and the mobile network, the connection has to be re-established. Reconnecting means performing the three-way handshake again, which adds latency; the user perceives the application as stuck, and the experience suffers.

QUIC, however, does not use the four-tuple to identify a connection. Instead, it uses a 64-bit random number as a connection ID to identify the two ends of the communication. Even if the network changes and the IP address or port changes, as long as the connection ID stays the same there is no need to reconnect; the original connection is simply reused. Latency stays low, the user notices less stuttering, and connection migration is achieved.
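
The contrast between the two ways of identifying a connection can be sketched as a pair of lookup tables. All addresses, ports, the connection ID value, and the function names below are made up for illustration; a real server keeps far more state per connection.

```python
# Server-side connection tables (hypothetical, simplified).
# TCP-like: keyed by (source IP, source port, destination IP, destination port).
tcp_like_table = {
    ("10.0.0.5", 50000, "203.0.113.10", 443): "session-state",
}
# QUIC-like: keyed by a connection ID (a 64-bit value, following the text above).
quic_like_table = {
    0x1A2B3C4D5E6F7081: "session-state",
}


def lookup_by_four_tuple(table, src_ip, src_port, dst_ip, dst_port):
    """Find a connection by its four-tuple; None means a new handshake is needed."""
    return table.get((src_ip, src_port, dst_ip, dst_port))


def lookup_by_connection_id(table, connection_id):
    """Find a connection by its ID, regardless of the packet's source address."""
    return table.get(connection_id)


# The client switches from Wi-Fi to the mobile network: its IP and port change.
after_switch_tcp = lookup_by_four_tuple(
    tcp_like_table, "198.51.100.7", 41000, "203.0.113.10", 443
)
after_switch_quic = lookup_by_connection_id(quic_like_table, 0x1A2B3C4D5E6F7081)

print(after_switch_tcp)   # None
print(after_switch_quic)  # session-state
```

With the four-tuple as the key, the packet from the new address matches nothing and the connection must be rebuilt; with the connection ID as the key, the existing session is found again.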

(4) Summary

This article is reproduced from the WeChat public account "Yi Feng Shuo Code", written by the author "Yi Feng Shuo Code".

To reprint this article, please contact the official account of "Yifeng Shuoma".