Learn Kubernetes overlay network from Flannel

Flannel Introduction

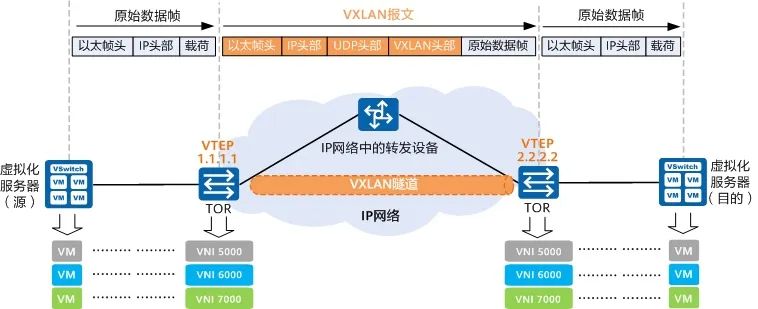

Flannel is a simple VXLAN-based overlay network and one of the CNI options for Kubernetes networking. It runs a lightweight agent, flanneld, on each host; the agent watches for node changes in the cluster and pre-configures the address space. Flannel also creates a vtep (VXLAN tunnel endpoint) named flannel.1 on each host and connects it to the other hosts through VXLAN tunnels.

The vtep listens on UDP port 8472 and exchanges data with the vteps of other nodes. A Layer 2 frame that arrives at a vtep is sent unmodified inside a UDP datagram to the peer vtep, which strips the outer headers and processes the original Layer 2 frame. Simply put, Layer 4 UDP is used to carry Layer 2 data frames.
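To make the "Layer 2 frame inside a UDP datagram" idea concrete, here is a minimal Python sketch of the 8-byte VXLAN header layout from RFC 7348. It assumes VNI 1 (suggested by the device name flannel.1) and uses a dummy inner frame; the function names are illustrative only, and in a real cluster the kernel does this work rather than user code.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348: the VNI field is valid


def vxlan_encapsulate(inner_frame: bytes, vni: int = 1) -> bytes:
    """Prefix an Ethernet frame with the 8-byte VXLAN header.

    The result is what would travel as the UDP payload between two vteps.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits.
    # Word 2: VNI (24 bits) + 8 reserved bits.
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(udp_payload: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner Ethernet frame)."""
    word1, word2 = struct.unpack("!II", udp_payload[:8])
    if not (word1 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word2 >> 8, udp_payload[8:]


# Dummy inner frame: destination MAC, source MAC, EtherType 0x0800 (IPv4), payload.
frame = bytes.fromhex("0a01c56f5767" "cab4a9830fd4" "0800") + b"inner IP packet"
payload = vxlan_encapsulate(frame, vni=1)
vni, recovered = vxlan_decapsulate(payload)
print(len(payload) - len(frame), vni, recovered == frame)
```

The inner frame comes back byte for byte, which is the sense in which the frame is transmitted "intact".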

vxlan-tunnel

Flannel is the default CNI implementation in the Kubernetes distribution K3S[1]. K3S embeds flannel and runs it as a goroutine after startup.

Environment build

The Kubernetes cluster uses the k3s distribution. When installing the cluster, however, we disable the flannel that is built into k3s and install flannel separately for the verification.

First install the CNI plugins: run the following commands on every node to download the official CNI binaries.

sudo mkdir -p /opt/cni/bin
curl -sSL https://github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz | sudo tar -zxf - -C /opt/cni/bin

Install the k3s control plane.

export INSTALL_K3S_VERSION=v1.23.8+k3s2
curl -sfL https://get.k3s.io | sh -s - --disable traefik --flannel-backend=none --write-kubeconfig-mode 644 --write-kubeconfig ~/.kube/config

Install Flannel. Note that Flannel's default Pod CIDR is 10.244.0.0/16; we change it to 10.42.0.0/16, the k3s default.

curl -s https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml | sed 's|10.244.0.0/16|10.42.0.0/16|g' | kubectl apply -f -

Add another node to the cluster.

export INSTALL_K3S_VERSION=v1.23.8+k3s2
export MASTER_IP=<MASTER_IP>
export NODE_TOKEN=<TOKEN>
curl -sfL https://get.k3s.io | K3S_URL=https://${MASTER_IP}:6443 K3S_TOKEN=${NODE_TOKEN} sh -

View node status.

kubectl get node
NAME          STATUS   ROLES                  AGE   VERSION
ubuntu-dev3   Ready    <none>                 13m   v1.23.8+k3s2
ubuntu-dev2   Ready    control-plane,master   17m   v1.23.8+k3s2

Run two pods, curl and httpbin, one on each node, to explore the network.

NODE1=ubuntu-dev2
NODE2=ubuntu-dev3
kubectl apply -n default -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: curl
  name: curl
spec:
  containers:
  - image: curlimages/curl
    name: curl
    command: ["sleep", "365d"]
  nodeName: $NODE1
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: httpbin
  name: httpbin
spec:
  containers:
  - image: kennethreitz/httpbin
    name: httpbin
  nodeName: $NODE2
EOF

Network Configuration

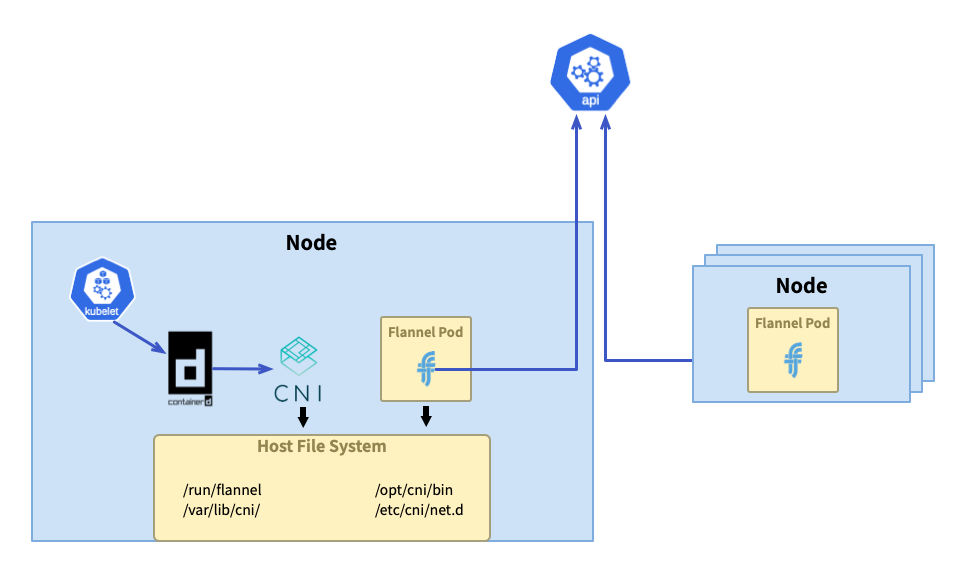

Next, let's take a look at how the CNI plugin configures the pod network.

Initialization

Flannel is deployed as a DaemonSet, so every node runs a flannel pod. The pod mounts host directories, and when it starts, its init containers copy the binary and the CNI configuration to the host, at /opt/cni/bin/flannel and /etc/cni/net.d/10-flannel.conflist.

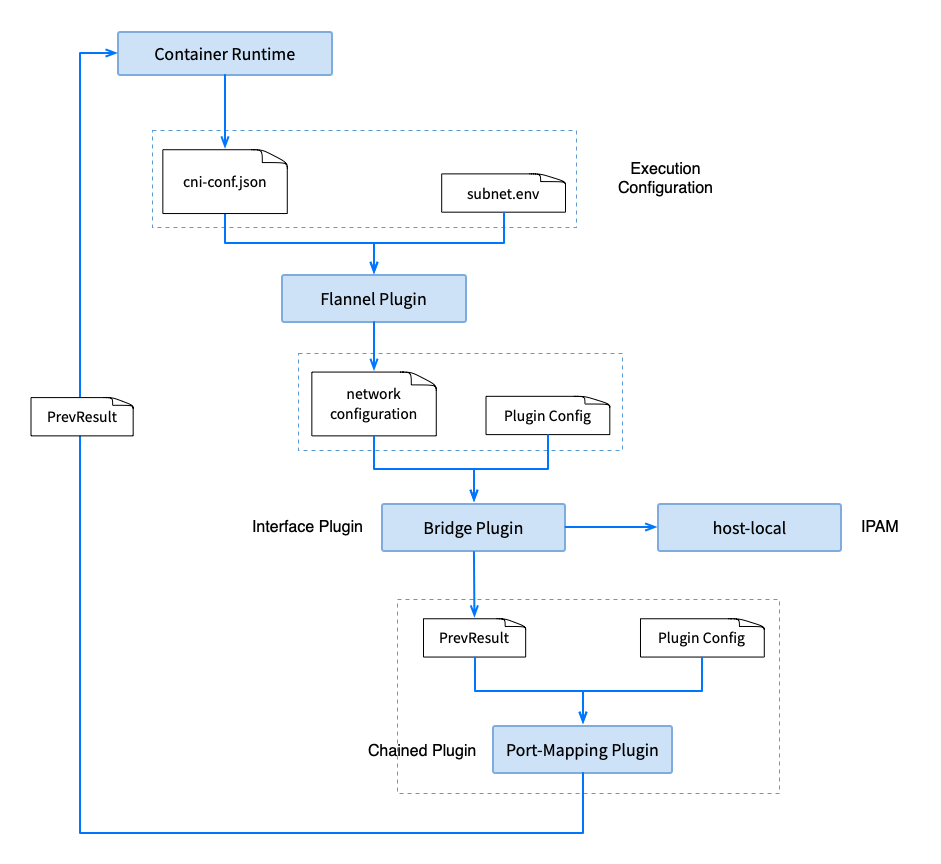

The CNI configuration can be found in the ConfigMap in kube-flannel.yml[2]. By default, flannel delegates network configuration to the bridge plugin[4] (see the flannel-cni source code `flannel_linux.go#L78`[3]), with the network name cbr0; IP address management is delegated by default to the host-local plugin[6] (see the flannel-cni source code `flannel_linux.go#L40`[5]).

# cni-conf.json, copied to /etc/cni/net.d/10-flannel.conflist
{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}

The ConfigMap also holds Flannel's network configuration, which contains the Pod CIDR 10.42.0.0/16 that we set and the backend type, vxlan. vxlan is flannel's default backend; several other backend types[7] are available, such as host-gw, wireguard, udp, Alloc, IPIP, and IPSec.

# net-conf.json, mounted into the pod at /etc/kube-flannel/net-conf.json
{
  "Network": "10.42.0.0/16",
  "Backend": {
    "Type": "vxlan"
  }
}

When the Flannel pod runs, it starts the flanneld process with the parameters --ip-masq and --kube-subnet-mgr; the latter enables the kube subnet manager mode.

Run

The cluster is initialized with the default Pod CIDR 10.42.0.0/16. When a node joins the cluster, the cluster assigns it a Pod CIDR of the form 10.42.X.0/24 from this range.
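The relationship between the cluster range and the per-node ranges can be checked with Python's standard ipaddress module. This is only an illustration of the arithmetic: the actual assignment is made by the cluster, and the order in which nodes receive their blocks is not guaranteed to be sequential.

```python
import ipaddress

cluster_cidr = ipaddress.ip_network("10.42.0.0/16")

# Split the cluster-wide range into per-node /24 blocks, in ascending order.
node_subnets = list(cluster_cidr.subnets(new_prefix=24))

print(len(node_subnets))                 # number of /24 blocks available
print(node_subnets[0], node_subnets[1])  # the two blocks seen in this article
print(node_subnets[0].num_addresses)     # addresses in each block
```

With a /16 cluster range and /24 node ranges there is room for 256 nodes, each with 256 addresses.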

In kube subnet manager mode, flannel connects to the apiserver to monitor node update events, and obtains the node's Pod CIDR from the node information.

kubectl get no ubuntu-dev2 -o jsonpath='{.spec}' | jq
{
  "podCIDR": "10.42.0.0/24",
  "podCIDRs": [
    "10.42.0.0/24"
  ],
  "providerID": "k3s://ubuntu-dev2"
}

flanneld then writes the subnet configuration file on the host. The file from one of the nodes is shown below; on the other node the only difference is FLANNEL_SUBNET=10.42.1.1/24, derived from that node's Pod CIDR.

# node 192.168.1.12
cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.42.0.0/16
FLANNEL_SUBNET=10.42.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
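The values in subnet.env follow from the node's Pod CIDR and from the VXLAN overhead. The sketch below reproduces them under two assumptions that the article does not state: the host interface has an MTU of 1500, and the tunnel runs over IPv4. The function names are hypothetical and this is not flanneld's own code.

```python
import ipaddress

# Assumed per-packet overhead of VXLAN over IPv4:
# outer IP (20) + UDP (8) + VXLAN header (8) + inner Ethernet header (14).
VXLAN_OVERHEAD = 20 + 8 + 8 + 14


def render_subnet_env(network: str, pod_cidr: str, host_mtu: int = 1500,
                      ip_masq: bool = True) -> str:
    """Build subnet.env-style text from the cluster network and a node's Pod CIDR."""
    subnet = ipaddress.ip_network(pod_cidr)
    # First address of the node's range (e.g. 10.42.0.1), kept with the prefix length.
    gateway = subnet.network_address + 1
    lines = [
        f"FLANNEL_NETWORK={network}",
        f"FLANNEL_SUBNET={gateway}/{subnet.prefixlen}",
        f"FLANNEL_MTU={host_mtu - VXLAN_OVERHEAD}",
        f"FLANNEL_IPMASQ={str(ip_masq).lower()}",
    ]
    return "\n".join(lines)


def parse_subnet_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, ignoring blank lines and comments."""
    pairs = (line.split("=", 1) for line in text.splitlines()
             if line.strip() and not line.startswith("#"))
    return {key.strip(): value.strip() for key, value in pairs}


env_text = render_subnet_env("10.42.0.0/16", "10.42.0.0/24")
print(env_text)
print(parse_subnet_env(env_text)["FLANNEL_SUBNET"])
```

Under these assumptions the 50 bytes of encapsulation overhead account for the MTU of 1450 seen in the file.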

CNI plugin execution

The execution of the CNI plug-in is triggered by the container runtime. For details, see the previous article "Source code analysis: the use of CNI from the perspective of kubelet and container runtime".

Flannel Plugin Flow

flannel plugin

When the flannel CNI plugin (/opt/cni/bin/flannel) is executed, it receives cni-conf.json as input, reads the subnet.env configuration written during initialization, generates the configuration shown below, and delegates the next step to the bridge plugin.

cat /var/lib/cni/flannel/e4239ab2706ed9191543a5c7f1ef06fc1f0a56346b0c3f2c742d52607ea271f0 | jq
{
  "cniVersion": "0.3.1",
  "hairpinMode": true,
  "ipMasq": false,
  "ipam": {
    "ranges": [
      [
        {
          "subnet": "10.42.0.0/24"
        }
      ]
    ],
    "routes": [
      {
        "dst": "10.42.0.0/16"
      }
    ],
    "type": "host-local"
  },
  "isDefaultGateway": true,
  "isGateway": true,
  "mtu": 1450,
  "name": "cbr0",
  "type": "bridge"
}
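The way the delegate configuration is assembled can be sketched as a merge of the flannel entry in cni-conf.json with the values from subnet.env. The function below is a hypothetical reconstruction that merely reproduces the JSON shown above; the defaults it applies (bridge type, isGateway, ipMasq as the inverse of FLANNEL_IPMASQ) are inferred from that output and are not taken from the plugin source.

```python
import ipaddress
import json


def build_delegate_config(cni_conf: dict, subnet_env: dict) -> dict:
    """Combine the flannel entry of cni-conf.json with subnet.env values."""
    flannel_entry = next(p for p in cni_conf["plugins"] if p["type"] == "flannel")
    delegate = dict(flannel_entry.get("delegate", {}))  # start from the user-supplied keys

    delegate["name"] = cni_conf["name"]
    delegate["cniVersion"] = cni_conf["cniVersion"]
    delegate.setdefault("type", "bridge")
    delegate.setdefault("isGateway", True)
    delegate.setdefault("mtu", int(subnet_env["FLANNEL_MTU"]))
    # Assumption inferred from the output: when flanneld already masquerades
    # (FLANNEL_IPMASQ=true), the bridge plugin is told not to.
    delegate.setdefault("ipMasq", subnet_env["FLANNEL_IPMASQ"] != "true")

    # FLANNEL_SUBNET holds the gateway address with its prefix length,
    # so normalise it to the network address for the IPAM range.
    node_subnet = ipaddress.ip_interface(subnet_env["FLANNEL_SUBNET"]).network
    delegate["ipam"] = {
        "type": "host-local",
        "ranges": [[{"subnet": str(node_subnet)}]],
        "routes": [{"dst": subnet_env["FLANNEL_NETWORK"]}],
    }
    return delegate


cni_conf = {
    "name": "cbr0",
    "cniVersion": "0.3.1",
    "plugins": [
        {"type": "flannel",
         "delegate": {"hairpinMode": True, "isDefaultGateway": True}},
        {"type": "portmap", "capabilities": {"portMappings": True}},
    ],
}
subnet_env = {
    "FLANNEL_NETWORK": "10.42.0.0/16",
    "FLANNEL_SUBNET": "10.42.0.1/24",
    "FLANNEL_MTU": "1450",
    "FLANNEL_IPMASQ": "true",
}

delegate_conf = build_delegate_config(cni_conf, subnet_env)
print(json.dumps(delegate_conf, indent=2, sort_keys=True))
```

Keys supplied under "delegate" in cni-conf.json take precedence in this sketch, which is why hairpinMode and isDefaultGateway pass through unchanged.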

bridge plugin

The bridge uses the above output together with the parameters as input, and completes the following operations according to the configuration:

- Create bridge cni0 (node's root network namespace)

- Create container network interface eth0 (pod network namespace)

- Create a virtual network interface vethX on the host (the node's root network namespace)

- Connect vethX to bridge cni0

- Delegate to the ipam plugin to assign the IP address, DNS, and routes

- Bind the IP address to interface eth0 in the pod network namespace

- Check bridge status

- Set routes

- Set DNS

The final output is as follows:

cat /var/lib/cni/results/cbr0-a34bb3dc268e99e6e1ef83c732f5619ca89924b646766d1ef352de90dbd1c750-eth0 | jq .result
{
  "cniVersion": "0.3.1",
  "dns": {},
  "interfaces": [
    {
      "mac": "6a:0f:94:28:9b:e7",
      "name": "cni0"
    },
    {
      "mac": "ca:b4:a9:83:0f:d4",
      "name": "veth38b50fb4"
    },
    {
      "mac": "0a:01:c5:6f:57:67",
      "name": "eth0",
      "sandbox": "/var/run/netns/cni-44bb41bd-7c41-4860-3c55-4323bc279628"
    }
  ],
  "ips": [
    {
      "address": "10.42.0.5/24",
      "gateway": "10.42.0.1",
      "interface": 2,
      "version": "4"
    }
  ],
  "routes": [
    {
      "dst": "10.42.0.0/16"
    },
    {
      "dst": "0.0.0.0/0",
      "gw": "10.42.0.1"
    }
  ]
}
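One detail of this result that is easy to miss is that "interface": 2 in the ips entry is an index into the interfaces list. The short Python sketch below, working on a trimmed copy of the result, resolves that index and checks that the gateway lies inside the pod's subnet.

```python
import ipaddress
import json

# Trimmed copy of the bridge plugin result shown above.
result = json.loads("""
{
  "interfaces": [
    {"mac": "6a:0f:94:28:9b:e7", "name": "cni0"},
    {"mac": "ca:b4:a9:83:0f:d4", "name": "veth38b50fb4"},
    {"mac": "0a:01:c5:6f:57:67", "name": "eth0",
     "sandbox": "/var/run/netns/cni-44bb41bd-7c41-4860-3c55-4323bc279628"}
  ],
  "ips": [
    {"address": "10.42.0.5/24", "gateway": "10.42.0.1", "interface": 2, "version": "4"}
  ]
}
""")

for ip in result["ips"]:
    # "interface" is an index into the "interfaces" list.
    iface = result["interfaces"][ip["interface"]]
    pod_ip = ipaddress.ip_interface(ip["address"])
    gateway = ipaddress.ip_address(ip["gateway"])
    # Only interfaces inside the pod's network namespace carry a sandbox path.
    in_pod_netns = "sandbox" in iface
    print(iface["name"], pod_ip.ip, gateway in pod_ip.network, in_pod_netns)
```

The address therefore belongs to eth0 inside the pod's network namespace, while cni0 and the veth device are the host-side interfaces.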

port-mapping plugin

The portmap plugin forwards traffic from one or more ports on the host to the container.

Debug

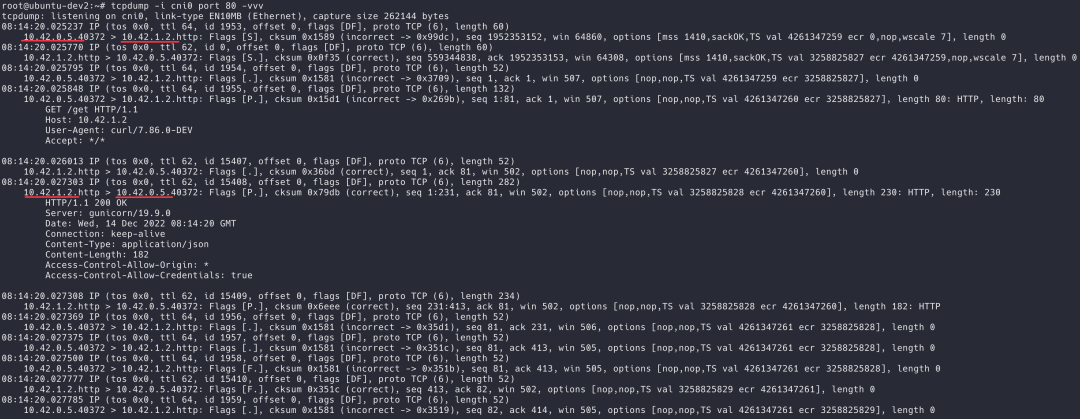

Let's take a packet capture on the interface cni0 using tcpdump on the first node.

tcpdump -i cni0 port 80 -vvv

Send a request from the curl pod to the httpbin pod, using its IP address 10.42.1.2:

kubectl exec curl -n default -- curl -s 10.42.1.2/get

cni0

In the capture on cni0, the Layer 3 addresses are all pod IP addresses, so the two pods appear to be on the same network segment.

tcpdump-on-cni0

host eth0

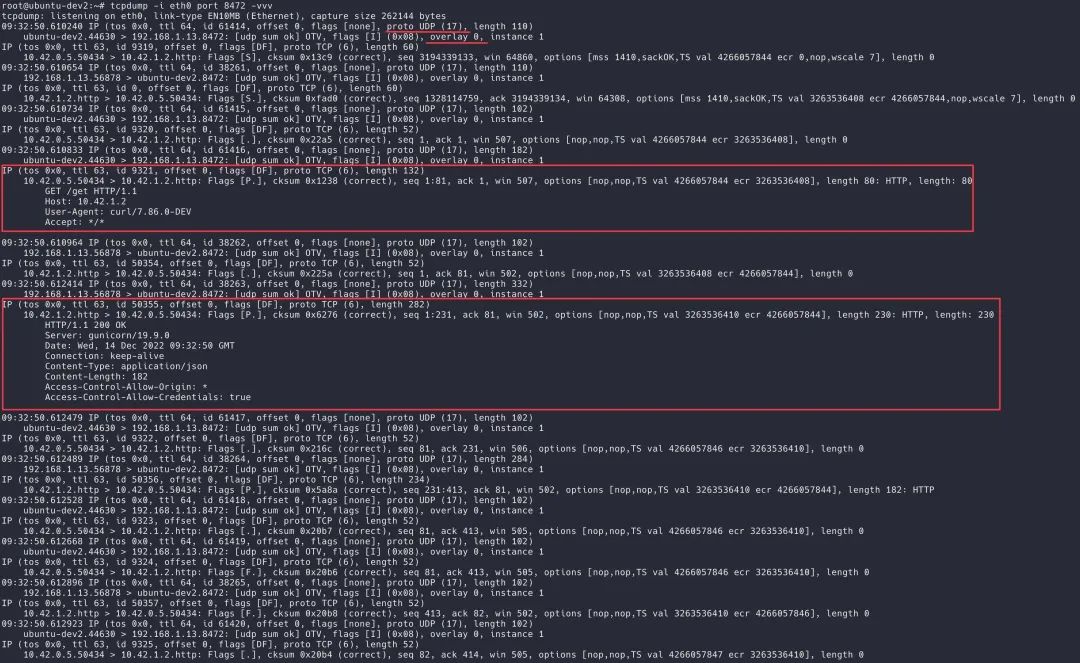

As mentioned at the beginning of the article, the VXLAN traffic uses UDP port 8472.

netstat -tupln | grep 8472
udp        0      0 0.0.0.0:8472            0.0.0.0:*                           -

We grab UDP packets directly on the Ethernet interface:

tcpdump -i eth0 port 8472 -vvv

Send the request again: this time a UDP packet is captured, and its payload is a Layer 2 frame.

tcpdump-on-host-eth0

Cross-node communication under the Overlay network

In the first part of this series, when we looked at communication between pods, we mentioned that different CNI plugins handle it differently. This time we explored how the flannel plugin works. Hopefully the figure gives a more intuitive picture of how the overlay network handles cross-node communication.

When traffic destined for 10.42.1.2 reaches the bridge cni0 on node A, the destination IP does not belong to the current node's network segment, so according to the system's routing rules the traffic enters the interface flannel.1, which is the VXLAN vtep. These routing rules are also maintained by flanneld, which updates them when a node goes online or offline.

# 192.168.1.12
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
default         _gateway        0.0.0.0         UG    0      0        0 eth0
10.42.0.0       0.0.0.0         255.255.255.0   U     0      0        0 cni0
10.42.1.0       10.42.1.0       255.255.255.0   UG    0      0        0 flannel.1
192.168.1.0     0.0.0.0         255.255.255.0   U     0      0        0 eth0

# 192.168.1.13
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
default         _gateway        0.0.0.0         UG    0      0        0 eth0
10.42.0.0       10.42.0.0       255.255.255.0   UG    0      0        0 flannel.1
10.42.1.0       0.0.0.0         255.255.255.0   U     0      0        0 cni0
192.168.1.0     0.0.0.0         255.255.255.0   U     0      0        0 eth0
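The routing decision on node 192.168.1.12 can be reproduced with a small longest-prefix-match lookup over the table above. This is a simplified model of what the kernel does (it ignores metrics and flags), and the lookup function is only illustrative.

```python
import ipaddress
from typing import Optional

# (destination network, gateway, interface) copied from the table of node 192.168.1.12.
# "_gateway" is kept as the symbolic name printed in the table.
ROUTES = [
    ("0.0.0.0/0", "_gateway", "eth0"),
    ("10.42.0.0/24", None, "cni0"),
    ("10.42.1.0/24", "10.42.1.0", "flannel.1"),
    ("192.168.1.0/24", None, "eth0"),
]


def lookup(dst: str) -> tuple[str, Optional[str]]:
    """Return (interface, gateway) of the most specific route that contains dst."""
    address = ipaddress.ip_address(dst)
    candidates = [
        (ipaddress.ip_network(net), gw, dev)
        for net, gw, dev in ROUTES
        if address in ipaddress.ip_network(net)
    ]
    # The default route always matches, so candidates is never empty.
    network, gateway, device = max(candidates, key=lambda route: route[0].prefixlen)
    return device, gateway


for dst in ("10.42.0.5", "10.42.1.2", "192.168.1.13", "1.1.1.1"):
    print(dst, "->", lookup(dst))
```

A pod on the same node is reached directly through cni0, while the httpbin pod at 10.42.1.2 matches the 10.42.1.0/24 route and leaves through flannel.1.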

flannel.1 encapsulates the original Ethernet frame in UDP and sends it toward the next hop 10.42.1.0 (the destination MAC address is obtained through ARP). The vtep at the opposite end, that is, flannel.1 on the other node, receives the message on UDP port 8472 and decapsulates the Ethernet frame; the inner packet is then routed to the interface cni0 and finally reaches the target pod.

The data transmission of the response is similar to the processing of the request, except that the source address and destination address are exchanged.

References

[1] K3S: https://k3s.io/

[2] kube-flannel.yml: https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

[3] flannel-cni source flannel_linux.go#L78: https://github.com/flannel-io/cni-plugin/blob/v1.1.0/flannel_linux.go#L78

[4] bridge plugin: https://www.cni.dev/plugins/current/main/bridge/

[5] flannel-cni source flannel_linux.go#L40: https://github.com/flannel-io/cni-plugin/blob/v1.1.0/flannel_linux.go#L40

[6] host-local plugin: https://www.cni.dev/plugins/current/ipam/host-local/

[7] Multiple backend types: https://github.com/flannel-io/flannel/blob/master/Documentation/backends.md