ByteDance first-round interview: two classic questions. Do you know what they are?

Hello everyone, I'm Kobayashi.

Previously, a reader was asked two classic TCP questions:

The first question: why would a server have a large number of connections in the TIME_WAIT state?

The second question: why would a server have a large number of connections in the CLOSE_WAIT state?

These two questions are often asked in interviews, mainly because they are also often encountered at work.

This time, let's talk about these two issues.

Why does a server end up with a large number of TIME_WAIT connections?

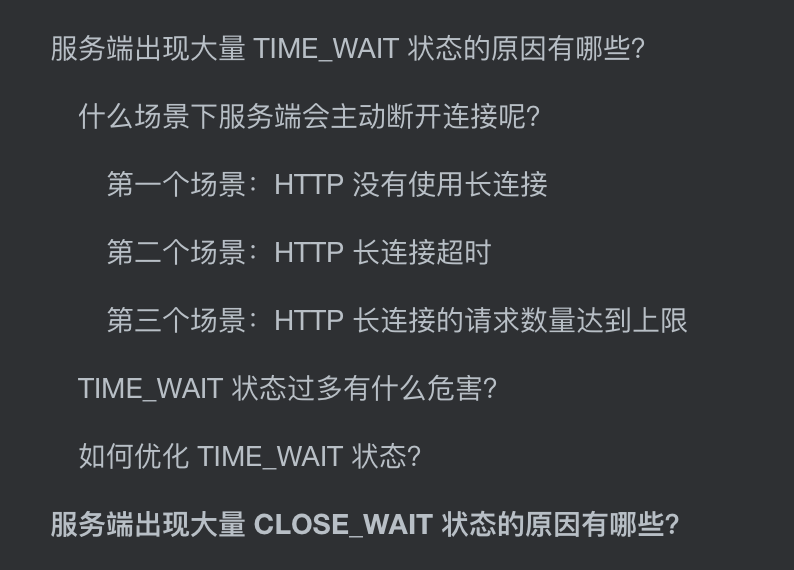

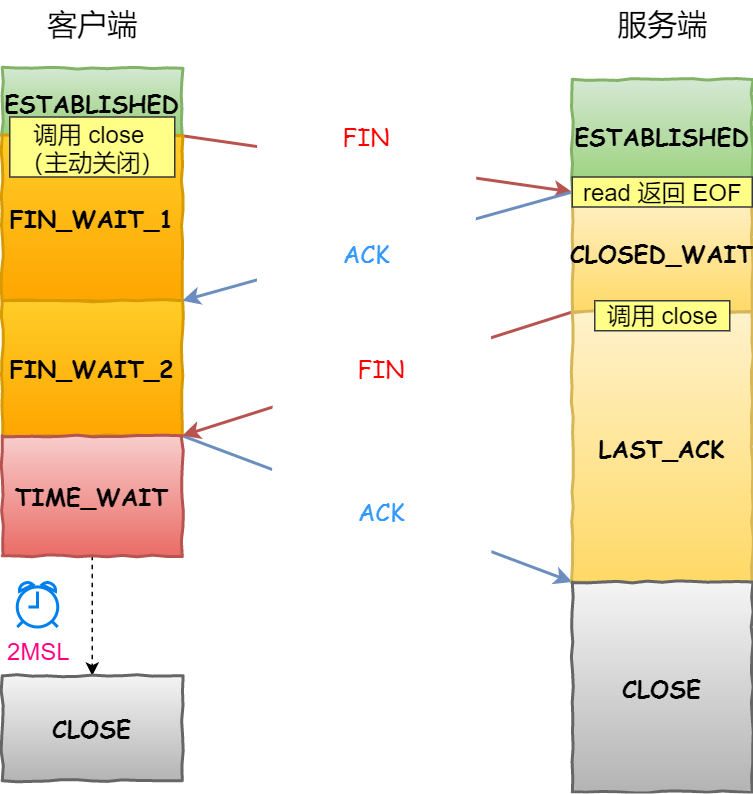

Let's first walk through TCP's four-way close (the "four waves") to see at which stage the TIME_WAIT state appears.

The following figure shows the four-way close with the "client" as the "active closer".

TCP four-way close process

From the figure we can see that TIME_WAIT appears only on the side that actively closes the connection. The TIME_WAIT state lasts for 2MSL before the connection enters the CLOSED state. On Linux, 2MSL is 60 seconds, so a connection stays in TIME_WAIT for a fixed 60 seconds.

Why is the TIME_WAIT state needed? There are two main reasons:

- To make sure the side that "passively closes the connection" can close properly. TCP closes a connection with four segments, and the final ACK sent by the active closer may be lost. In that case the passive side retransmits its FIN. If the active closer were already in the CLOSED state, it would answer with an RST instead of an ACK, so the active closer has to wait in TIME_WAIT rather than go straight to CLOSED.

- To prevent data from an old connection from being wrongly accepted by a later connection with the same four-tuple. A TCP segment can get "lost" because of a router fault. While it is stuck, the sender may retransmit it after an acknowledgment timeout, and once the router recovers, the stuck segment is still delivered to its destination. That stray original is called a lost duplicate. If a new connection between the same IP addresses and ports is established right after a TCP connection is closed, the new connection is called an incarnation of the previous one, and a lost duplicate from the previous connection could arrive after that connection has terminated and be misinterpreted as belonging to the new incarnation. To avoid this, TIME_WAIT lasts 2MSL, which ensures that by the time a new connection is established, duplicate segments from the previous incarnation have already disappeared from the network.

Many people mistakenly believe that only the client can be in TIME_WAIT. That is not true. TCP is a full-duplex protocol and either side can close the connection first, so either side can end up in TIME_WAIT.

In short, whoever closes the connection first is the active closer, and TIME_WAIT appears on the active closer.
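
To see which side is piling up TIME_WAIT (or CLOSE_WAIT) connections, you can count sockets by state. Here is a minimal sketch that parses the Linux `/proc/net/tcp` table, where the `st` column holds the state as a hex code (06 is TIME_WAIT, 08 is CLOSE_WAIT). The function names are made up for this example, and it only looks at IPv4 sockets unless you point it at `/proc/net/tcp6`.

```c
#include <assert.h>
#include <stdio.h>

/* TCP state codes as they appear in the "st" column of /proc/net/tcp. */
enum { ST_TIME_WAIT = 0x06, ST_CLOSE_WAIT = 0x08 };

/* Extract the state code from one data line of /proc/net/tcp.
 * Returns -1 for the header line or anything else that does not parse. */
int tcp_state_of_line(const char *line)
{
    unsigned int state;

    /* Line format: "sl: local_addr:port remote_addr:port st ..." */
    if (sscanf(line, " %*d: %*[0-9A-Fa-f]:%*x %*[0-9A-Fa-f]:%*x %x", &state) != 1)
        return -1;
    return (int)state;
}

/* Count the sockets in the wanted state in a /proc/net/tcp style file.
 * Returns -1 if the file cannot be opened. */
long count_tcp_state(const char *path, int wanted)
{
    assert(path != NULL);

    FILE *fp = fopen(path, "r");
    if (fp == NULL)
        return -1;

    char line[512];
    long n = 0;
    while (fgets(line, sizeof line, fp) != NULL)
        if (tcp_state_of_line(line) == wanted)
            n++;
    fclose(fp);
    return n;
}
```

Calling `count_tcp_state("/proc/net/tcp", ST_TIME_WAIT)` on the server gives the number of IPv4 sockets currently in TIME_WAIT. If that number is large, the server is the side doing the active close.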

In what scenarios does the server actively close connections?

If a large number of TCP connections in the TIME_WAIT state appear on the server, it means the server has actively closed many TCP connections.

So the question is: in what scenarios does the server actively close a connection?

- Scenario 1: HTTP persistent connections are not used

- Scenario 2: the HTTP persistent connection times out

- Scenario 3: the number of requests on an HTTP persistent connection reaches the upper limit

Let's go through them one by one.

Scenario 1: HTTP persistent connections are not used

Let's first look at how the HTTP persistent connection (Keep-Alive) mechanism is enabled.

In HTTP/1.0 it is off by default. If the browser wants to use Keep-Alive, it must add this to the request header:

    Connection: Keep-Alive

When the server receives the request and responds, it adds the same field to the response header:

    Connection: Keep-Alive

With this in place, the TCP connection is not torn down after the response but stays open. When the client sends another request, it reuses the same TCP connection. This continues until the client or the server asks to close the connection.

Since HTTP/1.1, Keep-Alive is enabled by default. Most browsers now use HTTP/1.1 by default, so Keep-Alive is on by default too. Once the client and the server agree, the persistent connection is established.

To turn HTTP Keep-Alive off, add Connection: close to the header of the HTTP request or response. In other words, as soon as either the client or the server sends Connection: close in an HTTP header, the HTTP persistent connection mechanism cannot be used.
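
The rules above fit in a few lines of code. This is only a sketch: `connection_persists` is a hypothetical helper, and it compares the whole header value, whereas a real parser has to handle a Connection header that carries several comma-separated tokens.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <strings.h> /* strcasecmp */

/* Decide whether the TCP connection stays open after one request/response.
 * minor_version is 0 for HTTP/1.0 and 1 for HTTP/1.1.
 * req_conn and resp_conn are the Connection header values, or NULL if absent. */
bool connection_persists(int minor_version, const char *req_conn, const char *resp_conn)
{
    assert(minor_version >= 0);

    /* "Connection: close" from either side ends the persistent connection. */
    if (req_conn != NULL && strcasecmp(req_conn, "close") == 0)
        return false;
    if (resp_conn != NULL && strcasecmp(resp_conn, "close") == 0)
        return false;

    /* HTTP/1.1: persistent unless someone said "close". */
    if (minor_version >= 1)
        return true;

    /* HTTP/1.0: both sides must opt in with "Connection: Keep-Alive". */
    return req_conn != NULL && resp_conn != NULL
        && strcasecmp(req_conn, "keep-alive") == 0
        && strcasecmp(resp_conn, "keep-alive") == 0;
}
```

For example, `connection_persists(1, NULL, "close")` is false: the client did nothing special, but the server's response header alone is enough to end the persistent connection.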

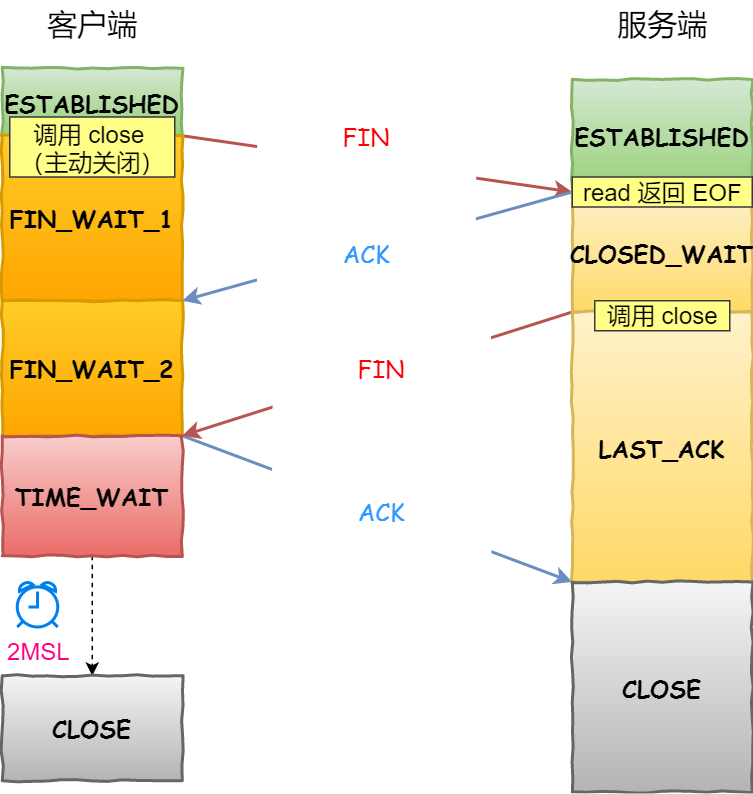

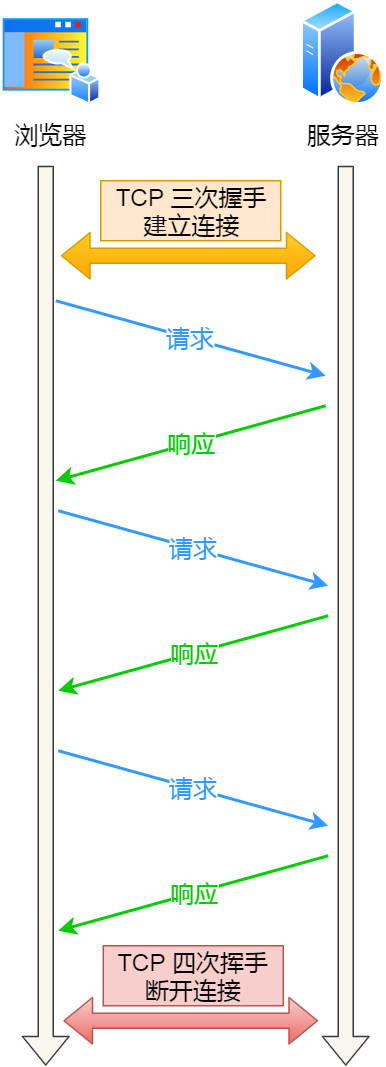

With HTTP persistent connections turned off, every request goes through the full cycle: establish the TCP connection -> request the resource -> return the resource -> release the connection. This is an HTTP short connection, as shown below:

HTTP short connection

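As we saw above, as soon as either side sends Connection: close in an HTTP header, the HTTP persistent connection mechanism cannot be used, so the connection is closed after a single HTTP request has been handled.

So here is the question: is it the client or the server that actively closes the connection in this case?

The RFC does not specify which side closes the connection. Both the requester and the responder are allowed to actively close the TCP connection.

However, in most web server implementations, no matter which side disabled HTTP Keep-Alive, it is the server that actively closes the connection, so connections in the TIME_WAIT state appear on the server.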

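The client disables HTTP Keep-Alive and the server enables it: who is the active closer?

When the client disables HTTP Keep-Alive, the header of its HTTP request carries Connection: close, and the server actively closes the connection after sending the HTTP response.

Why is it designed this way? HTTP is a request-response model in which the client is always the initiator, and the purpose of HTTP Keep-Alive is to reuse the connection for the client's subsequent requests. If the request header in a given request-response exchange carries Connection: close, the only remaining point at which the decision not to reuse the connection can take effect is on the server, so closing the connection at the "tail end" of the HTTP request-response cycle is reasonable.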

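The client enables HTTP Keep-Alive and the server disables it: who is the active closer?

When the client enables HTTP Keep-Alive but the server disables it, the server again actively closes the connection after sending the HTTP response.

Why is it designed this way? When the server closes the connection itself, a single close() call releases the connection and the kernel's TCP stack handles everything else, so the whole process costs one syscall. If the client were required to close instead, then after writing the last response the server would have to put the socket into the readable set, call select / epoll to wait for an event, and then call read() once to learn that the connection has been closed. That is two syscalls and one extra wake-up of the user-space program, and the socket is also held open longer.

So when a large number of TIME_WAIT connections appear on the server, check whether both the client and the server have HTTP Keep-Alive enabled. If either side has it disabled, the server actively closes the connection after handling each HTTP request, and a large number of TIME_WAIT connections pile up on the server.

The fix for this scenario is simple: enable HTTP Keep-Alive on both the client and the server.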

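Scenario 2: the HTTP persistent connection times out

The defining feature of an HTTP persistent connection is that the TCP connection stays open as long as neither end explicitly asks to close it.

A persistent connection can carry multiple HTTP requests and responses over the same TCP connection, which avoids the overhead of repeatedly establishing and releasing connections.

Some readers may ask: with HTTP persistent connections, if the client finishes one HTTP request and then never sends another, isn't it a waste of resources to keep that TCP connection open?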

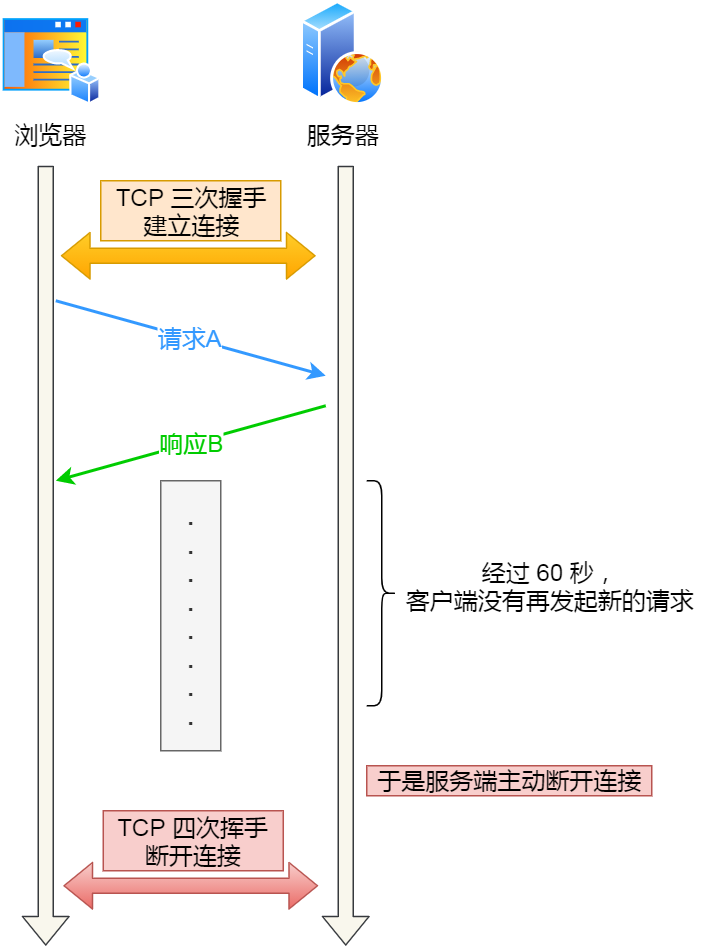

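Exactly. To avoid wasting resources, web server software usually provides a parameter that sets the timeout of HTTP persistent connections, such as nginx's keepalive_timeout.

Suppose the timeout is set to 60 seconds. nginx starts a "timer", and if the client sends no new request within 60 seconds of completing the previous HTTP request, then when the timer expires nginx fires a callback that closes the connection, and a connection in the TIME_WAIT state appears on the server.

HTTP persistent connection timeout

When a large number of TIME_WAIT connections appear on the server, and you observe that many clients establish a TCP connection and then send no data for a long time, the most likely cause is that HTTP persistent connections are timing out and the server is actively closing them, producing a large number of TIME_WAIT connections.

In that case, investigate the network: for example, whether a network problem keeps the data sent by the client from reaching the server, so that the persistent connection eventually times out.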

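Scenario 3: the number of requests on an HTTP persistent connection reaches the upper limit

Web servers usually have a parameter that defines the maximum number of requests one HTTP persistent connection may handle. Once the limit is reached, the server actively closes the connection.

Take nginx's keepalive_requests parameter. After an HTTP persistent connection is established, nginx keeps a counter for it that records how many client requests have been received and handled on that connection. When the counter reaches the configured maximum, nginx actively closes the persistent connection, and a connection in the TIME_WAIT state appears on the server.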
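
Scenarios 2 and 3 boil down to two checks the server makes on every persistent connection. The sketch below is an illustration only: the structs and `server_should_close` are hypothetical and are not nginx's real data structures, with `idle_timeout` standing in for keepalive_timeout and `max_requests` for keepalive_requests.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical per-connection bookkeeping. */
struct keepalive_conn {
    time_t last_request_done;  /* when the last response was finished */
    unsigned requests_served;  /* requests handled on this connection so far */
};

/* Hypothetical server settings. */
struct keepalive_policy {
    time_t idle_timeout;       /* seconds, plays the role of keepalive_timeout */
    unsigned max_requests;     /* plays the role of keepalive_requests */
};

/* Returns true when the server would actively close the connection,
 * which is exactly when TIME_WAIT ends up on the server side. */
bool server_should_close(const struct keepalive_conn *c,
                         const struct keepalive_policy *p, time_t now)
{
    assert(c != NULL && p != NULL);

    if (c->requests_served >= p->max_requests)
        return true;   /* scenario 3: request limit reached */
    if (now - c->last_request_done >= p->idle_timeout)
        return true;   /* scenario 2: idle for too long */
    return false;
}
```

With a 60-second timeout and a limit of 100 requests, a connection that has served one request is kept for 60 idle seconds, while a connection that has just served its 100th request is closed right away.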

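The default value of keepalive_requests is 100 in nginx versions before 1.19.10 (from 1.19.10 on it is 1000), which means each HTTP persistent connection can carry at most 100 requests. Most people overlook this parameter, because when QPS (requests per second) is not very high, the default of 100 is just about enough.

But in high-QPS scenarios, say above 10000 QPS or even 30000, 50000 or more, with keepalive_requests set to 100 nginx closes connections very frequently, and a large number of TIME_WAIT connections appear on the server.

The fix for this scenario is also simple: increase nginx's keepalive_requests.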
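
A back-of-the-envelope estimate shows why the limit matters. Assume (this is a simplification, not a measurement) that all traffic arrives on persistent connections that are each used until they hit the limit, and that every closed connection stays in TIME_WAIT for the 60 seconds mentioned earlier. The server then closes QPS / keepalive_requests connections per second, and each of them lingers for 60 seconds. `estimate_time_wait` is a hypothetical helper for that arithmetic.

```c
#include <assert.h>

/* Rough steady-state number of TIME_WAIT connections on the server:
 * (connections closed per second) x (seconds each one stays in TIME_WAIT). */
long estimate_time_wait(long qps, long keepalive_requests, long time_wait_seconds)
{
    assert(keepalive_requests > 0);

    long closes_per_second = qps / keepalive_requests;
    return closes_per_second * time_wait_seconds;
}
```

At 10000 QPS with a limit of 100, that is 100 closes per second and about 6000 TIME_WAIT connections at any moment. Raising the limit to 10000 brings the same traffic down to about 60.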

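What harm do too many TIME_WAIT connections cause?

Too many TIME_WAIT connections cause two main kinds of harm:

First, they consume system resources, such as file descriptors, memory and CPU.

Second, they consume ports. Ports are a limited resource: the range available for outgoing connections is typically 32768 to 60999 (the default on recent Linux kernels; older kernels used 32768 to 61000), and it can be changed with the net.ipv4.ip_local_port_range parameter.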

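The impact of too many TIME_WAIT connections is different for the client and for the server.

If the client (as the active closer) has too many TIME_WAIT connections and they occupy all available ports, it can no longer open connections to a server with the same "destination IP + destination port". The occupied ports can still be used to connect to a different server, though. For details, see my article: Can a client's port be reused?

So if the client (the side that initiates connections) always connects to a server with the same "destination IP + destination port", then too many TIME_WAIT connections on the client run into the port limit, and once all ports are occupied, no further connection to that same "destination IP + destination port" can be established.

Even in that situation, a port can be reused as long as the connection goes to a different server, so the client can still connect to other servers. This is because the kernel identifies a connection by its four-tuple (source IP, source port, destination IP, destination port), so using the same client port does not cause a conflict between connections.

If the server (as the active closer) has too many TIME_WAIT connections, ports do not become the limit, because the server listens on only one port, and since a four-tuple uniquely identifies a TCP connection, the server can in theory hold a very large number of connections. Too many TCP connections do consume system resources, though, such as file descriptors, memory and CPU.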
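
The four-tuple argument is easy to check in code. The struct and function below are hypothetical and only model the comparison; the kernel's real lookup uses hash tables, but the key is the same four fields.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* The four values that identify one TCP connection (IPv4, host byte order). */
struct four_tuple {
    uint32_t src_ip;
    uint16_t src_port;
    uint32_t dst_ip;
    uint16_t dst_port;
};

/* Two tuples name the same connection only if all four fields match. */
bool same_connection(const struct four_tuple *a, const struct four_tuple *b)
{
    assert(a != NULL && b != NULL);

    return a->src_ip == b->src_ip && a->src_port == b->src_port
        && a->dst_ip == b->dst_ip && a->dst_port == b->dst_port;
}
```

A client at 10.0.0.1 using source port 40000 toward 10.0.0.2:80 and toward 10.0.0.3:80 holds two different connections, because the destination IP differs. That is why a port stuck in TIME_WAIT for one server can still be used to reach another.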

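How can TIME_WAIT be optimized?

Here are several ways to optimize TIME_WAIT, each with its own trade-offs:

- Enable the net.ipv4.tcp_tw_reuse and net.ipv4.tcp_timestamps options;

- Set net.ipv4.tcp_max_tw_buckets;

- Use SO_LINGER in the program so that the application closes with an RST.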

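Option 1: net.ipv4.tcp_tw_reuse and tcp_timestamps

With tcp_tw_reuse enabled, a socket in TIME_WAIT can be reused for a new connection.

Note that tcp_tw_reuse only works on the client (the side that initiates connections): when it is enabled and connect() is called, the kernel randomly picks a connection that has been in TIME_WAIT for more than 1 second and reuses it for the new connection.

    net.ipv4.tcp_tw_reuse = 1

This option has one prerequisite: TCP timestamp support must be turned on (it already is by default), that is:

    net.ipv4.tcp_timestamps = 1

The timestamp lives in the "options" part of the TCP header. It takes 8 bytes in total: the first 4-byte field holds the time at which the segment was sent, and the second 4-byte field holds the most recent timestamp received from the peer.

Because timestamps are carried, duplicate segments from the old connection are naturally discarded as expired, which is what makes it safe to reuse a connection in TIME_WAIT.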
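
The "discarded as expired" part is a comparison between the timestamp carried by an incoming segment and the newest timestamp already seen from that peer. Below is a simplified sketch of that check, in the spirit of the PAWS rule from the TCP timestamps RFC. The function names are made up, and the kernel's real code handles more cases (for example, very old stored timestamps).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* True if timestamp a is older than b. The subtraction is done modulo 2^32
 * so the comparison keeps working when the 32-bit counter wraps around. */
bool ts_before(uint32_t a, uint32_t b)
{
    return (int32_t)(a - b) < 0;
}

/* Reject a segment whose timestamp value is older than the most recent
 * timestamp already accepted from the same peer (ts_recent). */
bool paws_reject(uint32_t seg_tsval, uint32_t ts_recent)
{
    return ts_before(seg_tsval, ts_recent);
}
```

A stale duplicate from the old connection carries an old timestamp, so once the reused connection has seen newer timestamps, `paws_reject` returns true for it and it is dropped.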

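Option 2: net.ipv4.tcp_max_tw_buckets

The default is given here as 18000 (on a real system the default depends on the kernel version and the amount of memory). Once the number of connections in TIME_WAIT exceeds this value, the system immediately destroys any further TIME_WAIT connections instead of keeping them. This approach is rather brute-force.

    net.ipv4.tcp_max_tw_buckets = 18000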

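Option 3: use SO_LINGER in the program

We can set a socket option that changes how close() shuts the connection down.

    struct linger so_linger;
    so_linger.l_onoff = 1;   /* turn the linger option on */
    so_linger.l_linger = 0;  /* zero timeout: close() aborts with an RST */
    setsockopt(s, SOL_SOCKET, SO_LINGER, &so_linger, sizeof(so_linger));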

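If l_onoff is non-zero and l_linger is 0, then close() immediately sends an RST to the peer. The TCP connection skips the four-way close, and therefore skips the TIME_WAIT state, and is closed directly.

This does make it possible to bypass TIME_WAIT, but it is a very dangerous practice and is not recommended.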
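
To see what the peer experiences, here is a small self-contained sketch over the loopback interface. All three function names are made up for this example. It is meant to show why the option is dangerous, not to recommend it: the other side gets a reset instead of a clean end of stream.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <errno.h>
#include <netinet/in.h>
#include <stdbool.h>
#include <stddef.h>
#include <sys/socket.h>
#include <unistd.h>

/* Build a connected TCP pair over 127.0.0.1. Returns 0 on success. */
int loopback_pair(int *client, int *server)
{
    assert(client != NULL && server != NULL);
    struct sockaddr_in addr = { .sin_family = AF_INET, .sin_port = 0 };
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    socklen_t len = sizeof addr;

    int lfd = socket(AF_INET, SOCK_STREAM, 0);
    if (lfd < 0)
        return -1;
    if (bind(lfd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(lfd, 1) < 0 ||
        getsockname(lfd, (struct sockaddr *)&addr, &len) < 0) {
        close(lfd);
        return -1;
    }
    *client = socket(AF_INET, SOCK_STREAM, 0);
    if (*client < 0 ||
        connect(*client, (struct sockaddr *)&addr, sizeof addr) < 0) {
        if (*client >= 0)
            close(*client);
        close(lfd);
        return -1;
    }
    *server = accept(lfd, NULL, NULL);
    close(lfd);
    if (*server < 0) {
        close(*client);
        return -1;
    }
    return 0;
}

/* Abortive close: l_onoff = 1 and l_linger = 0 make close() send an RST,
 * so this end never enters TIME_WAIT. Returns 0 on success, -1 on error. */
int close_with_rst(int fd)
{
    struct linger lg = { .l_onoff = 1, .l_linger = 0 };

    if (setsockopt(fd, SOL_SOCKET, SO_LINGER, &lg, sizeof lg) < 0)
        return -1;
    return close(fd);
}

/* Blocks in read() and reports whether the connection was reset by the peer. */
bool peer_saw_reset(int fd)
{
    char byte;
    ssize_t n = read(fd, &byte, 1);

    return n < 0 && errno == ECONNRESET;
}
```

After `loopback_pair(&c, &s)` and `close_with_rst(s)`, a read on `c` fails with ECONNRESET rather than returning 0. Any data still in flight is thrown away, which is one reason this shortcut is risky.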

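All the methods above try to get around the TIME_WAIT state, which is not really a good idea. TIME_WAIT does last somewhat long and can look unfriendly, but it was designed precisely to keep messy things from happening.

As the book UNIX Network Programming puts it: TIME_WAIT is our friend and is there to help us. Instead of trying to avoid the state, we should understand it.

If the server wants to avoid too many TIME_WAIT connections, it should never actively close connections. Let the clients close them, so that TIME_WAIT is borne by the clients spread all over the network.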

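Why does a server end up with a large number of CLOSE_WAIT connections?

Let's use the same figure again:

TCP four-way close process

From the figure we can see that CLOSE_WAIT is a state that only the "passive closer" enters. If the passive closer does not call close() to close the connection, it cannot send its FIN, and the connection can never move from CLOSE_WAIT to LAST_ACK.

So when a large number of CLOSE_WAIT connections appear on the server, it means the server program has not called close() on those connections.

What can cause the server program not to call close()? This usually means digging into the code.

Let's first look at the flow of an ordinary TCP server: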

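- Step 1: create the server socket, bind it to a port and listen on it

- Step 2: register the server socket with epoll

- Step 3: epoll_wait waits for connections; when one arrives, call accept to get the connected socket

- Step 4: register the connected socket with epoll

- Step 5: epoll_wait waits for events

- Step 6: when the peer closes the connection, call close on our side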
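
The six steps can be sketched as a small single-threaded server. This is a minimal illustration, not production code: `make_listener` and `serve_until_closed` are names invented here, the server binds to 127.0.0.1 only, it ignores the data it reads, and it stops after a given number of peers have disconnected so that it can be tried out easily.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <errno.h>
#include <netinet/in.h>
#include <stdint.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Step 1: create, bind and listen on 127.0.0.1. Pass *port = 0 to let the
 * kernel pick a port; the port actually bound is written back to *port. */
int make_listener(uint16_t *port)
{
    assert(port != NULL);
    struct sockaddr_in addr = { .sin_family = AF_INET, .sin_port = htons(*port) };
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    socklen_t len = sizeof addr;

    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(fd, 128) < 0 ||
        getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }
    *port = ntohs(addr.sin_port);
    return fd;
}

/* Steps 2 to 6. Runs until at least max_closed peers have disconnected and
 * returns the number of connections closed, or -1 on error. */
int serve_until_closed(int listen_fd, int max_closed)
{
    int ep = epoll_create1(0);
    if (ep < 0)
        return -1;

    /* Step 2: register the listening socket with epoll. */
    struct epoll_event ev = { .events = EPOLLIN, .data.fd = listen_fd };
    if (epoll_ctl(ep, EPOLL_CTL_ADD, listen_fd, &ev) < 0) {
        close(ep);
        return -1;
    }

    int closed = 0;
    while (closed < max_closed) {
        struct epoll_event events[16];
        /* Steps 3 and 5: wait for new connections and for socket events. */
        int n = epoll_wait(ep, events, 16, -1);
        if (n < 0) {
            if (errno == EINTR)
                continue;
            close(ep);
            return -1;
        }
        for (int i = 0; i < n; i++) {
            int fd = events[i].data.fd;
            if (fd == listen_fd) {
                /* Step 3: accept the connection that just arrived. */
                int conn = accept(listen_fd, NULL, NULL);
                if (conn < 0)
                    continue;
                /* Step 4: register the connected socket with epoll. */
                struct epoll_event cev = { .events = EPOLLIN, .data.fd = conn };
                if (epoll_ctl(ep, EPOLL_CTL_ADD, conn, &cev) < 0)
                    close(conn); /* cannot watch it, so do not leak it */
                continue;
            }
            char buf[4096];
            ssize_t r = read(fd, buf, sizeof buf);
            if (r > 0 || (r < 0 && errno == EINTR))
                continue; /* data arrived (ignored in this sketch) or retry */
            /* Step 6: r == 0 means the peer sent FIN; errors end here too. */
            close(fd); /* closing the descriptor also drops it from epoll */
            closed++;
        }
    }
    close(ep);
    return closed;
}
```

Every step in the list has a matching comment in the code. Remove any one of steps 2, 3, 4 or 6 and the final close(fd) is never reached for some connections, which is exactly how CLOSE_WAIT connections accumulate.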

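The possible reasons the server fails to call close() are as follows.

The first reason: step 2 was skipped, and the server socket was never registered with epoll. When a new connection arrives, the server has no way to notice the event, so it never obtains the connected socket and naturally never gets a chance to call close() on it.

This is fairly unlikely, though. It is an obvious logic bug that would be caught early, at the code review stage.

The second reason: step 3 was skipped, and accept was not called to obtain the socket when a new connection arrived. When a large number of clients then actively close their connections, the server has no chance to call close() on those sockets, so a large number of CLOSE_WAIT connections appear on the server.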

This can happen when the code gets stuck in some logic or throws an exception before the server reaches the accept call.

The third reason: step 4 was skipped. After obtaining the connected socket through accept, the server did not register it with epoll, so when the FIN later arrives the server cannot notice the event and never gets a chance to call close().

This can happen when the server gets stuck in some logic or throws an exception before registering the connected socket with epoll. I once read someone else's write-up of tracking down a CLOSE_WAIT problem; if you are interested, take a look: an analysis of a large number of CLOSE_WAIT connections caused by non-robust Netty code.

The fourth reason: step 6 was skipped. After noticing that the client has closed the connection, the server does not call close(). The code may simply have forgotten to handle this case, or it may have become stuck before reaching close(), for example in a deadlock.

As you can see, a large number of CLOSE_WAIT connections on the server is usually a problem in the code. We then need to investigate step by step to locate the specific code, and the main question to answer is why the server did not call close().
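
One defensive pattern against the fourth reason is to give the connection handler a single exit path that always ends in close(). The sketch below is one possible shape, under stated assumptions: `process_request` is a stand-in for real application logic (here it simply fails on requests that start with '!'), and `fd_is_open` exists only to check the result.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdbool.h>
#include <stddef.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical application logic: fails when a request starts with '!'. */
static int process_request(const char *buf, size_t len)
{
    return (len > 0 && buf[0] == '!') ? -1 : 0;
}

/* Serve one connection. Whatever happens, control reaches the single
 * close() at the bottom. Returns 0 if the peer closed cleanly, -1 otherwise. */
int handle_connection(int fd)
{
    assert(fd >= 0);
    int rc = -1;
    char buf[4096];

    for (;;) {
        ssize_t n = read(fd, buf, sizeof buf);
        if (n == 0) {           /* peer sent FIN: a TCP socket is now in CLOSE_WAIT */
            rc = 0;
            break;
        }
        if (n < 0) {
            if (errno == EINTR)
                continue;
            break;              /* read error */
        }
        if (process_request(buf, (size_t)n) < 0)
            break;              /* an application failure must not skip close() */
    }
    close(fd);                  /* on the FIN path this is what ends CLOSE_WAIT */
    return rc;
}

/* Helper for checking the result: is fd still an open descriptor? */
bool fd_is_open(int fd)
{
    return fcntl(fd, F_GETFD) != -1;
}
```

You can try it with a local socketpair() instead of a real TCP connection, since read() returning 0 means "the peer has closed" in both cases. Whether the request fails or the peer simply disconnects, the descriptor is closed when `handle_connection` returns.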