Deep dive into the Kubernetes networking model and network communication

Kubernetes defines a simple, consistent network model built on a flat network structure. Pods can communicate efficiently without mapping host ports to container ports and without extra components for forwarding. The model also makes it easy to migrate applications from virtual machines or physical hosts into pods managed by Kubernetes.

This article explores the Kubernetes network model in depth and explains how containers and pods communicate. Implementations of the network model will be covered in a later article.

Kubernetes network model

The model defines:

- Each pod has its own IP address, which is reachable within the cluster

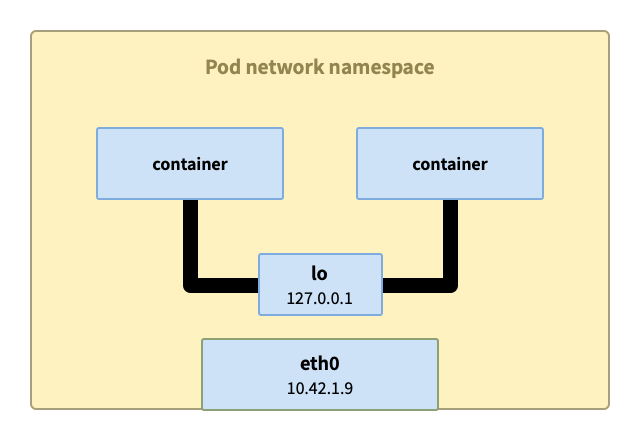

- All containers in a pod share the pod's IP address (and MAC address), and can communicate with each other using localhost

- Pods can communicate with other pods on any node in the cluster using the pod IP address without NAT

- Kubernetes components can communicate with each other and with pods

- Network isolation can be achieved through network policies

Several related components are mentioned in the definition above:

- Pod: A pod in Kubernetes is somewhat similar to a virtual machine with a unique IP address, and pods on the same node share that node's network and storage.

- Container: A pod is a collection of containers that share the same network namespace. A container in a pod is like a process on a virtual machine, and processes can talk to each other over localhost. Each container has its own file system, CPU, memory, and process space. Containers are created by creating pods.

- Node: Pods run on nodes, and a cluster contains one or more nodes. Each pod's network namespace is connected to the node's root network namespace, which is how the pod reaches the network.

Having said so much about network namespaces, how exactly do they work?

How Network Namespaces Work

Create a pod in k3s, a Kubernetes distribution. The pod has two containers: the curl container, which sends requests, and the httpbin container, which provides a web service.

Although k3s is a distribution, it still uses the Kubernetes network model, so it does not get in the way of understanding the model.

    apiVersion: v1
    kind: Pod
    metadata:
      name: multi-container-pod
    spec:
      containers:
      - image: curlimages/curl
        name: curl
        command: ["sleep", "365d"]
      - image: kennethreitz/httpbin
        name: httpbin

Log in to the node and list the network namespaces on the host with lsns -t net. No httpbin process shows up; the command attached to the pod's namespace is /pause. The pause process is an invisible sandbox that exists in every pod.

    lsns -t net
            NS TYPE NPROCS    PID USER     NETNSID NSFS                                                COMMAND
    4026531992 net     126      1 root  unassigned                                                     /lib/systemd/systemd --system --deserialize 31
    4026532247 net       1  83224 uuidd unassigned                                                     /usr/sbin/uuidd --socket-activation
    4026532317 net       4 129820 65535          0 /run/netns/cni-607c5530-b6d8-ba57-420e-a467d7b10c56 /pause

Since each container has its own process space, switch the command to list namespaces of type pid:

    lsns -t pid
            NS TYPE NPROCS    PID USER            COMMAND
    4026531836 pid     127      1 root            /lib/systemd/systemd --system --deserialize 31
    4026532387 pid       1 129820 65535           /pause
    4026532389 pid       1 129855 systemd-network sleep 365d
    4026532391 pid       2 129889 root            /usr/bin/python3 /usr/local/bin/gunicorn -b 0.0.0.0:80 httpbin:app -k gevent

The network namespace that process 129889 (httpbin) belongs to can be found with ip netns identify:

    ip netns identify 129889
    cni-607c5530-b6d8-ba57-420e-a467d7b10c56
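The same membership can also be observed through /proc: on Linux, /proc/<pid>/ns/net is a symbolic link whose target names the network namespace of that process. The following is a minimal Python sketch, assuming a Linux host with /proc mounted and enough privileges to inspect the target processes. The helper names netns_id and same_netns are made up for illustration.

```python
import os
from typing import Optional


def netns_id(pid: int) -> Optional[str]:
    """Return the network namespace identifier of a process, e.g. 'net:[4026532317]'.

    Returns None if /proc is unavailable or the process cannot be inspected.
    """
    try:
        return os.readlink(f"/proc/{pid}/ns/net")
    except OSError:
        return None


def same_netns(pid_a: int, pid_b: int) -> bool:
    """True if both processes can be inspected and share one network namespace."""
    a, b = netns_id(pid_a), netns_id(pid_b)
    return a is not None and a == b


if __name__ == "__main__":
    # Inspect the current process; on the example node you would pass real PIDs.
    print(netns_id(os.getpid()))
```

On the node from the example, same_netns(129820, 129889) would compare the pause process with the httpbin process, which according to the output above live in the same network namespace even though their pid namespaces differ.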

You can then use ip netns exec to run commands inside this namespace:

    ip netns exec cni-607c5530-b6d8-ba57-420e-a467d7b10c56 ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
        link/ether f2:c8:17:b6:5f:e5 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 10.42.1.14/24 brd 10.42.1.255 scope global eth0
           valid_lft forever preferred_lft forever
        inet6 fe80::f0c8:17ff:feb6:5fe5/64 scope link
           valid_lft forever preferred_lft forever

The output shows that the pod's IP address 10.42.1.14 is bound to the interface eth0, and the @if17 suffix shows that eth0 is paired with interface number 17.

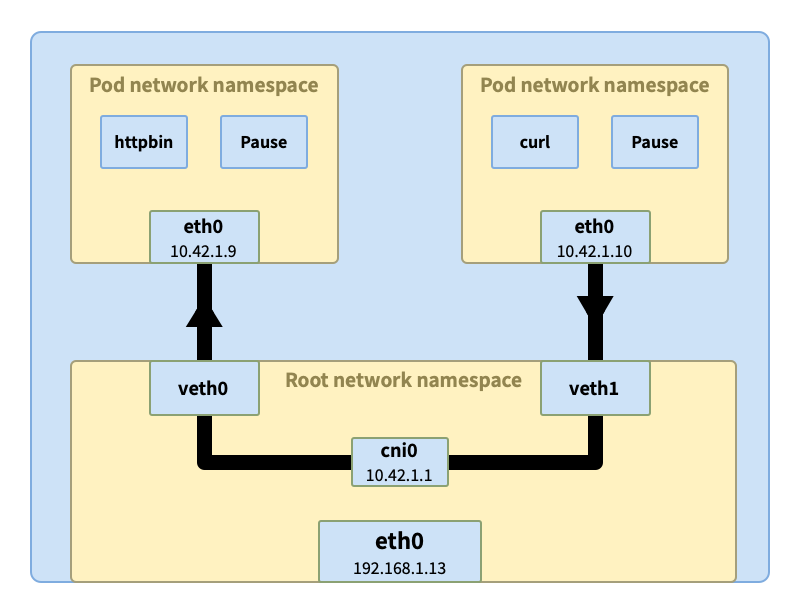

On the node, look up interface 17. veth7912056b is a virtual Ethernet device (veth) in the host's root namespace. It is one end of the tunnel that connects the pod network to the node network; the other end is eth0 in the pod's namespace.

    ip link | grep -A1 ^17
    17: veth7912056b@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default
        link/ether d6:5e:54:7f:df:af brd ff:ff:ff:ff:ff:ff link-netns cni-607c5530-b6d8-ba57-420e-a467d7b10c56
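The pairing of eth0@if17 in the pod and veth7912056b@if2 on the host can be checked mechanically from the two header lines. The sketch below is a small illustrative parser in Python, not part of any Kubernetes tooling; the names Link, parse_link and is_veth_pair are hypothetical. Interface indices are only unique within one network namespace, so a match like this is a hint to be read together with the link-netns information, not proof on its own.

```python
import re
from typing import NamedTuple, Optional


class Link(NamedTuple):
    index: int
    name: str
    peer_index: Optional[int]  # None when the interface has no @ifN suffix


# Matches the first line of an interface entry, e.g. "2: eth0@if17: <BROADCAST,...>"
_LINK_RE = re.compile(r"^(\d+):\s+([^:@\s]+)(?:@if(\d+))?:")


def parse_link(line: str) -> Optional[Link]:
    """Extract index, name and peer index from an `ip link` / `ip a` header line."""
    m = _LINK_RE.match(line.strip())
    if m is None:
        return None
    peer = int(m.group(3)) if m.group(3) else None
    return Link(int(m.group(1)), m.group(2), peer)


def is_veth_pair(a: Link, b: Link) -> bool:
    """Each side names the other's interface index as its peer."""
    return a.peer_index == b.index and b.peer_index == a.index


pod_side = parse_link(
    "2: eth0@if17: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default"
)
host_side = parse_link(
    "17: veth7912056b@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP"
)
print(is_veth_pair(pod_side, host_side))  # True
```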

The output above (master cni0) shows that the veth is attached to a network bridge named cni0.

The bridge works at the data link layer (layer 2 of the OSI model) and connects multiple network segments. When a request reaches the bridge, the bridge asks all attached interfaces (pods are attached through their veth) whether they own the IP address in the request; this is done with ARP. If an interface responds, the bridge records the match (IP -> veth; strictly speaking, the bridge learns which port the responder's MAC address sits behind) and forwards the data.

So what if no interface responds? What happens next depends on the implementation of each network plug-in. I plan to introduce commonly used network plug-ins, such as Calico, Flannel, and Cilium, in a later article.
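The ask-then-remember behaviour of the bridge described above can be sketched as a toy model. This is a deliberately simplified illustration in Python and follows the article's IP -> veth view; a real Linux bridge keys its table on MAC addresses and relies on ARP to resolve IPs. The class name ToyBridge and the second veth/IP pair are assumptions made for the example.

```python
from typing import Dict, Optional


class ToyBridge:
    """Very small model of a bridge such as cni0; not how the kernel implements it."""

    def __init__(self) -> None:
        self.attached: Dict[str, str] = {}  # veth name -> pod IP behind that port
        self.learned: Dict[str, str] = {}   # pod IP -> veth name, filled in on demand

    def attach(self, veth: str, pod_ip: str) -> None:
        self.attached[veth] = pod_ip

    def forward(self, dst_ip: str) -> Optional[str]:
        """Return the veth that should receive the packet, or None if nobody answers."""
        if dst_ip in self.learned:               # already known, no need to ask again
            return self.learned[dst_ip]
        for veth, ip in self.attached.items():   # "ask" every attached interface
            if ip == dst_ip:
                self.learned[dst_ip] = veth      # record the match for later packets
                return veth
        return None                              # no response: handling is plug-in specific


bridge = ToyBridge()
bridge.attach("veth0", "10.42.1.9")
bridge.attach("veth1", "10.42.1.14")
print(bridge.forward("10.42.1.9"))   # veth0
print(bridge.forward("10.42.2.5"))   # None
```

The None case is the "no interface responds" situation, where the packet has to leave the bridge and be handled by whatever the network plug-in has set up.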

Next, let's look at how network communication in Kubernetes is done. There are several types:

- Communication between containers in the same pod

- Communication between pods on the same node

- Communication between pods on different nodes

How Kubernetes networking works

Communication between containers in the same pod

Communication between containers in the same pod is the simplest case. The containers share one network namespace, and every network namespace has a loopback interface lo, so the containers can reach each other through localhost.
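As a rough analogy, two parties that share a network namespace can talk over the loopback address without any pod IP being involved. In the Python sketch below, a background thread stands in for httpbin and the main thread stands in for curl; both live in one process and therefore in one network namespace, much like the two containers of the example pod. The handler class Hello and the function fetch_over_loopback are hypothetical names.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class Hello(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"hello from the server side\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args) -> None:  # keep the example output quiet
        pass


def fetch_over_loopback() -> bytes:
    # Port 0 lets the OS pick a free port; httpbin in the example pod listens on 80.
    server = HTTPServer(("127.0.0.1", 0), Hello)
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    # An empty ProxyHandler makes sure the request is not sent through a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(f"http://127.0.0.1:{port}/") as resp:
            return resp.read()
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(fetch_over_loopback().decode(), end="")
```

In the pod from the example, the equivalent would be running curl against localhost from the curl container, with httpbin answering on port 80.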

Communication between pods on the same node

When curl and httpbin run in two separate pods, the two pods may be scheduled onto the same node. A request sent by curl reaches the eth0 interface of its pod according to the routing table in the container, then reaches the node's root network namespace through veth1, the tunnel connected to eth0.

veth1 is attached to the bridge cni0, as are the virtual Ethernet interfaces (vethX) of the other pods on the node. The bridge asks all attached interfaces whether they own the IP address in the request (10.42.1.9 here). Once it gets a response, the bridge records the mapping (10.42.1.9 => veth0) and forwards the data to that interface. Finally, the data enters the httpbin pod through the veth0 tunnel.

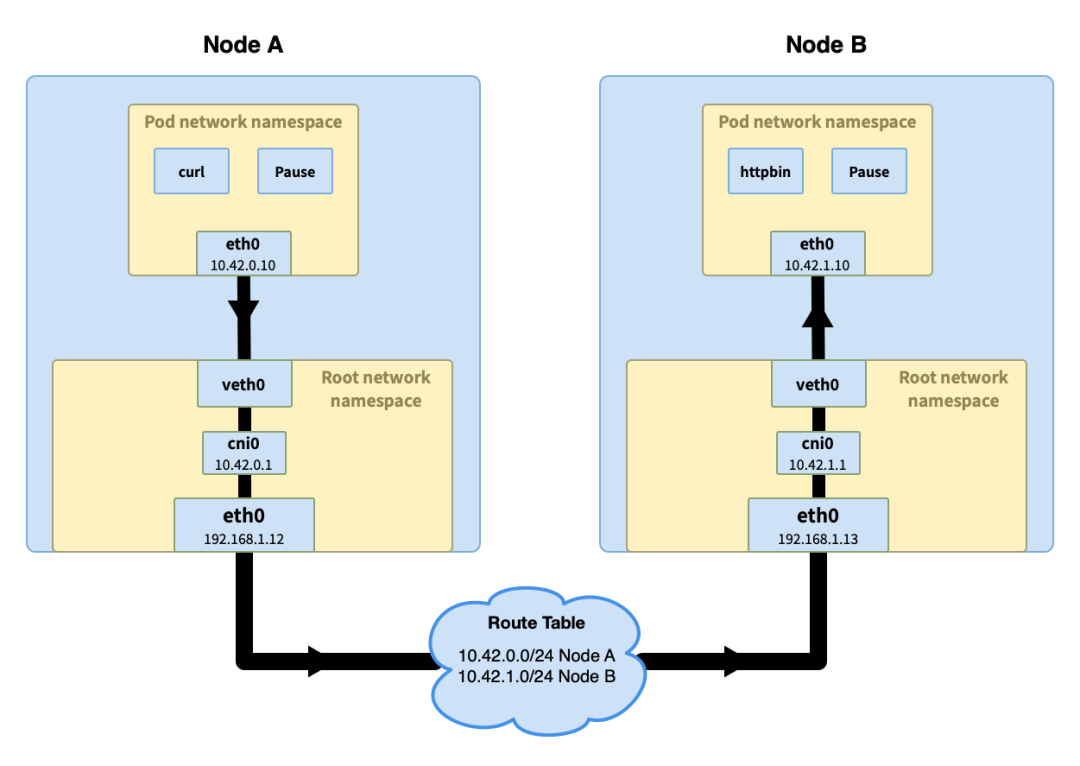

Communication between pods on different nodes

Communication between pods across nodes is more complicated, and different network plug-ins handle it differently. Here we choose an easy-to-understand method to briefly explain.

The first half of the process is the same as for pods on the same node. When the request reaches the bridge, the bridge asks which pod owns the IP but gets no response. The request is then handed to the host's routing process and moves up to the cluster level.

At the cluster level there is a routing table that stores the Pod IP range (Pod CIDR) of each node. A Pod CIDR is allocated to a node when it joins the cluster. For example, the default cluster Pod CIDR in k3s is 10.42.0.0/16, and nodes receive 10.42.0.0/24, 10.42.1.0/24, 10.42.2.0/24, and so on. The node that owns the requested IP can be determined from these per-node ranges, and the request is then sent to that node.
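The lookup from a pod IP to its node can be illustrated with Python's ipaddress module. This is a sketch of the idea only: the node names are hypothetical, and how a real cluster stores and distributes these routes depends on the network plug-in.

```python
import ipaddress
from typing import Dict, List, Optional

CLUSTER_CIDR = ipaddress.ip_network("10.42.0.0/16")  # k3s default mentioned above


def allocate_node_cidrs(nodes: List[str]) -> Dict[str, ipaddress.IPv4Network]:
    """Hand out consecutive /24 subnets of the cluster CIDR, one per node."""
    subnets = CLUSTER_CIDR.subnets(new_prefix=24)
    return {node: next(subnets) for node in nodes}


def node_for_pod_ip(pod_ip: str, table: Dict[str, ipaddress.IPv4Network]) -> Optional[str]:
    """Find the node whose Pod CIDR contains the given pod IP."""
    ip = ipaddress.ip_address(pod_ip)
    for node, cidr in table.items():
        if ip in cidr:
            return node
    return None


table = allocate_node_cidrs(["node-a", "node-b", "node-c"])  # hypothetical node names
print(table["node-b"])                       # 10.42.1.0/24
print(node_for_pod_ip("10.42.1.14", table))  # node-b
```

With this table, the pod IP 10.42.1.14 seen earlier falls into 10.42.1.0/24, so a request for it would be sent to the node that holds that range.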

Summary

Now you should have a preliminary understanding of Kubernetes network communication.

The whole communication process relies on several components working together: the pod network namespace, the pod's Ethernet interface eth0, the virtual Ethernet interface vethX, the network bridge cni0, and so on. Some of these components map one-to-one to pods and share the pod's life cycle. They can be created, associated, and deleted by hand, but pods are short-lived resources that are created and destroyed frequently, so doing this manually is unrealistic.

In practice, the container runtime delegates these tasks to a network plug-in, and network plug-ins follow the CNI (Container Network Interface) specification.

What do network plug-ins do?

- Create a pod (container) network namespace

- Create interfaces

- Create veth pairs

- Set up namespace networking

- Set up static routes

- Configure Ethernet Bridge

- Assign IP address

- Create NAT rules

...
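To make the list above more concrete, the following Python sketch prints iproute2 commands that roughly mirror several of those steps for a single pod. It is a dry run that only builds strings and executes nothing. It is not what any particular plug-in does (real plug-ins typically talk to the kernel directly rather than shelling out), and the function name, the namespace name cni-example, and the gateway address 10.42.1.1 are assumptions made for illustration.

```python
from typing import List


def pod_network_commands(netns: str, host_veth: str, pod_ip: str,
                         gateway: str, bridge: str = "cni0") -> List[str]:
    """Return iproute2 commands that roughly mirror the steps listed above (dry run)."""
    in_ns = f"ip netns exec {netns}"
    return [
        f"ip netns add {netns}",                                            # pod network namespace
        f"ip link add {host_veth} type veth peer name eth0 netns {netns}",  # veth pair, one end in the pod
        f"ip link set {host_veth} master {bridge}",                         # attach the host end to the bridge
        f"ip link set {host_veth} up",
        f"{in_ns} ip link set lo up",                                       # loopback inside the pod
        f"{in_ns} ip addr add {pod_ip} dev eth0",                           # assign the pod IP
        f"{in_ns} ip link set eth0 up",
        f"{in_ns} ip route add default via {gateway}",                      # default route (assumed gateway)
    ]


commands = pod_network_commands("cni-example", "veth7912056b", "10.42.1.14/24", "10.42.1.1")
for cmd in commands:
    print(cmd)
```

Bridge creation, NAT rules, and cleanup when the pod is deleted are left out to keep the sketch short.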

References

- https://www.tigera.io/learn/guides/kubernetes-networking/

- https://kubernetes.io/docs/concepts/services-networking/

- https://matthewpalmer.net/kubernetes-app-developer/articles/kubernetes-networking-guide-beginners.html

- https://learnk8s.io/kubernetes-network-packets