Uncovering the Secret: Why Sending Letters Over Thousands of Miles Can Arrive Instantly

1. Introduction

Two hundred years ago, it took anywhere from ten days to half a month to get a message to someone far away. In the Internet age, we press Enter and the message is delivered, and the result displayed, within seconds.

Have you ever wondered why information can travel so fast?

You might say that information travels at the speed of light, whether through optical fiber or as the electromagnetic waves of 4G/5G, so of course it is fast!

But is it only the speed of light? Then why could the nucleic acid testing system, which normally scans a code and returns a result in seconds, leave a person stuck for more than ten minutes during peak hours? And why does the train ticketing system collapse when everyone rushes to buy tickets?

Intuition tells us the cause: there are many users, and the carrying capacity of any system is limited. To cope with large numbers of users, a mature Internet application therefore relies on a whole arsenal of optimization strategies (the proverbial "eighteen martial arts").

Some of these strategies matter as much as the speed of light, and some have counterparts in everyday experience.

2. The overall process

Before introducing the optimization strategies, it helps to have an overall picture of the journey a network request takes:

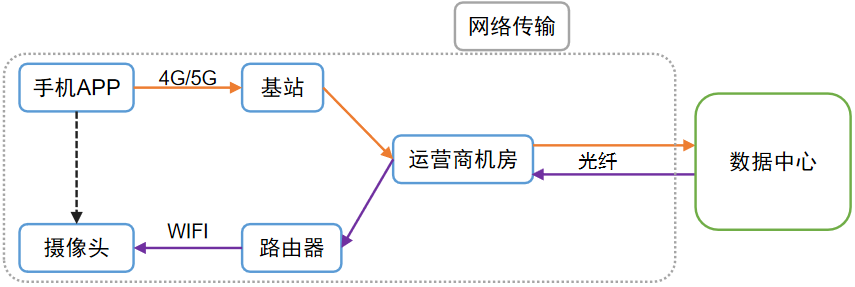

Figure 1 is a schematic of making a camera "turn its head" from an app. It roughly outlines the typical journey of a signal (a network request).

Figure 1 Network transmission process

Put simply, the time spent on a network request comes mainly from network transmission and data center processing.

Specifically: the user taps the button in the app --> the "turn head" command is converted into a binary sequence of 0s and 1s --> the phone emits electromagnetic waves through its built-in antenna --> base station --> the operator's computer room --> optical fiber carries the signal to a data center thousands of miles away (Figure 1).

The data center hosts the system's cloud platform. After the platform has processed the request, it sends out the "turn head" command --> optical fiber transmission --> the router at home, which forwards it to the camera over a wireless signal --> the camera receives the signal and converts it into a sequence of 0s and 1s --> the sequence is restored into instructions the camera software can understand --> the motor is driven to rotate.

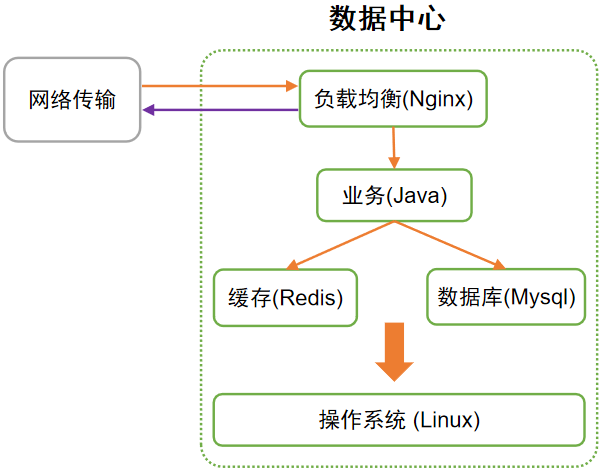

Figure 2 Platform composition of the data center

In the blink of an eye, all of these steps have been completed (and the actual process is far more complicated than this).

This shows that the speed of light only addresses the time spent on long-distance transmission. If any single node gets stuck, overall efficiency plummets, just as a barrel with one short stave can hold only as much water as that stave allows.
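The point can be made with a little arithmetic. The sketch below is only an illustration: the 3,000 km distance and the stage timings are made-up numbers, and the figure of roughly 200,000 km/s for light in optical fiber (about two thirds of its speed in vacuum) is the one physical assumption.

```python
# Assumption: light in optical fiber covers roughly 200,000 km per second
# (about two thirds of its speed in vacuum).
FIBER_KM_PER_S = 200_000


def fiber_delay_ms(distance_km):
    """One-way propagation delay over fiber, in milliseconds."""
    return distance_km * 1000 / FIBER_KM_PER_S


def request_time_ms(stages):
    """The total time of a request is the sum of all its stages."""
    return sum(stages.values())


# Hypothetical stage timings in milliseconds, for illustration only.
healthy = {
    "fiber_out": fiber_delay_ms(3000),
    "data_center": 20,
    "fiber_back": fiber_delay_ms(3000),
}

# Same route, but one node in the data center gets stuck for 5 seconds.
overloaded = dict(healthy, data_center=5000)

print(request_time_ms(healthy), request_time_ms(overloaded))
```

With these numbers the fiber accounts for 30 ms in both cases; it is the stuck node alone that turns a 50 ms request into one that takes about five seconds.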

There are many optimization strategies, and this article will introduce four of the most critical ones: indexing, caching, load balancing, and concurrency.

3. Optimization strategies

3.1 Index

The index is a truly great invention. In one sentence: sort and organize the data in advance, so that it is easy to find when the time comes. When we look up a word in a dictionary, for example, we always go through its index. Without one, we would have to search from the first page to the last, which would be exhausting.

In a data center's databases, tables with tens of millions of rows are commonplace. Taking MySQL as an example, the index is generally a B+ tree, and a row can be located in about 1 to 4 lookups, which takes on the order of tens of milliseconds. Without an index, the whole table has to be traversed, which may take more than ten seconds: a gap of hundreds of times.

You have probably used the file search built into Windows, which is painfully slow; with software such as Everything, the results appear in an instant, which shows just how powerful an index can be. (Note: it is not that Windows uses no index at all, but that it does not use a suitable one.)

Of course, an index is not free: it takes up storage space, and the index tree must be updated whenever the data changes, which costs performance. The price is well worth paying, though. As the saying goes, sharpening the axe does not delay the cutting of firewood.

In the computer world, indexes are everywhere. Due to space constraints, I will not elaborate here; if you are interested, see the table at the end of the article. It is the use of indexes in every link wherever possible, replacing the clumsy "traversal" with an ingenious shortcut, that makes a request as fast as it can be.
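The gap between searching with and without an index can be sketched in a few lines. This is not a B+ tree: a sorted Python list searched with `bisect` stands in for the index, and the `tree_height` helper with a fanout of 1,000 keys per node is an assumed, illustrative figure rather than a MySQL setting.

```python
import bisect


def full_scan(rows, key):
    """Without an index: compare row by row. Returns (position, comparisons)."""
    for i, value in enumerate(rows):
        if value == key:
            return i, i + 1
    return -1, len(rows)


def index_lookup(sorted_keys, key):
    """With a sorted index: binary search via bisect. Returns position or -1."""
    pos = bisect.bisect_left(sorted_keys, key)
    if pos < len(sorted_keys) and sorted_keys[pos] == key:
        return pos
    return -1


def tree_height(row_count, fanout):
    """Levels a tree with the given fanout needs to address row_count rows."""
    height, capacity = 1, fanout
    while capacity < row_count:
        capacity *= fanout
        height += 1
    return height


rows = list(range(0, 2_000_000, 2))  # 1,000,000 sorted even numbers
target = 1_999_998                   # the last row: worst case for a scan

print(full_scan(rows, target))       # touches every one of the rows
print(index_lookup(rows, target))    # needs about 20 comparisons
print(tree_height(10_000_000, 1000))
```

Under the assumed fanout, ten million rows fit in a tree three levels deep, which is consistent with the "1 to 4 lookups" mentioned above.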

3.2 Caching

Why do we scoop salt and MSG from a seasoning box in the kitchen rather than from the packaging bag? Because scooping from the seasoning box is convenient and fast.

Why is delivery from self-operated online stores so fast? Because they keep warehouses all over the country and ship from the one nearest to the customer.

These are the two ideas behind caching in computers: copy frequently used data to a faster place, or to a place closer to the user, so that it can be retrieved much faster.

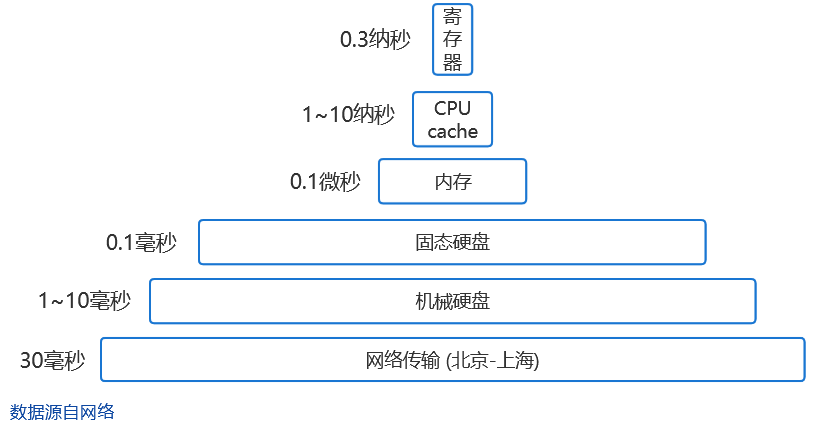

Figure 3 Access times of various computer components

The figure above shows how long a single access takes for each component of a computer. Network transmission and the hard disk are by far the slowest, so both should be avoided whenever possible.

In the data center, take the database (MySQL) as an example again: all of its data is stored on the hard disk, but frequently used hot data is copied into a buffer pool in memory. Reads and writes all go through the buffer pool, so as to minimize the drag that hard disk access puts on overall efficiency.
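The idea of a buffer pool can be sketched as follows. The `BufferPool` class, the dictionary standing in for the hard disk, and the simple least-recently-used eviction rule are all assumptions for illustration; real database buffer pools are considerably more elaborate.

```python
from collections import OrderedDict


class BufferPool:
    """Keeps recently used pages in memory in front of a slow 'disk'."""

    def __init__(self, disk, capacity):
        self.disk = disk            # stand-in for slow storage (a dict here)
        self.capacity = capacity    # how many pages fit in memory
        self.pages = OrderedDict()  # cached pages, least recently used first
        self.disk_reads = 0

    def get(self, key):
        if key in self.pages:
            # Cache hit: no disk access, just mark the page as recently used.
            self.pages.move_to_end(key)
            return self.pages[key]
        # Cache miss: pay for one slow disk read, then keep a copy in memory.
        self.disk_reads += 1
        value = self.disk[key]
        self.pages[key] = value
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)  # evict the least recently used page
        return value


disk = {page: f"row-{page}" for page in range(100)}
pool = BufferPool(disk, capacity=2)
for page in [1, 1, 2, 3, 1]:
    pool.get(page)
print(pool.disk_reads)
```

The second request for page 1 is served from memory. The last one is not, because the pool holds only two pages and page 1 was evicted when page 3 arrived: a cache only helps as long as the hot data fits in it.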

This is the same as when we write a document: when the document is opened, the text is loaded into the computer memory, and the newly written text is also recorded into the memory.

If the computer or program crashes before the document is saved, the newly written text may be lost, because it has not yet been written to the hard disk (data in memory disappears when the power is cut, while data on the hard disk does not).

A database does not have this worry. It has an additional mechanism to ensure that data changes are not lost, namely the write-ahead log: for each operation, a brief record of the modification is written to a log on the hard disk, so that the change can be restored even if the data itself has not yet been saved.

To use a loose analogy, it is like taking notes in class in addition to listening carefully. Even if we forget something after class, we can recall it from the notes.
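A toy version of a write-ahead log might look like the sketch below. `TinyStore`, the JSON-lines log format, and the file name are hypothetical and chosen for brevity; this is not how MySQL lays out its logs, only the same principle of logging to disk first and applying in memory second.

```python
import json
import os
import tempfile


class TinyStore:
    """In-memory key-value store that logs every change to disk first."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()

    def _replay(self):
        """Rebuild the in-memory state by re-applying the log, oldest first."""
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, "r", encoding="utf-8") as log:
            for line in log:
                record = json.loads(line)
                self.data[record["key"]] = record["value"]

    def set(self, key, value):
        # 1. Append the change to the log and force it onto the disk.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # 2. Only then apply it to the fast in-memory copy.
        self.data[key] = value


with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "changes.log")
    store = TinyStore(log_path)
    store.set("balance", 100)
    store.set("balance", 80)
    # Simulate a crash: the in-memory copy is gone, only the log remains.
    recovered = TinyStore(log_path)

print(recovered.data)
```

Appending a short record to the end of a file is far cheaper than rewriting the data in place, which is why the log can afford to touch the disk on every operation.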

3.3 Load Balancing

As the saying goes, there is strength in numbers. Load balancing wins by numbers: it spreads the pressure by adding nodes. In everyday life, if a cafeteria has many serving windows, won't the wait in line be much shorter?

In the data center, a load balancer (such as Nginx) diverts incoming requests and forwards them to the individual back-end nodes.
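The simplest way to divert requests is to hand them out in turn. The sketch below assumes a round-robin policy and three made-up node names; it is a bare illustration of the idea, not a description of how any particular load balancer is implemented.

```python
import itertools
from collections import Counter


class RoundRobinBalancer:
    """Hands each incoming request to the next back-end node in turn."""

    def __init__(self, nodes):
        self._nodes = itertools.cycle(nodes)  # endless a, b, c, a, b, c, ...

    def pick(self):
        return next(self._nodes)


# Hypothetical node names, for illustration only.
balancer = RoundRobinBalancer(["node-a", "node-b", "node-c"])

# Nine requests arrive; count how many each node receives.
assignments = Counter(balancer.pick() for _ in range(9))
print(assignments)
```

Each node ends up with a third of the traffic, just as each cafeteria window serves a share of the queue. Adding a fourth node to the list would cut each node's share to a quarter.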

In the face of traffic peaks, it is very convenient to expand the number of back-end nodes. As long as the platform implements containerized deployment, adding nodes is just a matter of clicking a few buttons.

The database can be load balanced as well, by splitting databases and tables: a large table is split into several small ones, which are distributed across different machines. As the saying goes, a big ship is hard to turn around; switch to several small boats and everything becomes easier and more flexible.

However, splitting databases and tables cannot be done by clicking a few buttons. It involves data migration, code changes, testing, and rollout. Database expansion therefore has to be planned well in advance; otherwise, the system may be overwhelmed during a traffic peak and become unusable for everyone.
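Why splitting a table means migrating data can be seen in a small sketch. It assumes the simplest possible routing rule, a hash of the row's ID modulo the number of small tables; the `shard_for` function and the use of MD5 as a stable hash are illustrative choices, and real systems often use more refined schemes.

```python
import hashlib


def shard_for(user_id, shard_count):
    """Map an ID to one of shard_count small tables using a stable hash."""
    digest = hashlib.md5(str(user_id).encode("utf-8")).hexdigest()
    return int(digest, 16) % shard_count


user_ids = range(1000)

# Where each row lives with 4 small tables, and where it would live with 5.
before = {uid: shard_for(uid, 4) for uid in user_ids}
after = {uid: shard_for(uid, 5) for uid in user_ids}

# Rows whose home changes have to be physically moved to another machine.
moved = sum(1 for uid in user_ids if before[uid] != after[uid])
print(f"{moved} of {len(user_ids)} rows must migrate")
```

With this naive rule, going from four tables to five relocates most of the rows, which is one reason the expansion has to be planned early rather than improvised during a traffic peak.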

3.4 Concurrency

There may be strength in numbers, but what if each individual is too weak? How do we make the individual stronger, that is, how do we improve the performance of a single server?

Concurrency is one way of thinking about it. Today's computers can play songs, chat on WeChat, and download videos at the same time, and we take this for granted.

On early computers, however, only one program could run for one user at a time, which was quite inconvenient.

Later, the time-sharing operating system solved this problem. The principle is to divide CPU time into slices (tens to hundreds of milliseconds each) and hand them to different programs in turn (this refers to a single-core CPU). This embodies the idea of concurrency: several tasks take turns at the micro level, yet appear to run simultaneously at the macro level.

You may think: isn't this cheating? Surely the efficiency of each program drops significantly?

In fact, a program (or process) does not occupy the CPU all the time. It often rests (that is, blocks), for example while reading or writing the hard disk or waiting for the response to a network request. During that time the CPU would otherwise sit idle, so why not hand it over to another program?

It is just like making tea: the wise approach is to wash the teacups and put in the tea leaves while the water is boiling, instead of waiting for the water to boil before doing anything else.

The operating system's time-slice rotation strategy therefore makes full use of the CPU on the one hand, while on the other hand users cannot perceive the pauses that happen at the micro level.
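The benefit of using the waiting time can be measured directly. The sketch below does not use operating-system time slices; it swaps in Python's `asyncio`, where tasks in a single thread voluntarily give up the CPU while they wait. The task names and the 0.05-second wait, which stands in for a disk or network delay, are made up.

```python
import asyncio
import time


async def blocking_task(name, wait_s):
    """A task that spends its time waiting, like a disk read or network call."""
    await asyncio.sleep(wait_s)  # while waiting, the CPU is free for others
    return name


async def run_sequentially(names, wait_s):
    """Boil the water first, and only then wash the cups."""
    return [await blocking_task(name, wait_s) for name in names]


async def run_concurrently(names, wait_s):
    """Start every task, and let each one wait while the others proceed."""
    return await asyncio.gather(*(blocking_task(name, wait_s) for name in names))


def timed(coro):
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


names = ["music", "chat", "download"]
_, sequential_s = timed(run_sequentially(names, 0.05))
results, concurrent_s = timed(run_concurrently(names, 0.05))
print(f"sequential: {sequential_s:.2f}s, concurrent: {concurrent_s:.2f}s")
```

Run one after another, the three waits add up; run concurrently, they overlap, so the total is close to a single wait. No task became faster, the idle time was simply put to use.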

3.5 CPU

Finally, let's talk about one more important factor: the CPU.

You may have heard that today's software is complex, routinely running to hundreds of thousands of lines of code. In fact, a single network request involves a vast amount of code, yet all of it completes within a few milliseconds to a few tens of milliseconds, far faster than human thinking can follow.

This is inseparable from the high performance of the CPU. A common CPU today can execute billions of instructions per second, whereas the earliest CPUs could execute only hundreds of thousands. Without the speed of the CPU, the delicate and complex system that is the Internet would be out of the question.

At this very moment, as you stare at the screen of your phone or computer, the CPU, the hero behind the scenes, is doing all kinds of work at a speed we can hardly imagine.

Of course, a higher CPU frequency does not by itself mean better software performance. Many other factors are involved, so I believe domestic CPUs still have a chance to catch up from behind.

4. Conclusion

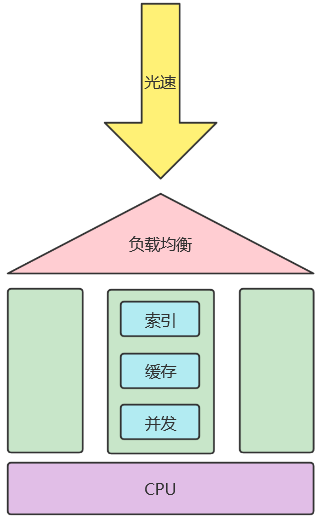

A simple diagram (Figure 4) summarizes this article: the speed of light is a necessary condition for "instantaneous" transmission; when there are too many users to handle, nodes are added through load balancing; each node can use indexing, caching, and concurrency to greatly improve its performance; and the CPU is the basis for fast processing at every node on the link.

Figure 4 Optimization strategy for network requests

The optimization strategies for network requests go well beyond those mentioned above. I/O multiplexing, pooling, batch processing, peak shaving and valley filling, and others are just as important and should not be underestimated.

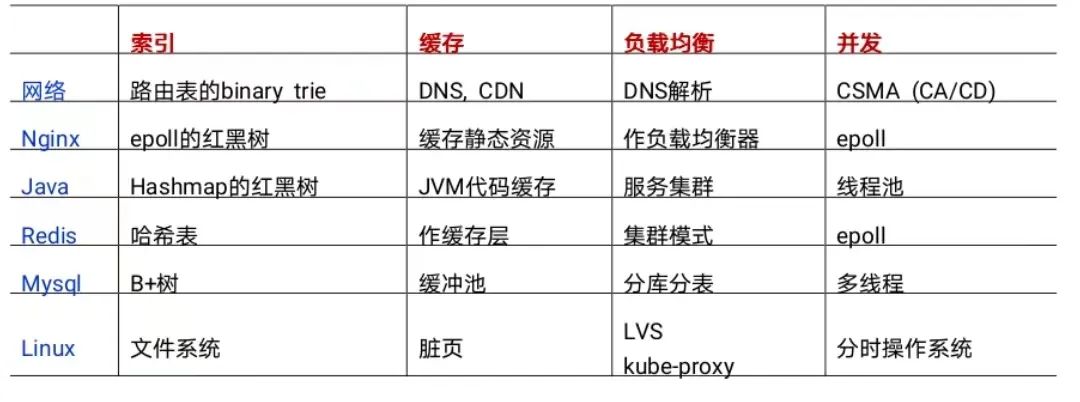

Examples of the optimization strategies mentioned in this article, for each link of a network request, are summarized in the following table:

Table 1 Examples of optimization strategies for each link on the network request link