The Network Can Be This Fast: Network Optimization Practice for Quick Payment Transactions

Introduction

In recent years, as mobile payment technology has matured and payment scenarios have spread, scan-to-pay has become an important part of daily life and brings considerable convenience. The bank's quick payment system is a key link in the mobile payment infrastructure, and its stability directly affects the payment experience of every consumer.

Because the quick payment business involves frequent interactions, the quick payment system must handle a huge number of transaction requests every day. At the network level, this traffic is characterized by high-frequency, short-lived connections: connections are established and released quickly. The connections discussed here are carried over TCP (Transmission Control Protocol). In recent years, Bank G has continued to optimize its quick payment network, steadily raising the transaction success rate and improving the customer experience of quick payment.

Introduction to the TCP protocol

TCP is a connection-oriented, reliable transport protocol. Its operation is divided into three phases: connection establishment, data transmission, and connection termination. A connection is identified by the commonly cited five-tuple: source IP address, source port, destination IP address, destination port, and transport layer protocol (such as TCP).

To ensure reliable delivery, TCP assigns sequence numbers to the data it sends, which also lets the receiving end deliver the data in order. The receiver returns an acknowledgment (ACK) for the bytes it has successfully received. If the sender does not receive an acknowledgment within a reasonable round-trip time (RTT), it retransmits the corresponding data.

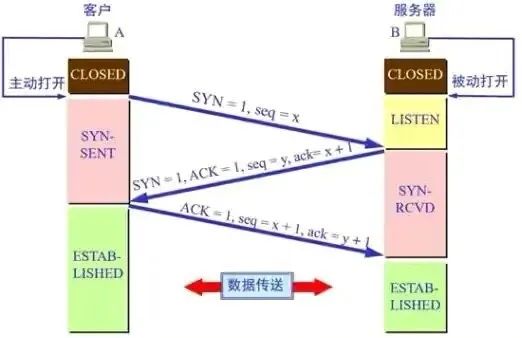

First, TCP establishes a connection through a "three-way handshake", as shown in Figure 1:

Figure 1 TCP protocol three-way handshake

1. The client sends a TCP segment with the SYN flag to the server and enters the SYN-SENT state;

2. After receiving the client's segment, the server sends a TCP segment with the SYN and ACK flags and enters the SYN-RCVD state;

3. After receiving the server's segment with the SYN and ACK flags, the client sends a TCP segment with the ACK flag and enters the ESTABLISHED state. When the server receives this ACK segment from the client, it also enters the ESTABLISHED state, and the two sides begin exchanging data.
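The three steps above can be replayed as a small state machine. The following is a minimal sketch, not a TCP implementation: it uses no sockets, and the table names `CLIENT` and `SERVER` and the function `handshake` are illustrative names introduced here.

```python
# Transition tables: (current state, segment received) -> (next state, reply).
# Names are illustrative; only the states and flags come from the steps above.
CLIENT = {
    ("SYN-SENT", "SYN+ACK"): ("ESTABLISHED", "ACK"),
}
SERVER = {
    ("LISTEN", "SYN"): ("SYN-RCVD", "SYN+ACK"),
    ("SYN-RCVD", "ACK"): ("ESTABLISHED", None),
}


def handshake():
    """Replay the three-way handshake; return final states and the trace."""
    # Step 1: the client has just sent SYN and is in SYN-SENT.
    client, server = "SYN-SENT", "LISTEN"
    trace = [("client", "SYN", client)]
    segment, to_server = "SYN", True
    while segment is not None:
        if to_server:
            server, reply = SERVER[(server, segment)]
            if reply is not None:
                trace.append(("server", reply, server))
        else:
            client, reply = CLIENT[(client, segment)]
            if reply is not None:
                trace.append(("client", reply, client))
        # The reply (if any) travels in the opposite direction.
        segment, to_server = reply, not to_server
    return client, server, trace


if __name__ == "__main__":
    client_state, server_state, trace = handshake()
    for sender, flags, state in trace:
        print(f"{sender} sends {flags}, then is in {state}")
```

Each trace entry records who sent a segment, its flags, and the sender's state afterwards, so the trace lines up with steps 1 to 3.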

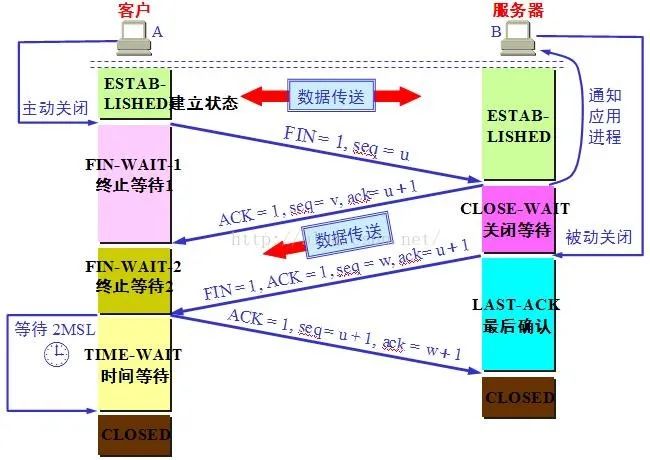

After the three-way handshake is completed, the client and the server have established a TCP connection and start transmitting data. When data transmission is finished, TCP terminates the connection through a "four-way wave" (taking a close initiated by the client as an example):

Figure 2 TCP protocol four-way wave

1. The client actively sends a TCP segment with the FIN flag and enters the FIN-WAIT-1 state;

2. After receiving the client's FIN segment, the server sends a TCP segment with the ACK flag and enters the CLOSE-WAIT state;

3. The client enters the FIN-WAIT-2 state after receiving the server's ACK segment. The server then sends a TCP segment with the FIN and ACK flags to the client and enters the LAST-ACK state;

4. After receiving the server's segment with the FIN and ACK flags, the client sends a TCP segment with the ACK flag to the server and enters the TIME-WAIT state. The server closes the connection when it receives the client's ACK segment, and the client closes the connection after the TIME-WAIT state times out (twice the maximum segment lifetime, 2MSL).
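The state changes on each side during the four steps above can be written as lookup tables and replayed. This is a minimal sketch that assumes the client closes first, as in the example; the event labels and the helper names `replay` and `time_wait_seconds` are introduced here for illustration.

```python
# (current state, event) -> next state, for a close initiated by the client.
CLIENT_CLOSE = {
    ("ESTABLISHED", "send FIN"): "FIN-WAIT-1",
    ("FIN-WAIT-1", "recv ACK"): "FIN-WAIT-2",
    ("FIN-WAIT-2", "recv FIN+ACK"): "TIME-WAIT",  # client replies with ACK
    ("TIME-WAIT", "2MSL timeout"): "CLOSED",
}
SERVER_CLOSE = {
    ("ESTABLISHED", "recv FIN"): "CLOSE-WAIT",    # server replies with ACK
    ("CLOSE-WAIT", "send FIN+ACK"): "LAST-ACK",
    ("LAST-ACK", "recv ACK"): "CLOSED",
}


def replay(table, state, events):
    """Apply events in order and return every state visited."""
    states = [state]
    for event in events:
        state = table[(state, event)]
        states.append(state)
    return states


def time_wait_seconds(msl_seconds):
    """TIME-WAIT lasts twice the maximum segment lifetime (2MSL)."""
    return 2 * msl_seconds


if __name__ == "__main__":
    client_events = ["send FIN", "recv ACK", "recv FIN+ACK", "2MSL timeout"]
    server_events = ["recv FIN", "send FIN+ACK", "recv ACK"]
    print("client:", " -> ".join(replay(CLIENT_CLOSE, "ESTABLISHED", client_events)))
    print("server:", " -> ".join(replay(SERVER_CLOSE, "ESTABLISHED", server_events)))
```

Only the side that closes first passes through TIME-WAIT, which is why the later sections focus on the device that initiates the close of connection 2.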

Introduction to the quick payment architecture

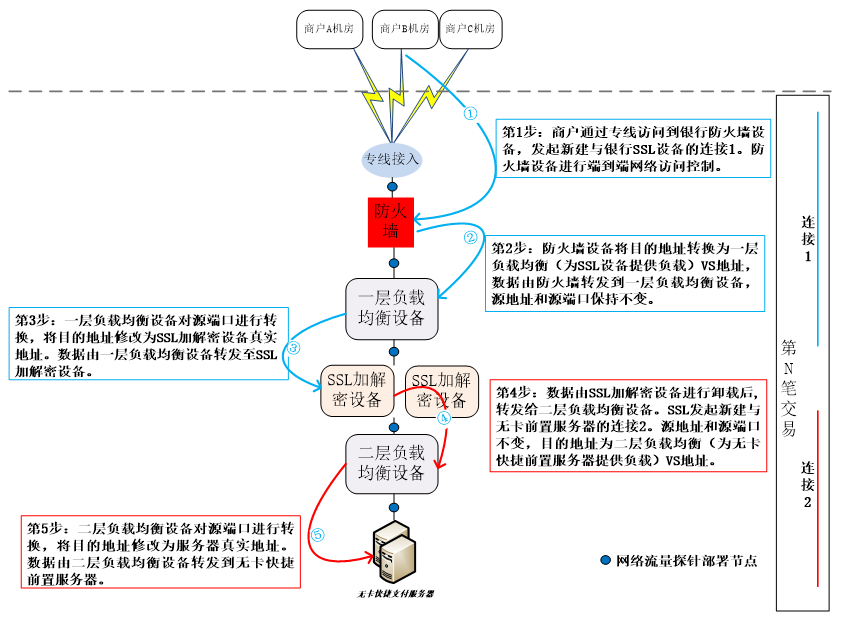

The business flow of the quick payment system is usually as follows: a merchant initiates a quick payment transaction request, which reaches the DMZ of the bank's third-party intermediary business over the operator's dedicated line. After passing through the firewall, load balancing, and encryption/decryption devices inside the bank, the request is handed to the bank's quick payment system. Each transaction establishes two connections. Specifically, the merchant first establishes a TCP connection with the bank's encryption/decryption device through the "three-way handshake" (connection 1 in Figure 3) and sends the transaction request, and the encryption/decryption device decrypts the encrypted message. The device then establishes a new connection (connection 2 in Figure 3) with the bank's cardless quick payment front-end server through the "three-way handshake" in proxy mode, that is, using the merchant's address and port. After back-end processing, the bank returns the transaction result to the merchant. Once the transaction is complete, the merchant terminates connection 1 with the encryption/decryption device through the "four-way wave", and the encryption/decryption device then terminates connection 2 with the back-end server.
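To make the relationship between the two connections concrete, the sketch below models each connection as a five-tuple and derives connection 2 from connection 1 in proxy mode. All addresses and ports are hypothetical placeholders from documentation address ranges, not values from Bank G, and the names `FiveTuple` and `proxy_connection` are introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FiveTuple:
    """Identity of a connection: addresses, ports, and transport protocol."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str = "TCP"


def proxy_connection(conn1, backend_ip, backend_port):
    """Build connection 2 in proxy mode: the source address and port of
    connection 1 are kept, and only the destination changes."""
    return FiveTuple(conn1.src_ip, conn1.src_port,
                     backend_ip, backend_port, conn1.protocol)


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    conn1 = FiveTuple("192.0.2.10", 50000, "198.51.100.20", 443)
    conn2 = proxy_connection(conn1, "203.0.113.30", 8443)
    print(conn1)
    print(conn2)
```

Because connection 2 inherits the source address and port of connection 1, two merchant connections that share a source port also produce identical five-tuples for connection 2 whenever they reach the same back-end destination.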

To keep the quick payment business running safely and stably, Bank G has deployed network traffic analysis tools that capture and analyze traffic in real time at multiple network nodes and monitor quick payment transactions. Every failed transaction is analyzed retrospectively, and the findings serve as the basis for continuous optimization and improvement.

Figure 3 Schematic diagram of fast payment architecture

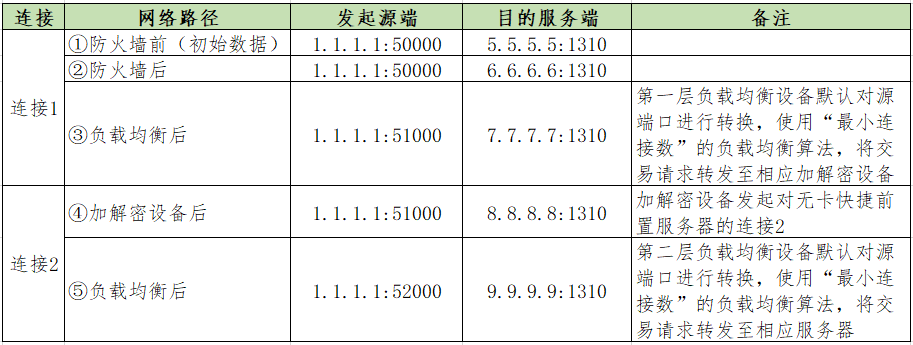

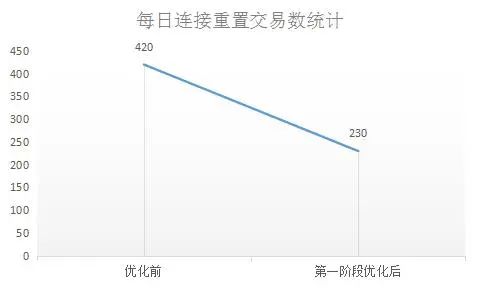

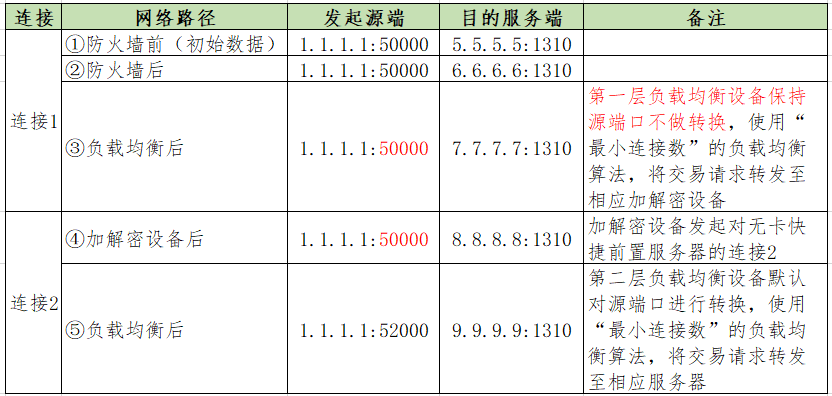

Table 4 shows the source and destination addresses and ports of the two connections at each point along the network path of a quick payment transaction at Bank G. The bank firewall performs access control on the transaction and translates the destination address (that is, the address of the bank's encryption/decryption device); the first-layer load balancer distributes load across the encryption/decryption devices; and the second-layer load balancer distributes load across the cardless quick payment front-end servers.

Table 4 Quick payment TCP connection source and destination addresses and ports

Rapid source port reuse on the load balancer: problem and optimization

In early day-to-day operations, operations staff reported a small number of failed quick payment transactions every day. After capturing and analyzing packets, the network administrators found that the encryption/decryption device sometimes actively sent a Reset message to the merchant, which does not match the merchant-initiated close described above. Network staff then analyzed the abnormal connections one by one on the network traffic analysis platform. They first analyzed traffic on the side of the encryption/decryption device that raised the disconnection alarm and confirmed that the device actively sent a Reset message to the merchant, as shown in Figure 5:

Figure 5 The encryption and decryption device actively sends a Reset message to the merchant

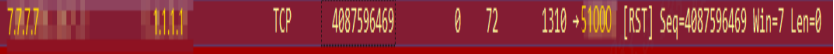

To pin down the root cause, the technicians followed the trail and analyzed traffic at the first-layer load balancing node, where they examined the load balancer's source port translation mechanism. Because of port translation, the source port used by a previous connection was reused very quickly, as shown in Figure 6:

Figure 6 Rapid source port reuse on the first-layer load balancer

The reason for the rapid reuse is as follows. After terminating connection 1 with the encryption/decryption device through the "four-way wave", the merchant sends a Reset message so that the connection is reclaimed quickly; the first-layer load balancer and the encryption/decryption device therefore reclaim connection 1 quickly. The next new connection may then reuse the source port of the previous one. Because the encryption/decryption device has already reclaimed the previous connection 1, the new connection 1 on the reused source port is established normally, as shown in Figure 7:

Figure 7 Connection 1 on the reused source port is established normally

As described above, once the new connection 1 is established, the encryption/decryption device uses the source address and source port of connection 1 to initiate connection 2 to the back-end cardless quick payment front-end server (the destination is the VS, or virtual server, address of the second-layer load balancer). Connection 2, however, is closed with the standard "four-way wave": the encryption/decryption device sends the final TCP segment with the ACK flag to the server and enters the TIME-WAIT state. Until TIME-WAIT expires and the connection is reclaimed, the device cannot accept a new connection with the same five-tuple. If such a connection appears during this window, it cannot be established, and the encryption/decryption device sends a Reset to the merchant and drops the connection. The higher the transaction volume, the higher the probability of a five-tuple conflict, and the more failed transactions there are. At the business level, a quick payment transaction initiated by the customer fails and must be initiated again, which harms the customer experience.
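The conflict can be illustrated with a small model of the behavior described above. This is a sketch under stated assumptions: it models only the rule that a five-tuple in TIME-WAIT cannot be reused, the class `TimeWaitTable` and its methods are names introduced here, and the addresses, ports, and timings are hypothetical.

```python
class TimeWaitTable:
    """Tracks five-tuples that are still in TIME-WAIT on one device."""

    def __init__(self, time_wait_s):
        self.time_wait_s = time_wait_s
        self._expiry = {}  # five-tuple -> time at which TIME-WAIT ends

    def close(self, five_tuple, now):
        """Record an active close: the tuple stays in TIME-WAIT for a while."""
        self._expiry[five_tuple] = now + self.time_wait_s

    def try_connect(self, five_tuple, now):
        """Return True if a new connection with this tuple can be set up."""
        expiry = self._expiry.get(five_tuple)
        if expiry is not None and now < expiry:
            # Still in TIME-WAIT: in the scenario above, this is where the
            # device sends a Reset to the merchant.
            return False
        self._expiry.pop(five_tuple, None)
        return True


if __name__ == "__main__":
    # Hypothetical five-tuple of connection 2 (merchant source kept by proxy mode).
    conn2 = ("192.0.2.10", 50000, "203.0.113.30", 8443, "TCP")
    table = TimeWaitTable(time_wait_s=1.0)
    table.close(conn2, now=0.0)
    print(table.try_connect(conn2, now=0.3))  # reuse inside the window
    print(table.try_connect(conn2, now=1.2))  # reuse after the window
```

In this model a reused source port fails only when it arrives on the same five-tuple before TIME-WAIT has expired, which is why the conflict probability rises with transaction volume.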

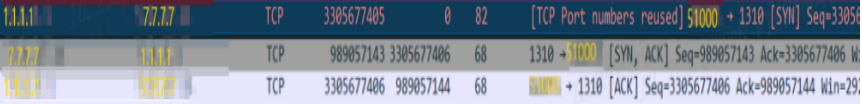

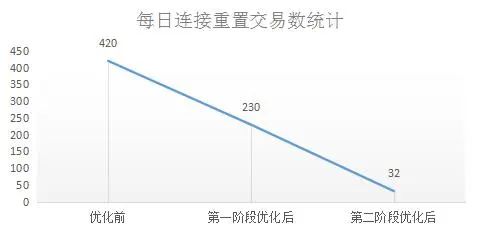

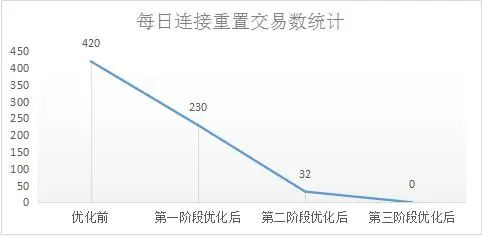

With the cause and mechanism clear, the optimization goal was to prevent connection 2 from failing because of TIME-WAIT, while ensuring that no optimization step introduced new impact on production transactions. After weighing the relevant factors, the network technicians carried out the work in stages. In the first stage, they set source port translation on the first-layer load balancer to preserve mode, so that the source port of connection 1 initiated by the merchant is no longer translated. This reduces the probability that a new connection 1 reuses the source port of a previous connection. After this change, the number of daily connection resets dropped to some extent, as shown in Figure 8.

Figure 8 Change in connection reset transactions after the first-layer load balancer was set to preserve source ports

Table 9 shows the source and destination addresses and ports of two connections flowing through each network path in a quick payment transaction after optimization.

Table 9 Quick payment TCP connection source and destination addresses and ports

Rapid source port reuse on the merchant side: problem and optimization

After source port translation was disabled on the first-layer load balancer, the network technicians found that connection resets still occurred every day. They therefore extended the traffic analysis to the nodes closest to the merchant and found that source ports were also being reused rapidly when the merchant sent transaction requests, as shown in Figure 10:

Figure 10 Rapid source port reuse on the merchant side

At this point there were two optimization approaches. The first follows the approach used for source port reuse on the first-layer load balancer: reduce the probability that a connection 1 with the same five-tuple appears. The second, shortening the time connection 2 takes to be reclaimed, is taken up later. Rapid source port reuse on the merchant side is outside the maintenance scope of Bank G's network technicians and is therefore difficult to optimize directly. However, since the merchant establishes connection 1 with the bank's encryption/decryption devices, the same effect can be sought by optimizing on the destination side.

The first-layer load balancer, which distributes connection 1 across the encryption/decryption devices at the destination, uses the "least connections" algorithm by default: based on the current connection state of the back-end servers, it dynamically selects the server with the fewest outstanding connections and forwards the merchant's transaction to that one of the multiple encryption/decryption devices. The "round robin" algorithm instead assigns transaction requests to the back-end servers in turn, which reduces the probability that a connection 1 with the same five-tuple appears on the same device. In the cardless quick payment scenario, every encryption/decryption device handles connections in the same way, so after switching to "round robin" the number of connections per device remains relatively balanced.
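The difference between the two algorithms can be sketched as follows. This is an illustration only, not the load balancer's actual implementation: the device names are hypothetical, and the rule that ties go to the first device is an assumption made here to show one way in which "least connections" can keep choosing the same device when connections are very short.

```python
from itertools import cycle, islice


def round_robin_picks(devices, n):
    """Return the devices chosen for n consecutive requests, taken in turn."""
    return list(islice(cycle(devices), n))


def least_connections_pick(active):
    """Return the device with the fewest active connections.

    Ties go to the first device in the mapping; that tie-break is an
    assumption of this sketch, not documented load balancer behavior.
    """
    return min(active, key=active.get)


devices = ["device-a", "device-b", "device-c"]  # hypothetical names

# Short connections: each one has closed before the next request arrives,
# so every device shows zero active connections at selection time.
idle = {name: 0 for name in devices}
least_conn_picks = [least_connections_pick(idle) for _ in range(4)]
rr_picks = round_robin_picks(devices, 4)

if __name__ == "__main__":
    print("least connections:", least_conn_picks)
    print("round robin:      ", rr_picks)
```

Under these assumptions, "least connections" sends consecutive requests to the same device, while "round robin" spreads them out, so a reused source port is less likely to land on the device that handled its predecessor.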

After thorough testing, the network technicians carried out the second stage of optimization and changed the first-layer load balancing algorithm to "round robin". The number of daily connection reset transactions fell further, which shows that this step was also effective, as shown in Figure 11:

Figure 11 Change in connection reset transactions after the first-layer load balancing algorithm was optimized

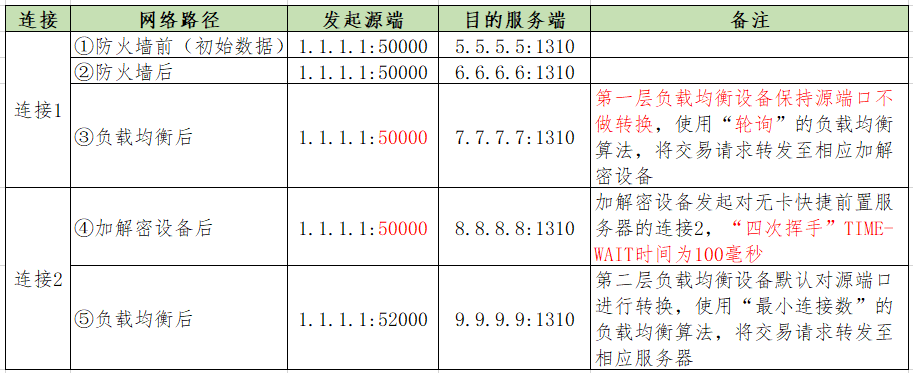

However, a very small number of connection resets remained. Because the quick payment transaction volume is large, even with the first-layer algorithm set to "round robin", a few connections on rapidly reused source ports were still distributed to the same encryption/decryption device. The network technicians therefore turned to the second approach: shortening the time it takes for connection 2 to be reclaimed. If connection 2 is reclaimed quickly enough, it can be established normally even when connection 1 arrives with the same five-tuple. As described above, connection 2 is closed by the encryption/decryption device through the "four-way wave", so the device waits in TIME-WAIT, and the length of TIME-WAIT determines how long connection 2 takes to be reclaimed. After thorough testing and a batched pilot, the technicians reduced the TIME-WAIT time on the encryption/decryption devices from 1 second to 100 milliseconds. After this third stage, the number of daily connection reset transactions fell to 0, as shown in Figure 12:
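The effect of shortening TIME-WAIT can be checked with simple arithmetic. In the sketch below, the 1000 ms and 100 ms values come from the text, while the reuse gaps are hypothetical numbers chosen for illustration, not measured data; `reuse_rejected` is a name introduced here.

```python
def reuse_rejected(gap_ms, time_wait_ms):
    """A reused five-tuple is rejected if it arrives before TIME-WAIT expires."""
    return gap_ms < time_wait_ms


# Hypothetical gaps (in ms) between closing connection 2 and the next
# connection with the same five-tuple; these are not measurements.
gaps_ms = [50, 150, 400, 900, 1500]

rejected_before = [g for g in gaps_ms if reuse_rejected(g, 1000)]  # TIME-WAIT = 1 s
rejected_after = [g for g in gaps_ms if reuse_rejected(g, 100)]    # TIME-WAIT = 100 ms

if __name__ == "__main__":
    print("rejected with 1000 ms TIME-WAIT:", rejected_before)
    print("rejected with 100 ms TIME-WAIT: ", rejected_after)
```

In this model only reuse that happens within 100 ms of the close would still be rejected, so the window in which a conflict can occur shrinks to a tenth of its former length.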

Figure 12 Change in connection reset transactions after TIME-WAIT optimization on the encryption/decryption devices

Table 13 shows the source and destination addresses and ports of two connections flowing through each network path in a quick payment transaction after the overall optimization.

Table 13 Quick Payment TCP connection source and destination addresses and ports

Summary

The quick payment business is characterized by short connections and high-frequency transactions at both the application and network levels. After three key stages of network optimization, the success rate of Bank G's quick payment transaction system improved significantly, providing strong support for the large volume of daily concurrent transactions and for traffic peaks during e-commerce promotions such as "Double Eleven" and "Double Twelve".

As financial business develops and transforms, the Information Technology Department of Bank G continues to deepen its "123+N" digital banking development system in pursuit of high-quality development, drawing on technology and using financial technology to meet new challenges. The network team will continue to take the business perspective: focusing on key business transaction scenarios, optimizing network transmission paths under the distributed architecture, improving transaction response times, strengthening the underlying infrastructure, and improving customer service and user experience, to help take the bank's business to a new level.