HTTP/3 is here. What have you learned?

HTTP 3.0 is the third major version of the HTTP protocol. Its predecessors are HTTP 1.0 and HTTP 2.0, although in my view HTTP 1.1 is the real HTTP 1.0.

We all know that HTTP is an application-layer protocol. Data produced by the application layer is carried to other hosts on the Internet by a transport-layer protocol, and for every version of HTTP up to and including HTTP 2.0 that carrier has been TCP.

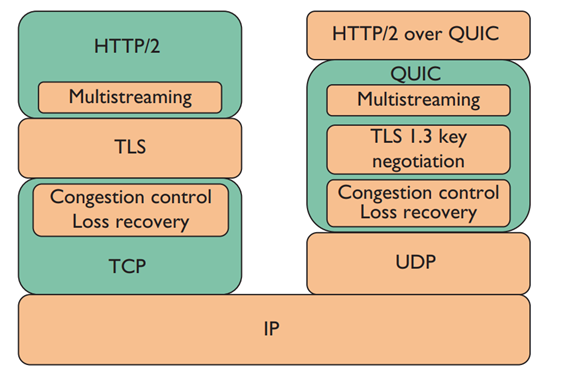

However, as the shortcomings of TCP became more and more apparent, the new generation of HTTP, HTTP 3.0, cut its ties with TCP and embraced UDP instead. That is not quite accurate, though: HTTP 3.0 actually embraces the QUIC protocol, and QUIC is built on top of UDP.

HTTP 3.0

HTTP 3.0 was officially published on June 6, 2022, when the IETF standardized it as RFC 9114. The step from HTTP 2.0 to HTTP 3.0 is actually much smaller than the step from HTTP 1.1 to HTTP 2.0. The main improvement is efficiency: by replacing TCP with UDP, HTTP 3.0 achieves lower latency, and it is reported to be more than 3 times faster than HTTP 1.1 in some cases.

In fact, each generation of HTTP has been developed in response to the shortcomings of the previous one. For example, the biggest problems of HTTP 1.0 were transmission security and the lack of support for persistent connections. In response, HTTP 1.1 made persistent connections (the Keep-Alive mechanism) the default, and TLS was layered underneath HTTP to secure communication. But at this point the concurrency of HTTP was still not good enough.

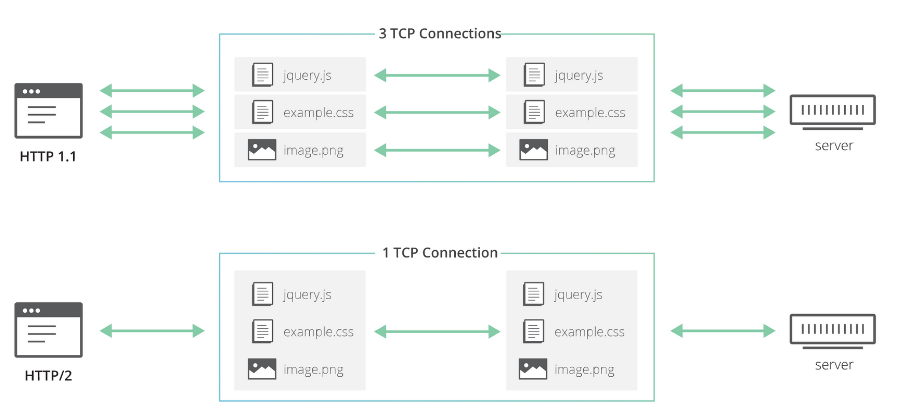

As the web continues to grow and the number of resources (CSS, JavaScript, images, etc.) required by each website increases year by year, browsers need more and more concurrency when fetching and rendering web pages. But because an HTTP 1.1 connection can carry only one request/response exchange at a time, the only way to gain concurrency at the network layer was to open multiple TCP connections in parallel to the same origin, and doing so defeats the purpose of Keep-Alive.

Then came the SPDY protocol, which mainly addressed the inefficiency of HTTP 1.1 by reducing latency, compressing headers, and so on; the Chrome browser demonstrated that these optimizations work. Later, HTTP 2.0 was built on SPDY and introduced the concept of **streams**, which allows different HTTP exchanges to be multiplexed onto the same TCP connection so that the browser can reuse it.

The main job of TCP is to deliver the entire stream of bytes from one endpoint to the other in the correct order. When some packets in the stream are lost, TCP has to retransmit them, and the data behind them cannot be handed to HTTP until the retransmitted packets reach the receiving endpoint. This is known as the TCP head-of-line blocking problem.

Some people may then consider modifying TCP itself. In practice this is an impossible task: TCP has existed for so long that it is built into all kinds of devices, and because the protocol is implemented by the operating system, updating it everywhere is not realistic.

For this reason, Google created the QUIC protocol on top of UDP, and HTTP/3 runs over it. HTTP/3 was previously called HTTP-over-QUIC, and the name itself shows that the biggest change in HTTP/3 is the use of QUIC.

QUIC protocol

Written in lowercase, QUIC is quic, which sounds like quick and means fast. It is a UDP-based transport protocol proposed by Google, and the name originally stood for Quick UDP Internet Connections.

The first feature of QUIC is speed. Why is it fast, and where does the speed come from?

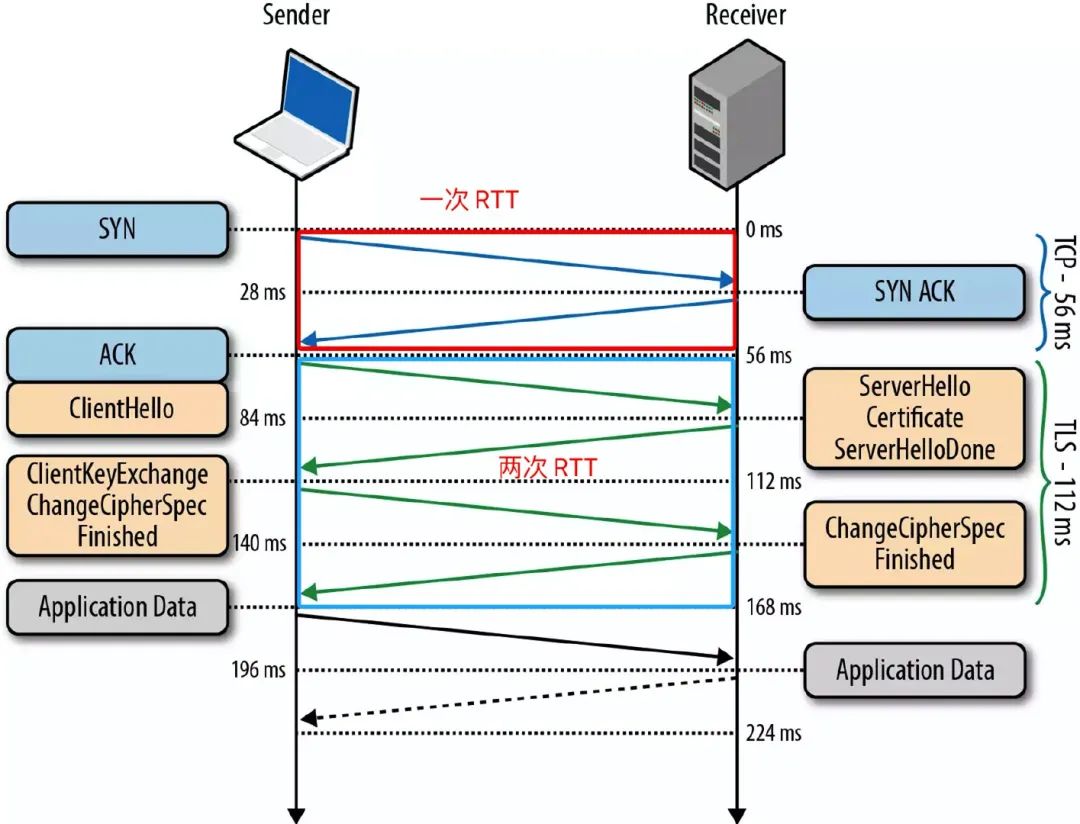

As we all know, HTTP uses TCP for message transmission at the transport layer, and HTTPS and HTTP/2.0 additionally use TLS for encryption. Together these cost three round trips before any request can be sent: one for the TCP three-way handshake and two for the TLS handshake, as shown in the figure.

For the many scenarios that use short-lived connections, this handshake delay has a large impact and cannot be eliminated. After all, RTT is the ultimate battleground between humans and efficiency.

In contrast, QUIC establishes connections faster. Because it uses UDP as the transport layer protocol, it avoids the delay of the TCP three-way handshake. Moreover, QUIC adopts the latest version of TLS, TLS 1.3, for encryption. Compared with TLS 1.1 and 1.2, TLS 1.3 needs fewer round trips: it supports a 1-RTT handshake and a 0-RTT mode in which the client can start sending application data without waiting for the TLS handshake to complete. The result is that connections are established quickly.
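The round-trip counts above can be turned into a rough delay estimate. The following is a minimal sketch, not a measurement: the table of round trips comes from the handshake description in this article (plus the well-known 1-RTT figure for TLS 1.3 over TCP), and the function name `handshake_delay_ms` is made up for illustration.

```python
# Round trips spent on handshakes before the first HTTP request can be sent.
# Illustrative figures only; real connections also pay for DNS, loss, etc.
HANDSHAKE_ROUND_TRIPS = {
    "TCP + TLS 1.2": 1 + 2,        # TCP handshake (1) + TLS handshake (2)
    "TCP + TLS 1.3": 1 + 1,        # TCP handshake (1) + TLS 1.3 handshake (1)
    "QUIC, first connection": 1,   # transport and TLS 1.3 handshake combined
    "QUIC, 0-RTT resumption": 0,   # application data sent in the first flight
}


def handshake_delay_ms(rtt_ms):
    """Return the handshake delay for each setup, given one RTT in milliseconds."""
    return {name: trips * rtt_ms for name, trips in HANDSHAKE_ROUND_TRIPS.items()}


if __name__ == "__main__":
    for name, delay in handshake_delay_ms(100).items():
        print(f"{name}: {delay} ms before the first request")
```

On a link with a 100 ms RTT, the difference between three round trips and one (or zero) is already several hundred milliseconds per new connection, which is why short-lived connections benefit the most.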

We also mentioned above that although HTTP/2.0 solves head-of-line blocking at the HTTP level, its connections are still based on TCP, so it cannot solve head-of-line blocking at the TCP level: one lost packet still stalls every request on the connection.

UDP itself has no concept of establishing a connection or of ordered delivery, and the streams used by QUIC are isolated from each other, so a loss on one stream does not block the processing of data on other streams. As a result, QUIC over UDP does not suffer from head-of-line blocking across streams.
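To illustrate the difference between one ordered byte stream and independently ordered streams, here is a small simulation. It is a simplified sketch under stated assumptions: each packet carries exactly one chunk of one stream, the stream names `"A"` and `"B"` and both function names are hypothetical, and retransmission is not modeled (we only look at what can be delivered before the lost packet is recovered).

```python
# Packets in the order they were sent: (stream_id, chunk_index_within_stream).
SENT = [("A", 0), ("B", 0), ("A", 1), ("B", 1), ("A", 2), ("B", 2)]


def deliver_single_ordered_stream(sent, lost_positions):
    """TCP-like: all chunks share one byte stream, so delivery stops at the first gap."""
    delivered = []
    for position, chunk in enumerate(sent):
        if position in lost_positions:
            break  # everything behind the gap waits for the retransmission
        delivered.append(chunk)
    return delivered


def deliver_per_stream(sent, lost_positions):
    """QUIC-like: ordering is per stream, so a gap only stalls the stream it belongs to."""
    delivered = []
    stalled_streams = set()
    for position, chunk in enumerate(sent):
        stream_id, _ = chunk
        if position in lost_positions:
            stalled_streams.add(stream_id)
            continue
        if stream_id not in stalled_streams:
            delivered.append(chunk)
    return delivered


if __name__ == "__main__":
    lost = {1}  # the packet carrying ("B", 0) is lost
    print("single stream:", deliver_single_ordered_stream(SENT, lost))
    print("per stream:   ", deliver_per_stream(SENT, lost))
```

With the second packet lost, the single ordered stream can hand over only the first chunk, while the per-stream model still delivers all of stream A and stalls only stream B.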

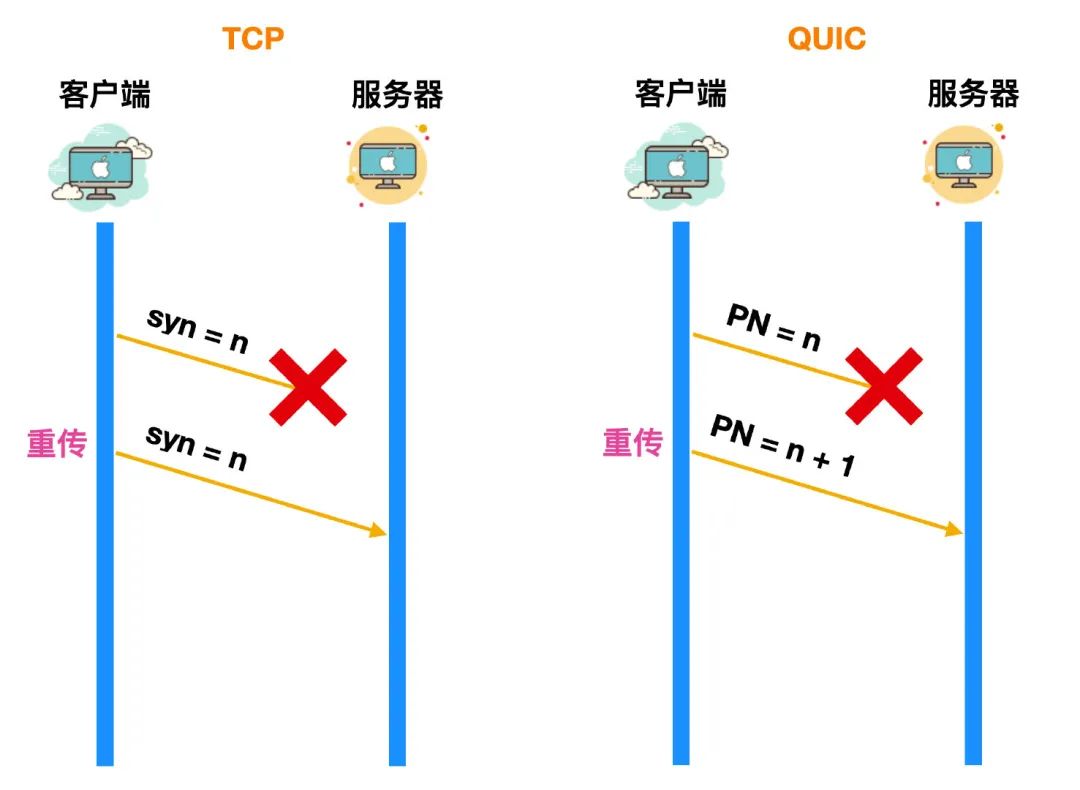

To ensure reliable delivery, TCP uses a sequence number plus acknowledgment number mechanism. Once a packet carrying a sequence number is sent to the server, the server is expected to respond within a certain period of time. If no response arrives in that time, the client retransmits the packet, and keeps doing so until the server receives it and responds.

So how does TCP determine its retransmission timeout?

TCP generally adopts an adaptive retransmission algorithm in which the timeout is adjusted dynamically according to the round-trip time (RTT). However, a retransmitted packet carries the same sequence number as the original, so when an acknowledgment arrives the client cannot tell which transmission it answers, and the RTT calculation becomes inaccurate.
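As a concrete example of an adaptive timeout, the sketch below follows the smoothed-RTT estimator described in RFC 6298 (SRTT, RTTVAR, and RTO = SRTT + max(G, 4 * RTTVAR)). The class name `RtoEstimator` and its parameters are illustrative; this is not code from any particular TCP stack, and real stacks add details such as timer backoff.

```python
class RtoEstimator:
    """Retransmission timeout estimator in the style of RFC 6298 (times in seconds)."""

    ALPHA = 1 / 8   # weight of a new sample in the smoothed RTT
    BETA = 1 / 4    # weight of a new sample in the RTT variation
    K = 4           # multiplier applied to the RTT variation

    def __init__(self, clock_granularity=0.001, min_rto=1.0):
        self.clock_granularity = clock_granularity
        self.min_rto = min_rto
        self.srtt = None      # smoothed RTT, unset until the first sample
        self.rttvar = None    # RTT variation
        self.rto = 1.0        # initial timeout before any measurement

    def on_rtt_sample(self, sample):
        """Feed one RTT measurement and return the updated timeout."""
        if self.srtt is None:
            # First measurement initializes both estimators.
            self.srtt = sample
            self.rttvar = sample / 2
        else:
            # RTTVAR is updated first, using the previous SRTT.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - sample)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * sample
        self.rto = max(
            self.min_rto,
            self.srtt + max(self.clock_granularity, self.K * self.rttvar),
        )
        return self.rto


if __name__ == "__main__":
    estimator = RtoEstimator(min_rto=0.0)  # floor disabled to make the effect visible
    for rtt in (0.100, 0.200, 0.120):
        print(f"sample={rtt:.3f}s -> rto={estimator.on_rtt_sample(rtt):.4f}s")
```

The estimator is only as good as the samples it is fed, which is why an ambiguous sample taken from a retransmitted packet is a problem.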

Although QUIC does not use TCP, it still guarantees reliability. The mechanism it uses is the Packet Number (PN), which can be thought of as a substitute for the TCP sequence number and which also increases monotonically. The difference is that the Packet Number is incremented by 1 for every packet sent, whether or not the server received the previous one, so a retransmission gets a new number, whereas a TCP retransmission reuses the original sequence number.

For example, if a packet with PN = 10 is delayed on its way to the server, the client retransmits the data in a packet with PN = 11. If, some time later, the client receives the acknowledgment for PN = 10, it knows exactly which packet is being acknowledged, and the RTT is the time the PN = 10 packet spent in the network, so the calculation is relatively accurate.
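The PN = 10 and PN = 11 example can be sketched in a few lines. This is a toy model under stated assumptions: times are integer milliseconds, and `tcp_like_rtt_candidates` and `PacketNumberSender` are hypothetical names, not part of any QUIC library.

```python
def tcp_like_rtt_candidates(send_times_ms, ack_time_ms):
    """With a shared sequence number, the ACK could answer any of the transmissions."""
    return [ack_time_ms - sent for sent in send_times_ms]


class PacketNumberSender:
    """Every transmission, including a retransmission, gets a fresh packet number."""

    def __init__(self, first_packet_number=0):
        self.next_packet_number = first_packet_number
        self.sent_at_ms = {}

    def send(self, now_ms):
        packet_number = self.next_packet_number
        self.next_packet_number += 1          # incremented whether or not an ACK arrives
        self.sent_at_ms[packet_number] = now_ms
        return packet_number

    def on_ack(self, packet_number, now_ms):
        """The ACK names one packet number, so the RTT sample is unambiguous."""
        return now_ms - self.sent_at_ms.pop(packet_number)


if __name__ == "__main__":
    # Original sent at t=0 ms, retransmitted at t=300 ms, ACK arrives at t=350 ms.
    print("TCP-like candidates:", tcp_like_rtt_candidates([0, 300], 350))

    sender = PacketNumberSender(first_packet_number=10)
    original = sender.send(now_ms=0)          # PN = 10
    retransmission = sender.send(now_ms=300)  # PN = 11 carries the same data
    print("RTT for PN", original, "=", sender.on_ack(original, now_ms=350), "ms")
```

In the TCP-like case the sender cannot know whether the true RTT is 350 ms or 50 ms; with packet numbers the acknowledgment itself says which transmission it refers to.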

Packet numbers make packet delivery reliable, but how are the reliability and ordering of the data itself guaranteed?

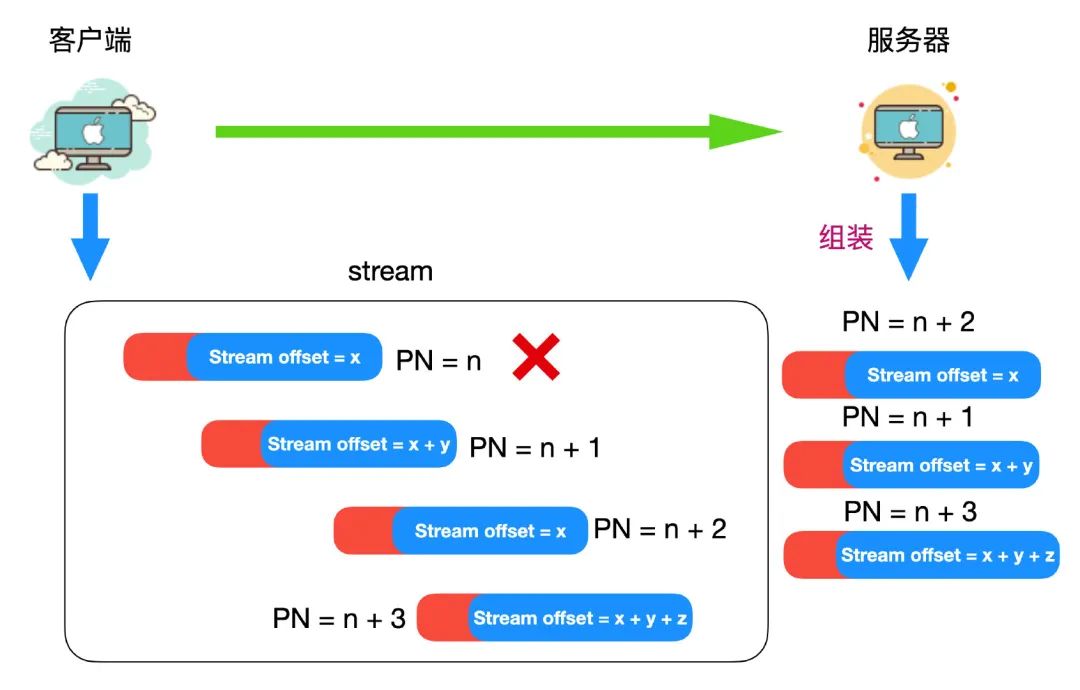

QUIC introduces the concept of a stream offset. The data of a stream is split across multiple packets, and each piece carries the stream offset at which it belongs. If the packet identified by PN is lost, its data is retransmitted in a later packet such as PN + 1, with the same stream offset. Once the pieces have reached the server, it reassembles them by stream offset, which guarantees both the reliability and the ordering of the data.
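A receiver that reassembles stream data by offset might look roughly like the following. It is a simplified sketch: the class name `StreamReassembler` is hypothetical, and it assumes that retransmitted pieces keep the same boundaries as the originals, so partially overlapping pieces are not handled.

```python
class StreamReassembler:
    """Buffers out-of-order pieces of one stream and releases them in offset order."""

    def __init__(self):
        self.pending = {}      # stream offset -> bytes not yet deliverable
        self.next_offset = 0   # offset of the next byte the application expects

    def on_stream_data(self, offset, data):
        """Accept one piece and return the bytes that are now contiguous."""
        if offset + len(data) <= self.next_offset:
            return b""  # duplicate of data that was already delivered
        self.pending.setdefault(offset, data)

        ready = bytearray()
        while self.next_offset in self.pending:
            chunk = self.pending.pop(self.next_offset)
            ready += chunk
            self.next_offset += len(chunk)
        return bytes(ready)


if __name__ == "__main__":
    stream = StreamReassembler()
    # The packet carrying offset 0 was lost, so offset 5 arrives first.
    print(stream.on_stream_data(5, b"world"))   # nothing deliverable yet
    # The lost data is retransmitted in a later packet with the same offset.
    print(stream.on_stream_data(0, b"hello"))   # both pieces released in order
```

The packet number of the carrier packet never appears in this code: ordering depends only on the stream offset, which is why the retransmission can travel under a new packet number.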

As we all know, TCP is implemented in the operating system kernel; applications can only use it and cannot modify the kernel. As mobile devices and more and more other devices connect to the Internet, performance has gradually become a very important metric. Although mobile networks are developing quickly, clients are updated very slowly: I still see many computers in many regions running Windows XP, even though it is many years old. Server-side systems do not depend on user upgrades, but because operating system upgrades involve updating underlying software and runtime libraries, they too are conservative and slow.

An important feature of QUIC is pluggability: it can be updated and upgraded dynamically. QUIC implements its congestion control algorithm at the application layer and needs no support from the operating system or kernel. When the congestion control algorithm is switched, the server only needs to reload it; there is no need to shut down or restart.
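Because the congestion controller is ordinary application code, swapping it can be as simple as replacing an object. The sketch below only illustrates that idea: the two controllers, the `Connection` class, and the window arithmetic (in packets) are all hypothetical and far simpler than a production algorithm.

```python
class AimdController:
    """Additive increase, multiplicative decrease (toy version, window in packets)."""

    def __init__(self, cwnd=10):
        self.cwnd = cwnd

    def on_ack(self):
        self.cwnd += 1

    def on_loss(self):
        self.cwnd = max(1, self.cwnd // 2)


class ResetOnLossController:
    """A more conservative toy policy: fall back to one packet after a loss."""

    def __init__(self, cwnd=10):
        self.cwnd = cwnd

    def on_ack(self):
        self.cwnd += 1

    def on_loss(self):
        self.cwnd = 1


class Connection:
    """Holds a congestion controller that can be replaced while the connection lives."""

    def __init__(self, controller):
        self.controller = controller

    def swap_controller(self, controller_class):
        # Carry the current window over so the connection keeps running.
        self.controller = controller_class(cwnd=self.controller.cwnd)


if __name__ == "__main__":
    connection = Connection(AimdController(cwnd=10))
    connection.controller.on_ack()
    connection.swap_controller(ResetOnLossController)  # no process restart needed
    connection.controller.on_loss()
    print("window after swap and loss:", connection.controller.cwnd)
```

Doing the same for TCP would mean changing kernel code or loading a kernel module, which is the contrast the paragraph above draws.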

We know that TCP flow control is implemented with sliding windows. If you are not familiar with sliding windows, you can read my earlier article, TCP Basics; sliding-window concepts are covered in the later part of that article.

QUIC also implements flow control. It likewise uses window updates (window_update) to tell the peer how many bytes it is able to accept; in RFC 9000 this role is played by the MAX_DATA and MAX_STREAM_DATA frames.
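The window-update idea can be sketched from the sender's point of view. This is a minimal model, assuming the limit is expressed as an absolute byte offset that the receiver raises over time; the class name `FlowControlledSender` and its methods are made up for illustration.

```python
class FlowControlledSender:
    """Sends no more bytes than the peer has advertised it can accept."""

    def __init__(self, initial_limit):
        self.limit = initial_limit   # highest total byte count the peer allows
        self.sent = 0                # total bytes sent so far

    def send(self, nbytes):
        """Send as much of nbytes as the window permits; return the amount sent."""
        allowed = min(nbytes, self.limit - self.sent)
        self.sent += allowed
        return allowed

    def on_window_update(self, new_limit):
        """The peer raised its limit; limits never move backwards."""
        self.limit = max(self.limit, new_limit)


if __name__ == "__main__":
    sender = FlowControlledSender(initial_limit=1000)
    print(sender.send(800))   # fits inside the window
    print(sender.send(500))   # only part of it fits
    print(sender.send(100))   # window exhausted, sender must wait
    sender.on_window_update(2000)
    print(sender.send(100))   # sending resumes after the update
```

The receiver controls the pace simply by deciding when to raise the limit, for example after the application has consumed buffered data.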

The TCP header is neither encrypted nor authenticated, so it can be tampered with in transit. In QUIC, by contrast, the packet header is authenticated and the payload is encrypted. Any modification to a QUIC packet can therefore be detected by the receiver, which ensures security.

In general, QUIC has the following advantages:

- It uses UDP, so no TCP three-way handshake is needed, and it also shortens the time TLS takes to establish a connection.

- It resolves the head-of-line blocking problem.

- It is dynamically pluggable: congestion control is implemented at the application layer and can be switched at any time.

- The packet header is authenticated and the packet body is encrypted, which ensures security.

- Connections migrate smoothly.

Smooth connection migration means that when your phone or other mobile device switches between networks, such as from 4G to WiFi, the connection is not dropped and re-established. Users do not even notice, and the switch happens seamlessly.
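One way to see why migration can be smooth: a TCP connection is identified by its address and port 4-tuple, while QUIC (per RFC 9000) identifies a connection by a connection ID carried in the packet. The sketch below contrasts the two lookups. The function names, the session label, the connection ID value, and the IP addresses (taken from documentation ranges) are all illustrative.

```python
def route_by_four_tuple(sessions, src_ip, src_port, dst_ip, dst_port):
    """TCP-like lookup: the client's address is part of the connection's identity."""
    return sessions.get((src_ip, src_port, dst_ip, dst_port))


def route_by_connection_id(sessions, connection_id):
    """QUIC-like lookup: the connection ID in the packet identifies the connection."""
    return sessions.get(connection_id)


if __name__ == "__main__":
    server = ("203.0.113.1", 443)
    cellular = ("198.51.100.7", 50000)   # client address on the mobile network
    wifi = ("192.0.2.44", 61000)         # client address after joining WiFi

    tcp_sessions = {cellular + server: "session-1"}
    quic_sessions = {b"\x1a\x2b\x3c\x4d": "session-1"}

    print("TCP before switch: ", route_by_four_tuple(tcp_sessions, *cellular, *server))
    print("TCP after switch:  ", route_by_four_tuple(tcp_sessions, *wifi, *server))
    print("QUIC after switch: ", route_by_connection_id(quic_sessions, b"\x1a\x2b\x3c\x4d"))
```

After the address change the 4-tuple lookup finds nothing, so a TCP client has to reconnect, while the connection ID lookup still finds the session. A real QUIC implementation also validates the new path before it fully trusts it, which this sketch leaves out.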

The QUIC protocol is specified in RFC 9000.