How to reliably send HTTP requests when the user leaves the page

On several occasions, I've needed to send an HTTP request with some data to log when a user does something like navigating to a different page or submitting a form. Consider this contrived example of sending some information to an external service when a link is clicked:

<a href="/some-other-page" id="link">Go to Page</a>

<script>
document.getElementById('link').addEventListener('click', (e) => {
  fetch("/log", {
    method: "POST",
    headers: {
      "Content-Type": "application/json"
    },
    body: JSON.stringify({
      some: "data"
    })
  });
});
</script>

There's nothing terribly complicated going on here. The link is allowed to behave as it normally would (I'm not using e.preventDefault()), but before that behavior occurs, a POST request is fired on click. There's no need to wait for any sort of response. I just want it to be sent to whatever service I'm hitting.

At first glance, you might expect the request to be dispatched synchronously, after which we'd continue navigating away from the page while some other server successfully handles it. But as it turns out, that's not always what happens.

Browsers don't guarantee that open HTTP requests will be preserved

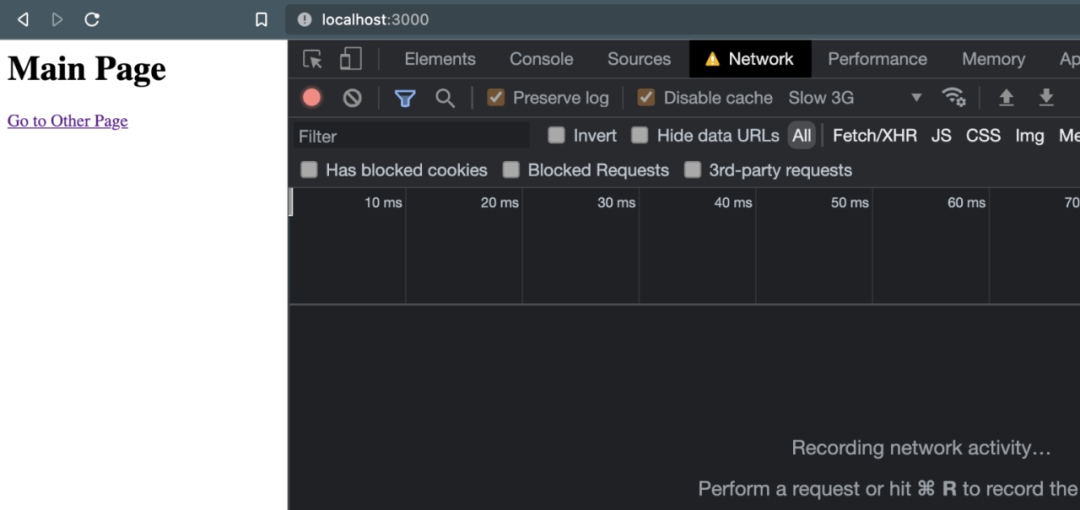

When terminating a page occurs in the browser, there is no guarantee that an in-process HTTP request will succeed (see more about "termination" and other states of the page lifecycle). The reliability of these requests may depend on several aspects - network connectivity, application performance, and even the configuration of the external service itself. As a result, sending data at these moments can be unreliable, which can create a potentially significant problem if you rely on these logs to make data-sensitive business decisions. To help illustrate this unreliability, I set up a small Express application with pages using the code included above. When the link is clicked, the browser navigates to /other, but before that, a POST request is fired. While all was happening, I had my browser's network tab open, and I was on a "slow 3G" connection speed. Once the page loads and I've cleared the logs, things look quiet:

1. webp

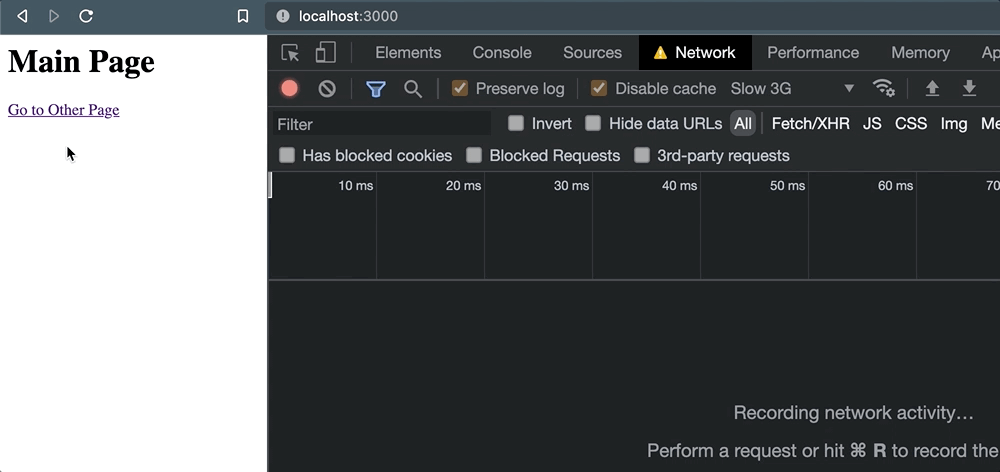

But as soon as the link is clicked, things go wrong and when the navigation happens, the request is cancelled.

2. webp

That leaves us with little confidence that the external service was ever able to process the request. Just to verify this behavior, it also occurs when we navigate programmatically with window.location:

document.getElementById('link').addEventListener('click', (e) => {
  // Added: cancel the link's default navigation...
  e.preventDefault();

  // Request is queued, but cancelled as soon as navigation occurs.
  fetch("/log", {
    method: "POST",
    headers: {
      "Content-Type": "application/json"
    },
    body: JSON.stringify({
      some: 'data'
    }),
  });

  // Added: ...and navigate programmatically instead.
  window.location = e.target.href;
});

Regardless of how or when navigation occurs, once the active page is terminated, any unfinished requests are at risk of being abandoned.

But why was it cancelled?

The root of the problem is that, by default, HTTP requests made from JavaScript (via fetch() or XMLHttpRequest) are asynchronous and non-blocking. Once a request is queued, the actual work of the request is handed off to a browser-level API behind the scenes. As it relates to performance, that's a good thing: you don't want requests tying up the main thread. But it also means that when a page enters the "terminated" state, its pending requests are at risk of being abandoned, with no guarantee that any of that behind-the-scenes work is ever completed. Here's how Google summarizes that specific lifecycle state:

Once the page starts to be unloaded by the browser and cleared from memory, the page is in a terminated state. No new tasks can be started in this state, and ongoing tasks may be killed if they run for too long.

In short, browsers are designed with the assumption that once a page is dismissed, there's no need to continue processing any background work it queued up.

So, what are our options?

Probably the most obvious way to avoid this problem is to delay the user action until the request returns a response. In the past, this was done the wrong way: by using the synchronous flag supported by XMLHttpRequest. Using it completely blocks the main thread and causes a host of performance issues (I've written about this a few times in the past), so the idea shouldn't even be entertained. In fact, it's on its way out of the platform: Chrome 80 and later already disallow synchronous XHR during page dismissal. Instead, if you're going to take this type of approach, it's better to wait for the Promise returned by fetch() to resolve once the response comes back. After that, you can safely perform the behavior. Using our earlier snippet, that might look something like this:

document.getElementById('link').addEventListener('click', async (e) => {
  e.preventDefault();

  try {
    // Wait for the response to come back...
    await fetch("/log", {
      method: "POST",
      headers: {
        "Content-Type": "application/json"
      },
      body: JSON.stringify({
        some: 'data'
      }),
    });
  } finally {
    // ...and THEN navigate away (even if the request failed).
    window.location = e.target.href;
  }
});

This gets the job done, but there are some non-trivial drawbacks.

First, it compromises the user experience by delaying the desired behavior. Collecting analytics data certainly benefits the business (and hopefully future users), but it's less than ideal to make your present users pay the cost of realizing those benefits. Not to mention, since the service is an external dependency, any latency or other performance issues within it are surfaced to the user. If a slow or unresponsive analytics service prevents a customer from completing a high-value action, everyone loses.

Second, this approach isn't as reliable as it initially sounds, since some termination behaviors can't be programmatically delayed. For example, e.preventDefault() can't stop someone from closing a browser tab. So, at best, it covers data collection for some user actions, but not enough to trust it comprehensively.
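If you do take the waiting route anyway, one way to soften the first drawback is to cap how long the user can be held up. The sketch below is not from the original example: withTimeout() is a hypothetical helper that races the logging request against a timer, and the 300 ms budget is an arbitrary assumption. It does nothing for the second drawback (a closed tab still can't be delayed), and if the timer wins, the request remains exposed to cancellation.

```javascript
// Hypothetical helper (not a browser API): resolves with "done", "failed",
// or "timeout", whichever happens first. It never rejects, so the caller
// can always go on to navigate.
function withTimeout(promise, maxWaitMs) {
  let timer;
  const timeout = new Promise((resolve) => {
    timer = setTimeout(() => resolve("timeout"), maxWaitMs);
  });
  const settled = promise.then(
    () => "done",
    () => "failed"
  );
  // Clear the timer either way so it doesn't linger after the race.
  return Promise.race([settled, timeout]).finally(() => clearTimeout(timer));
}

// Browser-only wiring, mirroring the earlier click handler.
// The 300 ms budget is an assumption; pick what your users can tolerate.
if (typeof document !== "undefined") {
  document.getElementById("link").addEventListener("click", async (e) => {
    e.preventDefault();
    await withTimeout(
      fetch("/log", {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ some: "data" }),
      }),
      300
    );
    // Navigate whether the request finished, failed, or ran out of time.
    window.location = e.target.href;
  });
}
```

The trade-off is explicit: a shorter budget protects the user experience, a longer one gives the log request more chances to land.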

Instruct the browser to preserve outstanding requests

Thankfully, the vast majority of browsers have built-in options for preserving outstanding HTTP requests, and they don't require compromising the user experience.

Use Fetch's keepalive flag

If the keepalive flag is set to true when using fetch(), the request will remain open even if the page that initiated it is terminated. Applied to our original example, it looks like this:

<a href="/some-other-page" id="link">Go to Page</a>

<script>
document.getElementById('link').addEventListener('click', (e) => {
  fetch("/log", {
    method: "POST",
    headers: {
      "Content-Type": "application/json"
    },
    body: JSON.stringify({
      some: "data"
    }),
    // Keep the request alive even if the page is terminated.
    keepalive: true
  });
});
</script>

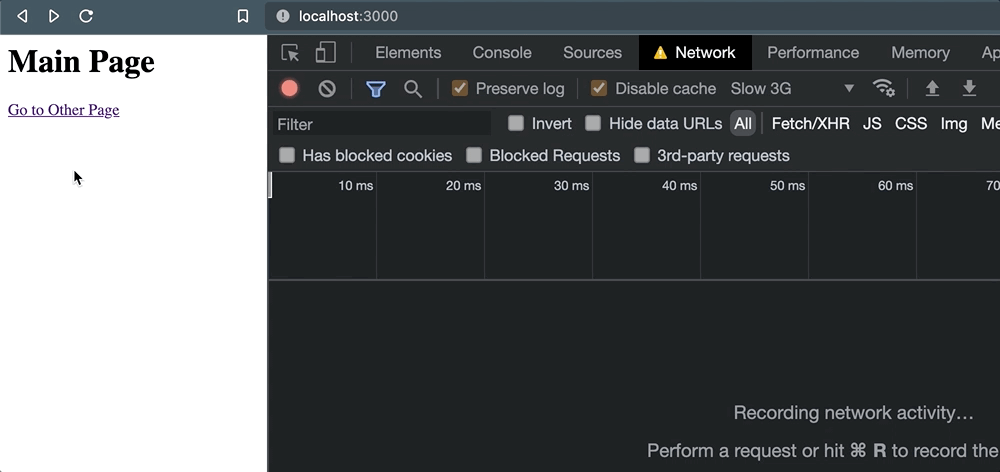

When the link is clicked and the page is navigated, no request cancellation occurs:

3. webp

Instead, we get an (unknown) status, for the simple reason that the active page never waited around to receive any sort of response. A one-liner like this is an easy fix, especially since it's part of such a commonly used browser API. However, if you're looking for a more focused option with a simpler interface, there's another approach with virtually the same browser support.
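One constraint applies to keepalive regardless of which approach you end up choosing: the Fetch standard rejects a keepalive request with a network error when its body, together with the bodies of other keepalive requests still in flight from the same page, exceeds 64 KiB. Below is a minimal sketch, assuming a hypothetical logWithKeepalive() helper (not a browser API) that measures the JSON payload first and, like sendBeacon(), reports whether it attempted the send. The fetchFn parameter exists only so the function can be exercised without a browser.

```javascript
// Per the Fetch standard, a keepalive request fails with a network error if
// its body plus the bodies of other in-flight keepalive requests from the
// same page would exceed 64 KiB.
const KEEPALIVE_BODY_LIMIT = 64 * 1024;

// Hypothetical helper (not a browser API). Returns true if the request was
// handed to fetch(), or false if this payload alone is already too large.
function logWithKeepalive(url, data, fetchFn = globalThis.fetch) {
  const body = JSON.stringify(data);
  // Measure UTF-8 bytes rather than string length; bytes are what count.
  const bytes = new TextEncoder().encode(body).byteLength;
  if (bytes > KEEPALIVE_BODY_LIMIT) {
    return false;
  }
  fetchFn(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body,
    keepalive: true,
  }).catch(() => {
    // No one is waiting on the response; ignore failures instead of
    // surfacing them as unhandled rejections.
  });
  return true;
}
```

Because the 64 KiB budget is shared, passing this check doesn't guarantee the browser will accept the request; it only rules out payloads that could never fit.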

Use Navigator.sendBeacon()

The Navigator.sendBeacon() function is designed to send a one-way request (beacon). A basic implementation would look like this, sending a POST with stringified JSON and a "text/plain" Content-Type:

navigator.sendBeacon('/log', JSON.stringify({
  some: "data"
}));

But this API doesn't let you send custom headers. So, in order to send data as "application/json", we need to make a small tweak and wrap the payload in a Blob:

<a href="/some-other-page" id="link">Go to Page</a>

<script>
document.getElementById('link').addEventListener('click', (e) => {
  // A Blob lets us set the Content-Type that sendBeacon() will send.
  const blob = new Blob([JSON.stringify({ some: "data" })], { type: 'application/json; charset=UTF-8' });

  navigator.sendBeacon('/log', blob);
});
</script>
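Stringified JSON and a Blob aren't the only payloads sendBeacon() accepts; it also takes URLSearchParams and FormData, among others. That can matter for cross-origin logging endpoints: a Blob typed application/json isn't a CORS-safelisted Content-Type, so the browser has to run a CORS preflight, whereas URLSearchParams goes out as application/x-www-form-urlencoded and doesn't need one. The sketch below assumes a flat data object and a hypothetical beaconAsForm() helper; the nav parameter is only a seam for testing, and your server would need to parse form-encoded bodies instead of JSON.

```javascript
// Hypothetical helper: send flat key/value data as a form-encoded beacon.
// URLSearchParams bodies are sent as application/x-www-form-urlencoded, a
// CORS-safelisted type, so a cross-origin beacon needs no preflight.
function beaconAsForm(url, data, nav = globalThis.navigator) {
  if (!nav || typeof nav.sendBeacon !== "function") {
    return false;
  }
  // Values are converted to strings, so nested objects won't survive.
  return nav.sendBeacon(url, new URLSearchParams(data));
}
```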

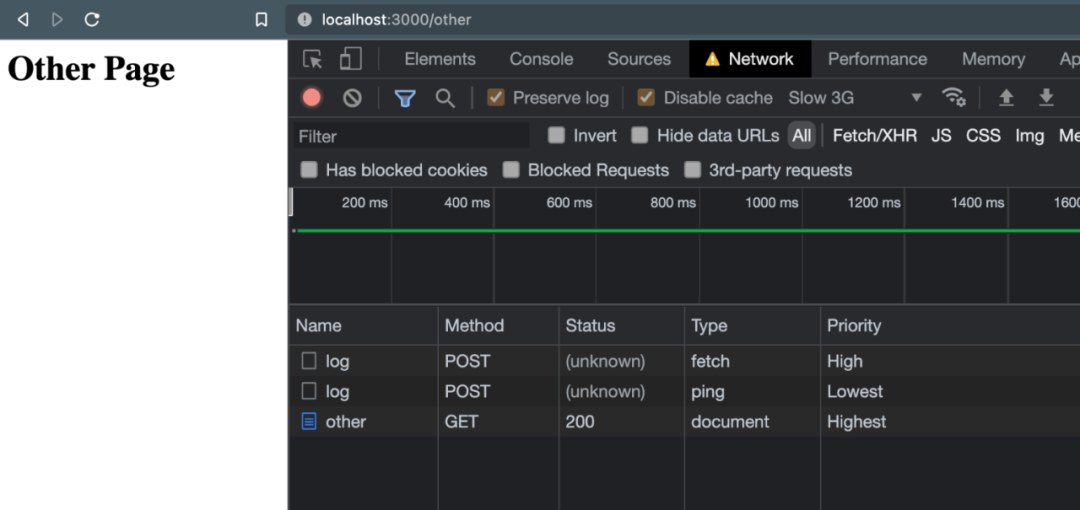

In the end, we got the same result - requests that were allowed to complete even after page navigation. But there are a few things that might give it an advantage over fetch(): the beacon is sent with low priority. To demonstrate, when using fetch() and sendBeacon() with keepalive together, the Network tab shows the following:

4. webp

By default, fetch() gets a "high" priority, while the beacon (listed as the "ping" type above) gets the "lowest" priority. That's a good thing for requests that aren't critical to the functionality of the page. Taken directly from the Beacon specification:

The specification defines an interface that […] minimizes resource contention with other time-critical operations, while ensuring that such requests are still processed and delivered to their destination.

In other words, sendBeacon() ensures that its requests don't get in the way of those that are really important to your application and user experience.
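Low priority helps, but sendBeacon() still needs something to trigger it, and as noted earlier, nothing can delay a user closing the tab. A commonly recommended pattern (MDN's sendBeacon() documentation suggests it) is to send any remaining data when the visibilitychange event reports that the page has become hidden, since that event fires more reliably than unload, especially on mobile. The sketch below assumes a hypothetical in-memory queue (pendingEvents, trackEvent(), and flushEvents() are illustrative names, not part of any API) and reuses the /log endpoint from the examples. It also checks the boolean that sendBeacon() returns: false means the browser declined to queue the data, so the events are kept rather than discarded.

```javascript
// Hypothetical in-memory queue of analytics events (illustrative names only).
const pendingEvents = [];

function trackEvent(name, details = {}) {
  pendingEvents.push({ name, details, at: Date.now() });
}

// Sends everything queued so far as one JSON beacon to /log.
// Returns true only if the browser accepted the beacon.
function flushEvents(nav = globalThis.navigator) {
  if (pendingEvents.length === 0 || !nav || typeof nav.sendBeacon !== "function") {
    return false;
  }
  const blob = new Blob([JSON.stringify(pendingEvents)], {
    type: "application/json; charset=UTF-8",
  });
  // sendBeacon() returns false when the browser declines to queue the data
  // (for example, if it's too large); keep the events in that case.
  const queued = nav.sendBeacon("/log", blob);
  if (queued) {
    pendingEvents.length = 0;
  }
  return queued;
}

// Browser-only wiring. In modern browsers, "hidden" is also reported when
// the tab is closed, which e.preventDefault() can't intercept.
if (typeof document !== "undefined") {
  document.addEventListener("visibilitychange", () => {
    if (document.visibilityState === "hidden") {
      flushEvents();
    }
  });
}
```

Flushing on every hide, rather than only on the final one, means a user who switches tabs and comes back simply starts a new batch.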

Honorable mention for the ping attribute

It's worth mentioning that a growing number of browsers support the ping attribute. When it's attached to a link, clicking that link fires off a small POST request:

<a href="http://localhost:3000/other" ping="http://localhost:3000/log">
  Go to Other Page
</a>

The request's headers will contain the page on which the link was clicked (ping-from), as well as the href value of that link (ping-to):

headers: {
  'ping-from': 'http://localhost:3000/',
  'ping-to': 'http://localhost:3000/other',
  'content-type': 'text/ping'
  // ...other headers
},

It's technically similar to sending a beacon, but with some notable limitations:

- It's strictly limited to links, which makes it a non-starter if you need to track data associated with other interactions, such as button clicks or form submissions.

- Browser support is good, but not great. As of this writing, Firefox specifically doesn't have it enabled by default.

- You can't send any custom data with the request. As mentioned, you'll only get a couple of ping-* headers at most, along with whatever other headers the browser sends by default.

All things considered, ping is a great tool if you're fine with sending simple requests and don't want to write any custom JavaScript. But if you need to send anything more substantial, it may not be the best fit.

So, which one should I choose?

There are definitely tradeoffs to using fetch() with keepalive versus sendBeacon() for your last-second requests. To help discern which is most appropriate in different circumstances, consider the following:

You might use fetch() + keepalive if:

- You need to easily pass custom headers with the request.

- You want to make a GET request to the service, not a POST.

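- You're supporting an older browser (like IE) that lacks sendBeacon() and you have a fetch polyfill loaded, bearing in mind that a polyfill built on XMLHttpRequest can't actually honor keepalive.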

But sendBeacon() may be a better choice in the following cases:

- You're making a simple service request that doesn't require much customization.

- You prefer a cleaner, more elegant API.

- You want to make sure that your request doesn't compete with other high-priority requests sent in your application.
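The checklist above can be condensed into a small dispatcher. This is a sketch under stated assumptions rather than a recommended library: sendOnExit() and its options are hypothetical, flat data objects are assumed for GET query strings, and the env parameter (defaulting to the global object) exists only so the logic can be tested outside a browser. It prefers a beacon for plain POSTs and falls back to fetch() with keepalive when custom headers or GET are needed, or when sendBeacon() is missing or returns false.

```javascript
// Hypothetical dispatcher encoding the checklist above (names are illustrative).
// `env` defaults to the global object and is only a seam for testing.
function sendOnExit(url, data, { method = "POST", headers = {} } = {}, env = globalThis) {
  const verb = method.toUpperCase();
  const hasCustomHeaders = Object.keys(headers).length > 0;
  const nav = env.navigator;

  // sendBeacon() only sends POSTs and can't set custom headers, so try it
  // only for plain POSTs in browsers that support it.
  if (verb === "POST" && !hasCustomHeaders && nav && typeof nav.sendBeacon === "function") {
    const blob = new Blob([JSON.stringify(data)], { type: "application/json" });
    if (nav.sendBeacon(url, blob)) {
      return "beacon";
    }
    // false means the browser declined to queue it; fall through to fetch().
  }

  // fetch() + keepalive covers custom headers and GET requests. A GET can't
  // carry a body, so (assuming flat data) it goes in the query string instead.
  const isGet = verb === "GET";
  const target = isGet
    ? `${url}${url.includes("?") ? "&" : "?"}${new URLSearchParams(data)}`
    : url;
  env
    .fetch(target, {
      method: verb,
      headers: isGet ? headers : { "Content-Type": "application/json", ...headers },
      body: isGet ? undefined : JSON.stringify(data),
      keepalive: true,
    })
    .catch(() => {});
  return "fetch";
}
```

Returning the transport name is only there to make the choice observable; in production you might drop it or feed it into your own debugging.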

Original: https://css-tricks.com/send-an-http-request-on-page-exit/ Author: Alex MacArthur